Home >Technology peripherals >AI >Meitu AI partial redrawing technology revealed! Change it however you want! Partial redrawing of beautiful pictures allows you to do whatever you want

Meitu AI partial redrawing technology revealed! Change it however you want! Partial redrawing of beautiful pictures allows you to do whatever you want

- WBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBforward

- 2024-03-02 09:55:561646browse

Recently, the "AI Expansion" function has caused a sensation with its sudden enlargement effect. Its funny and interesting auto-fill results have frequently become popular and set off a craze on the Internet. Users actively tried this feature, and its huge 180-degree transformation also made people marvel, and the popularity of the topic continued to rise.

While arousing laughter and enthusiasm, it also means that people are constantly paying attention to whether AI can really help them solve real-world problems and improve user experience. With the rapid development of AIGC technology, AI application scenarios are accelerating to be implemented, which indicates that we will usher in a new productivity revolution.

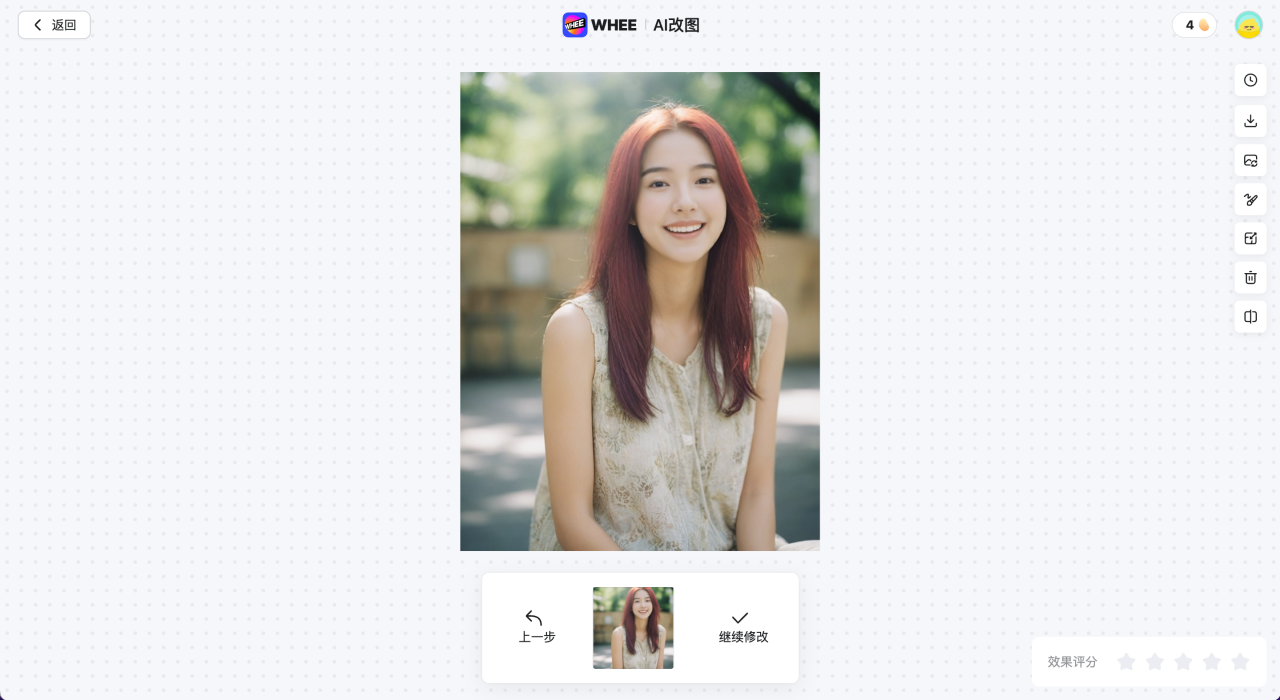

Recently, Meitu's WHEE and other products have launched AI image expansion and AI image modification functions. With simple prompt input, users can modify images, remove screen elements, and expand the screen at will. With convenience The operation and stunning effects greatly reduce the threshold for using the tool and bring users an efficient and high-quality image creation experience.

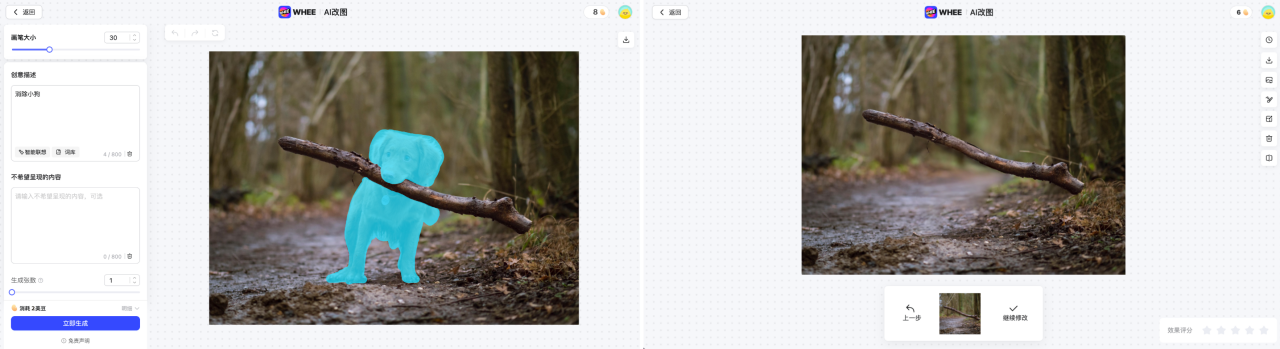

MiracleVision (Qixiang Intelligence) eliminates the result

MiracleVision (Qixiang Intelligence) replaces the effect Before

MiracleVision (Qixiang Intelligence) replacement effect After

MiracleVision (Qixiang Intelligence) AI image modification effect

Powerful model capabilities, allowing you to edit images as you wish

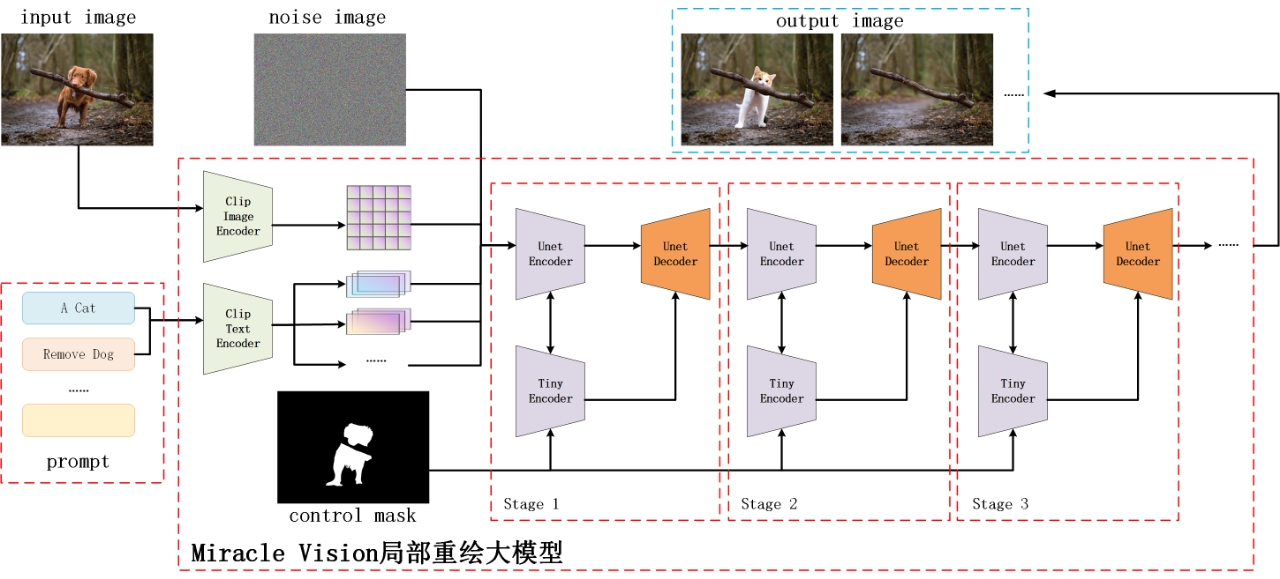

Meitu AI partial redrawing model builds a complete inpaint & outpaint model framework based on diffusion model (Diffision Model) technology, redrawing internal areas and foregrounds Tasks such as target elimination and external area expansion are unified into one solution, and special optimization designs are made for some specific effect issues.

The MiracleVision model is a Vincentian graph model. Although it can be adapted to the inpaint task by transforming the first convolutional layer and fine-tuning the entire unet, this requires modifying the original weights of unet. , which may lead to a decline in model performance when the amount of training data is insufficient.

Therefore, in order to make full use of MiracleVision's existing generation capabilities, the team does not directly fine-tune MiracleVision's unet model in the partial redraw model, but uses controlnet to add a The input branch of the mask is controlled.

At the same time, in order to save training costs and speed up inference, the compressed controlnet module is used for training to reduce the amount of calculation as much as possible. During the training process, the parameters of the unet model will be fixed, and only the controlnet module will be updated, eventually allowing the entire model to gain the ability to inpaint.

Meitu AI partially redraws the model architecture diagram

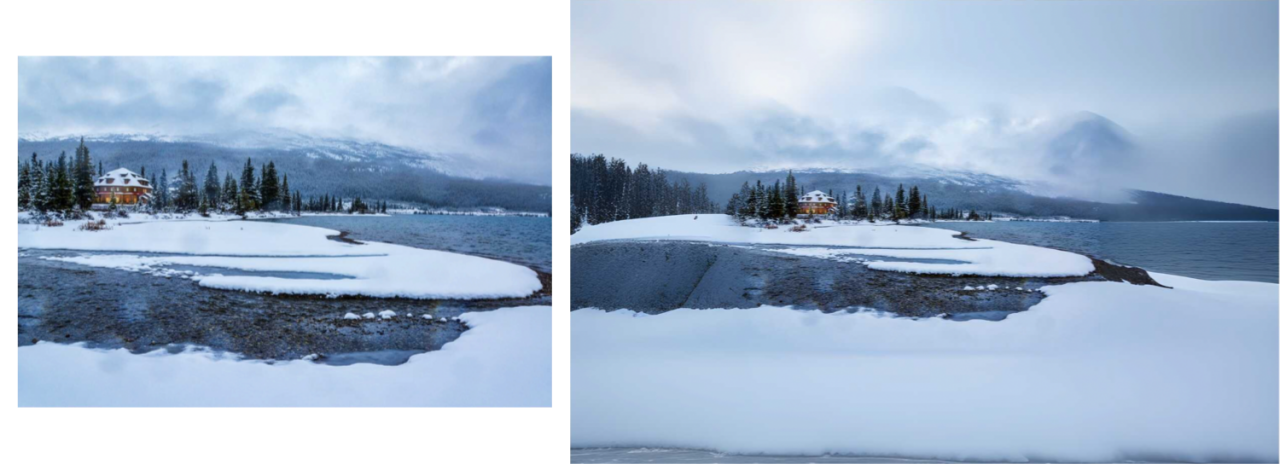

The outpaint task is the reverse operation of the crop task. The crop task is to crop the original image along the image boundary, retaining only the required part, which is a subtraction operation of the image content; while the outpiant task is to expand outward along the image boundary, using the generation ability of the model to create out of thin air Extracting content that does not originally exist is an addition operation of image content.

Essentially, the outpaint task can also be regarded as a special inpaint task, except that the mask area is located on the periphery of the image.

Freely control the generation and elimination of objects through a variety of training strategies

General diffusion models are better at replacing rather than eliminating when performing inpaint tasks. When a certain target needs to be eliminated, the model can easily draw some new foreground targets in the mask area that do not originally exist, especially when the mask area This phenomenon is especially obvious when the area is relatively large, even if these targets do not appear in the prompt. The reasons are mainly the following three aspects:

1. The prompt of the training set generally only describes what is in the image, but not what is not in the image, so the trained model is allowed to be based on It's easy to tell a prompt to generate a target, but it's hard to stop it from generating a target. Even with the Classifier-Free Guidance strategy, the generation of this target can be suppressed by adding unwanted objects to negative words, but it is never possible to write all possible targets into negative words, so the model will still tend to Generate some unexpected targets;

2. From the distribution of training data, since most of the images in the large-scale image training set are composed of foreground and background, pure background images account for is relatively small, which means that the diffusion model has learned a potential rule during training, that is, there is a high probability of a target foreground in an image (even if it is not mentioned in the prompt), which also causes the model to When performing the inpaint task, it is more likely to generate something in the mask area, so that the output image is closer to the distribution during training;

3. The shape of the mask area to be filled sometimes also contains certain semantic information. , for example, without other guidance, the model will be more inclined to fill a new cat in a mask area with the shape of a cat, causing the elimination task to fail.

In order to make MiracleVision capable of both target generation and target elimination, the team adopted a multi-task training strategy:

1. In the training phase, when the mask area falls on the texture When there are fewer pure background areas, add a specific prompt keyword as a trigger guide word, and during the model inference stage, add this keyword as a forward guide word to the prompt embedding to prompt the model to generate more background areas. .

2. Since pure background images account for a relatively small proportion in the entire training set, in order to increase their contribution to training, in each training batch, a certain proportion of background images are manually sampled and added to the training, so that the background images The proportion in the training samples remains generally stable.

3. In order to reduce the semantic dependence of the model on the mask shape, various masks of different shapes will be randomly generated during the training phase to increase the diversity of mask shapes.

High-precision texture generation, more natural fusion

Since the high-definition texture data in the training set only accounts for a small part of the total training data, when performing the inpaint task, Usually, results with very rich textures are not generated, which results in unnatural fusion and a sense of boundary in scenes with rich original textures.

In order to solve this problem, the team used the self-developed texture detail model as a guide model to assist MiracleVision in improving the generation quality and suppressing over-fitting, so that the generated area is between the generated area and other areas of the original image. can fit together better.

Original image v.s without added texture details v.sMiracleVision enlarged image effect

Faster, better effect, more efficient interaction!

Diffusion model solutions usually require a multi-step reverse diffusion process during inference, resulting in the processing of a single image taking too long. In order to optimize the user experience while maintaining generation quality, the Meitu Imaging Research Institute (MT Lab) team created a special tuning solution for AI partial redrawing technology, ultimately achieving the best balance between performance and effect.

First of all, a large number of matrix calculations in the pre- and post-processing and inference processes of MiracleVision are transplanted to the GPU for parallel computing as much as possible, thus effectively speeding up the calculation and reducing the load on the CPU. At the same time, during the process of assembling pictures, we fuse the layers as much as possible, use FlashAttention to reduce video memory usage, improve inference performance, and tune the Kernel implementation to maximize the use of GPU computing power for different NVIDIA graphics cards.

In addition, relying on the self-developed model parameter quantification method, MiracleVision is quantized to 8bit without obvious loss of accuracy. Since different GPU graphics cards have different support for 8-bit quantization, we innovatively adopt a mixed-precision strategy to adaptively select the optimal operator under different server resource environments to achieve the optimal solution for overall acceleration.

For user-input images with higher resolutions, it is difficult to perform inference directly at the original resolution due to limitations in server resources and time costs. In this regard, the team first compressed the image resolution to a suitable size, then performed inference based on MiracleVision, and then used a super-resolution algorithm to restore the image to the original resolution, and then performed image fusion with the original image, thereby maintaining both It generates clear images and saves memory usage and execution time during the inference process.

Meitu cooperates in depth with Samsung to create a new mobile image editing experience using AI

On January 25, Samsung Electronics held a new product launch conference for the Galaxy S24 series in China. Meitu has deepened its cooperation with Samsung to create a new AI image editing experience for Samsung's new Galaxy S24 series mobile phone albums. The generative editing independently developed by Meitu Imaging Research Institute (MT Lab) - AI image expansion and AI image modification functions are also It has been officially launched to help open up a new space for mobile image editing and creation.

With the AI image editing function, users can easily move, delete or resize the image by simply pressing and holding the image they want to edit. In addition, when the horizontal line of the picture is not vertical, the AI image expansion function can intelligently fill in the missing areas of the photo and correct the composition of the picture after the user adjusts the angle.

Based on the AI function brought by MiracleVision, Meitu not only helps users easily achieve professional-level editing effects on mobile phones and create more personalized photo works, but will also continue to promote and enhance the entire AI image processing capabilities in the mobile phone industry.

Relying on the powerful technical capabilities of Meitu Imaging Research Institute (MT Lab), MiracleVision has been iterated to version 4.0 in less than half a year. In the future, Meitu will continue to strive to improve user experience in e-commerce, advertising, gaming and other industries, and help practitioners in different scenarios improve workflow efficiency.

The above is the detailed content of Meitu AI partial redrawing technology revealed! Change it however you want! Partial redrawing of beautiful pictures allows you to do whatever you want. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- How to use redraw and reflow

- [Trend Weekly] Global Artificial Intelligence Industry Development Trend: OpenAI submitted a 'GPT-5' trademark application to the U.S. Patent Office

- The scale of my country's computing industry reaches 2.6 trillion yuan, with more than 20.91 million general-purpose servers and 820,000 AI servers shipped in the past six years.

- Improving Web Page Performance: Tips to Reduce Redraws and Reflows

- Key Strategies to Reduce HTML Reflow and Redraw: Front-End Performance Optimization