In machine learning, model calibration is the process of adjusting the probabilities or confidence scores a model outputs so that they agree with actual observations. In classification tasks, a model typically outputs the probability or confidence that a sample belongs to a given class. After calibration, these values should accurately reflect how likely the sample really is to belong to that class. For example, among all samples to which a well-calibrated model assigns a probability of 0.8, roughly 80% should truly belong to the class. This improves the reliability of the model's predictions.

Why is model calibration needed?

Model calibration is very important in practical applications for the following reasons:

1. Improve the reliability of model predictions. Calibration ensures that the output probability or confidence matches the actual probability of the predicted outcome.

2. Ensure consistent model output. Samples of the same category should receive similar probabilities or confidence levels, so that the model's predictions are stable. If the probabilities or confidence levels are inconsistent, the model's predictions become unreliable. Consistency can be improved during training by adjusting the model's parameters or improving the training data, or after training by applying one of the calibration methods described below.

3. Avoid overconfidence or excessive caution. An uncalibrated model may be overconfident or overly cautious: for some samples, it systematically overestimates or underestimates the probability that they belong to a certain class. This produces inaccurate probability estimates, which in turn mislead any decision that relies on those probabilities directly, such as choosing a classification threshold.
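Over- and underconfidence can be made concrete by grouping predictions into confidence bins and comparing each bin's average predicted probability with the fraction of samples in it that are actually positive. The sketch below is not from the original article. It assumes a binary task, uses the hypothetical helper names `reliability_bins` and `expected_calibration_error`, and computes a simple positive-class variant of the expected calibration error (ECE).

```python
from typing import List, Tuple


def reliability_bins(probs: List[float], labels: List[int],
                     n_bins: int = 5) -> List[Tuple[float, float, int]]:
    """Group predictions into equal-width bins over [0, 1].

    Returns one (mean_predicted_prob, observed_positive_rate, count)
    tuple for every non-empty bin.
    """
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # min() keeps p == 1.0 inside the last bin instead of overflowing
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))
    result = []
    for b in bins:
        if b:
            mean_prob = sum(p for p, _ in b) / len(b)
            pos_rate = sum(y for _, y in b) / len(b)
            result.append((mean_prob, pos_rate, len(b)))
    return result


def expected_calibration_error(probs: List[float], labels: List[int],
                               n_bins: int = 5) -> float:
    """Count-weighted average gap between predicted and observed rates."""
    n = len(probs)
    return sum(count / n * abs(mean_prob - pos_rate)
               for mean_prob, pos_rate, count
               in reliability_bins(probs, labels, n_bins))
```

For example, ten predictions of 0.9 in which only six samples are actually positive give an ECE of about 0.3, which signals overconfidence. A perfectly calibrated model would score close to 0.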

Common model calibration methods

Common model calibration methods include the following:

1. Linear calibration: Linear calibration is a simple and effective method that calibrates the model's output by fitting a logistic regression model, an approach commonly known as Platt scaling. Specifically, it takes the model's raw output score f (before any sigmoid is applied) and, on a held-out labeled set, fits a one-feature logistic regression σ(A·f + B) that maps the score to the observed labels. The fitted sigmoid output is the calibrated probability. The advantage of linear calibration is that it is simple and easy to implement. Its disadvantages are that it requires labeled data held out from training to fit the logistic regression, and that its sigmoid-shaped mapping may not capture more complex miscalibration.
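The logistic-regression step can be sketched without any libraries as follows. This is a minimal sketch, not the article's own code. It assumes the inputs are raw scores (logits) from a binary classifier, and it uses plain gradient descent in place of the optimizers a real library would use. The names `fit_platt` and `platt_calibrate`, and the `lr` and `steps` values, are hypothetical choices for illustration. In practice, scikit-learn's `CalibratedClassifierCV` with `method="sigmoid"` provides this kind of calibration.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_platt(scores: List[float], labels: List[int],
              lr: float = 0.1, steps: int = 5000) -> Tuple[float, float]:
    """Fit p = sigmoid(a * score + b) by gradient descent on mean log loss."""
    a, b = 1.0, 0.0  # start from the uncalibrated mapping sigmoid(score)
    n = len(scores)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            # derivative of the log loss with respect to the logit a*s + b
            err = sigmoid(a * s + b) - y
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def platt_calibrate(score: float, a: float, b: float) -> float:
    """Map a raw score to a calibrated probability."""
    return sigmoid(a * score + b)
```

As a rough reading of the result, a fitted slope a below 1 shrinks probabilities toward 0.5, meaning the raw scores were overconfident. A slope above 1 points to underconfidence.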

2. Non-parametric calibration: Non-parametric calibration is a ranking-based method. It does not assume a specific functional form between the model's output probability and the true probability. Instead, it fits the relationship with a method called isotonic (monotonic) regression. Specifically, it sorts the model's output probabilities in ascending order and then fits a non-decreasing function from the sorted outputs to the observed labels; the value of that function is the calibrated probability. The advantage of non-parametric calibration is that no functional form has to be assumed. The disadvantage is that it requires a relatively large amount of labeled data, because with few samples the fitted function tends to overfit.
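The sort-then-fit procedure above is usually implemented with the pool adjacent violators algorithm (PAVA), sketched below. This is an illustrative sketch, not the article's code. It assumes binary labels, and the names `isotonic_fit` and `isotonic_predict` are hypothetical. For simplicity it predicts with a step function, whereas library implementations such as scikit-learn's `IsotonicRegression` interpolate linearly between fitted points.

```python
from itertools import groupby
from typing import List, Tuple


def isotonic_fit(probs: List[float], labels: List[int]) -> List[Tuple[float, float]]:
    """Pool Adjacent Violators on (probability, label) pairs.

    Returns steps as (upper_probability, calibrated_value), with values
    non-decreasing from left to right.
    """
    pairs = sorted(zip(probs, labels))
    # Each block is [sum_of_labels, count, largest_probability_in_block].
    blocks: List[list] = []
    for p, group in groupby(pairs, key=lambda t: t[0]):
        ys = [y for _, y in group]  # pool tied probabilities up front
        blocks.append([float(sum(ys)), len(ys), p])
        # Merge backwards while the previous block's mean is larger,
        # i.e. while the monotonicity constraint is violated.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            s, c, hi = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
            blocks[-1][2] = hi
    return [(hi, s / c) for s, c, hi in blocks]


def isotonic_predict(p: float, steps: List[Tuple[float, float]]) -> float:
    """Return the calibrated value of the first step covering p."""
    for hi, value in steps:
        if p <= hi:
            return value
    return steps[-1][1]  # beyond the largest training probability
```

Each merge replaces two out-of-order blocks with their pooled mean, so the final steps form the non-decreasing fit described above.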

3. Temperature scaling: Temperature scaling is a simple and effective method that calibrates the model by applying a temperature to its outputs. Specifically, it divides the model's logits (the raw outputs before softmax or sigmoid, not the probabilities) by a single temperature parameter T > 0, then applies softmax (or sigmoid in the binary case) to obtain the calibrated probabilities. A value T > 1 softens overconfident predictions, and T < 1 sharpens underconfident ones. The advantages of temperature scaling are that it is simple to implement, has only one parameter, and does not change which class the model predicts. Its disadvantages are that T must be chosen, usually by minimizing the negative log-likelihood on a small labeled validation set, and that a single global parameter may not be able to correct complex calibration problems.
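The sketch below shows how a temperature might be chosen. It is a minimal illustration under stated assumptions, not the article's method. It assumes multiclass logits with integer labels, and it selects T by a simple grid search over the validation negative log-likelihood instead of the gradient-based optimization often used in practice. The names `softmax`, `nll`, and `fit_temperature`, and the grid range, are hypothetical.

```python
import math
from typing import List, Optional


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax of logits divided by the temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows: List[List[float]], labels: List[int], temperature: float) -> float:
    """Mean negative log-likelihood of the true labels at a given temperature."""
    return -sum(math.log(softmax(row, temperature)[y])
                for row, y in zip(logit_rows, labels)) / len(labels)


def fit_temperature(logit_rows: List[List[float]], labels: List[int],
                    grid: Optional[List[float]] = None) -> float:
    """Pick the temperature that minimizes validation NLL over a grid."""
    if grid is None:
        grid = [0.25 * k for k in range(1, 41)]  # 0.25, 0.5, ..., 10.0
    return min(grid, key=lambda t: nll(logit_rows, labels, t))
```

Because dividing every logit by the same positive T preserves their order, the predicted class (the argmax) is unchanged. Only the confidence attached to it moves. For instance, a model that outputs logits [5, 0, 0] but is right only three times out of four ends up with T near 2.75, and its top-class probability drops from about 0.99 to about 0.75.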

4. Distribution calibration: Distribution calibration is based on distribution matching. It calibrates the model by transforming the distribution of its output probabilities so that it more closely matches the true probability distribution, yielding a calibrated probability distribution. Its advantage is that it can handle complex calibration problems. Its disadvantages are that it requires additional labeled data and has high computational complexity.

The above is the detailed content of Application of model calibration in machine learning. For more information, please follow other related articles on the PHP Chinese website!

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AM

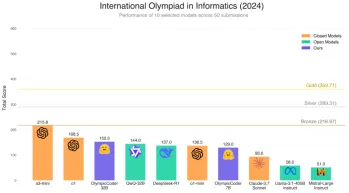

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AMHugging Face's OlympicCoder-7B: A Powerful Open-Source Code Reasoning Model The race to develop superior code-focused language models is intensifying, and Hugging Face has joined the competition with a formidable contender: OlympicCoder-7B, a product

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AM

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AMHow many of you have wished AI could do more than just answer questions? I know I have, and as of late, I’m amazed by how it’s transforming. AI chatbots aren’t just about chatting anymore, they’re about creating, researchin

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AM

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AMAs smart AI begins to be integrated into all levels of enterprise software platforms and applications (we must emphasize that there are both powerful core tools and some less reliable simulation tools), we need a new set of infrastructure capabilities to manage these agents. Camunda, a process orchestration company based in Berlin, Germany, believes it can help smart AI play its due role and align with accurate business goals and rules in the new digital workplace. The company currently offers intelligent orchestration capabilities designed to help organizations model, deploy and manage AI agents. From a practical software engineering perspective, what does this mean? The integration of certainty and non-deterministic processes The company said the key is to allow users (usually data scientists, software)

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AM

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AMAttending Google Cloud Next '25, I was keen to see how Google would distinguish its AI offerings. Recent announcements regarding Agentspace (discussed here) and the Customer Experience Suite (discussed here) were promising, emphasizing business valu

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AM

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AMSelecting the Optimal Multilingual Embedding Model for Your Retrieval Augmented Generation (RAG) System In today's interconnected world, building effective multilingual AI systems is paramount. Robust multilingual embedding models are crucial for Re

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AM

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AMTesla's Austin Robotaxi Launch: A Closer Look at Musk's Claims Elon Musk recently announced Tesla's upcoming robotaxi launch in Austin, Texas, initially deploying a small fleet of 10-20 vehicles for safety reasons, with plans for rapid expansion. H

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AM

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AMThe way artificial intelligence is applied may be unexpected. Initially, many of us might think it was mainly used for creative and technical tasks, such as writing code and creating content. However, a recent survey reported by Harvard Business Review shows that this is not the case. Most users seek artificial intelligence not just for work, but for support, organization, and even friendship! The report said that the first of AI application cases is treatment and companionship. This shows that its 24/7 availability and the ability to provide anonymous, honest advice and feedback are of great value. On the other hand, marketing tasks (such as writing a blog, creating social media posts, or advertising copy) rank much lower on the popular use list. Why is this? Let's see the results of the research and how it continues to be

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AM

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AMThe rise of AI agents is transforming the business landscape. Compared to the cloud revolution, the impact of AI agents is predicted to be exponentially greater, promising to revolutionize knowledge work. The ability to simulate human decision-maki

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Dreamweaver CS6

Visual web development tools

WebStorm Mac version

Useful JavaScript development tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.