Hugging Face's OlympicCoder-7B: A Powerful Open-Source Code Reasoning Model

The race to develop superior code-focused language models is intensifying, and Hugging Face has joined the competition with a formidable contender: OlympicCoder-7B, a product of its Open-R1 initiative. This model, specifically designed for competitive programming, leverages a Chain-of-Thought (CoT)-enhanced Codeforces dataset for fine-tuning. Its performance has already surpassed Claude 3.7 Sonnet on the IOI benchmark, sparking questions about its overall capabilities compared to closed-source alternatives. This analysis will explore OlympicCoder-7B's benchmark scores, its underlying architecture, usage instructions, and broader implications.

Understanding OlympicCoder

The Open-R1 initiative, a community-driven project by Hugging Face, aims to create open, high-quality reasoning models. This has resulted in two code-specialized models: OlympicCoder-7B and OlympicCoder-32B. OlympicCoder-7B is built upon Alibaba Cloud's open-source Qwen2.5-Coder-7B-Instruct model, but its key differentiator is its fine-tuning with the CodeForces-CoTs dataset. This dataset, incorporating thousands of competitive programming problems from Codeforces and enhanced with CoT reasoning, enables the model to break down complex problems into manageable steps, moving beyond simple code generation to true logical problem-solving.

The CodeForces-CoTs dataset itself is a meticulously curated collection of nearly 100,000 high-quality samples, refined using the R1 model. Each sample contains a problem statement, a detailed thought process, and a verified solution in both C++ and Python, ensuring model robustness and adaptability. A rigorous filtering process checks the accuracy and functionality of the code, addressing a common challenge in code model training.
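The article does not describe how the filtering works, so the following is only a minimal sketch of one plausible approach: run a candidate Python solution against known input/output pairs and keep the sample only if every test passes. The `passes_tests` helper, the field names in `sample`, and the test cases are all hypothetical and are not taken from the dataset itself.

```python
import subprocess
import sys


def passes_tests(solution_code: str, tests: list[tuple[str, str]], timeout_s: float = 5.0) -> bool:
    """Return True if the Python solution prints the expected output for every test."""
    for stdin_text, expected in tests:
        try:
            result = subprocess.run(
                [sys.executable, "-c", solution_code],  # run the candidate in a fresh interpreter
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False  # too slow counts as a failure
        if result.returncode != 0 or result.stdout.strip() != expected.strip():
            return False  # crash or wrong answer
    return True


# Hypothetical sample shaped like the description above: statement, reasoning, solution.
sample = {
    "problem": "Read two integers and print their sum.",
    "reasoning": "Parse both numbers from one line, add them, print the result.",
    "solution": "a, b = map(int, input().split())\nprint(a + b)",
}
tests = [("1 2\n", "3"), ("10 -4\n", "6")]

# Keep the sample only when its solution passes every test.
keep = passes_tests(sample["solution"], tests)
```

A real pipeline would also need sandboxing and memory limits before executing untrusted code; this sketch only illustrates the execute-and-compare idea.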

IOI Benchmark Results

OlympicCoder-7B's performance was evaluated using the IOI Benchmark, a rigorous test inspired by the International Olympiad in Informatics. This benchmark assesses a model's ability to handle real-world competitive programming problems, emphasizing logical reasoning, constraint satisfaction, and optimal solutions.

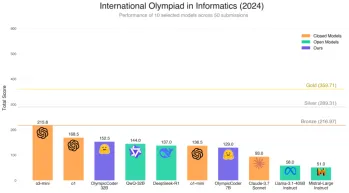

The chart displays the performance of various models on the 2024 IOI benchmark across 50 competitive programming tasks. Key findings include:

- OlympicCoder-7B achieved a score of 129.0, exceeding Claude 3.7 Sonnet (93.0) and other open models.

- While slightly behind DeepSeek-R1 (137.0), its smaller parameter count and open accessibility make its performance notable.

- QwQ-32B scored higher (144.0), although OlympicCoder-7B is credited with clearer reasoning despite its smaller size.

- Although not reaching the performance of closed models like GPT-4 variants, its results are impressive for an open-source 7B model.
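To make the ordering of the quoted scores explicit, here is a small sketch that ranks them and computes OlympicCoder-7B's margin over Claude 3.7 Sonnet. The numbers are copied from the findings above; the variable names are illustrative only.

```python
# IOI benchmark scores as quoted in the findings above.
scores = {
    "QwQ-32B": 144.0,
    "DeepSeek-R1": 137.0,
    "OlympicCoder-7B": 129.0,
    "Claude 3.7 Sonnet": 93.0,
}

# Highest score first.
ranking = sorted(scores, key=scores.get, reverse=True)

# Absolute and relative margin of OlympicCoder-7B over Claude 3.7 Sonnet.
gap = scores["OlympicCoder-7B"] - scores["Claude 3.7 Sonnet"]
relative_gap = gap / scores["Claude 3.7 Sonnet"]

for name in ranking:
    print(f"{name:20s} {scores[name]:6.1f}")
print(f"Margin over Claude 3.7 Sonnet: {gap:.1f} points ({relative_gap:.1%})")
```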

Using OlympicCoder-7B with Hugging Face

Let's explore how to utilize OlympicCoder-7B on Google Colab.

Accessing OlympicCoder: You'll need a Hugging Face access token (obtained from https://www.php.cn/link/ae714b2895215ee6c844a04374bde7fb). Ensure the token has the necessary permissions.

Running OlympicCoder-7B:

- Install Libraries: !pip install transformers accelerate

- Hugging Face Login: !huggingface-cli login (using your access token)

- Import and Load: Import torch and transformers, then load the model: pipe = pipeline("text-generation", model="open-r1/OlympicCoder-7B", torch_dtype=torch.bfloat16, device_map="auto")

- Run Inference: Provide a prompt (e.g., "Write a Python program that prints prime numbers up to 100") within a message structure. The model will generate code. Note that inference time can be significant.
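The steps above can be sketched as a short script. The pipeline arguments are the ones given in the text; the helper names (`build_messages`, `load_pipeline`, `generate`) and the generation settings (`max_new_tokens`, `temperature`) are assumptions chosen for illustration, so adjust them to your runtime.

```python
MODEL_ID = "open-r1/OlympicCoder-7B"


def build_messages(task: str) -> list[dict]:
    """Wrap a plain-text task in the chat message structure."""
    return [{"role": "user", "content": task}]


def load_pipeline(model_id: str = MODEL_ID):
    """Load the text-generation pipeline (downloads the weights on first use)."""
    # Imported lazily so the lightweight helpers work without torch installed.
    import torch
    from transformers import pipeline

    return pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )


def generate(pipe, task: str, max_new_tokens: int = 2048) -> str:
    """Apply the model's chat template to the task and return the generated text."""
    prompt = pipe.tokenizer.apply_chat_template(
        build_messages(task), tokenize=False, add_generation_prompt=True
    )
    outputs = pipe(prompt, max_new_tokens=max_new_tokens, do_sample=True, temperature=0.7)
    return outputs[0]["generated_text"]


# Example usage (needs a GPU runtime and the model weights):
# pipe = load_pipeline()
# print(generate(pipe, "Write a Python program that prints prime numbers up to 100"))
```

Because the model writes out its reasoning before the final code, a generous `max_new_tokens` budget is usually needed, which is one reason inference can be slow.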

Alternative Access: For users with sufficient hardware, LM Studio (https://www.php.cn/link/1d35446bf1a709c48f740928326cb4a7) offers local model deployment.

Key Lessons from Training OlympicCoder:

Hugging Face's experience highlights several valuable insights:

- Efficient sample packing enhances reasoning depth.

- Higher learning rates can stabilize training.

- Including Codeforces editorials improves performance.

- <think> tags encourage longer, more coherent thought chains.

- 8-bit optimizers enable efficient training of large models.
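The article does not say which packing strategy was used, so the following is only a generic sketch of the idea behind sample packing: grouping several tokenized samples into one fixed-length training row without truncating any of them. The function name and the greedy first-fit-decreasing strategy are assumptions for illustration.

```python
def pack_sequences(lengths: list[int], max_len: int) -> list[list[int]]:
    """Greedy first-fit-decreasing packing.

    Groups sample indices so that each group's total token count stays
    within max_len, without truncating any individual sample.
    """
    bins: list[list[int]] = []  # sample indices in each packed row
    free: list[int] = []        # remaining token capacity of each row
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    for i in order:
        if lengths[i] > max_len:
            raise ValueError(f"sample {i} is longer than max_len")
        for b, room in enumerate(free):
            if lengths[i] <= room:
                bins[b].append(i)   # fits into an existing row
                free[b] -= lengths[i]
                break
        else:
            bins.append([i])        # no row had room, so open a new one
            free.append(max_len - lengths[i])
    return bins


# Token counts of five hypothetical samples, packed into rows of at most 1024 tokens.
packed = pack_sequences([700, 300, 512, 200, 90], max_len=1024)
print(packed)  # [[0, 1], [2, 3, 4]]
```

Keeping each sample whole matters for reasoning data, since cutting a long chain of thought in the middle removes the part that leads to the final answer.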

Recent Open-R1 Developments:

The Open-R1 project continues to evolve with advancements like GRPO (a new reinforcement learning method), the Open R1 Math Dataset, a reasoning course, and active community contributions.

Applications of OlympicCoder-7B:

OlympicCoder-7B finds applications in:

- Competitive programming training.

- Code review with reasoning.

- Generating editorial-style explanations.

- Creating custom coding tutors.

- Educational applications for algorithms and data structures.

Conclusion:

OlympicCoder-7B represents a significant advancement in open-source code reasoning models. Its strong performance, innovative dataset, and practical applications make it a valuable asset for various users. Continued community support and development promise to solidify its position within the open-source AI ecosystem.

Frequently Asked Questions (FAQs):

- Q1: What is the IOI benchmark? A1: It measures a model's ability to solve competitive programming problems.

- Q2: What is Qwen? A2: A series of large language models from Alibaba Cloud.

- Q3: What is OlympicCoder-32B's base model? A3: Qwen/Qwen2.5-Coder-32B-Instruct.

- Q4: What is open-r1/codeforces-cots? A4: The dataset used for training OlympicCoder-7B.
