Google unveils DIDACT, its 'AI + software engineering' framework: thousands of developers have tested it internally and say it makes them more productive

No large-scale software is fully conceived from the start. Instead, developers improve it, edit it, write unit tests, fix bugs, and address code review feedback, over and over, until it is good enough to ship. Only once all the requirements are met can the code be merged into the repository.

The discipline of managing this entire process is called software engineering.

Software engineering is not a standalone process: it involves developers, code reviewers, bug reporters, software architects, and a range of development tools (such as compilers, unit tests, linkers, and static analyzers).

Recently, Google announced DIDACT (Dynamic Integrated Developer ACTivity), a framework that uses AI to enhance software engineering. It uses the intermediate states of software development as training data, so that models can help developers write and modify code and can follow the dynamics of software development in real time.

DIDACT is a multi-task model trained on development activities including editing, debugging, repair, and code review.

The researchers built and deployed three DIDACT tools in-house, Comment Resolution, Build Repair, and Tip Prediction, each integrated at a different stage of the development workflow.

Software Engineering as Interaction Logs

For decades, Google's software engineering toolchain has stored every code-related operation as a log of interactions between tools and developers.

In principle, these records could be used to replay in detail the key episodes of the software development process, that is, how Google's codebase came to be, including every code edit, compilation, comment, variable rename, and so on.
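To make the idea of replaying an interaction log concrete, here is a minimal sketch. It assumes a hypothetical log format in which each event is either an edit (replace a range of lines in a file) or a non-edit tool event such as a build; `LogEvent` and `replay` are illustrative names, not Google's actual log schema.

```python
from dataclasses import dataclass, field


@dataclass
class LogEvent:
    """One entry in a hypothetical developer-activity log."""
    kind: str                 # "edit", or a tool event such as "build"
    path: str = ""            # file touched by an edit
    start: int = 0            # first line replaced (0-based)
    end: int = 0              # one past the last line replaced
    new_lines: list[str] = field(default_factory=list)  # replacement lines


def replay(events: list[LogEvent]) -> dict[str, list[str]]:
    """Rebuild each file's contents by applying the logged edits in order.

    Non-edit events (builds, test runs, review comments) do not change
    file contents, so this sketch skips them.
    """
    files: dict[str, list[str]] = {}
    for ev in events:
        if ev.kind != "edit":
            continue
        lines = files.setdefault(ev.path, [])
        lines[ev.start:ev.end] = ev.new_lines
    return files


events = [
    LogEvent("edit", "main.py", 0, 0, ["def f(x):", "    return x"]),
    LogEvent("build"),
    LogEvent("edit", "main.py", 1, 2, ["    return x + 1"]),
]
print(replay(events))  # {'main.py': ['def f(x):', '    return x + 1']}
```

Stopping the replay partway through the event list yields the intermediate states of the code, which is the kind of data the article says DIDACT learns from.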

Google's development teams store their code in a monorepo (monolithic repository), a single repository that contains the code for all of Google's tools and systems.

Software developers typically experiment with code changes in a local copy-on-write workspace managed by the Clients in the Cloud (CitC) system.

When a developer is ready to bundle a set of code changes that accomplish a specific task (such as fixing a bug), they create a changelist (CL) in Critique, Google's code review system.

As in other common code review systems, the developer discusses functionality and style with peer reviewers and then edits the CL to address the issues raised in review comments.

Eventually, the reviewer declares the code "LGTM!" and the CL is merged into the codebase.

Of course, beyond conversations with code reviewers, developers also carry on many "dialogues" with other software engineering tools, including compilers, test frameworks, linkers, static analyzers, and fuzz testing tools.

An illustration of the complex web of activities involved in software development: actions by the developer, interactions with code reviewers, and invocations of tools such as compilers.

Multi-task models in software engineering

DIDACT uses these interactions between engineers and tools to power machine learning models that assist Google developers throughout the software engineering process, by suggesting or enhancing the actions developers take while carrying out software engineering tasks.

To this end, the researchers defined a number of tasks covering individual developer activities: fixing broken builds, predicting code review comments, addressing code review comments, renaming variables, editing files, and so on.

They then defined a common formalism for every activity: it takes a State (a code file) and an Intent (annotations specific to the activity, such as code review comments or compiler errors), and produces an Action (the operation taken to address the task).
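The State-Intent-Action formalism can be pictured as a plain data type plus a rule for turning it into model input. The sketch below is an assumption for illustration only: the `Example` class, its field names, the `<task>`/`<state>`/`<intent>`/`<action>` markers, and the action string are all hypothetical, not DIDACT's published format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """A hypothetical State-Intent-Action training example."""
    task: str    # which developer activity, e.g. "fix_build"
    state: str   # the code file before the action
    intent: str  # activity-specific signal, e.g. a compiler error or review comment
    action: str  # the target output the model should learn to produce


def to_prompt(ex: Example) -> str:
    """Render task, state, and intent as model input; the action is left as the target."""
    return f"<task> {ex.task}\n<state>\n{ex.state}\n<intent>\n{ex.intent}\n<action>\n"


ex = Example(
    task="fix_build",
    state="int main() { return x; }",
    intent="error: 'x' was not declared in this scope",
    action='EDIT 1 "int main() { return 0; }"',
)
prompt, target = to_prompt(ex), ex.action
```

Because every task shares the same three slots, a single multi-task model can be trained on a mix of build fixes, review comments, and renames simply by varying the `task` tag and the contents of each slot.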

The Action works like a small programming language that can be extended to cover newly added activities, including editing, adding comments, renaming variables, flagging code errors, and so on. This language is called DevScript.
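DevScript's actual syntax is not described here, so the following is only a hypothetical sketch of what a tiny textual action language might look like: each command is a verb plus arguments, parsed into a typed action. The verbs `EDIT`, `COMMENT`, and `RENAME` and the `parse_action` function are invented for illustration.

```python
import shlex
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Edit:
    line: int     # 1-based line to replace
    text: str     # new contents of that line


@dataclass(frozen=True)
class Comment:
    line: int     # 1-based line the comment is attached to
    message: str  # reviewer-style comment text


@dataclass(frozen=True)
class Rename:
    old: str      # identifier to rename
    new: str      # replacement identifier


Action = Union[Edit, Comment, Rename]


def parse_action(command: str) -> Action:
    """Parse one command of a hypothetical DevScript-like action language.

    Every verb here takes exactly two arguments; quoted arguments may contain spaces.
    Supporting a new activity means adding a new verb and dataclass.
    """
    parts = shlex.split(command)
    if len(parts) != 3:
        raise ValueError(f"malformed action: {command!r}")
    verb, first, second = parts
    if verb == "EDIT":
        return Edit(int(first), second)
    if verb == "COMMENT":
        return Comment(int(first), second)
    if verb == "RENAME":
        return Rename(first, second)
    raise ValueError(f"unknown action verb: {verb!r}")


print(parse_action('EDIT 3 "return total"'))  # Edit(line=3, text='return total')
print(parse_action("RENAME cnt count"))       # Rename(old='cnt', new='count')
```

Keeping the verb set open is what lets such a language grow with new activities: the model's output vocabulary stays the same, and only the set of recognized verbs expands.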

The DIDACT model is prompted with a task, code snippets, and comments related to that task, and it outputs development actions such as edits or comments.

This State-Intent-Action formalism captures many different tasks in a general way. More importantly, DevScript can express complex actions concisely, without having to output the entire state (the original code) as it would look after the action, which makes the model more efficient and more interpretable.

For example, a rename may change many places in a code file, but the model only needs to predict a single rename action.
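To see why one predicted rename is cheaper than regenerating the whole file, the sketch below expands a single rename action into all the concrete changes it implies. It is a hypothetical, purely textual stand-in (whole-word regex substitution), not how DIDACT or any Google tool actually performs renames.

```python
import re


def apply_rename(source: str, old: str, new: str) -> tuple[str, int]:
    """Expand one rename action into every concrete change it implies.

    Purely textual: a whole-word regex, so `cnt` will not match inside
    `cnt_total`, but scopes, strings, and comments are ignored, which a
    real refactoring tool would have to respect.
    Returns the rewritten source and the number of occurrences changed.
    """
    pattern = re.compile(rf"\b{re.escape(old)}\b")
    # A function replacement avoids treating backslashes in `new` as escapes.
    return pattern.subn(lambda _match: new, source)


src = "cnt = 0\nfor x in xs:\n    cnt += x\nprint(cnt, cnt_total)\n"
new_src, changed = apply_rename(src, "cnt", "count")
print(changed)  # 3
```

The model's output is the three tokens of the action (`RENAME cnt count`, in a hypothetical syntax), while the deterministic expansion touches three separate lines; the saving grows with the size of the file.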

An AI peer programmer

DIDACT performs well on individual assistive tasks. For example, the following example shows DIDACT cleaning up code after a feature is complete: it takes the code reviewer's final comments (marked "human" in the figure) as input and then predicts the edits needed to address the issues raised in those comments (shown as a diff).

Given an initial code snippet and the comments that a code reviewer attached to it, DIDACT's Pre-Submit Cleanup task produces edit operations (insertions and deletions of text) that address those comments.
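The "insertions and deletions of text" in the caption can be made concrete with Python's standard `difflib`. This sketch only shows one way to express a before/after pair as line-level delete and insert operations; the `edit_ops` function and its output format are assumptions for illustration, not DIDACT's actual edit representation.

```python
import difflib


def edit_ops(before: list[str], after: list[str]) -> list[tuple[str, int, list[str]]]:
    """Express the change from `before` to `after` as line deletions and insertions.

    Each op is (kind, position, lines), where position is an index into `before`.
    difflib "replace" opcodes are split into a delete followed by an insert.
    """
    ops: list[tuple[str, int, list[str]]] = []
    matcher = difflib.SequenceMatcher(a=before, b=after, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("delete", "replace"):
            ops.append(("delete", i1, before[i1:i2]))
        if tag in ("insert", "replace"):
            ops.append(("insert", i1, after[j1:j2]))
    return ops


before = ["def area(r):", "    return 3.14 * r * r"]
after = ["import math", "", "def area(r):", "    return math.pi * r * r"]
for op in edit_ops(before, after):
    print(op)
```

A compact list of operations like this is much shorter than the full rewritten file, which is the same efficiency argument the article makes for DevScript actions.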

The multimodal nature of DIDACT also gives rise to some entirely new capabilities that emerge with scale. One of them is history augmentation, which can be enabled through prompting: knowing what the developer did recently lets the model better predict what the developer should do next.
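One plausible way to picture history augmentation via prompting is to prepend a short window of the developer's recent actions to the model input. The sketch below assumes a hypothetical prompt layout (`<history>`, `<cursor>`, `<state>` markers) and a fixed-size window; `HistoryPrompt` is an invented name, and the real DIDACT prompt format is not described in this article.

```python
from collections import deque


class HistoryPrompt:
    """Keeps a window of recent developer actions and folds it into a prompt."""

    def __init__(self, max_events: int = 3) -> None:
        # Once the window is full, the oldest event is dropped automatically.
        self.history: deque = deque(maxlen=max_events)

    def record(self, event: str) -> None:
        self.history.append(event)

    def build(self, code: str, cursor_line: int) -> str:
        """Prompt = recent history, cursor position, then the current file."""
        parts = [f"<history {i}> {event}" for i, event in enumerate(self.history, start=1)]
        parts += [f"<cursor> line {cursor_line}", "<state>", code, "<predict next action>"]
        return "\n".join(parts)


hp = HistoryPrompt(max_events=2)
hp.record("opened run.py")
hp.record("added parameter `verbose` to run()")
hp.record("moved cursor into the run() docstring")
prompt = hp.build('def run(path, verbose):\n    """Run the job."""', cursor_line=2)
print(prompt)
```

With the "added parameter" event in the window, a model has a signal that the next useful action is documenting `verbose`; without it, the same file and cursor position are far more ambiguous.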

Demonstration of history-augmented code completion

The history-augmented code completion task demonstrates this capability. In the example above, the developer adds a new function parameter (1) and moves the cursor into the docstring (2). Conditioned on the developer's editing history and the cursor position, the model correctly predicts the docstring entry for the new parameter, completing the third step (3).

In the harder task of history-augmented edit prediction, the model can choose where to make the next edit in a way that is consistent with the history.

Demonstration of edit prediction over multiple chained iterations

If a developer removes a function parameter (1), the model uses the history to correctly predict an update to the docstring that removes the parameter (2), without the human developer having to place the cursor there manually, and then updates a statement in the function in a syntactically (and arguably semantically) correct way (3).

With the history, the model can unambiguously decide how to continue the editing process correctly. Without it, the model cannot tell whether the missing function parameter is intentional (because the developer is in the middle of a longer edit to remove it) or accidental (in which case the model should re-add the parameter to fix the problem).

The model can also take on more ambitious tasks, such as starting from a blank file and repeatedly predicting the next edit until it has written a complete code file.
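The blank-file scenario is essentially a loop: ask the model for the next edit, apply it, add it to the history, and repeat until the model signals that it is done. The sketch below makes that loop explicit under simplifying assumptions: each edit just appends one line, and `scripted_predictor` is a hypothetical stand-in that replays a fixed plan instead of calling a real model.

```python
from typing import Callable, Optional

# A predictor maps (current file, edit history) to the next edit, or None when done.
# Here an edit is simply a line to append; a real model would predict richer actions.
Predictor = Callable[[list[str], list[str]], Optional[str]]


def write_file(predict: Predictor, max_steps: int = 50) -> list[str]:
    """Start from an empty file and apply predicted edits until the predictor stops."""
    file_lines: list[str] = []
    history: list[str] = []
    for _ in range(max_steps):  # hard cap so a misbehaving predictor cannot loop forever
        edit = predict(file_lines, history)
        if edit is None:
            break
        file_lines.append(edit)
        history.append(f"append: {edit}")
    return file_lines


def scripted_predictor(plan: list[str]) -> Predictor:
    """Hypothetical stand-in for a model: replays a fixed plan one step at a time."""
    def predict(file_lines: list[str], history: list[str]) -> Optional[str]:
        step = len(history)
        return plan[step] if step < len(plan) else None
    return predict


plan = ["import re", "import sys", "", "def main():", "    pass"]
result = write_file(scripted_predictor(plan))
```

Feeding the growing history back into each prediction is what ties this loop to the history augmentation described earlier: every step is conditioned on the edits that came before it.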

Most importantly, the model writes code step by step, in a way that feels natural to developers:

It starts by creating a complete working skeleton with imports, flags, and a basic main function, then incrementally adds new functionality, such as reading from and writing results to files, and filtering out certain lines based on a user-supplied regular expression.

Conclusion

DIDACT turns Google's software development process into training demonstrations for machine learning developer assistants, and uses this demonstration data to train models that build code step by step and interact with tools and code reviewers.

The DIDACT approach complements the major advances in large language models from Google and others, with the shared aim of reducing toil, increasing productivity, and improving the quality of software engineers' work.

The above is the detailed content of Google discloses its own 'AI+ software engineering' framework DIDACT: Thousands of developers have tested it internally, and they all say it is highly productive after using it. For more information, please follow other related articles on the PHP Chinese website!

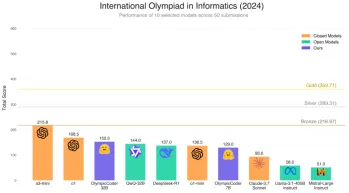

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AM

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AMHugging Face's OlympicCoder-7B: A Powerful Open-Source Code Reasoning Model The race to develop superior code-focused language models is intensifying, and Hugging Face has joined the competition with a formidable contender: OlympicCoder-7B, a product

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AM

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AMHow many of you have wished AI could do more than just answer questions? I know I have, and as of late, I’m amazed by how it’s transforming. AI chatbots aren’t just about chatting anymore, they’re about creating, researchin

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AM

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AMAs smart AI begins to be integrated into all levels of enterprise software platforms and applications (we must emphasize that there are both powerful core tools and some less reliable simulation tools), we need a new set of infrastructure capabilities to manage these agents. Camunda, a process orchestration company based in Berlin, Germany, believes it can help smart AI play its due role and align with accurate business goals and rules in the new digital workplace. The company currently offers intelligent orchestration capabilities designed to help organizations model, deploy and manage AI agents. From a practical software engineering perspective, what does this mean? The integration of certainty and non-deterministic processes The company said the key is to allow users (usually data scientists, software)

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AM

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AMAttending Google Cloud Next '25, I was keen to see how Google would distinguish its AI offerings. Recent announcements regarding Agentspace (discussed here) and the Customer Experience Suite (discussed here) were promising, emphasizing business valu

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AM

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AMSelecting the Optimal Multilingual Embedding Model for Your Retrieval Augmented Generation (RAG) System In today's interconnected world, building effective multilingual AI systems is paramount. Robust multilingual embedding models are crucial for Re

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AM

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AMTesla's Austin Robotaxi Launch: A Closer Look at Musk's Claims Elon Musk recently announced Tesla's upcoming robotaxi launch in Austin, Texas, initially deploying a small fleet of 10-20 vehicles for safety reasons, with plans for rapid expansion. H

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AM

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AMThe way artificial intelligence is applied may be unexpected. Initially, many of us might think it was mainly used for creative and technical tasks, such as writing code and creating content. However, a recent survey reported by Harvard Business Review shows that this is not the case. Most users seek artificial intelligence not just for work, but for support, organization, and even friendship! The report said that the first of AI application cases is treatment and companionship. This shows that its 24/7 availability and the ability to provide anonymous, honest advice and feedback are of great value. On the other hand, marketing tasks (such as writing a blog, creating social media posts, or advertising copy) rank much lower on the popular use list. Why is this? Let's see the results of the research and how it continues to be

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AM

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AMThe rise of AI agents is transforming the business landscape. Compared to the cloud revolution, the impact of AI agents is predicted to be exponentially greater, promising to revolutionize knowledge work. The ability to simulate human decision-maki

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SublimeText3 Chinese version

Chinese version, very easy to use

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),