AI is resource-intensive on any platform, including the public cloud. Most AI technologies require a large volume of inference calculations. Together these drive up processor, network, and storage requirements, and with them electricity bills, infrastructure costs, and carbon footprints.

The rise of generative AI systems such as ChatGPT has brought these issues back into focus. The technology is already widely used and is likely to be adopted at large scale by companies, governments, and the general public, so the growth curve for electricity consumption is taking on a worrying arc.

AI technology has been around since the 1970s. Because a capable AI system required so many resources, the technology had little commercial impact at first. I remember that an AI system I designed in my 20s required more than $40 million in hardware, software, and data center space to run. Spoiler alert: that project, like many other AI projects, never got off the ground. The business case simply wasn't viable.

Cloud computing changed everything. Things that were once out of reach became cost-effective enough on the public cloud. In fact, as you might have guessed, the rise of the cloud has roughly coincided with the rise of AI over the past 10 to 15 years. The two are now tightly coupled.

Sustainability and Cost of Cloud Resources

You really don't need to do much research to predict what will happen here. There will be a surge in demand for AI services, including the generative AI systems now attracting attention as well as other AI and machine learning systems. This surge will be led by businesses seeking an innovative edge, such as smart supply chains, and even by thousands of college students hoping to have a generative AI system write their term papers.

More demand for AI means more demand for the resources these systems use, such as public clouds and the services they provide. That demand will likely be met by more data centers housing power-hungry servers and network equipment.

Public cloud providers, like any other utility, will raise prices as demand increases, just as household electricity bills rise seasonally with demand. At home, we respond by using less, for example by setting the air conditioner to 24°C instead of 20°C in the summer.

However, higher cloud computing costs may not have the same effect on businesses. Enterprises may find that these AI systems drive key business processes and are indispensable. In many cases, they may instead try to save money elsewhere in the business, perhaps by reducing headcount to offset the cost of AI systems. It's no secret that generative AI systems will soon replace many information workers.

What can be done?

If the resource demands of running AI systems lead to higher computing costs and carbon output, what can we do? The answer may lie in finding more efficient ways for AI to use resources such as processing, networking, and storage.

For example, a sampling pipeline can speed up deep learning by reducing the amount of data processed. Research from MIT and IBM shows that this approach can reduce the resources required to run neural networks on large data sets. However, it also limits accuracy, which may be acceptable for some business use cases but not all.
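The article doesn't describe the MIT/IBM technique in enough detail to reproduce, so the sketch below shows only the general idea behind a sampling pipeline: train on a random subset of the data instead of all of it. Uniform random subsampling, the `subsample` helper, and the "examples processed" cost proxy are illustrative assumptions, not the researchers' method.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def subsample(data: Sequence[T], fraction: float, seed: int = 0) -> List[T]:
    """Return a uniform random subset holding roughly `fraction` of `data`.

    Illustrative assumption: plain uniform sampling without replacement,
    not the specific sampling scheme from the MIT/IBM research.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in the interval (0, 1]")
    if len(data) == 0:
        return []
    rng = random.Random(seed)  # seeded so runs are reproducible
    k = max(1, round(len(data) * fraction))
    return rng.sample(list(data), k)  # no item is picked twice


def examples_processed(num_examples: int, epochs: int) -> int:
    """Crude compute proxy: total example visits during training."""
    return num_examples * epochs


if __name__ == "__main__":
    data = list(range(10_000))
    subset = subsample(data, fraction=0.1)
    full_cost = examples_processed(len(data), epochs=5)
    sampled_cost = examples_processed(len(subset), epochs=5)
    print(len(subset), full_cost, sampled_cost)  # 1000 50000 5000
```

Under this proxy, training on a 10% sample cuts the work by about 10x. The accuracy cost the article mentions comes from the model never seeing the other 90% of the examples.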

Another approach, already used in other technology areas, is in-memory computing. This architecture avoids shuttling data in and out of memory. Instead, AI calculations run directly within the memory module, which can speed up processing significantly.

Other methods are also under development. Examples include changing the physical processor, using coprocessors dedicated to AI calculations to increase computing speed, and adopting next-generation computing models such as quantum computing. Expect plenty of announcements from the big public cloud providers about how they plan to solve many of these problems.

What should you do?

My advice is certainly not to avoid AI to get a lower cloud bill or to save the planet. AI is a foundational computing approach that most businesses can leverage for significant value.

I recommend that you approach any AI-enabled or net-new AI development project with a clear understanding of its cost and its sustainability impact, which are directly related. You'll have to make a cost/benefit call, and that ultimately comes down to the value the system can bring to the business relative to its cost and risk. After all, there's nothing new about that.
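To make that cost/benefit call concrete, a back-of-the-envelope model can tie cloud spend and carbon output to the same driver, which is resource consumption. The class below is a hypothetical sketch. Its name, its fields, the flat per-GPU-hour price (assumed to already include the provider's energy costs), the fixed power draw, and the fixed grid carbon intensity are all simplifying assumptions. The numbers in the usage example are made up and are not real prices or measurements.

```python
from dataclasses import dataclass


@dataclass
class AIWorkloadEstimate:
    """Hypothetical monthly estimate for one AI workload (all inputs are assumptions)."""
    gpu_hours: float           # accelerator hours consumed per month
    price_per_gpu_hour: float  # cloud price in USD per accelerator hour (energy included)
    kw_per_gpu: float          # assumed average power draw per accelerator, in kW
    kg_co2_per_kwh: float      # assumed carbon intensity of the data center's grid
    monthly_value: float       # business value the workload delivers, in USD

    def cloud_cost(self) -> float:
        return self.gpu_hours * self.price_per_gpu_hour

    def energy_kwh(self) -> float:
        # Energy = power x time; both cost and carbon scale with resource use.
        return self.gpu_hours * self.kw_per_gpu

    def co2_kg(self) -> float:
        return self.energy_kwh() * self.kg_co2_per_kwh

    def net_value(self) -> float:
        return self.monthly_value - self.cloud_cost()

    def break_even_price(self) -> float:
        # Highest price per GPU hour at which the workload still pays for itself.
        return self.monthly_value / self.gpu_hours


if __name__ == "__main__":
    est = AIWorkloadEstimate(gpu_hours=1_000, price_per_gpu_hour=2.0,
                             kw_per_gpu=0.5, kg_co2_per_kwh=0.4,
                             monthly_value=5_000)
    print(est.cloud_cost(), est.energy_kwh(), est.co2_kg(),
          est.net_value(), est.break_even_price())
    # 2000.0 500.0 200.0 3000.0 5.0
```

Comparing `price_per_gpu_hour` with `break_even_price()` shows how much a demand-driven price increase the workload could absorb before it stops paying for itself. Reducing `gpu_hours` lowers cost and carbon output together.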

I do believe most of these problems will be solved in innovative ways, whether through in-memory computing, quantum computing, or solutions we haven't seen yet. Both AI technology providers and cloud providers are keen to make AI more cost-effective and greener. That's good news.

Source: www.cio.com

The above is the detailed content of The cost and sustainability of generative AI. For more information, please follow other related articles on the PHP Chinese website!

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AM

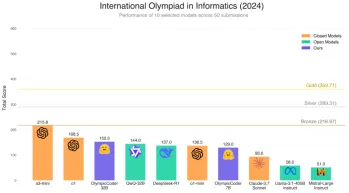

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AMHugging Face's OlympicCoder-7B: A Powerful Open-Source Code Reasoning Model The race to develop superior code-focused language models is intensifying, and Hugging Face has joined the competition with a formidable contender: OlympicCoder-7B, a product

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AM

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AMHow many of you have wished AI could do more than just answer questions? I know I have, and as of late, I’m amazed by how it’s transforming. AI chatbots aren’t just about chatting anymore, they’re about creating, researchin

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AM

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AMAs smart AI begins to be integrated into all levels of enterprise software platforms and applications (we must emphasize that there are both powerful core tools and some less reliable simulation tools), we need a new set of infrastructure capabilities to manage these agents. Camunda, a process orchestration company based in Berlin, Germany, believes it can help smart AI play its due role and align with accurate business goals and rules in the new digital workplace. The company currently offers intelligent orchestration capabilities designed to help organizations model, deploy and manage AI agents. From a practical software engineering perspective, what does this mean? The integration of certainty and non-deterministic processes The company said the key is to allow users (usually data scientists, software)

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AM

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AMAttending Google Cloud Next '25, I was keen to see how Google would distinguish its AI offerings. Recent announcements regarding Agentspace (discussed here) and the Customer Experience Suite (discussed here) were promising, emphasizing business valu

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AM

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AMSelecting the Optimal Multilingual Embedding Model for Your Retrieval Augmented Generation (RAG) System In today's interconnected world, building effective multilingual AI systems is paramount. Robust multilingual embedding models are crucial for Re

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AM

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AMTesla's Austin Robotaxi Launch: A Closer Look at Musk's Claims Elon Musk recently announced Tesla's upcoming robotaxi launch in Austin, Texas, initially deploying a small fleet of 10-20 vehicles for safety reasons, with plans for rapid expansion. H

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AM

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AMThe way artificial intelligence is applied may be unexpected. Initially, many of us might think it was mainly used for creative and technical tasks, such as writing code and creating content. However, a recent survey reported by Harvard Business Review shows that this is not the case. Most users seek artificial intelligence not just for work, but for support, organization, and even friendship! The report said that the first of AI application cases is treatment and companionship. This shows that its 24/7 availability and the ability to provide anonymous, honest advice and feedback are of great value. On the other hand, marketing tasks (such as writing a blog, creating social media posts, or advertising copy) rank much lower on the popular use list. Why is this? Let's see the results of the research and how it continues to be

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AM

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AMThe rise of AI agents is transforming the business landscape. Compared to the cloud revolution, the impact of AI agents is predicted to be exponentially greater, promising to revolutionize knowledge work. The ability to simulate human decision-maki

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Dreamweaver Mac version

Visual web development tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software