OpenAI releases GPT-4. What technology trends are worth paying attention to?

This article is adapted from an answer by Zhang Junlin, head of new technology research and development at Sina Weibo and director of the Chinese Information Society of China, to the Zhihu question "OpenAI releases GPT-4, what are the technical optimizations or breakthroughs?" It summarizes the three directions pointed out in the GPT-4 technical report and also mentions two other technical directions.

At this historic moment, I am answering a question to leave my own footprint as a witness to history.

The GPT-4 technical report clearly points out three new directions:

First, the most cutting-edge LLM research is becoming closed, or confined to small circles. The technical report states that, for competitive and safety reasons, technical details such as model size are not disclosed. GPT-2 was open-sourced, GPT-3 came with only a paper, ChatGPT came with no paper at all, and the GPT-4 technical report reads more like a performance evaluation report. The trend is obvious: OpenAI has cemented its nickname "CloseAI" and will no longer publish papers on its cutting-edge LLM research.

In this situation, other companies with relatively advanced technology have two options. One is to push open-source LLMs even further, which is the path Meta seems to have chosen. This is generally a reasonable choice for a company at a competitive disadvantage, but the technology released this way is often not the most cutting-edge. The other is to follow OpenAI and close off their technology as well. Google was previously regarded as the second tier in LLMs, but after the "Microsoft + OpenAI" one-two punch its position is now somewhat awkward. GPT-4 finished training in August of last year, and GPT-5 is presumably being trained right now. That Google ended up where it is despite such a long time window is striking, considering that critical research such as the Transformer and CoT came out of Google itself. I do not know what its senior leadership makes of this outcome. If Google follows up quickly, staying in the second tier should not be a big problem, and it will probably be well ahead of whoever is third. For competitive reasons, I expect Google will most likely follow OpenAI down the closed path: its most advanced LLM technology will go first into training its own models rather than into papers that benefit everyone, OpenAI in particular. This is likely to close off the most cutting-edge LLM research.

Counting from now, after some period of time (reaching 60 to 70% of ChatGPT's level should come fairly quickly, while drawing level will probably take much longer), China will inevitably be forced into a situation of independent innovation. Judging from what has happened domestically over the past three months, what will the future look like? Most likely, not optimistic. This hurdle is certainly difficult, but it has to be cleared. I can only wish the best to those who have the ability and the determination.

Second, the "capability prediction" for LLMs mentioned in the GPT-4 technical report is a very valuable new research direction (there was in fact some earlier material on this; I remember reading it but cannot recall exactly which). The idea is to use small models to predict a particular capability of a large model under a given parameter configuration. If the prediction is accurate enough, it can greatly shorten the training cycle and greatly reduce the cost of trial and error. So whether for its theoretical or its practical value, the specific technical methods are definitely worth studying carefully.
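To make the idea of capability prediction concrete, here is a minimal sketch, not the method used for GPT-4. It assumes that loss follows a pure power law in training compute and fits that law on a few small runs before extrapolating to a much larger one. The GPT-4 report describes a fit that also includes an irreducible-loss term, which this sketch omits for simplicity. All numbers and the names `fit_power_law` and `predict_loss` are made up for illustration.

```python
import math

def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) + b * log(compute).

    Returns (a, b) such that loss is approximately a * compute ** b.
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b

def predict_loss(a, b, compute):
    """Extrapolate the fitted power law to a new compute budget."""
    return a * compute ** b

# Synthetic "small run" measurements generated from a known power law.
# These are assumptions for illustration, not real training results.
TRUE_A, TRUE_B = 10.0, -0.05
small_compute = [1e15, 1e16, 1e17, 1e18]
small_loss = [TRUE_A * c ** TRUE_B for c in small_compute]

a, b = fit_power_law(small_compute, small_loss)

# Predict the loss of a run with 10,000x more compute than the largest small run.
large_compute = 1e22
predicted = predict_loss(a, b, large_compute)
print(f"fitted exponent b = {b:.4f}, predicted loss = {predicted:.4f}")
```

With real measurements the points would be noisy, and the quality of the extrapolation would depend on how well the assumed functional form holds at scale, which is exactly the research question.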

Third, OpenAI open-sourced an LLM evaluation framework alongside GPT-4, which is also a very important direction for the rapid development of LLM technology from here on. For Chinese in particular, building practical Chinese LLM evaluation data and frameworks is especially significant: good evaluation data can quickly reveal an LLM's current shortcomings and where to improve. Yet this area is clearly almost blank at present. The resource requirements are actually not that high, so it suits many organizations, but it is genuinely hard work.
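As a rough illustration of what an evaluation framework does at its core, the sketch below scores a model on a small set of question/answer samples by exact match and collects the failures for inspection. It is not the API of OpenAI's framework; the sample format, the `evaluate` function, and the `toy_model` stub are all hypothetical.

```python
from typing import Callable, Dict, List

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count as errors."""
    return " ".join(text.strip().lower().split())

def evaluate(model: Callable[[str], str], samples: List[Dict[str, str]]) -> Dict[str, object]:
    """Run the model on every sample and report exact-match accuracy plus failures."""
    failures = []
    correct = 0
    for sample in samples:
        answer = model(sample["input"])
        if normalize(answer) == normalize(sample["ideal"]):
            correct += 1
        else:
            failures.append({"input": sample["input"], "ideal": sample["ideal"], "got": answer})
    return {"accuracy": correct / len(samples), "failures": failures}

# A tiny, made-up Chinese evaluation set (questions with reference answers).
SAMPLES = [
    {"input": "中国的首都是哪里？", "ideal": "北京"},      # What is the capital of China?
    {"input": "2 + 3 = ?", "ideal": "5"},
    {"input": "《静夜思》的作者是谁？", "ideal": "李白"},  # Who wrote "Quiet Night Thought"?
]

def toy_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it only knows two of the three answers."""
    canned = {"中国的首都是哪里？": "北京", "2 + 3 = ?": "5"}
    return canned.get(prompt, "不知道")  # "I don't know"

report = evaluate(toy_model, SAMPLES)
print(f"accuracy = {report['accuracy']:.2f}")
for failure in report["failures"]:
    print("failed:", failure["input"], "expected", failure["ideal"], "got", failure["got"])
```

The hard work the text refers to lies mostly in the data: writing samples that are unambiguous, cover the capabilities that matter, and have reference answers that a simple comparison can check.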

Beyond the three directions clearly pointed out in the GPT-4 technical report, there has been a lot of LLM news recently, so I will note two other technical directions as well.

First, Alpaca, which Stanford University built on Meta's open-source 7B LLaMA using Self-Instruct, also represents a technical direction. In summary, it can be called the "low-cost reproduction of ChatGPT" direction. Self-Instruct means that, rather than labeling instruction data by hand, you obtain it from the OpenAI API, better known as "distilling" it. No human annotation is needed; ChatGPT acts as the teacher and labels the results for your instructions. This brings the cost of instruction labeling down to the order of a few hundred dollars, and the time cost is even smaller. Add to that the modest size of a 7B model, and the whole thing can be regarded as a technical route to "reproducing ChatGPT at low cost".
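The distillation loop described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the Alpaca pipeline: the teacher is any callable that maps a prompt to text, and here it is replaced by a local stub so the example runs without network access. The names `build_instruction_dataset` and `stub_teacher` and the prompt wording are hypothetical.

```python
import json
from typing import Callable, Dict, List

def build_instruction_dataset(
    seed_instructions: List[str],
    teacher: Callable[[str], str],
    rounds: int = 1,
) -> List[Dict[str, str]]:
    """Grow an instruction pool from seeds, then let the teacher answer each one."""
    pool = list(seed_instructions)
    seen = set(pool)
    for _ in range(rounds):
        # Ask the teacher for a new task modeled on each instruction currently in the pool.
        for instruction in list(pool):
            candidate = teacher(f"Write a new task similar to: {instruction}").strip()
            if candidate and candidate not in seen:  # skip empty or duplicate tasks
                seen.add(candidate)
                pool.append(candidate)
    # The teacher labels every instruction; no human annotation is involved.
    return [{"instruction": item, "output": teacher(item)} for item in pool]

def stub_teacher(prompt: str) -> str:
    """Stand-in for a call to a stronger hosted model (an assumption for this sketch)."""
    prefix = "Write a new task similar to: "
    if prompt.startswith(prefix):
        return "Variation of: " + prompt[len(prefix):]
    return "Answer to: " + prompt

seeds = [
    "Translate 'hello' into French.",
    "Summarize a news article in one sentence.",
]
dataset = build_instruction_dataset(seeds, stub_teacher, rounds=1)
print(json.dumps(dataset, indent=2, ensure_ascii=False))
```

The resulting instruction/output pairs would then serve as supervised fine-tuning data for the smaller student model. In practice, filtering low-quality or near-duplicate generations matters far more than this sketch suggests.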

I estimate that many people in China have already adopted this route. It is undoubtedly a shortcut, and shortcuts have both advantages and disadvantages, which I will not go into here. In the process of catching up with ChatGPT, I personally think it is feasible, and worth supporting, to first bring the cost down and reproduce 70 to 80% of ChatGPT. After all, those with fewer resources have their own ways of playing. And of course, the pursuit of making the model smaller without sacrificing quality is very valuable if it is done in a down-to-earth way.

In addition, embodied intelligence will undoubtedly be a key LLM research direction in the next stage. The representative work here is PaLM-E, which Google released a while ago. With GPT-4, we can say that humans have created a super brain but still keep it locked in a GPU cluster. This super brain needs a body. GPT-4 needs to connect, communicate, and interact with the physical world, get real feedback there, and learn from that feedback, for example through reinforcement learning, how to survive and move around in the real world. This will surely be the hottest LLM research direction in the near future.

Multimodal LLMs give GPT-4 its eyes and ears, while embodied intelligence gives it a body, feet, and hands. That connects GPT-4 to you and me, and given GPT-4's own powerful learning ability, such a thing can be expected to appear around us soon.

If you think about it carefully, there are many other promising directions. My personal judgment is that the next 5 to 10 years will be the golden decade in which AGI develops fastest. If we stand 30 years in the future and look back on these 10 years, some of us will surely think of these lines: "And learn, too late, they grieved it on its way, / Do not go gentle into that good night."


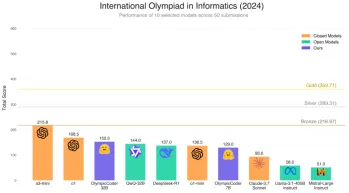

