The prevailing trend in machine learning involves transferring data to the model's environment for training. However, what if we reversed this process? Given that datasets are often far larger than the machine learning models trained on them, wouldn't it be more efficient to move the models to the data?

This is the core idea behind PostgresML: the data stays where it is, and you bring your code to the database. This inverted approach to machine learning offers numerous practical advantages that challenge conventional notions of a "database."

PostgresML: An Overview and its Advantages

PostgresML is a comprehensive machine learning platform built on the widely used PostgreSQL database. It introduces a novel approach called "in-database" machine learning, enabling you to execute various ML tasks within SQL without needing separate tools for each step.

Despite its relative novelty, PostgresML offers several key benefits:

- In-database ML: Trains, deploys, and runs ML models directly within your PostgreSQL database. This eliminates the need for constant data transfer between the database and external ML frameworks, enhancing efficiency and reducing latency.

- SQL API: Leverages SQL for training, fine-tuning, and deploying machine learning models. This simplifies workflows for data analysts and scientists less familiar with multiple ML frameworks.

- Pre-trained Models: Integrates with Hugging Face, providing access to numerous pre-trained models like Llama, Falcon, BERT, and Mistral.

- Customization and Flexibility: Supports a wide range of algorithms from scikit-learn, XGBoost, LightGBM, PyTorch, and TensorFlow, allowing for diverse supervised learning tasks directly within the database.

- Ecosystem Integration: Works with any environment supporting Postgres and offers SDKs for multiple programming languages (JavaScript, Python, and Rust are particularly well-supported).

This tutorial will demonstrate these features using a typical machine learning workflow:

- Data Loading

- Data Preprocessing

- Model Training

- Hyperparameter Tuning

- Model Evaluation

- Production Deployment

All these steps will be performed within a Postgres database. Let's begin!

A Complete Supervised Learning Workflow with PostgresML

Getting Started: PostgresML Free Tier

- Create a free account at https://www.php.cn/link/3349958a3e56580d4e415da345703886

- Select the free tier, which offers generous resources.

After signing up, you'll have access to your PostgresML console for managing projects and resources.

The "Manage" section allows you to scale your environment based on computational needs.

1. Installing and Setting Up Postgres

PostgresML requires PostgreSQL. Installation guides for various platforms are available:

- Windows

- macOS

- Linux

For WSL2, the following commands suffice:

sudo apt update
sudo apt install postgresql postgresql-contrib
sudo passwd postgres  # set a password for the "postgres" Linux user
# Then close and reopen your terminal.

Verify the installation:

psql --version

For a more user-friendly experience than the terminal, consider the VSCode extension.

2. Database Connection

Find the connection details (host, username, and database name) in your PostgresML console.

Connect using psql:

psql -h "host" -U "username" -p 6432 -d "database_name"

Alternatively, use the VSCode extension as described in its documentation.
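Application code, such as a Python backend using psycopg2, usually takes the same details as a single libpq connection URI instead of separate psql flags. The helper below is a hypothetical sketch (build_conninfo is not part of PostgresML) that assembles such a URI from the console values; it percent-encodes the username and password so characters like `@` or `:` don't break the URI. The default port 6432 mirrors the psql command above.

```python
from urllib.parse import quote


def build_conninfo(host: str, user: str, password: str, dbname: str, port: int = 6432) -> str:
    """Return a libpq-style URI: postgresql://user:password@host:port/dbname.

    build_conninfo is a hypothetical helper for illustration only.
    """
    # Percent-encode credentials so reserved characters (@, :, /) survive inside the URI.
    user_enc = quote(user, safe="")
    password_enc = quote(password, safe="")
    return f"postgresql://{user_enc}:{password_enc}@{host}:{port}/{dbname}"
```

For example, `build_conninfo("host", "username", "p@ss:word", "database_name")` yields `postgresql://username:p%40ss%3Aword@host:6432/database_name`, which can be passed directly to `psycopg2.connect(...)`.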

Enable the pgml extension:

CREATE EXTENSION IF NOT EXISTS pgml;

Verify the installation:

SELECT pgml.version();

3. Data Loading

We'll use the Diamonds dataset from Kaggle. Download it as a CSV or use this Python snippet:

import seaborn as sns

# Fetch seaborn's copy of the Diamonds dataset and save it without the pandas row index
diamonds = sns.load_dataset("diamonds")
diamonds.to_csv("diamonds.csv", index=False)

Create the table:

CREATE TABLE IF NOT EXISTS diamonds (
    index SERIAL PRIMARY KEY,
    carat FLOAT,
    cut VARCHAR(255),
    color VARCHAR(255),
    clarity VARCHAR(255),
    depth FLOAT,
    table_ FLOAT,  -- "table" is a reserved word, hence the trailing underscore
    price INT,
    x FLOAT,
    y FLOAT,
    z FLOAT
);

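Populate the table. The CSV file lives on your machine rather than on the database server, so use psql's client-side \copy meta-command (entered on a single line) instead of a server-side COPY:

\copy diamonds (carat, cut, color, clarity, depth, table_, price, x, y, z) FROM '/full/path/to/diamonds.csv' DELIMITER ',' CSV HEADER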
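If you'd rather load the file from Python, psycopg2's `cursor.copy_expert` can stream a local file through `COPY ... FROM STDIN`, which is what psql's \copy does under the hood. This is a minimal sketch assuming an open psycopg2 connection; copy_csv is a hypothetical helper, and it interpolates the table and column names directly, so only pass trusted identifiers.

```python
def copy_csv(conn, csv_path: str, table: str, columns: list[str]) -> None:
    """Stream a local CSV file into `table` via COPY ... FROM STDIN.

    `conn` is assumed to be an open psycopg2 connection; copy_csv is hypothetical.
    """
    column_list = ", ".join(columns)
    sql = f"COPY {table} ({column_list}) FROM STDIN WITH (FORMAT csv, HEADER true)"
    with open(csv_path, newline="") as f:
        with conn.cursor() as cur:
            # The file is read on the client and sent over the connection, like \copy.
            cur.copy_expert(sql, f)
    conn.commit()
```

Usage would look like `copy_csv(conn, "diamonds.csv", "diamonds", ["carat", "cut", "color", "clarity", "depth", "table_", "price", "x", "y", "z"])`, with `conn` created by `psycopg2.connect(...)`.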

Verify the data:

SELECT * FROM diamonds LIMIT 10;

4. Model Training

Basic Training

Train an XGBoost regressor:

SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'xgboost'
);

Train a multi-class classifier:

SELECT pgml.train(
    project_name => 'Diamond cut quality prediction',
    task => 'classification',
    relation_name => 'diamonds',
    y_column_name => 'cut',
    algorithm => 'xgboost',
    test_size => 0.1
);
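The `test_size => 0.1` argument sets the fraction of rows held out from training and used to evaluate the model. The sketch below only illustrates that idea with a shuffled split; it is not PostgresML's implementation, whose sampling behavior may differ, and train_test_split here is a hypothetical helper.

```python
import random


def train_test_split(rows: list, test_size: float, seed: int = 0) -> tuple[list, list]:
    """Shuffle `rows` and hold out a `test_size` fraction as the test set.

    Conceptual illustration of a test_size setting, not PostgresML's code.
    """
    if not 0 < test_size < 1:
        raise ValueError("test_size must be between 0 and 1")
    shuffled = rows[:]  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_size)
    return shuffled[n_test:], shuffled[:n_test]
```

With 100 rows and `test_size=0.1`, 90 rows go to training and 10 are kept for evaluation.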

Preprocessing

Train a random forest model with preprocessing:

SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'random_forest',
    preprocess => '{
        "carat": {"scale": "standard"},
        "depth": {"scale": "standard"},
        "table_": {"scale": "standard"},
        "cut": {"encode": "target", "scale": "standard"},
        "color": {"encode": "target", "scale": "standard"},
        "clarity": {"encode": "target", "scale": "standard"}
    }'::JSONB
);

PostgresML provides various preprocessing options (encoding, imputing, scaling).
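To make the two transformations in that example concrete: standard scaling maps each value x to (x − mean) / std, and target encoding replaces each category (such as a cut like "Ideal") with the mean target value observed for it. The functions below are a conceptual sketch in plain Python, not PostgresML's internal code; for instance, PostgresML may fit these statistics on the training split only, and this sketch uses the population standard deviation.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standard_scale(values: list[float]) -> list[float]:
    """z = (x - mean) / std, using the population standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(x - mu) / sigma for x in values]


def target_encode(categories: list[str], targets: list[float]) -> list[float]:
    """Replace each category with the mean target value observed for that category."""
    sums: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for cat, y in zip(categories, targets):
        sums[cat] += y
        counts[cat] += 1
    return [sums[cat] / counts[cat] for cat in categories]
```

For example, `target_encode(["Ideal", "Fair", "Ideal"], [400.0, 300.0, 600.0])` returns `[500.0, 300.0, 500.0]`; in the SQL above, the encoded columns are then standard-scaled as well.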

Specifying Hyperparameters

Train an XGBoost regressor with custom hyperparameters:

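SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'xgboost',
    hyperparams => '{"n_estimators": 100, "max_depth": 6}'::JSONB  -- illustrative values
);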

Hyperparameter Tuning

Perform a grid search:

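SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'xgboost',
    search => 'grid',
    search_params => '{
        "max_depth": [2, 4, 6],
        "n_estimators": [50, 100]
    }'::JSONB  -- illustrative grid: 3 x 2 = 6 combinations
);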
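A grid search trains one candidate per combination of the listed values, where search_params maps each hyperparameter name to a list of candidates. The sketch below enumerates those combinations to show how quickly a grid grows; grid_candidates is a hypothetical helper, not a PostgresML function.

```python
from itertools import product


def grid_candidates(search_params: dict[str, list]) -> list[dict]:
    """Expand {name: [values, ...]} into every combination of hyperparameters."""
    names = list(search_params)
    value_lists = [search_params[name] for name in names]
    # product() yields the Cartesian product; each tuple lines up with `names`.
    return [dict(zip(names, combo)) for combo in product(*value_lists)]
```

For `{"max_depth": [2, 4, 6], "n_estimators": [50, 100]}` this yields 6 candidates, from `{"max_depth": 2, "n_estimators": 50}` to `{"max_depth": 6, "n_estimators": 100}`; each extra hyperparameter multiplies the training cost.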

5. Model Evaluation

Use pgml.predict for predictions:

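SELECT
    price AS actual_price,
    pgml.predict(
        'Diamond prices prediction',
        (index, carat, cut, color, clarity, depth, table_, x, y, z)  -- feature columns: all except price
    ) AS predicted_price
FROM diamonds
LIMIT 10;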
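To summarize prediction quality, you can compare the predicted and actual prices. This sketch assumes you have already fetched (actual, predicted) pairs into Python, for example with psycopg2; regression_metrics is a hypothetical helper computing mean absolute error (MAE), root mean squared error (RMSE), and the coefficient of determination R².

```python
from math import sqrt


def regression_metrics(actual: list[float], predicted: list[float]) -> dict[str, float]:
    """Compute MAE, RMSE and R^2 for paired actual/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    ss_res = sum(e * e for e in errors)  # residual sum of squares
    mean_actual = sum(actual) / n
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)  # total sum of squares
    return {
        "mae": sum(abs(e) for e in errors) / n,
        "rmse": sqrt(ss_res / n),
        # R^2 is undefined when every actual value is identical.
        "r2": 1 - ss_res / ss_tot if ss_tot else float("nan"),
    }
```

An R² close to 1 means the model explains most of the variance in price; MAE and RMSE are expressed in the same units as the price column.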

To use a specific model, specify its ID:

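SELECT pgml.predict(
    42,  -- placeholder: replace with a model ID from pgml.models
    (index, carat, cut, color, clarity, depth, table_, x, y, z)
) AS predicted_price
FROM diamonds
LIMIT 10;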

Retrieve model IDs:

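SELECT models.id, models.algorithm, models.metrics
FROM pgml.models
JOIN pgml.projects ON projects.id = models.project_id
WHERE projects.name = 'Diamond prices prediction';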

6. Model Deployment

PostgresML automatically deploys the best-performing model. For finer control, use pgml.deploy:

SELECT * FROM pgml.deploy(
    project_name => 'Diamond prices prediction',
    strategy => 'best_score',
    algorithm => 'xgboost'
);

Deployment strategies include best_score, most_recent, and rollback.
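The three strategies differ only in how they pick a model: best_score takes the top-scoring trained model, most_recent takes the latest one, and rollback returns to the model deployed before the current one. The function below is a simplified, hypothetical model of that selection logic, not PostgresML's actual code; it assumes each model carries a single score where higher is better.

```python
from typing import Optional


def choose_model(models: list[dict], strategy: str, history: Optional[list[int]] = None) -> int:
    """Pick a model ID according to a deployment strategy (simplified illustration).

    models:  trained models in training order, each {"id": int, "score": float}.
    history: IDs of previously deployed models, oldest first (used by rollback).
    """
    if strategy == "best_score":
        return max(models, key=lambda m: m["score"])["id"]
    if strategy == "most_recent":
        return models[-1]["id"]
    if strategy == "rollback":
        if history is None or len(history) < 2:
            raise ValueError("rollback needs at least two past deployments")
        return history[-2]  # the deployment before the current one
    raise ValueError(f"unknown strategy: {strategy!r}")
```

With scores of 0.80, 0.90, and 0.85 for models 1, 2, and 3, best_score picks model 2 while most_recent picks model 3.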

Further Exploration of PostgresML

PostgresML extends beyond supervised learning. The homepage features a SQL editor for experimentation. Building a consumer-facing ML service might involve:

- Creating a user interface (e.g., using Streamlit or Taipy).

- Developing a backend (Python, Node.js).

- Using libraries like psycopg2 or pg-promise for database interaction.

- Preprocessing data in the backend.

- Triggering pgml.predict upon user interaction.
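A Python backend could trigger a prediction with a single parameterized query. This minimal sketch assumes an open psycopg2 connection and uses only the `pgml.predict(project_name, REAL[])` form, so it assumes a project trained on numeric features passed in training column order; predict_one is a hypothetical helper name.

```python
from collections.abc import Sequence


def predict_one(conn, project_name: str, features: Sequence[float]) -> float:
    """Run pgml.predict for one feature vector and return the prediction.

    `conn` is assumed to be an open psycopg2 connection; predict_one is hypothetical.
    """
    with conn.cursor() as cur:
        # psycopg2 adapts the Python list to a SQL ARRAY; the cast makes it REAL[].
        cur.execute(
            "SELECT pgml.predict(%s, %s::REAL[])",
            (project_name, [float(x) for x in features]),
        )
        (prediction,) = cur.fetchone()
    return float(prediction)
```

Passing values as query parameters, rather than formatting them into the SQL string, keeps user input from being interpreted as SQL.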

Conclusion

PostgresML offers a novel approach to machine learning. To further your understanding, explore the PostgresML documentation and consider resources like DataCamp's SQL courses and AI fundamentals tutorials.

The above is the detailed content of PostgresML Tutorial: Doing Machine Learning With SQL. For more information, please follow other related articles on the PHP Chinese website!

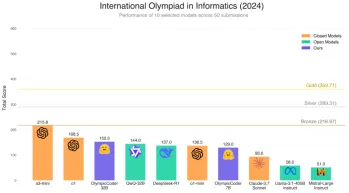

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AM

Does Hugging Face's 7B Model OlympicCoder Beat Claude 3.7?Apr 23, 2025 am 11:49 AMHugging Face's OlympicCoder-7B: A Powerful Open-Source Code Reasoning Model The race to develop superior code-focused language models is intensifying, and Hugging Face has joined the competition with a formidable contender: OlympicCoder-7B, a product

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AM

4 New Gemini Features You Can't Afford to MissApr 23, 2025 am 11:48 AMHow many of you have wished AI could do more than just answer questions? I know I have, and as of late, I’m amazed by how it’s transforming. AI chatbots aren’t just about chatting anymore, they’re about creating, researchin

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AM

Camunda Writes New Score For Agentic AI OrchestrationApr 23, 2025 am 11:46 AMAs smart AI begins to be integrated into all levels of enterprise software platforms and applications (we must emphasize that there are both powerful core tools and some less reliable simulation tools), we need a new set of infrastructure capabilities to manage these agents. Camunda, a process orchestration company based in Berlin, Germany, believes it can help smart AI play its due role and align with accurate business goals and rules in the new digital workplace. The company currently offers intelligent orchestration capabilities designed to help organizations model, deploy and manage AI agents. From a practical software engineering perspective, what does this mean? The integration of certainty and non-deterministic processes The company said the key is to allow users (usually data scientists, software)

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AM

Is There Value In A Curated Enterprise AI Experience?Apr 23, 2025 am 11:45 AMAttending Google Cloud Next '25, I was keen to see how Google would distinguish its AI offerings. Recent announcements regarding Agentspace (discussed here) and the Customer Experience Suite (discussed here) were promising, emphasizing business valu

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AM

How to Find the Best Multilingual Embedding Model for Your RAG?Apr 23, 2025 am 11:44 AMSelecting the Optimal Multilingual Embedding Model for Your Retrieval Augmented Generation (RAG) System In today's interconnected world, building effective multilingual AI systems is paramount. Robust multilingual embedding models are crucial for Re

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AM

Musk: Robotaxis In Austin Need Intervention Every 10,000 MilesApr 23, 2025 am 11:42 AMTesla's Austin Robotaxi Launch: A Closer Look at Musk's Claims Elon Musk recently announced Tesla's upcoming robotaxi launch in Austin, Texas, initially deploying a small fleet of 10-20 vehicles for safety reasons, with plans for rapid expansion. H

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AM

AI's Shocking Pivot: From Work Tool To Digital Therapist And Life CoachApr 23, 2025 am 11:41 AMThe way artificial intelligence is applied may be unexpected. Initially, many of us might think it was mainly used for creative and technical tasks, such as writing code and creating content. However, a recent survey reported by Harvard Business Review shows that this is not the case. Most users seek artificial intelligence not just for work, but for support, organization, and even friendship! The report said that the first of AI application cases is treatment and companionship. This shows that its 24/7 availability and the ability to provide anonymous, honest advice and feedback are of great value. On the other hand, marketing tasks (such as writing a blog, creating social media posts, or advertising copy) rank much lower on the popular use list. Why is this? Let's see the results of the research and how it continues to be

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AM

Companies Race Toward AI Agent AdoptionApr 23, 2025 am 11:40 AMThe rise of AI agents is transforming the business landscape. Compared to the cloud revolution, the impact of AI agents is predicted to be exponentially greater, promising to revolutionize knowledge work. The ability to simulate human decision-maki

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

Notepad++7.3.1

Easy-to-use and free code editor

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment