The largest reconstruction in history, 25 km²! NeRF-XL: truly effective multi-GPU joint training

Original title: NeRF-XL: Scaling NeRFs with Multiple GPUs

Paper link: https://research.nvidia.com/labs/toronto-ai/nerfxl/assets/nerfxl.pdf

Project link: https://research.nvidia.com/labs/toronto-ai/nerfxl/

Author affiliations: NVIDIA; University of California, Berkeley

Paper idea:

This paper proposes NeRF-XL, a principled method for distributing Neural Radiance Fields (NeRFs) across multiple GPUs, thereby enabling the training and rendering of NeRFs with arbitrarily large capacity. The paper first revisits existing multi-GPU methods that decompose large scenes into multiple independently trained NeRFs [9, 15, 17] and identifies several fundamental issues that prevent reconstruction quality from improving when additional computing resources (GPUs) are used for training. NeRF-XL resolves these issues and allows NeRFs with an arbitrary number of parameters to be trained and rendered simply by using more hardware. The core of the approach is a novel distributed training and rendering formulation that is mathematically equivalent to the classic single-GPU case and minimizes communication between GPUs. By unlocking NeRFs with an arbitrarily large number of parameters, the method is the first to reveal multi-GPU scaling laws for NeRFs: reconstruction quality improves as the number of parameters increases, and speed improves as more GPUs are used. The paper demonstrates the effectiveness of NeRF-XL on a variety of datasets, including MatrixCity [5], which contains approximately 258K images and covers an urban area of 25 square kilometers.

Paper design:

Recent advances in novel view synthesis have greatly improved our ability to capture Neural Radiance Fields (NeRFs), making the process more accessible. These advances allow us to reconstruct larger scenes and finer details within them. Whether by increasing the spatial scale (e.g., capturing kilometers of a cityscape) or the level of detail (e.g., scanning blades of grass in a field), broadening the scope of a captured scene means packing more information into the NeRF to achieve an accurate reconstruction. For information-rich scenes, the number of trainable parameters required for reconstruction may therefore exceed the memory capacity of a single GPU.

This paper proposes NeRF-XL, a principled algorithm for efficiently distributing Neural Radiance Fields (NeRFs) across multiple GPUs. The method makes it possible to capture scenes with high information content (both large-scale scenes and highly detailed ones) simply by adding hardware resources. The core of NeRF-XL is to allocate the NeRF parameters to a set of disjoint spatial regions and train them jointly across GPUs. Unlike traditional distributed training pipelines, which synchronize gradients in the backward pass, this method only needs to synchronize information in the forward pass. Furthermore, by carefully rewriting the volume rendering equation and the associated loss terms for the distributed setting, the method significantly reduces the data transfer required between GPUs. This novel rewriting improves both training and rendering efficiency. The flexibility and scalability of the method make it possible to optimize large NeRFs efficiently across multiple GPUs.
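
To make the idea of disjoint spatial regions concrete, here is a minimal sketch that maps a 3D point to the single GPU responsible for it. It assumes a uniform axis-aligned grid over a bounding box, which is only an illustrative choice. The function name `owner_gpu` and its parameters are hypothetical and do not come from the paper, which may partition space differently.

```python
def owner_gpu(point, lo, hi, grid):
    """Return the index of the grid cell (one cell per GPU) that contains `point`.

    point, lo, hi: 3-tuples of floats (query point and bounding-box corners).
    grid: 3-tuple with the number of cells along x, y and z (hypothetical layout).
    """
    idx = 0
    for p, a, b, n in zip(point, lo, hi, grid):
        # Normalize the coordinate to the box, then pick a cell along this axis.
        t = (p - a) / (b - a)
        # Clamp so points on or outside the boundary still get exactly one owner.
        cell = min(max(int(t * n), 0), n - 1)
        # Flatten the per-axis cell indices into a single GPU index.
        idx = idx * n + cell
    return idx


# Usage: a 2 x 2 x 1 grid over a 10 m cube gives four non-overlapping regions.
lo, hi, grid = (0.0, 0.0, 0.0), (10.0, 10.0, 10.0), (2, 2, 1)
print(owner_gpu((9.0, 1.0, 5.0), lo, hi, grid))
```

Because every point has exactly one owner, no region is modeled twice, which is the property the joint training scheme relies on.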

Our work contrasts with recent approaches that use multi-GPU algorithms to model large-scale scenes by training a set of independent NeRFs [9, 15, 17]. Although these methods require no communication between GPUs, each NeRF needs to model the entire space, including background areas. As a result, redundancy in model capacity grows with the number of GPUs. Furthermore, these methods require blending the NeRFs at render time, which degrades visual quality and introduces artifacts in overlapping regions. Therefore, unlike NeRF-XL, these methods fail to improve visual quality even when more model parameters (equivalently, more GPUs) are used in training.

This paper demonstrates the effectiveness of the approach on a diverse set of captures, including street scans, drone flyovers, and object-centric videos. The captures range from small scenes (10 square meters) to entire cities (25 square kilometers). The experiments show that as more computing resources are allocated to the optimization process, NeRF-XL achieves better visual quality (measured by PSNR) and faster rendering. NeRF-XL therefore makes it possible to train NeRFs of arbitrary capacity on scenes of any spatial scale and level of detail.
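
Since visual quality is reported as PSNR, a minimal sketch of that metric may help. It uses the standard definition, 10 · log10(MAX² / MSE), on flat lists of pixel values in [0, 1]. The function name `psnr` and the `max_val` parameter are illustrative assumptions, not taken from the paper's code.

```python
import math


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two equally long pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


# Usage: an error of 0.1 on every pixel corresponds to roughly 20 dB.
print(psnr([0.5, 0.5], [0.4, 0.6]))
```

Higher values mean the rendering is closer to the ground-truth image.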

Figure 1: The paper's principled multi-GPU distributed training algorithm can scale NeRFs to arbitrarily large sizes.

Figure 2: Independent training and multi-GPU joint training. Training multiple NeRFs [9, 15, 18] independently requires each NeRF to model both the focal region and its surrounding environment, which leads to redundancy in model capacity. In contrast, our joint training method uses non-overlapping NeRFs and therefore does not have any redundancy.

Figure 3: Independent training requires blending during novel view synthesis. Whether blending is performed in 2D [9, 15] or 3D [18], it introduces blur into the rendering.

Figure 4: Independent training leads to different camera optimizations. In NeRF, camera optimization can be achieved either by transforming the inaccurate camera itself or by transforming all other cameras together with the underlying 3D scene. Therefore, training multiple NeRFs independently along with camera optimization may lead to inconsistent camera corrections and scene geometry, which makes blended rendering even more difficult.

Figure 5: Visual artifacts that may be caused by 3D blending. The image on the left shows the results of MegaNeRF trained using 2 GPUs. At 0% overlap, artifacts appear at the boundaries due to independent training, while at 15% overlap, severe artifacts appear due to 3D blending. The image on the right illustrates the cause of this artifact: while each independently trained NeRF renders the correct color, the blended NeRF does not guarantee correct color rendering.

Figure 6: The training pipeline of the paper. The method jointly trains multiple NeRFs across all GPUs, with each NeRF covering a disjoint spatial region. Communication between GPUs occurs only in the forward pass and not in the backward pass (as indicated by the gray arrows). (a) A straightforward implementation evaluates each NeRF to obtain sample colors and densities, then broadcasts these values to all other GPUs for global volume rendering (see Section 4.2). (b) By rewriting the volume rendering equation, the amount of data transferred can be reduced significantly, to one value per ray, which improves efficiency (see Section 4.3).
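
The rewriting in Figure 6(b) rests on the fact that standard volume rendering can be split into per-region segments and merged afterwards without changing the result. The sketch below illustrates that equivalence with scalar (grayscale) colors in pure Python. It is an illustration under stated assumptions, not the paper's implementation: the names `render` and `composite` are hypothetical, and the exact per-ray quantities that NeRF-XL exchanges may differ from the (color, transmittance) pair used here.

```python
import math


def render(samples):
    """Volume-render samples [(sigma, delta, color), ...] ordered front to back.

    Returns (accumulated color, transmittance remaining after the last sample).
    """
    color, trans = 0.0, 1.0
    for sigma, delta, c in samples:
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        color += trans * alpha * c              # weight by light that got this far
        trans *= 1.0 - alpha                    # attenuate for later samples
    return color, trans


def composite(segments):
    """Merge per-region (color, transmittance) pairs ordered front to back."""
    color, trans = 0.0, 1.0
    for seg_color, seg_trans in segments:
        color += trans * seg_color  # scale by transmittance of regions in front
        trans *= seg_trans
    return color, trans


# Usage: one ray whose six samples fall into three disjoint regions (three GPUs).
ray = [(0.5, 0.1, 0.2), (1.0, 0.1, 0.9), (2.0, 0.2, 0.4),
       (0.1, 0.3, 0.7), (3.0, 0.1, 0.1), (0.8, 0.2, 0.6)]
single_gpu = render(ray)
multi_gpu = composite([render(ray[:2]), render(ray[2:5]), render(ray[5:])])
print(single_gpu, multi_gpu)  # the two results agree up to floating-point rounding
```

Each region only has to send a small per-ray summary instead of every sample, which is where the reduction in communication comes from.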

Experimental results:

Figure 7: Qualitative comparison. Compared with previous work, our method effectively leverages multi-GPU configurations and improves performance on all types of data.

Figure 8: Quantitative comparison. Previous work based on independent training failed to achieve performance improvements with the addition of additional GPUs, while our method enjoys improvements in rendering quality and speed as training resources increase.

Figure 9: Scalability of the method. More GPUs allow for more learnable parameters, which results in greater model capacity and better quality.

Figure 10: More rendering results on large-scale captures. The paper tests the robustness of the method on larger captures using more GPUs. See the project page for video tours of these data.

Figure 11: Comparison with PyTorch DDP on the University4 dataset. PyTorch Distributed Data Parallel (DDP) is designed to speed up rendering by distributing rays across GPUs. In contrast, this method distributes parameters across GPUs, which breaks through the memory limit of a single GPU in the cluster and allows model capacity to grow for better quality.
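
The difference in Figure 11 can be stated as simple arithmetic. DDP keeps a full replica of the model on every GPU, so the largest model is bounded by one GPU's memory, while a parameter-partitioned scheme lets capacity grow with the number of GPUs. The sketch below is a deliberately simplified model: the function names and the example budget are hypothetical, and it ignores optimizer state, activations, and other overheads.

```python
def max_params_ddp(params_per_gpu, num_gpus):
    """Data parallelism: every GPU holds the full model, so capacity stays flat."""
    return params_per_gpu


def max_params_partitioned(params_per_gpu, num_gpus):
    """Parameter partitioning: each GPU holds a disjoint slice of the model."""
    return params_per_gpu * num_gpus


# Usage with a hypothetical budget of 100 million parameters per GPU.
budget = 100_000_000
for n in (1, 4, 8):
    print(n, max_params_ddp(budget, n), max_params_partitioned(budget, n))
```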

Figure 12: Synchronization cost on University4. Our partition-based volume rendering (see Section 4.3) allows tile-based communication, which is much less expensive than the original sample-based communication (see Section 4.2) and therefore enables faster rendering.

Summary:

In summary, this paper revisits existing methods that decompose large-scale scenes into independently trained NeRFs (Neural Radiance Fields) and identifies significant problems that hinder the efficient use of additional computing resources (GPUs), which contradicts the core goal of leveraging multi-GPU setups to improve large-scale NeRF performance. The paper therefore introduces NeRF-XL, a principled algorithm that leverages multi-GPU setups efficiently and enhances NeRF performance at any scale by jointly training multiple non-overlapping NeRFs. Importantly, the method does not rely on any heuristics, follows NeRF's scaling laws in a multi-GPU setting, and is applicable to various types of data.

Citation:

@misc{li2024nerfxl,
  title={NeRF-XL: Scaling NeRFs with Multiple GPUs},
  author={Ruilong Li and Sanja Fidler and Angjoo Kanazawa and Francis Williams},
  year={2024},
  eprint={2404.16221},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

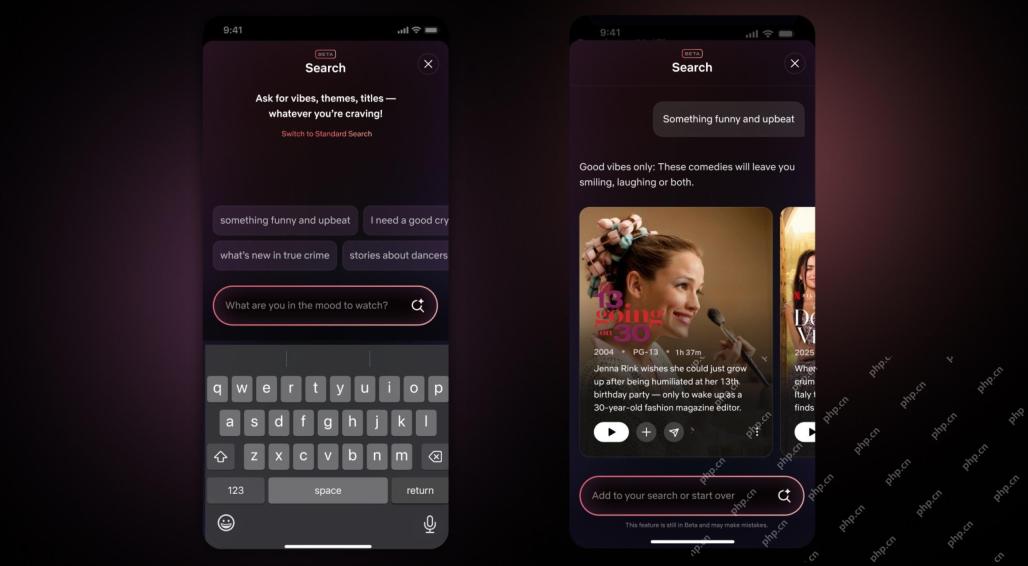

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Zend Studio 13.0.1

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft