In addition to CNN, Transformer, and UniFormer, we finally have more efficient video understanding technology

The core goal of video understanding is to learn accurate spatiotemporal representations, but this faces two main challenges: short video clips contain a large amount of spatiotemporal redundancy, and the spatiotemporal dependencies are complex. Three-dimensional convolutional neural networks (CNNs) and video transformers each handle one of these challenges well, but neither addresses both at once. UniFormer attempts to combine the advantages of both approaches, but it struggles to model long videos.

The emergence of low-cost operators such as S4, RWKV, and RetNet in natural language processing has opened up new avenues for vision models. Mamba stands out with its selective state space model (SSM), which maintains linear complexity while still supporting long-term dynamic modeling. This has driven its adoption in vision tasks, as demonstrated by Vision Mamba and VMamba, which use multi-directional SSMs to enhance 2D image processing. These models match the performance of attention-based architectures while significantly reducing memory usage.
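To make the linear-complexity claim concrete, here is a minimal sketch of a discrete state-space recurrence. It is a toy with a scalar state and fixed parameters `a`, `b`, and `c` (all hypothetical values chosen for illustration), not Mamba's selective scan, in which the parameters depend on the input. It only shows that one pass over the sequence, with constant work per token, is enough to carry information forward.

```python
def ssm_scan(xs, a=0.9, b=1.0, c=1.0):
    """Toy scalar state-space recurrence (NOT Mamba's selective scan).

    h_k = a * h_{k-1} + b * x_k
    y_k = c * h_k

    One pass over the input, so the cost grows linearly with len(xs).
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x  # update the hidden state with the new token
        ys.append(c * h)   # read out the current state
    return ys


if __name__ == "__main__":
    # An impulse at the first position decays geometrically through the state.
    print(ssm_scan([1.0, 0.0, 0.0], a=0.5))
```

Because the state is updated once per token, doubling the sequence length doubles the work, whereas full self-attention compares every token with every other token.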

Given that the sequences produced by videos are inherently longer, a natural question is: does Mamba work well for video understanding?

Inspired by Mamba, this article introduces VideoMamba, an SSM-based model specifically tailored for video understanding. VideoMamba follows the design philosophy of vanilla ViT and combines the strengths of convolution and attention. It provides a linear-complexity method for dynamic spatiotemporal context modeling, which makes it especially suitable for high-resolution long videos. The evaluation focuses on four key capabilities of VideoMamba:

Scalability in the visual domain: This article tests the scalability of VideoMamba and finds that the pure Mamba model is prone to overfitting as it scales up. The paper introduces a simple and effective self-distillation strategy, so that as the model and input size increase, VideoMamba achieves significant performance gains without large-scale dataset pre-training.

Sensitivity to short-term action recognition: The analysis is extended to evaluate VideoMamba's ability to accurately distinguish short-term actions, especially those with subtle motion differences, such as opening and closing. The results show that VideoMamba outperforms existing attention-based models. More importantly, it is also suitable for masked modeling, which further enhances its temporal sensitivity.

Superiority in long video understanding: This article evaluates VideoMamba’s ability to interpret long videos. With end-to-end training, it demonstrates significant advantages over traditional feature-based methods. Notably, VideoMamba runs 6x faster than TimeSformer on 64-frame video and requires 40x less GPU memory (shown in Figure 1).

Compatibility with other modalities: Finally, this article evaluates the adaptability of VideoMamba to other modalities. Results in video-text retrieval show improved performance compared to ViT, especially on long videos with complex scenarios. This highlights its robustness and multimodal integration capabilities.

In-depth experiments in this study reveal VideoMamba’s great potential for short-term (K400 and SthSthV2) and long-term (Breakfast, COIN and LVU) video content understanding. VideoMamba demonstrates high efficiency and accuracy, indicating that it will become a key component in the field of long video understanding. To facilitate future research, all code and models have been made open source.

- Paper address: https://arxiv.org/pdf/2403.06977.pdf

- Project address: https://github.com/OpenGVLab/VideoMamba

- Paper title: VideoMamba: State Space Model for Efficient Video Understanding

Method introduction

Figure 2a below shows the details of the Mamba module.

Figure 3 illustrates the overall framework of VideoMamba. The paper first uses a 3D convolution (with a 1×16×16 kernel) to project the input video $X^v \in \mathbb{R}^{3 \times T \times H \times W}$ into $L$ non-overlapping spatiotemporal patches $X^p \in \mathbb{R}^{L \times C}$, where $L = t \times h \times w$ ($t = T$, $h = H/16$, and $w = W/16$). The resulting token sequence is then fed into the VideoMamba encoder.
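The patch arithmetic above can be checked with a few lines of Python. This is only a sketch of the token-count formula L = t×h×w for a 1×16×16 non-overlapping projection; the function name `num_tokens` and the example input sizes are assumptions chosen for illustration, not values taken from the paper.

```python
def num_tokens(T: int, H: int, W: int, patch: int = 16) -> tuple[int, int, int, int]:
    """Return (t, h, w, L) for a 1 x patch x patch non-overlapping projection.

    t = T, h = H / patch, w = W / patch, and L = t * h * w.
    """
    if H % patch or W % patch:
        raise ValueError("H and W must be divisible by the patch size")
    t, h, w = T, H // patch, W // patch
    return t, h, w, t * h * w


if __name__ == "__main__":
    # Hypothetical input sizes, chosen only for illustration.
    for T, H, W in [(8, 224, 224), (64, 224, 224)]:
        t, h, w, L = num_tokens(T, H, W)
        print(f"T={T}, H={H}, W={W} -> t={t}, h={h}, w={w}, L={L}")
```

The sequence length grows in proportion to the number of frames, which is why an operator with linear cost in L matters for long videos.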

Spatiotemporal scanning: To apply the B-Mamba layer to spatiotemporal input, the paper extends the original 2D scan into several bidirectional 3D scans, shown in Figure 4:

(a) Spatial-first: organize spatial tokens by position, then stack them frame by frame;

(b) Temporal-first: arrange temporal tokens by frame, then stack them along the spatial dimension;

(c) Spatiotemporal: a hybrid of spatial-first and temporal-first, where v1 performs half of each and v2 performs both in full (2× the computation).
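The first two scan orders can be sketched as index permutations over the flattened token sequence. The sketch below assumes tokens are stored frame by frame in row-major order (token index = frame × h×w + position); that layout, and the function names, are assumptions made for illustration rather than details confirmed by the article.

```python
def spatial_first(t: int, h: int, w: int) -> list[int]:
    """Visit all spatial positions of a frame, then move to the next frame."""
    hw = h * w
    return [f * hw + p for f in range(t) for p in range(hw)]


def temporal_first(t: int, h: int, w: int) -> list[int]:
    """Visit one spatial position across all frames, then the next position."""
    hw = h * w
    return [f * hw + p for p in range(hw) for f in range(t)]


def bidirectional(order: list[int]) -> tuple[list[int], list[int]]:
    """Return the forward and backward versions of a scan order."""
    return order, order[::-1]


if __name__ == "__main__":
    # Tiny hypothetical clip: 2 frames, each a 1 x 3 grid of tokens.
    print(spatial_first(2, 1, 3))
    print(temporal_first(2, 1, 3))
    forward, backward = bidirectional(spatial_first(2, 1, 3))
    print(forward, backward)
```

Both orders visit every token exactly once, so they differ only in which tokens end up adjacent in the sequence that the SSM processes.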

The experiments in Figure 7a show that the spatial-first bidirectional scan is the most effective and also the simplest. Thanks to Mamba's linear complexity, VideoMamba can efficiently process high-resolution long videos.

For the SSM in the B-Mamba layer, the paper uses the same default hyperparameters as Mamba, setting the state dimension to 16 and the expansion ratio to 2. Following ViT, it adjusts the depth and embedding dimension to create models of comparable sizes (Table 1): VideoMamba-Ti, VideoMamba-S, and VideoMamba-M. However, experiments show that larger VideoMamba models are prone to overfitting, resulting in suboptimal performance, as shown in Figure 6a. This overfitting problem is not unique to the proposed model; it also appears in VMamba, where VMamba-B reaches its best performance at three-quarters of the total training epochs. To counter it, the paper introduces an effective self-distillation strategy that uses a smaller, well-trained model as the "teacher" to guide the training of a larger "student" model. The results in Figure 6a show that this strategy leads to the expected better convergence.
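The teacher-student idea can be sketched as an extra term added to the training loss. The article does not state the exact form of the distillation objective, so the mean-squared-error feature alignment, the `weight` parameter, and the assumption that student and teacher features have already been brought to the same length are all hypothetical choices made for illustration.

```python
def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(task_loss: float,
                      student_feat: list[float],
                      teacher_feat: list[float],
                      weight: float = 1.0) -> float:
    """Task loss plus a weighted feature-alignment term (assumed form).

    The teacher is a smaller, already-trained model; its features are
    treated as fixed targets for the larger student.
    """
    return task_loss + weight * mse(student_feat, teacher_feat)


if __name__ == "__main__":
    # Hypothetical numbers, only to show how the terms combine.
    print(distillation_loss(0.5, [1.0, 2.0], [1.0, 4.0], weight=0.5))
```

The alignment term pulls the larger model toward a solution the smaller model has already found, which is one plausible way such guidance could counter overfitting.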

Regarding the masking strategy, the paper proposes several row masking techniques, shown in Figure 5, designed specifically for the B-Mamba block's preference for continuous tokens.
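As a rough illustration of why row masking keeps tokens continuous, the sketch below masks whole rows of an h×w token grid, so that masked tokens form contiguous runs in a row-major scan. The specific variants in Figure 5 are not described in the article; the function `row_mask`, its `mask_ratio` parameter, and the random row selection are hypothetical.

```python
import random


def row_mask(h: int, w: int, mask_ratio: float, seed: int = 0) -> list[list[bool]]:
    """Return an h x w grid where True marks a masked token.

    Whole rows are masked, so masked tokens form contiguous runs of
    length w when the grid is read in row-major order.
    """
    rng = random.Random(seed)
    n_rows = round(h * mask_ratio)                    # number of rows to hide
    masked_rows = set(rng.sample(range(h), n_rows))   # choose rows without repeats
    return [[y in masked_rows for _ in range(w)] for y in range(h)]


if __name__ == "__main__":
    # Hypothetical 4 x 6 grid with half of the rows masked.
    for row in row_mask(4, 6, 0.5):
        print("".join("#" if m else "." for m in row))
```

By contrast, masking tokens independently at random would scatter the visible tokens and break the runs of adjacent tokens.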

Experiment

Table 2 shows the results on ImageNet-1K. Notably, VideoMamba-M significantly outperforms other isotropic architectures, improving by 0.8% over ConvNeXt-B and 2.0% over DeiT-B while using fewer parameters. VideoMamba-M also performs well against non-isotropic backbones that use hierarchical features for enhanced performance. Given Mamba's efficiency on long sequences, the paper further improves performance by increasing the input resolution, achieving 84.0% top-1 accuracy with only 74M parameters.

Tables 3 and 4 list the results on short-term video datasets. (a) Supervised learning: compared with pure attention methods, the SSM-based VideoMamba-M shows a clear advantage, outperforming ViViT-L by 2.0% on the scene-related K400 dataset and by 3.0% on the temporal-related SthSthV2 dataset. This improvement comes with significantly lower computational requirements and less pre-training data. VideoMamba-M's results are on par with the SOTA UniFormer, which integrates convolution and attention in a non-isotropic architecture. (b) Self-supervised learning: with masked pre-training, VideoMamba outperforms VideoMAE, which is known for its fine-grained action modeling. These results highlight the potential of the pure SSM-based model to understand short-term videos efficiently and effectively, in both supervised and self-supervised learning paradigms.

As shown in Figure 1, VideoMamba's linear complexity makes it well suited to end-to-end training on long videos. The comparisons in Tables 6 and 7 highlight the simplicity and effectiveness of VideoMamba over traditional feature-based methods on these tasks. It brings significant performance improvements, achieving SOTA results even at smaller model sizes. VideoMamba-Ti shows a 6.1% improvement over ViS4mer using Swin-B features, and a 3.0% improvement over Turbo's multi-modal alignment method. Notably, the results highlight the positive impact of scaling the model and the number of frames for long-term tasks. On the nine diverse and challenging tasks proposed by LVU, the paper fine-tunes VideoMamba-Ti end to end and achieves results comparable or superior to current SOTA methods. These results not only highlight the effectiveness of VideoMamba, but also demonstrate its potential for future long video understanding.

As shown in Table 8, with the same pre-training corpus and a similar training strategy, VideoMamba outperforms the ViT-based UMT in zero-shot video retrieval. This highlights Mamba's comparable efficiency and scalability relative to ViT on multi-modal video tasks. Notably, VideoMamba shows significant improvements on datasets with longer videos (e.g., ANet and DiDeMo) and more complex scenarios (e.g., LSMDC). This demonstrates Mamba's capabilities in challenging multimodal settings, even where cross-modal alignment is required.

For more research details, please refer to the original paper.

The above is the detailed content of In addition to CNN, Transformer, and Uniformer, we finally have more efficient video understanding technology. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

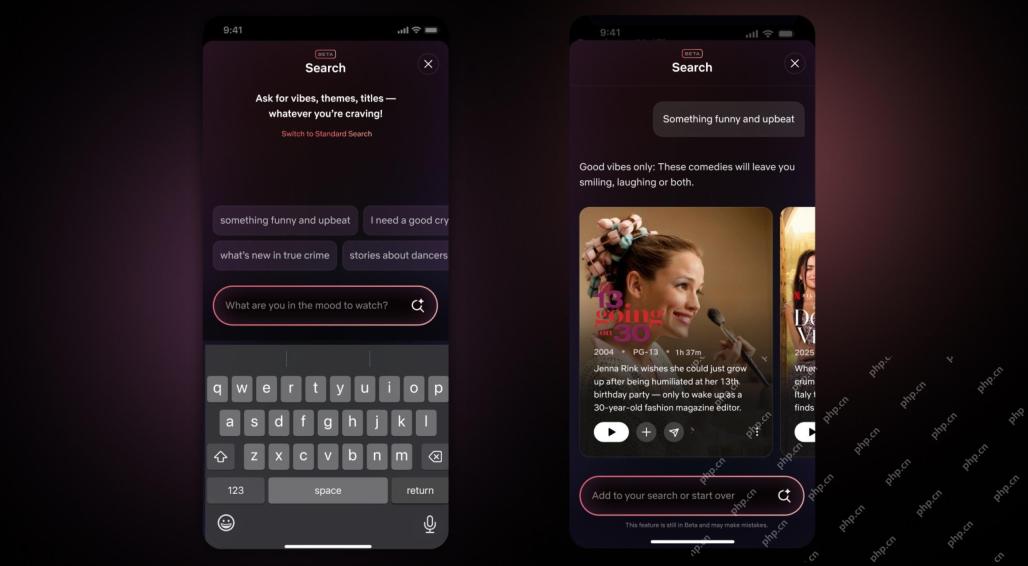

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 English version

Recommended: Win version, supports code prompts!

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool