Technology peripherals

Technology peripherals AI

AI A review of the development of the CLIP model, the cornerstone of Vincentian diagrams

A review of the development of the CLIP model, the cornerstone of Vincentian diagramsA review of the development of the CLIP model, the cornerstone of Vincentian diagrams

CLIP stands for Contrastive Language-Image Pre-training, which is a pre-training method or model based on contrastive text-image pairs. It is a multimodal model that relies on contrastive learning. The training data for CLIP consists of text-image pairs, where an image is paired with its corresponding text description. Through contrastive learning, the model aims to understand the relationship between text and image pairs.

Open AI released DALL-E and CLIP in January 2021. Both models are multi-modal models that can combine images and text. DALL-E is a model that generates images based on text, while CLIP uses text as a supervision signal to train a transferable visual model.

In the Stable Diffusion model, the text features extracted by the CLIP text encoder are embedded into the UNet of the diffusion model through cross attention. Specifically, text features are used as the key and value of attention, while UNet features are used as query. In other words, CLIP is actually a key bridge between text and pictures, organically combining text information and image information. This combination enables the model to better understand and process information between different modalities, thereby achieving better results when handling complex tasks. In this way, the Stable Diffusion model can more effectively utilize the text encoding capabilities of CLIP, thereby improving overall performance and expanding application areas.

CLIP

This is the earliest paper published by OpenAI in 2021. If you want to understand CLIP, We need to deconstruct the acronym into its three components: (1) Contrastive, (2) Language-Image, (3) Pre-training.

Let’s start with Language-Image.

In traditional machine learning models, usually only a single modality of input data can be accepted, such as text, images, tabular data or audio. If you need to use different modalities of data to make predictions, you must train multiple different models. In CLIP, "Language-Image" means that the model can accept both text (language) and image input data. This design enables CLIP to process information of different modalities more flexibly, thus improving its predictive capabilities and application scope.

CLIP handles text and image input by using two different encoders, a text encoder and an image encoder. These two encoders map the input data into a lower-dimensional latent space, generating corresponding embedding vectors for each input. An important detail is that the text and image encoders embed the data into the same space, i.e. the original CLIP space is a 512-dimensional vector space. This design enables direct comparison and matching between text and images without additional conversion or processing. In this way, CLIP can represent text descriptions and image content in the same vector space, thereby enabling cross-modal semantic alignment and retrieval functions. The design of this shared embedding space gives CLIP better generalization capabilities and adaptability, allowing it to perform well on a variety of tasks and datasets.

Contrastive

While embedding text and image data into the same vector space may be a useful starting point, simply doing this does not There is no guarantee that the model can effectively compare representations of text and images. For example, it is important to establish a reasonable and interpretable relationship between the embedding of “dog” or “a picture of a dog” in a text and the embedding of a dog image. However, we need a way to bridge the gap between these two models.

In multimodal machine learning, there are various techniques to align two modalities, but currently the most popular method is contrast. Contrastive techniques take pairs of inputs from two modalities: say an image and its caption and train the model's two encoders to represent these input data pairs as closely as possible. At the same time, the model is incentivized to take unpaired inputs (such as images of dogs and the text "pictures of cars") and represent them as far away as possible. CLIP is not the first contrastive learning technique for images and text, but its simplicity and effectiveness have made it a mainstay in multimodal applications.

Pre-training

Although CLIP itself is useful for tasks such as zero-shot classification, semantic search, and unsupervised data exploration, etc. Applications are useful, but CLIP is also used as a building block for a host of multimodal applications, from Stable Diffusion and DALL-E to StyleCLIP and OWL-ViT. For most of these downstream applications, the initial CLIP model is considered the starting point for "pre-training" and the entire model is fine-tuned for its new use case.

While OpenAI never explicitly specified or shared the data used to train the original CLIP model, the CLIP paper mentioned that the model was performed on 400 million image-text pairs collected from the Internet. trained.

https://www.php.cn/link/7c1bbdaebec5e20e91db1fe61221228f

ALIGN: Scaling Up Visual and Vision -Language Representation Learning With Noisy Text Supervision

Using CLIP, OpenAI uses 400 million image-text pairs, because no details are provided, so we don’t Probably knows exactly how to build the dataset. But in describing the new dataset, they looked to Google's Conceptual Captions as inspiration - a relatively small dataset (3.3 million image-caption pairs) that uses expensive filtering and post-processing techniques, although these The technology is powerful, but not particularly scalable).

So high-quality data sets have become the direction of research. Shortly after CLIP, ALIGN solved this problem through scale filtering. ALIGN does not rely on small, carefully annotated, and curated image captioning datasets, but instead leverages 1.8 billion pairs of images and alt text.

While these alt text descriptions are much noisier on average than the titles, the sheer size of the dataset more than makes up for this. The authors used basic filtering to remove duplicates, images with over 1,000 relevant alt text, as well as uninformative alt text (either too common or containing rare tags). With these simple steps, ALIGN reaches or exceeds the state-of-the-art on various zero-shot and fine-tuning tasks.

https://arxiv.org/abs/2102.05918

K-LITE: Learning Transferable Visual Models with External Knowledge

Like ALIGN, K-LITE is also solving problems for Comparing the problem of limited number of pre-trained high-quality image-text pairs.

K-LITE focuses on explaining concepts, i.e. definitions or descriptions as context and unknown concepts can help develop broad understanding. A popular explanation is that when people first introduce technical terms and uncommon vocabulary, they usually simply define them! Or use an analogy to something everyone knows.

To implement this approach, researchers from Microsoft and the University of California, Berkeley, used WordNet and Wiktionary to enhance the text in image-text pairs. For some isolated concepts, such as class labels in ImageNet, the concepts themselves are enhanced, while for titles (e.g. from GCC), the least common noun phrases are enhanced. With this additional structured knowledge, pre-trained models show substantial improvements on transfer learning tasks.

https://arxiv.org/abs/2204.09222

OpenCLIP: Reproducible scaling laws for contrastive language-image learning

By the end of 2022, the transformer model has been established in the text and visual fields. Pioneering empirical work in both fields has also clearly shown that the performance of transformer models on unimodal tasks can be well described by simple scaling laws. This means that as the amount of training data, training time, or model size increases, one can predict the performance of the model fairly accurately.

OpenCLIP extends the above theory to multi-modal scenarios by using the largest open source image-text pair dataset released to date (5B), systematically studying the performance of training data pair models. Impact on performance in zero-shot and fine-tuning tasks. As in the unimodal case, this study reveals that model performance on multimodal tasks scales with a power law in terms of computation, number of samples seen, and number of model parameters.

Even more interesting than the existence of power laws is the relationship between power law scaling and pre-training data. Retaining OpenAI's CLIP model architecture and training method, the OpenCLIP model shows stronger scaling capabilities on sample image retrieval tasks. For zero-shot image classification on ImageNet, OpenAI’s model (trained on its proprietary dataset) demonstrated stronger scaling capabilities. These findings highlight the importance of data collection and filtering procedures on downstream performance.

https://arxiv.org/abs/2212.07143However, shortly after OpenCLIP was released, the LAION data set was removed from the Internet because it contained illegal images.

MetaCLIP: Demystifying CLIP Data

OpenCLIP attempts to understand how the performance of downstream tasks scales with data volume, The amount of calculation and the number of model parameters vary, while MetaCLIP focuses on how to select data. As the authors say, "We believe that the main factor in CLIP's success is its data, rather than the model architecture or pre-training goals."

In order to verify this hypothesis, the author fixed the model architecture and training steps and conducted experiments. The MetaCLIP team tested a variety of strategies related to substring matching, filtering, and balancing data distribution, and found that the best performance was achieved when each text appeared a maximum of 20,000 times in the training data set. To test this theory, they would even The word "photo", which occurred 54 million times in the initial data pool, was also limited to 20,000 image-text pairs in the training data. Using this strategy, MetaCLIP was trained on 400M image-text pairs from the Common Crawl dataset, outperforming OpenAI’s CLIP model on various benchmarks.

https://arxiv.org/abs/2309.16671

DFN: Data Filtering Networks

With the research on MetaCLIP, it can be shown that data management may be the most important factor in training high-performance multi-modal models such as CLIP. MetaCLIP's filtering strategy is very successful, but it is also mainly based on heuristic methods. The researchers then turned to whether a model could be trained to do this filtering more efficiently.

To verify this, the author uses high-quality data from the conceptual 12M to train the CLIP model to filter high-quality data from low-quality data. This Data Filtering Network (DFN) is used to build a larger high-quality dataset by selecting only high-quality data from an uncurated dataset (in this case Common Crawl). CLIP models trained on filtered data outperformed models trained only on initial high-quality data and models trained on large amounts of unfiltered data.

https://arxiv.org/abs/2309.17425

Summary

OpenAI’s CLIP model has significantly changed The way we deal with multimodal data. But CLIP is just the beginning. From pre-training data to the details of training methods and contrastive loss functions, the CLIP family has made incredible progress over the past few years. ALIGN scales noisy text, K-LITE enhances external knowledge, OpenCLIP studies scaling laws, MetaCLIP optimizes data management, and DFN enhances data quality. These models deepen our understanding of the role of CLIP in the development of multimodal artificial intelligence, demonstrating progress in connecting images and text.

The above is the detailed content of A review of the development of the CLIP model, the cornerstone of Vincentian diagrams. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

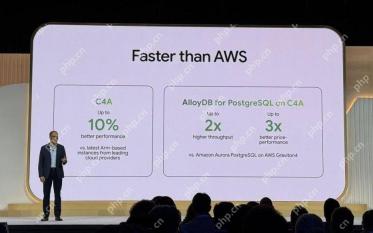

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft