LimSim++: A new stage for multi-modal large models in autonomous driving

- Reposted by PHPz

- 2024-03-12 15:10:11

Paper title: LimSim++: A Closed-Loop Platform for Deploying Multimodal LLMs in Autonomous Driving

Project homepage: https://pjlab-adg.github.io/limsim_plus/

Introduction to the simulator

As multimodal large language models ((M)LLMs) drive a wave of research in artificial intelligence, their application to autonomous driving has drawn growing attention. Their generalized understanding and logical reasoning capabilities make them promising building blocks for safe and reliable autonomous driving systems. Closed-loop simulation platforms such as HighwayEnv, CARLA, and NuPlan can already be used to verify how LLMs perform in autonomous driving, but users usually have to adapt these platforms themselves, which raises the barrier to entry and limits in-depth exploration of LLM capabilities.

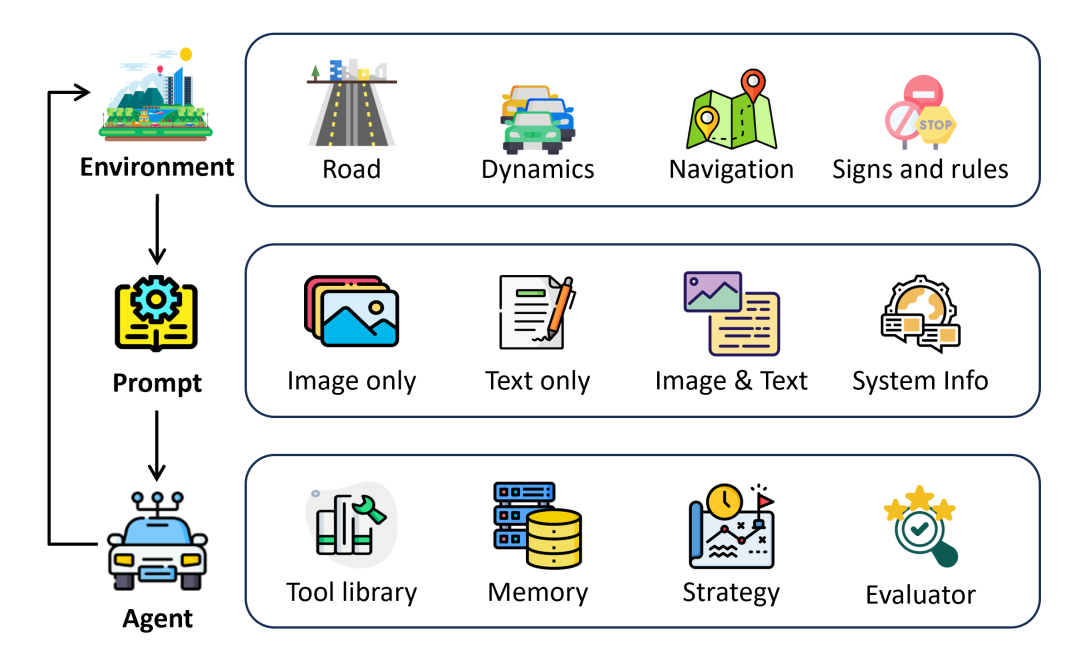

To address this, the Intelligent Transportation Platform Group of Shanghai Artificial Intelligence Laboratory released **LimSim++**, a closed-loop autonomous driving simulation platform designed specifically for (M)LLMs. LimSim++ aims to give autonomous driving researchers a more suitable environment for exploring the potential of LLMs in the field. The platform extracts and processes scene information from simulators such as SUMO and CARLA and converts it into the input forms an LLM needs, including images, scene understanding, and task descriptions. It also provides motion primitive conversion, which quickly turns an LLM's decision into a suitable driving trajectory so that the simulation loop stays closed. Most importantly, LimSim++ provides a continuous learning environment: by evaluating decision results and feeding the evaluation back to the LLM, it helps the LLM refine its driving strategy and improves the driver agent's performance over time.
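The closed loop described above (scene extraction, LLM decision, motion primitive conversion, evaluation, feedback) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not LimSim++'s implementation: the primitive names, planning horizon, kinematics, and the 2-second headway check are all hypothetical, and `fake_llm` stands in for a real (M)LLM call.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Tuple


# Hypothetical decision vocabulary; LimSim++'s real primitive set may differ.
class Primitive(Enum):
    KEEP = "keep"
    ACCELERATE = "accelerate"
    DECELERATE = "decelerate"
    CHANGE_LEFT = "change_left"
    CHANGE_RIGHT = "change_right"


@dataclass
class EgoState:
    x: float  # longitudinal position (m)
    y: float  # lateral position (m)
    v: float  # speed (m/s)


HORIZON, DT, LANE_WIDTH = 3.0, 0.5, 3.5  # assumed planning parameters (s, s, m)


def describe_scene(ego: EgoState, gap: float, lead_v: float) -> str:
    """Scene extraction: turn simulator state into text for the (M)LLM."""
    actions = ", ".join(p.value for p in Primitive)
    return (f"Ego speed {ego.v:.1f} m/s; lead vehicle {gap:.1f} m ahead at "
            f"{lead_v:.1f} m/s. Choose one of: {actions}.")


def to_trajectory(ego: EgoState, p: Primitive) -> List[Tuple[float, float, float]]:
    """Motion primitive conversion: expand a discrete decision into (x, y, v) waypoints."""
    accel = {Primitive.ACCELERATE: 1.0, Primitive.DECELERATE: -2.0}.get(p, 0.0)
    shift = {Primitive.CHANGE_LEFT: LANE_WIDTH,
             Primitive.CHANGE_RIGHT: -LANE_WIDTH}.get(p, 0.0)
    steps = int(round(HORIZON / DT))
    x, v, points = ego.x, ego.v, []
    for k in range(1, steps + 1):
        v = max(0.0, v + accel * DT)                       # constant acceleration, never reverse
        x += v * DT
        points.append((x, ego.y + shift * k / steps, v))  # linear lateral blend for lane changes
    return points


def closed_loop_step(ego: EgoState, gap: float, lead_v: float,
                     llm: Callable[[str], str], feedback_log: List[str]):
    """Describe -> decide -> execute -> evaluate -> feed the result back."""
    prompt = describe_scene(ego, gap, lead_v)
    if feedback_log:
        prompt += " Feedback on your last decision: " + feedback_log[-1]
    decision = Primitive(llm(prompt).strip().lower())
    x, y, v = to_trajectory(ego, decision)[-1]
    # Toy evaluation: the lead vehicle holds constant speed; demand a 2 s time headway.
    new_gap = gap + lead_v * HORIZON - (x - ego.x)
    feedback = "too close" if new_gap < 2.0 * v else "ok"
    feedback_log.append(feedback)
    return EgoState(x, y, v), new_gap, decision, feedback


def fake_llm(prompt: str) -> str:
    """Stand-in for a real (M)LLM: brake after negative feedback, otherwise keep going."""
    return "decelerate" if "too close" in prompt else "keep"


ego, gap, log = EgoState(0.0, 0.0, 15.0), 50.0, []
for _ in range(4):
    ego, gap, decision, feedback = closed_loop_step(ego, gap, 10.0, fake_llm, log)
    print(decision.value, round(gap, 1), feedback)
```

Keeping the LLM's output to a small discrete vocabulary and letting deterministic code produce the trajectory is what keeps the loop closed even though the model itself never outputs coordinates.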

Simulator Features

LimSim++ offers (M)LLM-driven driver agents a well-suited closed-loop simulation and continuous learning environment, with the following features.

- LimSim++ supports a variety of driving scenarios, such as intersections, ramps, and roundabouts, so the driver agent can be tested under a range of complex road conditions. This diversity gives the LLM richer driving experience and helps it adapt to real-world environments.

- LimSim++ supports LLMs with multimodal inputs. Besides rule-based generation of scene descriptions, it can be co-simulated with CARLA to provide rich visual input that meets the perception needs of (M)LLMs in autonomous driving.

- LimSim++ emphasizes continuous learning. It integrates evaluation, reflection, and memory modules that help the (M)LLM accumulate experience and refine its decision-making strategy as the simulation runs.
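To make the evaluation, reflection, and memory cycle concrete, here is a minimal Python sketch. The class and method names are hypothetical, scores are assumed to lie in [0, 1], the reflection threshold is an arbitrary choice, and word-overlap (Jaccard) similarity is swapped in for whatever retrieval LimSim++ actually uses; `toy_reflect` stands in for asking the (M)LLM to reflect on a poor decision.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set


@dataclass
class Experience:
    scene: str                    # textual scene description given to the (M)LLM
    decision: str                 # the decision it made
    score: float                  # evaluation result, assumed to lie in [0, 1]
    lesson: Optional[str] = None  # reflection, attached only to poor outcomes


def _tokens(text: str) -> Set[str]:
    return set(text.lower().replace(",", " ").replace(".", " ").split())


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap: a simple stand-in for embedding-based retrieval."""
    ta, tb = _tokens(a), _tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


@dataclass
class ExperienceMemory:
    reflect_below: float = 0.6    # hypothetical score threshold that triggers reflection
    items: List[Experience] = field(default_factory=list)

    def record(self, scene: str, decision: str, score: float,
               reflect: Callable[[str, str], str]) -> None:
        """Store an evaluated decision, asking for a reflection if it scored poorly."""
        lesson = reflect(scene, decision) if score < self.reflect_below else None
        self.items.append(Experience(scene, decision, score, lesson))

    def recall(self, scene: str, k: int = 2) -> List[Experience]:
        """Return the k stored experiences most similar to the current scene."""
        return sorted(self.items, key=lambda e: similarity(scene, e.scene),
                      reverse=True)[:k]

    def as_context(self, scene: str, k: int = 2) -> str:
        """Format recalled experiences as hints to prepend to the next prompt."""
        lines = []
        for e in self.recall(scene, k):
            hint = f" Lesson: {e.lesson}" if e.lesson else ""
            lines.append(f"- {e.scene} -> {e.decision} (score {e.score:.2f}).{hint}")
        return "\n".join(lines)


def toy_reflect(scene: str, decision: str) -> str:
    """Stand-in for asking the (M)LLM why a decision went wrong."""
    return f"'{decision}' was unsafe in this scene; slow down earlier."


memory = ExperienceMemory()
memory.record("merge ramp, dense traffic, ego fast", "accelerate", 0.3, toy_reflect)
memory.record("straight highway, free flow", "keep", 0.9, toy_reflect)
context = memory.as_context("merge ramp, light traffic", k=1)
print(context)
```

Reflecting only on low-scoring decisions keeps the memory compact; the recalled lessons are then prepended to the prompt for a similar new scene.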

Create your own Driver Agent

LimSim++ provides a rich set of interfaces for customizing the driver agent, which makes development more flexible and lowers the barrier to entry.

- Prompt construction

- LimSim++ supports user-defined prompts that change the text fed to the (M)LLM, such as the role setting, task requirements, and scene description.

- LimSim++ provides JSON-based scene description templates, so users can modify prompts without writing code and without dealing with how the scene information is extracted.

- Decision Evaluation Module

- LimSim++ provides a baseline for evaluating (M)LLM decisions. Users can adjust the evaluation preferences by changing the weight parameters.

- Flexibility of the framework

- LimSim++ lets users add custom tool libraries for the (M)LLM, such as perception tools and numerical processing tools.
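The JSON template and weighted evaluation described above might look roughly like the following Python sketch. The template fields, placeholder names, and the `safety`/`efficiency`/`comfort` criteria and weights are assumptions for illustration; LimSim++'s actual template schema and evaluation terms may differ.

```python
import json
from string import Template
from typing import Dict

# Hypothetical scene-description template; the real LimSim++ schema may differ.
TEMPLATE_JSON = """
{
  "role": "You are a cautious driving assistant controlling the ego vehicle.",
  "task": "Choose exactly one action from: $actions.",
  "scene": "The ego vehicle drives at $speed m/s on $road; the nearest vehicle ahead is $gap m away."
}
"""


def build_prompt(template_json: str, **facts: object) -> str:
    """Fill every template field with extracted scene facts, one field per line."""
    fields: Dict[str, str] = json.loads(template_json)
    return "\n".join(Template(text).substitute(facts) for text in fields.values())


# Hypothetical evaluation criteria and default weights; users would tune these.
DEFAULT_WEIGHTS = {"safety": 0.5, "efficiency": 0.3, "comfort": 0.2}


def weighted_score(sub_scores: Dict[str, float],
                   weights: Dict[str, float] = DEFAULT_WEIGHTS) -> float:
    """Weighted average of per-criterion scores; only the weight ratios matter."""
    total = sum(weights.values())
    return sum(w * sub_scores[name] for name, w in weights.items()) / total


prompt = build_prompt(TEMPLATE_JSON, actions="keep, decelerate, change_left",
                      speed=12.0, road="a two-lane ramp", gap=35)
print(prompt)

scores = {"safety": 1.0, "efficiency": 0.4, "comfort": 0.7}
print(weighted_score(scores))
print(weighted_score(scores, {"safety": 8, "efficiency": 1, "comfort": 1}))
```

Because the template is data, changing the role or scene wording means editing JSON rather than Python; because the weights are normalized, raising the safety weight shifts the evaluation toward cautious behavior without rescaling the other terms.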

Get started quickly

- Step 0: Install SUMO (version ≥ 1.15.0, Ubuntu)

    sudo add-apt-repository ppa:sumo/stable
    sudo apt-get update
    sudo apt-get install sumo sumo-tools sumo-doc

- Step 1: Clone the LimSim repository and check out the LimSim_plus branch

    git clone -b LimSim_plus https://github.com/PJLab-ADG/LimSim.git

- Step 2: Install dependencies (requires conda)

    cd LimSim
    conda env create -f environment.yml

- Step 3: Run the simulation

- Run the simulation on its own

    python ExampleModel.py

- Use an LLM for autonomous driving

    export OPENAI_API_KEY='your openai key'
    python ExampleLLMAgentCloseLoop.py

- Use a VLM for autonomous driving

    # Terminal 1
    cd path-to-carla/
    ./CarlaUE4.sh
    # Terminal 2
    cd path-to-carla/
    cd PythonAPI/util/
    python3 config.py --map Town06
    # Terminal 2 (after config.py finishes)
    export OPENAI_API_KEY='your openai key'
    cd path-to-LimSim++/
    python ExampleVLMAgentCloseLoop.py
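Before launching any of the three modes, a small preflight check can catch the usual setup problems. The Python script below is a minimal sketch and is not part of LimSim++: it assumes `sumo --version` prints a version number such as 1.15.0 in its output and that the CARLA server listens on its default port 2000, while the mode names `sim`, `llm`, and `vlm` and the file name `preflight.py` are hypothetical.

```python
# Hypothetical helper (preflight.py), not shipped with LimSim++.
import os
import re
import shutil
import socket
import subprocess
import sys
from typing import List, Mapping, Optional, Tuple

MIN_SUMO = (1, 15, 0)  # minimum version from Step 0


def parse_version(text: str) -> Optional[Tuple[int, ...]]:
    """Return the first X.Y.Z (or X_Y_Z) version number found in text."""
    m = re.search(r"(\d+)[._](\d+)[._](\d+)", text)
    return tuple(int(g) for g in m.groups()) if m else None


def sumo_version() -> Optional[Tuple[int, ...]]:
    """Ask the `sumo` binary for its version; None if it is missing or unparsable."""
    if shutil.which("sumo") is None:
        return None
    out = subprocess.run(["sumo", "--version"], capture_output=True, text=True).stdout
    return parse_version(out)


def openai_key_present(env: Mapping[str, str] = os.environ) -> bool:
    """True if OPENAI_API_KEY is set to a non-blank value."""
    return bool(env.get("OPENAI_API_KEY", "").strip())


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def preflight(mode: str) -> List[str]:
    """Collect setup problems for the hypothetical modes 'sim', 'llm' and 'vlm'."""
    problems = []
    version = sumo_version()
    if version is None or version < MIN_SUMO:
        problems.append("SUMO >= 1.15.0 not found on PATH")
    if mode in ("llm", "vlm") and not openai_key_present():
        problems.append("OPENAI_API_KEY is not set")
    # 2000 is CARLA's default server port; adjust if CarlaUE4.sh was started differently.
    if mode == "vlm" and not port_open("localhost", 2000):
        problems.append("no CARLA server on localhost:2000")
    return problems


if __name__ == "__main__":
    mode = sys.argv[1] if len(sys.argv) > 1 else "sim"
    issues = preflight(mode)
    print("\n".join(issues) if issues else "ready")
```

For example, `python preflight.py vlm` would report a missing API key or an unreachable CARLA server before `ExampleVLMAgentCloseLoop.py` is started.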

For more information, see the LimSim_plus branch of the LimSim GitHub repository: https://github.com/PJLab-ADG/LimSim/tree/LimSim_plus. If you have any other questions, please open an issue on GitHub or contact us directly by email!

We welcome partners from academia and industry to join us in developing LimSim++ and building an open-source ecosystem!
