How to create a complete computer vision application in minutes with just two Python functions

Translator| Li Rui

Reviser| Chonglou

This article first outlines the basic requirements of a computer vision application. It then introduces Pipeless, an open source framework that provides a serverless development experience for embedded computer vision. Finally, it walks step by step through creating and running a simple object detection application using a few Python functions and a model.

Creating Computer Vision Applications

One way to describe "computer vision" is as "the field of image recognition and processing that uses cameras and algorithms". However, this simple definition does not fully capture the concept. To understand what building a computer vision application involves, we need to consider the functionality that each subsystem must implement. The process involves several key steps: image acquisition, image processing, feature extraction, object recognition, and decision making. First, image data is acquired through a camera or other image acquisition device. The images are then processed with algorithms, including operations such as denoising, enhancement, and segmentation, to prepare them for further analysis. During the feature extraction stage, the system identifies key features in the image.

• Fast frame processing: To process a 60 fps video stream in real time, each frame must be processed within roughly 16 milliseconds. This is usually achieved through multi-threading and multi-processing. Sometimes it is even necessary to start processing the next frame before the previous one has finished.

• AI model: Fortunately, many excellent open source models are available today, so in most cases there is no need to develop a model from scratch; fine-tuning an existing one for the specific use case is enough. These models run inference on every frame, performing tasks such as object detection, segmentation, and pose estimation.

• Inference runtime: The inference runtime is responsible for loading the model and running it efficiently on the available devices (GPU or CPU).

• GPU: To make inference fast enough, a GPU is essential. GPUs can handle orders of magnitude more parallel operations than CPUs, which matters for the large volume of mathematical operations that inference involves. When processing frames, you also need to consider where each frame is stored: in GPU memory or in CPU memory (RAM). Copying frames between the two is slow, especially when frames are large, so memory placement has to be weighed against data transfer overhead.

• Multimedia pipeline: The multimedia pipeline is the set of components that take a video stream from a data source, split it into frames, and feed those frames to the model. Sometimes these components also modify and rebuild the video stream so that it can be forwarded.

• Video stream management: Developers may want the application to withstand video stream interruptions, reconnect automatically, add and remove video streams dynamically, handle multiple video streams simultaneously, and so on.

All of these systems must be built or integrated into the project, and their code must then be maintained. The problem is that you end up maintaining a large amount of code that is not specific to your application but belongs to the subsystems surrounding the actual case-specific code.
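To make the frame-processing requirement concrete, the following minimal sketch computes the per-frame time budget and keeps several frames in flight with a thread pool. The function names and the placeholder workload are illustrative assumptions, not part of any framework.

```python
from concurrent.futures import ThreadPoolExecutor


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, to keep up with the stream."""
    return 1000.0 / fps


def process_frame(frame_id: int) -> str:
    # Placeholder for the real work (pre-processing, inference, post-processing).
    return f"frame {frame_id} processed"


def process_stream(frame_ids, workers: int = 4):
    # Several frames are processed concurrently; map() still returns the
    # results in the original frame order, which a video output requires.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_frame, frame_ids))


print(f"{frame_budget_ms(60):.2f} ms per frame at 60 fps")  # 16.67 ms
print(process_stream(range(3)))
```

Threads suit work that releases the interpreter lock, such as I/O or native inference calls; CPU-bound Python code would typically need processes instead.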

Pipeless Framework

To avoid building all of the above from scratch, you can use the Pipeless framework instead. It is an open source framework for computer vision that lets you provide only the case-specific functionality and takes care of everything else.

The Pipeless framework divides the application's logic into "stages", each of which is like a micro-application for a single model. A stage can include preprocessing, running inference on the preprocessed input, and postprocessing the model output to take action. You can then chain as many stages as you like to make up a complete application, even one that uses multiple models.

To provide the logic for each stage, you simply add functions containing your application-specific code, and Pipeless takes care of calling them when needed. This is why Pipeless can be considered a framework that provides a serverless development experience for embedded computer vision: you supply a few functions and do not have to worry about the surrounding subsystems.
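The idea of stages and hooks can be sketched in a few lines of plain Python. This is only an illustration of the concept and not how Pipeless is implemented: the `Stage` class, `run_frame`, and the toy "model" are hypothetical, while the `frame_data` keys and the `hook(frame_data, context)` signature follow the examples later in this article.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

FrameData = Dict[str, Any]
Hook = Callable[[FrameData, Dict[str, Any]], None]


@dataclass
class Stage:
    """Hypothetical stand-in for one stage: two hooks around one model."""
    name: str
    pre_process: Hook
    infer: Callable[[Any], Any]  # stands in for the inference runtime
    post_process: Hook


def run_frame(frame: Any, stages: List[Stage]) -> FrameData:
    """Pass one frame through every stage in order."""
    frame_data: FrameData = {"original": frame, "modified": frame}
    context: Dict[str, Any] = {}
    for stage in stages:
        stage.pre_process(frame_data, context)
        frame_data["inference_output"] = stage.infer(frame_data["inference_input"])
        stage.post_process(frame_data, context)
    return frame_data


# Toy stage: the "model" just returns the largest normalized pixel value.
def pre(frame_data: FrameData, context: Dict[str, Any]) -> None:
    frame_data["inference_input"] = [p / 255 for p in frame_data["original"]]


def post(frame_data: FrameData, context: Dict[str, Any]) -> None:
    frame_data["modified"] = {"pixels": frame_data["original"],
                              "brightest": frame_data["inference_output"]}


brightness = Stage("brightness", pre, max, post)
result = run_frame([0, 51, 255], [brightness])
print(result["modified"]["brightest"])  # 1.0
```

The application author writes only `pre` and `post`; everything in `run_frame` is the kind of plumbing a framework would own.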

Another important feature of Pipeless is the ability to automate video stream processing by dynamically adding, removing, and updating video streams via CLI or REST API. You can even specify a restart policy, indicating when processing of the video stream should be restarted, whether it should be restarted after an error, and so on.

Finally, to deploy Pipeless, just install it and run it with your functions on any device, whether in a cloud virtual machine, in a container, or directly on an edge device such as an Nvidia Jetson or a Raspberry Pi.

Creating an Object Detection Application

The following is an in-depth look at how to create a simple object detection application using the Pipeless framework.

The first step is installation, which the install script makes very simple:

curl https://raw.githubusercontent.com/pipeless-ai/pipeless/main/install.sh | bash

Next, create a project. A Pipeless project is a directory containing stages. Each stage lives in its own subdirectory, and each subdirectory contains files with hooks (your application-specific functions). The name of each stage folder is the stage name that you must give to Pipeless later when you want to run that stage for a video stream.

pipeless init my-project --template empty
cd my-project

Here, the empty template tells the CLI to create only the directory. If no template is provided, the CLI asks a few questions to create the stage interactively.

As mentioned above, the project now needs a stage. Download an example stage from GitHub using the following command:

wget -O - https://github.com/pipeless-ai/pipeless/archive/main.tar.gz | tar -xz --strip=2 "pipeless-main/examples/onnx-yolo"

This creates a stage directory, onnx-yolo, which contains the application functions.
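As a quick sanity check on the project layout, a small script can list each stage directory and the files inside it. This is a hypothetical helper written for this article, not a Pipeless command; the file names it is expected to find for onnx-yolo are the three files discussed in this guide.

```python
from pathlib import Path
from typing import Dict, List


def list_stages(project_dir: str) -> Dict[str, List[str]]:
    """Map each stage (subdirectory) name to the sorted file names inside it."""
    stages: Dict[str, List[str]] = {}
    for entry in sorted(Path(project_dir).iterdir()):
        if entry.is_dir():
            stages[entry.name] = sorted(
                p.name for p in entry.iterdir() if p.is_file()
            )
    return stages


# Example, run from inside my-project:
#   list_stages(".")
# For the downloaded stage this should include an "onnx-yolo" entry listing
# pre-process.py, process.json and post-process.py.
```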

Next, look at the contents of each stage file, that is, the application hooks.

The pre-process.py file defines a function (the hook) that accepts the frame data and a context. The function prepares the incoming frame so that it matches the format expected by the model. The result is stored in frame_data['inference_input'], which is the data that Pipeless passes to the model.

import cv2
import numpy as np

def hook(frame_data, context):
    # Work on a view of the original frame
    frame = frame_data["original"].view()
    yolo_input_shape = (640, 640, 3)  # h,w,c
    frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    frame = resize_rgb_frame(frame, yolo_input_shape)
    frame = cv2.normalize(frame, None, 0.0, 1.0, cv2.NORM_MINMAX)
    frame = np.transpose(frame, axes=(2, 0, 1))  # Convert to c,h,w
    inference_inputs = frame.astype("float32")
    frame_data['inference_input'] = inference_inputs

... (some other auxiliary functions that we call from the hook function)

There is also the process.json file, which tells Pipeless which inference runtime to use (the ONNX Runtime in this case), where to find the model it should load, and some optional parameters, such as the execution_provider to use (CPU, CUDA, TensorRT, etc.).

{
  "runtime": "onnx",
  "model_uri": "https://pipeless-public.s3.eu-west-3.amazonaws.com/yolov8n.onnx",
  "inference_params": {
    "execution_provider": "tensorrt"
  }
}

Finally, the post-process.py file defines a hook function similar to the one in pre-process.py. This time, it takes the inference output that Pipeless stores in frame_data["inference_output"] and parses it into bounding boxes. It then draws the bounding boxes on the frame and assigns the modified frame to frame_data['modified']. This way, Pipeless forwards the provided video stream with the modified frames, which include the bounding boxes.

def hook(frame_data, _):
    frame = frame_data['original']
    model_output = frame_data['inference_output']
    yolo_input_shape = (640, 640, 3)  # h,w,c
    boxes, scores, class_ids = parse_yolo_output(model_output, frame.shape, yolo_input_shape)
    class_labels = [yolo_classes[id] for id in class_ids]
    for i in range(len(boxes)):
        draw_bbox(frame, boxes[i], class_labels[i], scores[i])
    frame_data['modified'] = frame

... (some other auxiliary functions that we call from the hook function)
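The helpers resize_rgb_frame and parse_yolo_output are elided in the hooks, so their exact behavior is not shown here. One common approach for this kind of helper is letterboxing: the frame is scaled to fit the model input while keeping its aspect ratio, padded to the full input size, and detected boxes are later mapped back to frame coordinates. The sketch below shows only that coordinate arithmetic; the function names are hypothetical and the real helpers may work differently (for example, by stretching the frame).

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def letterbox_params(frame_hw: Tuple[int, int],
                     input_hw: Tuple[int, int]) -> Tuple[float, int, int]:
    """Scale and padding that fit a frame inside the model input."""
    frame_h, frame_w = frame_hw
    input_h, input_w = input_hw
    # Use the smaller ratio so the whole frame fits without distortion.
    scale = min(input_h / frame_h, input_w / frame_w)
    new_h, new_w = round(frame_h * scale), round(frame_w * scale)
    pad_top = (input_h - new_h) // 2
    pad_left = (input_w - new_w) // 2
    return scale, pad_top, pad_left


def box_to_frame(box: Box, frame_hw: Tuple[int, int],
                 input_hw: Tuple[int, int]) -> Box:
    """Map a box from model-input pixels back to original-frame pixels."""
    scale, pad_top, pad_left = letterbox_params(frame_hw, input_hw)
    x1, y1, x2, y2 = box
    return ((x1 - pad_left) / scale, (y1 - pad_top) / scale,
            (x2 - pad_left) / scale, (y2 - pad_top) / scale)


# A 1280x720 frame (h=720, w=1280) fitted into a 640x640 model input.
print(letterbox_params((720, 1280), (640, 640)))  # (0.5, 140, 0)
print(box_to_frame((100, 200, 300, 400), (720, 1280), (640, 640)))
# (200.0, 120.0, 600.0, 520.0)
```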

The last step is to start Pipeless and provide a video stream. To start Pipeless, just run the following command in the my-project directory:

pipeless start --stages-dir .

Once Pipeless is running, add a video stream that reads from the webcam (v4l2) and displays the output directly on the screen. Note that you must provide the list of stages that the stream executes in sequence. In this example, it is just the onnx-yolo stage:

pipeless add stream --input-uri "v4l2" --output-uri "screen" --frame-path "onnx-yolo"
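If streams need to be added from a script rather than typed by hand, the same command can be assembled in Python. This is a minimal sketch that uses only the flags shown above; the `add_stream_command` helper is hypothetical, and actually running the command assumes Pipeless is installed and already started.

```python
import shlex
from typing import List


def add_stream_command(input_uri: str, output_uri: str,
                       frame_path: str) -> List[str]:
    """Build the argument list for the `pipeless add stream` command."""
    return [
        "pipeless", "add", "stream",
        "--input-uri", input_uri,
        "--output-uri", output_uri,
        "--frame-path", frame_path,
    ]


cmd = add_stream_command("v4l2", "screen", "onnx-yolo")
print(shlex.join(cmd))

# To execute it (requires a running Pipeless instance):
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when a URI contains special characters.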

Conclusion

Creating computer vision applications is a complex task because many subsystems must be implemented around the application itself. With a framework like Pipeless, getting up and running takes only a few minutes, allowing you to focus on writing the code for your specific use case. In addition, Pipeless "stages" are highly reusable, which makes applications easy to maintain and quick to iterate on.

If you wish to participate in the development of Pipeless, you can do so through its GitHub repository.

Original title: Create a Complete Computer Vision App in Minutes With Just Two Python Functions, author: Miguel Angel Cabrera

Link: https://www.php.cn/link/e26dbb5b1843bf566ea7ec757f3325c4

The above is the detailed content of How to create a complete computer vision application in minutes with just two Python functions. For more information, please follow other related articles on the PHP Chinese website!

An easy-to-understand explanation of how to make inventory management more efficient using ChatGPT!May 14, 2025 am 03:44 AM

An easy-to-understand explanation of how to make inventory management more efficient using ChatGPT!May 14, 2025 am 03:44 AMEasy to implement even for small and medium-sized businesses! Smart inventory management with ChatGPT and Excel Inventory management is the lifeblood of your business. Overstocking and out-of-stock items have a serious impact on cash flow and customer satisfaction. However, the current situation is that introducing a full-scale inventory management system is high in terms of cost. What you'd like to focus on is the combination of ChatGPT and Excel. In this article, we will explain step by step how to streamline inventory management using this simple method. Automate tasks such as data analysis, demand forecasting, and reporting to dramatically improve operational efficiency. moreover,

An easy-to-understand explanation of how to check and switch versions of ChatGPT!May 14, 2025 am 03:43 AM

An easy-to-understand explanation of how to check and switch versions of ChatGPT!May 14, 2025 am 03:43 AMUse AI wisely by choosing a ChatGPT version! A thorough explanation of the latest information and how to check ChatGPT is an ever-evolving AI tool, but its features and performance vary greatly depending on the version. In this article, we will explain in an easy-to-understand manner the features of each version of ChatGPT, how to check the latest version, and the differences between the free version and the paid version. Choose the best version and make the most of your AI potential. Click here for more information about OpenAI's latest AI agent, OpenAI Deep Research ⬇️ [ChatGPT] OpenAI D

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AM

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AMTroubleshooting Guide for Credit Card Payment with ChatGPT Paid Subscriptions Credit card payments may be problematic when using ChatGPT paid subscription. This article will discuss the reasons for credit card rejection and the corresponding solutions, from problems solved by users themselves to the situation where they need to contact a credit card company, and provide detailed guides to help you successfully use ChatGPT paid subscription. OpenAI's latest AI agent, please click ⬇️ for details of "OpenAI Deep Research" 【ChatGPT】Detailed explanation of OpenAI Deep Research: How to use and charging standards Table of contents Causes of failure in ChatGPT credit card payment Reason 1: Incorrect input of credit card information Original

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AM

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AMFor beginners and those interested in business automation, writing VBA scripts, an extension to Microsoft Office, may find it difficult. However, ChatGPT makes it easy to streamline and automate business processes. This article explains in an easy-to-understand manner how to develop VBA scripts using ChatGPT. We will introduce in detail specific examples, from the basics of VBA to script implementation using ChatGPT integration, testing and debugging, and benefits and points to note. With the aim of improving programming skills and improving business efficiency,

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AM

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AMChatGPT plugin cannot be used? This guide will help you solve your problem! Have you ever encountered a situation where the ChatGPT plugin is unavailable or suddenly fails? The ChatGPT plugin is a powerful tool to enhance the user experience, but sometimes it can fail. This article will analyze in detail the reasons why the ChatGPT plug-in cannot work properly and provide corresponding solutions. From user setup checks to server troubleshooting, we cover a variety of troubleshooting solutions to help you efficiently use plug-ins to complete daily tasks. OpenAI Deep Research, the latest AI agent released by OpenAI. For details, please click ⬇️ [ChatGPT] OpenAI Deep Research Detailed explanation:

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AM

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AMWhen writing a sentence using ChatGPT, there are times when you want to specify the number of characters. However, it is difficult to accurately predict the length of sentences generated by AI, and it is not easy to match the specified number of characters. In this article, we will explain how to create a sentence with the number of characters in ChatGPT. We will introduce effective prompt writing, techniques for getting answers that suit your purpose, and teach you tips for dealing with character limits. In addition, we will explain why ChatGPT is not good at specifying the number of characters and how it works, as well as points to be careful about and countermeasures. This article

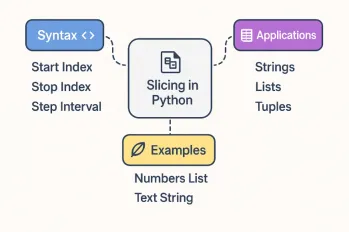

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AM

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AMFor every Python programmer, whether in the domain of data science and machine learning or software development, Python slicing operations are one of the most efficient, versatile, and powerful operations. Python slicing syntax a

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AM

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AMThe evolution of AI technology has accelerated business efficiency. What's particularly attracting attention is the creation of estimates using AI. OpenAI's AI assistant, ChatGPT, contributes to improving the estimate creation process and improving accuracy. This article explains how to create a quote using ChatGPT. We will introduce efficiency improvements through collaboration with Excel VBA, specific examples of application to system development projects, benefits of AI implementation, and future prospects. Learn how to improve operational efficiency and productivity with ChatGPT. Op

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver Mac version

Visual web development tools