Scale-Invariant Feature Transform (SIFT) algorithm

The Scale-Invariant Feature Transform (SIFT) is a feature extraction algorithm used in image processing and computer vision. It was proposed by David Lowe in 1999 to improve object recognition and matching in computer vision systems. SIFT is robust and accurate, and it is widely used in image recognition, three-dimensional reconstruction, object detection, video tracking and other applications. It achieves scale invariance by detecting keypoints across multiple scales of a scale space and extracting local feature descriptors around those keypoints. The main steps of the algorithm are scale-space construction, keypoint detection, keypoint localization, orientation assignment and descriptor generation. Together, these steps extract distinctive, robust features that allow images to be recognized and matched efficiently.

The main strength of SIFT is that its features are invariant to image scale and rotation and largely robust to changes in brightness, so it can extract distinctive, stable keypoints for efficient matching and recognition. Its main steps are scale-space extrema detection, keypoint localization, orientation assignment, keypoint description and matching. Scale-space extrema detection finds candidate points that are extrema across both image position and scale. In the keypoint localization stage, stable and distinctive keypoints are retained by refining these candidates and discarding low-contrast points and points with strong edge responses. The orientation assignment stage gives each keypoint a dominant orientation so that its descriptor can be computed relative to that orientation, which makes the description rotation invariant. The keypoint description stage uses the image gradients around each keypoint to generate a feature descriptor.

1. Scale-space extrema detection

The original image is processed in scale space with the difference-of-Gaussians (DoG) function in order to detect extrema at different scales. Each DoG image is the difference between two adjacent Gaussian-blurred images in the same octave of the Gaussian pyramid, and each DoG sample is compared with its neighbors in both position and scale to find scale-invariant candidate keypoints.
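For reference, the DoG construction in Lowe's formulation can be written as follows, where I is the input image, * denotes convolution and k is the constant ratio between adjacent scales:

```latex
\begin{aligned}
L(x, y, \sigma) &= G(x, y, \sigma) * I(x, y), \qquad
G(x, y, \sigma) = \frac{1}{2\pi\sigma^2}\, e^{-(x^2 + y^2)/(2\sigma^2)} \\
D(x, y, \sigma) &= \bigl(G(x, y, k\sigma) - G(x, y, \sigma)\bigr) * I(x, y)
                 = L(x, y, k\sigma) - L(x, y, \sigma)
\end{aligned}
```

Because G(x, y, kσ) − G(x, y, σ) ≈ (k − 1)σ²∇²G, the DoG is a cheap approximation of the scale-normalized Laplacian of Gaussian, which is why its extrema make good scale-invariant interest points.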

2. Orientation assignment

Next, the SIFT algorithm assigns an orientation to each keypoint to achieve invariance to rotation. Orientation assignment uses a gradient histogram: the gradient magnitude and direction of the pixels around each keypoint are computed and accumulated into an orientation histogram, and the highest peak of the histogram is taken as the keypoint's dominant orientation.
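A minimal sketch of the orientation histogram, assuming a square grayscale patch centered on the keypoint (a list of rows of floats) that has already been taken from the Gaussian image at the keypoint's scale. It uses 36 bins of 10° each with magnitude weighting and a Gaussian window, as in Lowe's paper (which sets the window σ to 1.5 times the keypoint scale); the function names and the angle convention (x to the right, y down the rows) are assumptions for illustration.

```python
import math


def orientation_histogram(patch: list[list[float]], sigma: float, num_bins: int = 36) -> list[float]:
    """Histogram of gradient directions, weighted by gradient magnitude and a Gaussian window."""
    h, w = len(patch), len(patch[0])
    cy, cx = h // 2, w // 2
    hist = [0.0] * num_bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # central-difference gradient
            gx = patch[y][x + 1] - patch[y][x - 1]
            gy = patch[y + 1][x] - patch[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 360.0
            # pixels near the keypoint contribute more than distant ones
            weight = math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2 * sigma * sigma))
            # the final modulo guards against angle rounding up to exactly 360.0
            hist[int(angle * num_bins / 360.0) % num_bins] += weight * magnitude
    return hist


def dominant_orientation(patch: list[list[float]], sigma: float, num_bins: int = 36) -> float:
    """Center (in degrees) of the histogram bin with the largest value."""
    hist = orientation_histogram(patch, sigma, num_bins)
    peak = max(range(num_bins), key=hist.__getitem__)
    return (peak + 0.5) * 360.0 / num_bins
```

For a patch whose brightness increases from left to right, every gradient points along +x, so all the weight lands in bin 0 and the dominant orientation is that bin's center, 5°.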

3. Keypoint description

After keypoint localization and orientation assignment, the SIFT algorithm describes the region around each keypoint with a feature descriptor computed from a local image patch. The descriptor is built from the pixels around the keypoint, relative to its scale and orientation, so that it is invariant to rotation and scale and robust to brightness changes. Specifically, the algorithm divides the patch around the keypoint into sub-regions (4 × 4 in Lowe's standard configuration), computes the gradient magnitude and direction of the pixels in each sub-region, accumulates them into 8-bin orientation histograms, and concatenates the histograms into a 4 × 4 × 8 = 128-dimensional feature vector that describes the local appearance of the keypoint.
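A simplified sketch of how the 128 values arise, assuming a 16×16 grayscale patch that has already been rotated to the keypoint's orientation and sampled at its scale. It omits the Gaussian weighting and trilinear interpolation of the full descriptor, but keeps the normalize, clip at 0.2, renormalize step that Lowe uses to reduce the influence of illumination changes. The function names are hypothetical.

```python
import math


def unit(vec: list[float]) -> list[float]:
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def sift_like_descriptor(patch: list[list[float]], clip: float = 0.2) -> list[float]:
    """128-D vector from a 16x16 patch: 4x4 cells of 4x4 pixels, 8 orientation bins per cell."""
    size = len(patch)  # assumed to be 16

    def px(y: int, x: int) -> float:
        # clamp coordinates so border pixels also get a central-difference gradient
        return patch[min(max(y, 0), size - 1)][min(max(x, 0), size - 1)]

    vec = [0.0] * 128
    for y in range(size):
        for x in range(size):
            gx = px(y, x + 1) - px(y, x - 1)
            gy = px(y + 1, x) - px(y - 1, x)
            angle = math.atan2(gy, gx) % (2 * math.pi)
            bin_index = int(angle / (2 * math.pi) * 8) % 8
            cell = (y // 4) * 4 + (x // 4)  # which of the 4x4 sub-regions
            vec[cell * 8 + bin_index] += math.hypot(gx, gy)
    # normalize, clip large components, renormalize (reduces illumination effects)
    return unit([min(v, clip) for v in unit(vec)])
```

Adding a constant to every pixel leaves the gradients unchanged, and scaling the contrast scales all gradients equally, which the normalization removes; this is where the descriptor's robustness to brightness changes comes from.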

4. Keypoint matching

Finally, the SIFT algorithm matches images by comparing the keypoint feature vectors of the two images. Specifically, the similarity between two feature vectors is evaluated by their Euclidean distance (or cosine similarity), and the closest pairs are taken as feature matches for object recognition.
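A brute-force matching sketch using Euclidean distance. It also applies the nearest-neighbor distance ratio test from Lowe's paper (keep a match only if the closest descriptor is clearly closer than the second closest, with ratio 0.8), which goes beyond the plain distance comparison described above. The function name and the list-of-floats representation are assumptions; large descriptor sets are usually matched with approximate nearest-neighbor search instead of this exhaustive loop.

```python
import math


def match_descriptors(desc_a: list[list[float]], desc_b: list[list[float]],
                      ratio: float = 0.8) -> list[tuple[int, int]]:
    """Brute-force matching by Euclidean distance with a nearest/second-nearest ratio test.
    Returns (index in desc_a, index in desc_b) pairs."""
    matches = []
    for i, d in enumerate(desc_a):
        # distances to every descriptor in the other image, closest first
        ranked = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        # accept only unambiguous matches
        if len(ranked) >= 2 and ranked[0][0] < ratio * ranked[1][0]:
            matches.append((i, ranked[0][1]))
    return matches
```

A query that lies exactly halfway between two candidates has a distance ratio of 1 and is rejected, which is the behavior the ratio test is designed for: ambiguous matches are dropped rather than guessed.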

How does the Scale-Invariant Feature Transform detect keypoints in images?

SIFT processes the original image in scale space with the difference-of-Gaussians function to detect extrema at different scales. Specifically, it builds a Gaussian pyramid by repeatedly convolving the image with Gaussian kernels and downsampling it, producing a series of Gaussian-blurred images at different scales. Subtracting each pair of adjacent Gaussian images (the DoG operator) yields the DoG images, whose extrema are the candidate scale-invariant keypoints.
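A minimal pure-Python sketch of one octave of this construction, assuming a grayscale image stored as a list of rows of floats. The names `gaussian_kernel`, `blur`, `downsample` and `dog_octave` are hypothetical helpers for illustration; for simplicity the sketch blurs the base image directly at each σ (efficient implementations blur incrementally) and clamps coordinates at the borders.

```python
import math

Image = list[list[float]]  # grayscale image as a list of rows (assumed representation)


def gaussian_kernel(sigma: float) -> list[float]:
    """1-D Gaussian kernel truncated at 3*sigma and normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [wt / total for wt in weights]


def blur(image: Image, sigma: float) -> Image:
    """Separable Gaussian blur (rows, then columns), clamping coordinates at the border."""
    kernel = gaussian_kernel(sigma)
    r = len(kernel) // 2
    h, w = len(image), len(image[0])
    rows = [[sum(kernel[k + r] * image[y][min(max(x + k, 0), w - 1)] for k in range(-r, r + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(kernel[k + r] * rows[min(max(y + k, 0), h - 1)][x] for k in range(-r, r + 1))
             for x in range(w)] for y in range(h)]


def downsample(image: Image) -> Image:
    """Keep every second pixel in each direction, halving width and height."""
    return [row[::2] for row in image[::2]]


def dog_octave(image: Image, sigmas: list[float]) -> list[Image]:
    """Blur the image at each sigma, then subtract each pair of adjacent levels."""
    gaussians = [blur(image, s) for s in sigmas]
    return [[[hi - lo for lo, hi in zip(row_lo, row_hi)]
             for row_lo, row_hi in zip(g_lo, g_hi)]
            for g_lo, g_hi in zip(gaussians, gaussians[1:])]
```

For example, `dog_octave(img, [1.6 * 2 ** (i / 3) for i in range(6)])` returns five DoG layers, and `downsample(img)` gives a (simplified) base image for the next octave. A flat image produces DoG layers that are zero everywhere, since blurring does not change it.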

Before applying the DoG operator, the number of octaves in the Gaussian pyramid and the scales within each octave must be chosen. SIFT usually divides the pyramid into several octaves, and the image in each octave is half the width and height of the image in the previous octave. Within each octave, the image is blurred at several scales so that keypoints can also be detected at scales between successive octaves. Covering a wide range of scales in this way means a feature can be detected regardless of the scale at which it appears in the image.
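A small sketch of how the octave count and the per-octave blur levels might be chosen. Following Lowe's paper, it assumes s = 3 intervals per octave, a base blur of σ₀ = 1.6 and s + 3 Gaussian images per octave, so that the resulting s + 2 DoG images let extrema detection cover a complete octave. The `min_size` cut-off for the smallest octave is an arbitrary assumption, and the function names are hypothetical.

```python
import math


def octave_count(width: int, height: int, min_size: int = 8) -> int:
    """Number of octaves obtained by halving the image until its smaller side would drop below min_size."""
    n = 1
    side = min(width, height)
    while side // 2 >= min_size:
        side //= 2
        n += 1
    return n


def octave_sigmas(sigma0: float = 1.6, s: int = 3) -> list[float]:
    """Blur levels within one octave: s + 3 values sigma0 * k**i with k = 2**(1/s)."""
    k = 2.0 ** (1.0 / s)
    return [sigma0 * k ** i for i in range(s + 3)]
```

With these defaults a 512×512 image gives 7 octaves (512 down to 8 pixels), and within an octave the blur doubles every s = 3 levels. In Lowe's scheme, the first image of the next octave is the Gaussian image with twice the base σ (index s), downsampled by two.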

Once the pyramid has been built, SIFT searches each DoG image for extrema: each sample is compared with its 8 neighbors in the same DoG image and the 9 neighbors in each of the two adjacent DoG images (26 neighbors in total), and it is kept as a scale-space extremum only if it is larger than all of them or smaller than all of them. This detects stable, distinctive keypoints at different scales. SIFT then screens the detected extrema, discarding low-contrast points and points that lie along edges.
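A minimal sketch of the 26-neighbor test and the two screening rules, assuming three consecutive DoG layers stored as lists of rows and an interior pixel (y, x). The contrast threshold of 0.03 and the edge-ratio limit r = 10 are the values suggested in Lowe's paper for pixel values in [0, 1]; unlike the full algorithm, this sketch applies them at the sample itself rather than first refining the location to sub-pixel accuracy. The function names are hypothetical.

```python
Image = list[list[float]]  # one DoG layer as a list of rows (assumed representation)


def is_extremum(below: Image, current: Image, above: Image, y: int, x: int) -> bool:
    """True if current[y][x] is strictly larger or strictly smaller than all 26 neighbors:
    8 in its own DoG layer plus 9 in each adjacent layer."""
    value = current[y][x]
    neighbors = []
    for index, layer in enumerate((below, current, above)):
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                if index == 1 and dy == 0 and dx == 0:
                    continue  # skip the sample itself
                neighbors.append(layer[y + dy][x + dx])
    return value > max(neighbors) or value < min(neighbors)


def passes_screening(current: Image, y: int, x: int,
                     contrast_threshold: float = 0.03, edge_ratio: float = 10.0) -> bool:
    """Reject low-contrast samples and samples on edges (ratio of principal curvatures)."""
    value = current[y][x]
    if abs(value) < contrast_threshold:
        return False
    # 2x2 Hessian of the DoG layer from finite differences
    dxx = current[y][x + 1] + current[y][x - 1] - 2 * value
    dyy = current[y + 1][x] + current[y - 1][x] - 2 * value
    dxy = (current[y + 1][x + 1] - current[y + 1][x - 1]
           - current[y - 1][x + 1] + current[y - 1][x - 1]) / 4.0
    trace = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:
        return False  # curvatures of opposite sign (or zero): not a stable extremum
    return trace * trace / det < (edge_ratio + 1) ** 2 / edge_ratio
```

The edge test rejects a point when one principal curvature is much larger than the other (a ridge or edge), since its position along the edge is poorly determined; an isolated blob has similar curvatures in both directions and passes.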

Once the keypoints have been located, SIFT assigns each one an orientation to ensure invariance to rotation. Specifically, it computes the gradient magnitude and direction of the pixels around each keypoint and accumulates them into an orientation histogram. The highest peak of the histogram is taken as the keypoint's dominant orientation. This makes the keypoints rotation invariant and provides the reference direction for the subsequent feature description.
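Lowe's paper refines this step in two ways: any other local peak within 80% of the highest one also produces a keypoint with that orientation, and each peak position is interpolated by fitting a parabola to the three histogram values around it. The sketch below applies both rules to an already computed histogram; it assumes bin i covers [i·w, (i+1)·w) degrees for bin width w and treats the histogram as circular. The function name is hypothetical.

```python
def dominant_orientations(hist: list[float], peak_ratio: float = 0.8) -> list[float]:
    """Orientations (degrees) of local histogram peaks within peak_ratio of the maximum,
    each refined by fitting a parabola through the peak bin and its two neighbors."""
    n = len(hist)
    bin_width = 360.0 / n
    threshold = peak_ratio * max(hist)
    result = []
    for i in range(n):
        # hist[i - 1] wraps around at i == 0, so the histogram is treated as circular
        left, centre, right = hist[i - 1], hist[i], hist[(i + 1) % n]
        if centre >= threshold and centre > left and centre > right:
            # x-offset of the vertex of the parabola through (-1, left), (0, centre), (1, right)
            offset = 0.5 * (left - right) / (left - 2 * centre + right)
            result.append(((i + 0.5 + offset) * bin_width) % 360.0)
    return result
```

Each returned orientation would become a separate keypoint at the same location and scale. A symmetric peak is left at its bin center, while a peak with a heavier neighbor on one side is shifted toward that side.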

Because keypoint detection and localization in SIFT are based on the Gaussian pyramid and the DoG operator, the algorithm is robust to changes in image scale. However, SIFT is computationally expensive: it requires a large number of image convolutions and subtractions. Practical applications therefore often need optimization and acceleration, for example fast filtering techniques such as box filters computed with integral images.
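As an illustration of the integral-image technique mentioned above, the sketch below builds a summed-area table and uses it for a box (mean) filter whose cost per pixel does not depend on the window size. This is the idea SURF uses to approximate Gaussian-based filters; it is an approximation, not part of Lowe's original SIFT, and the function names are hypothetical.

```python
Image = list[list[float]]  # grayscale image as a list of rows (assumed representation)


def integral_image(image: Image) -> Image:
    """s[y][x] = sum of image[0:y][0:x]; one row and column of zero padding."""
    h, w = len(image), len(image[0])
    s = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += image[y][x]
            s[y + 1][x + 1] = s[y][x + 1] + row_sum
    return s


def box_sum(s: Image, y0: int, x0: int, y1: int, x1: int) -> float:
    """Sum of image rows y0..y1-1 and columns x0..x1-1 using four table lookups."""
    return s[y1][x1] - s[y0][x1] - s[y1][x0] + s[y0][x0]


def box_filter(image: Image, radius: int) -> Image:
    """Mean over a (2r+1)x(2r+1) window, clipped at the borders."""
    h, w = len(image), len(image[0])
    s = integral_image(image)
    out = []
    for y in range(h):
        y0, y1 = max(0, y - radius), min(h, y + radius + 1)
        row = []
        for x in range(w):
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            row.append(box_sum(s, y0, x0, y1, x1) / ((y1 - y0) * (x1 - x0)))
        out.append(row)
    return out
```

Once the table is built in a single pass, any rectangular sum costs four lookups, so large filters are as cheap as small ones; the price is that a box only roughly approximates a Gaussian.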

In general, SIFT is an effective feature extraction algorithm with strong robustness and accuracy. It handles changes in scale, rotation and brightness, enabling efficient image matching and recognition. The algorithm has been widely used in computer vision and image processing and has contributed significantly to the development of computer vision systems.

The above is the detailed content of Scale Invariant Features (SIFT) algorithm. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

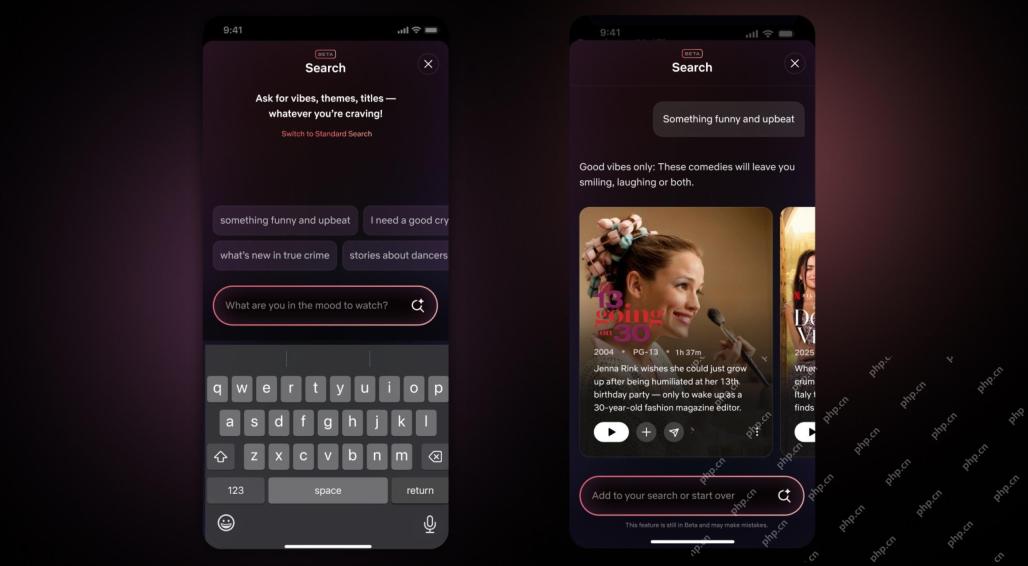

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

Dreamweaver Mac version

Visual web development tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.