Using GPU instances to demonstrate Alibaba Cloud Linux 3's support for the AI ecosystem

Alibaba Cloud Linux 3 has recently received optimizations and upgrades that make AI development more efficient. This article is a preview of the series "Introduction to AI Capabilities of Alibaba Cloud Linux 3" and uses a GPU instance to demonstrate Alibaba Cloud Linux 3's support for the AI ecosystem. Two follow-up installments will be published: one introduces the cloud marketplace image based on Alinux, which gives users an out-of-the-box basic AI software environment, and the other introduces the differentiated AI capabilities on AMD platforms. Stay tuned. For more information about Alibaba Cloud Linux 3, visit the official website: https://www.aliyun.com/product/ecs/alinux

When developing artificial intelligence (AI) applications on Linux operating systems, developers may encounter some challenges, which include but are not limited to:

1. GPU driver: To use an NVIDIA GPU for training or inference on a Linux system, the correct NVIDIA GPU driver must be installed and configured. This can take extra work, because different operating systems and GPU models may require different drivers.

2. AI framework compilation: AI frameworks often have to be compiled on the target system, so you need to install the appropriate compiler and other dependencies and make sure they are configured correctly.

3. Software compatibility: Linux supports a wide range of software and tools, but compatibility issues can arise between different versions and distributions. As a result, some programs may not run properly, or may not be available at all, on some operating systems. Developers therefore need to understand the software compatibility of their working environment and make the necessary configurations and modifications.

4. Performance issues: The AI software stack is an extremely complex system that usually needs to be tuned for specific CPU and GPU models to reach its best performance. Co-optimizing software and hardware is a challenging task that requires a high level of expertise.

Alibaba Cloud Linux 3 (hereinafter "Alinux 3") is Alibaba Cloud's third-generation cloud server operating system, a commercial distribution developed on the basis of Anolis OS. It provides developers with a powerful AI development platform: through the Dragon Lizard (Anolis) ecosystem repository (epao), Alinux 3 achieves full support for the mainstream NVIDIA GPU and CUDA ecosystem, making AI development more convenient and efficient. Alinux 3 also supports the mainstream AI frameworks TensorFlow and PyTorch, along with AI optimizations for different CPU platforms such as Intel and AMD. Native support for large-model SDKs such as ModelScope and Hugging Face is planned, giving developers a rich set of resources and tools. Together, these features make Alinux 3 a complete AI development platform that spares AI developers from fiddling with the environment and makes AI development easier and more efficient.

To address the challenges listed above, Alinux 3 provides the following optimizations and upgrades:

1. By introducing the Dragon Lizard (Anolis) ecosystem software repository (epao), Alinux 3 lets developers install mainstream NVIDIA GPU drivers and CUDA acceleration libraries with one command, saving the time otherwise spent matching driver versions and installing them manually.

2. The epao repository also provides packaged versions of the mainstream AI frameworks TensorFlow and PyTorch. Framework dependencies are resolved automatically during installation, so developers do not need to compile anything themselves and can start developing right away in the system Python environment.

3. All components are tested for compatibility before the AI capabilities of Alinux 3 are released to developers. Developers can install the corresponding AI capabilities with one command, which avoids problems in environment configuration and modifications to system dependencies and improves stability during use.

4. Alinux 3 includes AI-specific optimizations for CPUs on different platforms such as Intel and AMD, to better unlock the full performance of the hardware.

5. To keep pace with the rapid iteration of the AIGC industry, Alinux 3 will also introduce native support for large-model SDKs such as ModelScope and Hugging Face, providing developers with a wealth of resources and tools.

With the support of multi-dimensional optimization, Alinux 3 has become a complete AI development platform, solving the pain points of AI developers and making the AI development experience easier and more efficient.

The following uses Alibaba Cloud GPU instances as an example to demonstrate Alinux 3’s support for the AI ecosystem:

1. Purchase a GPU instance

2. Select the Alinux 3 image

3. Install the epao repository configuration

dnf install -y anolis-epao-release

4. Install the NVIDIA GPU driver

Before installing the NVIDIA driver, make sure the kernel-devel package matching the running kernel is installed; the driver installation depends on it.

dnf install -y kernel-devel-$(uname -r)

Install the NVIDIA driver:

dnf install -y nvidia-driver nvidia-driver-cuda

After the installation is complete, you can view the GPU device status through the nvidia-smi command.
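The same check can be scripted. The following is a minimal sketch, not part of the original walkthrough: it calls nvidia-smi with its --query-gpu and --format=csv,noheader options and returns an empty list when the tool is missing or fails. The helper names parse_gpu_csv and query_gpus are hypothetical.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi; the helper names below are illustrative.
QUERY_FIELDS = ("name", "driver_version", "memory.total")


def parse_gpu_csv(text):
    """Turn nvidia-smi CSV output (no header) into a list of dicts."""
    gpus = []
    for line in text.splitlines():
        if not line.strip():
            continue
        values = [v.strip() for v in line.split(",")]
        gpus.append(dict(zip(QUERY_FIELDS, values)))
    return gpus


def query_gpus():
    """Return one dict per visible GPU, or [] if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=" + ",".join(QUERY_FIELDS),
         "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No GPU reported; check that the driver is installed and loaded.")
    for gpu in gpus:
        print(gpu)
```

An empty result right after installing the driver may simply mean the kernel module has not been loaded yet.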

5. Install the CUDA libraries

dnf install -y cuda

6. Install the AI frameworks TensorFlow/PyTorch

The repository currently provides the CPU versions of TensorFlow and PyTorch; GPU versions of the AI frameworks will be supported in the future.

dnf install tensorflow -y

dnf install pytorch -y

After the installation is completed, you can check whether the installation is successful through a simple command:
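One way to run such a check is sketched below. It assumes the packages expose the usual import names tensorflow and torch in the system Python; the helper framework_version is hypothetical and only reports whether each module imports and which version it declares.

```python
import importlib


def framework_version(module_name):
    """Return the module's version string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except Exception:  # a broken install may raise more than ImportError
        return None
    return str(getattr(module, "__version__", "unknown"))


if __name__ == "__main__":
    # "tensorflow" and "torch" are the import names of TensorFlow and PyTorch.
    for name in ("tensorflow", "torch"):
        version = framework_version(name)
        print(f"{name}: {version if version else 'not installed'}")
```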

7. Deploy a model

With the AI ecosystem support in Alinux 3, the GPT-2 Large model can be deployed and used to continue writing this article.

Install Git, Git LFS, and wget for downloading the model in the following steps.

dnf install -y git git-lfs wget

Update pip to facilitate subsequent deployment of the Python environment.

python -m pip install --upgrade pip

Enable Git LFS support.

git lfs install

Download the write-with-transformer project source code and the pre-trained model. The write-with-transformer project is a web-based writing app that uses the GPT-2 Large model to continue a piece of text.

git clone https://huggingface.co/spaces/merve/write-with-transformer

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/gpt2-large

wget https://huggingface.co/gpt2-large/resolve/main/pytorch_model.bin -O gpt2-large/pytorch_model.bin
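Cloning with GIT_LFS_SKIP_SMUDGE=1 leaves large files as small Git LFS pointer stubs, which is why the weights are fetched separately with wget. A minimal sketch for confirming that the weights file is real content rather than a stub follows; it relies on the fact that LFS pointer files begin with a fixed "version https://git-lfs.github.com/spec/v1" line. The helper names are hypothetical, and the path assumes the commands above were run from the current directory.

```python
import os

# Every Git LFS pointer file starts with this line.
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path):
    """True if the file is a Git LFS pointer stub rather than real content."""
    with open(path, "rb") as handle:
        return handle.read(len(LFS_HEADER)) == LFS_HEADER


def describe(path):
    """Summarize a file as 'missing', 'LFS pointer', or its size in bytes."""
    if not os.path.isfile(path):
        return "missing"
    if is_lfs_pointer(path):
        return "LFS pointer"
    return f"{os.path.getsize(path)} bytes"


if __name__ == "__main__":
    # Assumed location of the downloaded weights, relative to the clone directory.
    print(describe("gpt2-large/pytorch_model.bin"))
```

If the result is "LFS pointer" or "missing", the wget step did not complete and should be repeated before starting the app.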

Install the dependencies required by write-with-transformer.

cd ~/write-with-transformer

pip install --ignore-installed pyyaml==5.1

pip install -r requirements.txt

After the environment is deployed, you can run the web version of the app and experience writing with the help of GPT-2. GPT-2 currently supports text generation in English only.

cd ~/write-with-transformer

sed -i 's?"gpt2-large"?"../gpt2-large"?g' app.py

sed -i '34s/10/32/;34s/30/120/' app.py
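The two sed commands point the app at the local model directory and change two numeric values on line 34 of app.py. The sketch below mirrors those edits in Python so their effect can be previewed without touching the file. The article does not say what line 34 contains, so the sample line in the usage note is purely hypothetical, as is the helper name patch_app.

```python
def patch_app(source, line_no=34):
    """Apply the same edits as the two sed commands to the text of app.py."""
    # s?"gpt2-large"?"../gpt2-large"?g : replace every quoted model name.
    source = source.replace('"gpt2-large"', '"../gpt2-large"')
    lines = source.split("\n")
    if len(lines) >= line_no:
        # 34s/10/32/;34s/30/120/ : first "10", then first "30", on that line only.
        line = lines[line_no - 1]
        line = line.replace("10", "32", 1)
        line = line.replace("30", "120", 1)
        lines[line_no - 1] = line
    return "\n".join(lines)


if __name__ == "__main__":
    # Preview the patched text of a local app.py without writing it back.
    with open("app.py", encoding="utf-8") as handle:
        print(patch_app(handle.read()))
```

For instance, if line 34 were the made-up text f(10, 30, 10), the result would be f(32, 120, 10): each substitution touches only the first match on that line.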

streamlit run app.py --server.port 7860

Once the app has started, the command output includes a line beginning with External URL: http://
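To confirm from the instance itself that the app is listening, a plain TCP connection test is enough. This is a minimal sketch with a hypothetical helper port_open; the port 7860 comes from the --server.port option above, and the host 127.0.0.1 is an assumption that the check runs on the same machine.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 7860 is the port passed to streamlit via --server.port.
    print("app reachable:", port_open("127.0.0.1", 7860))
```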


Original link: https://click.aliyun.com/m/1000379727/

This article is original content of Alibaba Cloud and may not be reproduced without permission.

The above is the detailed content of Using GPU instances to demonstrate Alibaba Cloud Linux 3's support for the AI ecosystem. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

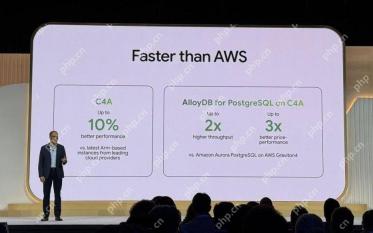

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Dreamweaver CS6

Visual web development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 Linux new version

SublimeText3 Linux latest version

SublimeText3 Mac version

God-level code editing software (SublimeText3)