
OpenAI updates GPT-4 and other models, adds new API function calls, and reduces prices by up to 75%

- Reposted by 王林

- 2023-06-15 22:10:08

A few days ago, during his global speaking tour, OpenAI CEO Sam Altman outlined the company's near-term roadmap, which falls into two stages. In 2023 the top priority is a cheaper and faster GPT-4, longer context windows, and so on; in 2024 the focus shifts to multimodality.

OpenAI's 2023 goals are being met one by one. In the few short months since the launch of ChatGPT, developers have built incredible applications on top of models such as GPT-3.5 Turbo and GPT-4. On June 13, local time, OpenAI released function calling and other API updates, including:

- A new function calling capability in the Chat Completions API, which lets the model decide when a function should be called and generate a JSON object describing that call;

- Updated and more controllable versions of GPT-4 and GPT-3.5 Turbo;

- A 16k-context version of gpt-3.5-turbo (the standard version has a 4k context);

- The cost of the most advanced embeddings model has been reduced by 75%;

- gpt-3.5-turbo input token cost is reduced by 25%;

- A deprecation timeline for the gpt-3.5-turbo-0301 and gpt-4-0314 models.

OpenAI said that the GPT-4 and GPT-3.5 Turbo models in the API now support calling user-defined functions, allowing the models to use tools that developers design. Prices have also been lowered, and OpenAI has released several new model versions (including a 16k-context GPT-3.5 Turbo).

Function calling

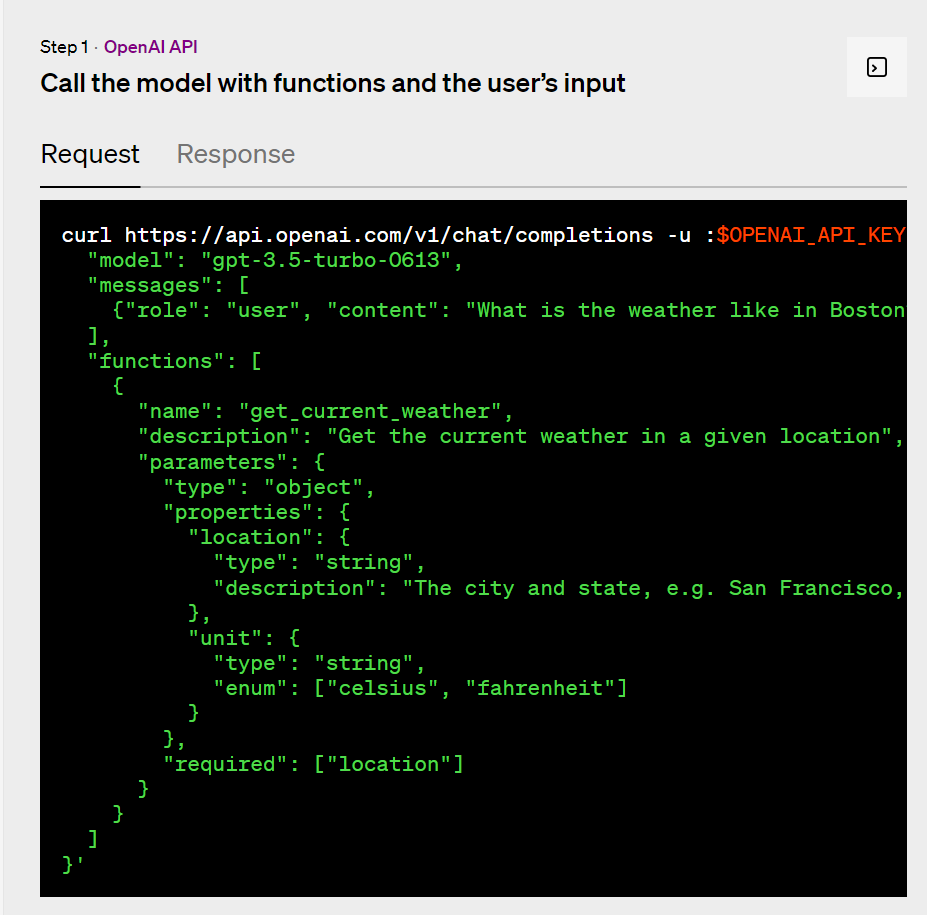

Developers can now describe functions to gpt-4-0613 and gpt-3.5-turbo-0613 and have the model intelligently choose to output a JSON object containing the arguments needed to call those functions. This is a new way to connect GPT's capabilities to external tools and APIs more reliably.
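To make this concrete, here is a minimal Python sketch of how a function might be described to the model. It assumes the request shape OpenAI documented at launch: a `functions` list of JSON Schema descriptions plus a `function_call` setting in the body of a Chat Completions request. The description strings are illustrative, and later API versions moved this to a `tools` parameter, so treat it as a sketch rather than a current reference. No network call is made; the script only builds and prints the request body.

```python
import json

# A JSON Schema description of get_current_weather, in the shape the June 2023
# `functions` parameter expected: a name, a description, and a `parameters` object.
GET_CURRENT_WEATHER = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "The city and state, e.g. Boston, MA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}


def build_request(user_message: str, functions: list) -> dict:
    """Assemble a Chat Completions request body that offers `functions` to the model."""
    return {
        "model": "gpt-3.5-turbo-0613",
        "messages": [{"role": "user", "content": user_message}],
        "functions": functions,
        # "auto" lets the model choose between a plain answer and a function call.
        "function_call": "auto",
    }


if __name__ == "__main__":
    body = build_request("What's the weather like in Boston?", [GET_CURRENT_WEATHER])
    # This JSON is what would be POSTed to the Chat Completions endpoint.
    print(json.dumps(body, indent=2))
```

The model never sees the Python code behind a function, only this schema, so the quality of the names and descriptions directly affects when and how it chooses to call it.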

These models have been fine-tuned both to detect when a function needs to be called (depending on the user's input) and to respond with JSON that adheres to the function signature. Function calling lets developers get structured data back from the model more reliably. For example, a developer could:

- Create a chatbot that answers questions by calling an external tool:

For example, a query such as "Send Anya an email and ask her if she wants coffee next Friday" is converted into the function call send_email(to: string, body: string), and "What's the weather like in Boston?" is converted into get_current_weather(location: string, unit: 'celsius' | 'fahrenheit').

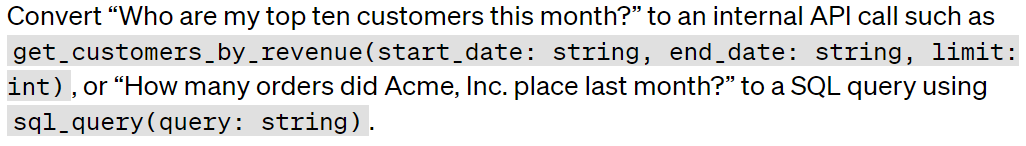
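The model only proposes a call; the application still has to run it. The sketch below assumes the June 2023 message format (an assistant message whose function_call holds a name and a JSON-encoded arguments string, with results sent back as a message with role "function") and shows one way to dispatch the call and build the messages for the model's follow-up answer. send_email, get_current_weather, TOOLS, and handle_reply are hypothetical local stand-ins, and the assistant reply is canned rather than real API output.

```python
import json
from typing import Any, Callable


# Hypothetical local tools; a real app would call a mail server or a weather API here.
def send_email(to: str, body: str) -> dict:
    return {"status": "queued", "to": to}


def get_current_weather(location: str, unit: str = "celsius") -> dict:
    return {"location": location, "temperature": 22, "unit": unit, "forecast": "sunny"}


TOOLS: dict[str, Callable[..., Any]] = {
    "send_email": send_email,
    "get_current_weather": get_current_weather,
}


def handle_reply(messages: list[dict], reply: dict) -> list[dict]:
    """Run any function the assistant requested and return the updated history
    to send back in the next request (the input list is not modified)."""
    messages = messages + [reply]
    call = reply.get("function_call")
    if call is None:
        return messages  # plain-text answer, nothing to execute
    func = TOOLS.get(call["name"])
    if func is None:
        raise ValueError(f"model requested unknown function {call['name']!r}")
    # `arguments` is a JSON string written by the model; json.loads raises
    # json.JSONDecodeError if it is malformed.
    kwargs = json.loads(call["arguments"])
    result = func(**kwargs)
    # The result goes back to the model as a message with role "function".
    messages.append({"role": "function", "name": call["name"], "content": json.dumps(result)})
    return messages


history = [{"role": "user", "content": "What's the weather like in Boston?"}]
# Canned assistant reply in the June 2023 response shape (illustrative, not real output).
reply = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "get_current_weather", "arguments": '{"location": "Boston, MA"}'},
}
for message in handle_reply(history, reply):
    print(message)
```

Sending the returned list in a second request is what lets the model turn the raw function result into a natural-language reply.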

- Convert natural language into API calls or database queries:

For example, "Who are my top ten customers this month?" is converted into the internal API call get_customers_by_revenue(start_date: string, end_date: string, limit: int), and "How many orders did Acme place last month?" is converted into the SQL query call sql_query(query: string).

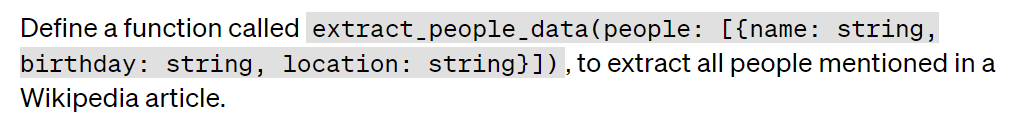
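Because the model returns arguments rather than executing anything, the application can validate them before they reach an internal API. A minimal sketch, assuming a hypothetical in-memory revenue table in place of a real service and ISO-format dates in the model's output; get_customers_by_revenue follows the signature in the example above, while its body, call_from_model, the limit bounds, and the data are illustrative.

```python
import json
from datetime import date

# Hypothetical revenue records standing in for an internal billing service.
REVENUE = [
    ("Acme", date(2023, 6, 2), 1200.0),
    ("Globex", date(2023, 6, 9), 800.0),
    ("Acme", date(2023, 6, 20), 300.0),
    ("Initech", date(2023, 5, 28), 5000.0),  # falls in May
]


def get_customers_by_revenue(start_date: str, end_date: str, limit: int) -> list[str]:
    """Customer names ranked by total revenue within [start_date, end_date]."""
    # fromisoformat raises ValueError if the model produced a non-ISO date.
    start, end = date.fromisoformat(start_date), date.fromisoformat(end_date)
    totals: dict[str, float] = {}
    for customer, day, amount in REVENUE:
        if start <= day <= end:
            totals[customer] = totals.get(customer, 0.0) + amount
    return sorted(totals, key=totals.get, reverse=True)[:limit]


def call_from_model(arguments: str) -> list[str]:
    """Validate the model's JSON arguments before touching the internal API."""
    args = json.loads(arguments)
    limit = args.get("limit", 10)
    # Reject non-integers (bool is a subclass of int in Python) and absurd limits.
    if isinstance(limit, bool) or not isinstance(limit, int) or not 1 <= limit <= 100:
        raise ValueError(f"limit out of range: {limit!r}")
    return get_customers_by_revenue(args["start_date"], args["end_date"], limit)


# Arguments a model might produce for "Who are my top ten customers this month?"
print(call_from_model('{"start_date": "2023-06-01", "end_date": "2023-06-30", "limit": 10}'))
# ['Acme', 'Globex']
```

Note that the model can only resolve a relative phrase like "this month" to concrete dates if the current date is available to it, for example in the conversation.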

- Extract structured data from text:

For example, define a function named extract_people_data(people: [{name: string, birthday: string, location: string}]) to extract all the people mentioned in a Wikipedia article.

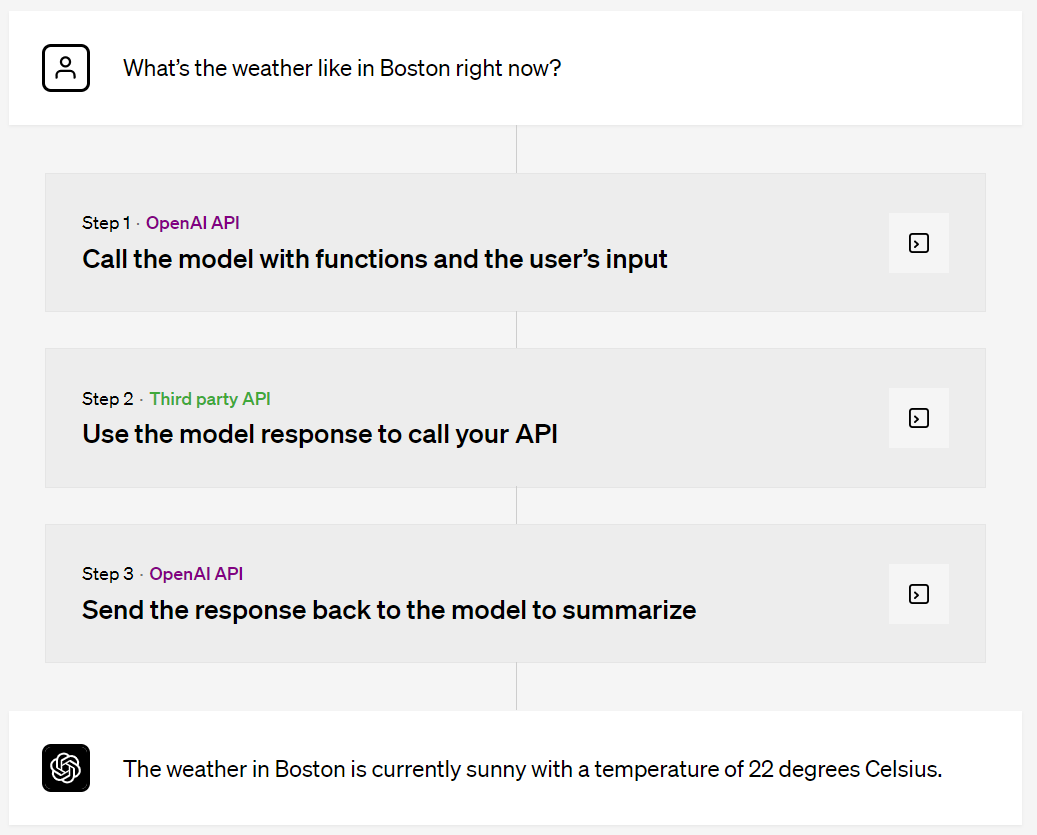
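The nested people parameter maps onto a JSON Schema array of objects. The sketch below assumes the June 2023 functions format; only the function and field names come from the example above, while the description, the choice to require only name, the Person dataclass, parse_people, and the sample arguments are illustrative.

```python
import json
from dataclasses import dataclass

# Schema for extract_people_data: an object whose `people` field is an array of objects.
EXTRACT_PEOPLE_DATA = {
    "name": "extract_people_data",
    "description": "Extract every person mentioned in the supplied text",
    "parameters": {
        "type": "object",
        "properties": {
            "people": {
                "type": "array",
                "items": {
                    "type": "object",
                    "properties": {
                        "name": {"type": "string"},
                        "birthday": {"type": "string"},
                        "location": {"type": "string"},
                    },
                    # Only the name is required, since many articles omit the rest.
                    "required": ["name"],
                },
            }
        },
        "required": ["people"],
    },
}


@dataclass
class Person:
    name: str
    birthday: str = ""
    location: str = ""


def parse_people(arguments: str) -> list[Person]:
    """Turn the model's JSON `arguments` string into Person records."""
    payload = json.loads(arguments)
    return [
        Person(p["name"], p.get("birthday", ""), p.get("location", ""))
        for p in payload.get("people", [])
    ]


# Illustrative arguments a model might return for a short biography.
args = '{"people": [{"name": "Ada Lovelace", "birthday": "1815-12-10", "location": "London"}]}'
print(parse_people(args))
# [Person(name='Ada Lovelace', birthday='1815-12-10', location='London')]
```

Here the "function" is never executed at all: it exists only so the model returns its extraction in a predictable shape.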

Function call example

For example, a user asks "What's the weather like in Boston right now?" After a series of steps, the model's final answer is: "The weather in Boston is currently sunny with a temperature of 22 degrees Celsius." The following figure corresponds to the first step in the above figure, including the request and the response:

First up is GPT-4:

Then comes GPT-3.5 Turbo:

Model deprecation

The initial versions of gpt-4 and gpt-3.5-turbo released in March will be upgraded and deprecated. Applications that use the stable model names gpt-3.5-turbo, gpt-4, and gpt-4-32k will be automatically upgraded to the new models on June 27. To compare model performance across versions, you can use OpenAI's Evals library, which supports both public and private evals. Developers who need more time to transition can keep using the older models by specifying gpt-3.5-turbo-0301, gpt-4-0314, or gpt-4-32k-0314, but requests to these models will fail after September 13. For more on model deprecations, see: https://platform.openai.com/docs/deprecations/

This update also lowers prices. text-embedding-ada-002 is the most popular embeddings model, and its cost is now reduced by 75% to $0.0001 per 1K tokens.

Finally, there is GPT-3.5 Turbo, the model that draws the most attention and that powers ChatGPT for millions of users. Its input-token cost is now reduced by 25%: developers pay just $0.0015 per 1K input tokens and $0.002 per 1K output tokens, which works out to roughly 700 pages per dollar.
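The per-token prices quoted here turn into per-request costs with simple arithmetic. This sketch hard-codes the June 2023 prices from this article (they may have changed since) and uses Decimal to avoid floating-point rounding; the dictionary layout and helper names are illustrative.

```python
from decimal import Decimal

# USD prices per 1K tokens as quoted in this article (June 2023).
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"input": Decimal("0.0015"), "output": Decimal("0.002")},
    # Embedding requests have no output tokens, so only input is billed.
    "text-embedding-ada-002": {"input": Decimal("0.0001"), "output": Decimal("0")},
}


def request_cost(model: str, input_tokens: int, output_tokens: int = 0) -> Decimal:
    """Cost in USD of one request, using exact decimal arithmetic."""
    price = PRICES_PER_1K[model]
    return (price["input"] * input_tokens + price["output"] * output_tokens) / 1000


def input_tokens_per_dollar(model: str) -> int:
    """How many whole input tokens one dollar buys at the listed price."""
    return int(Decimal(1000) / PRICES_PER_1K[model]["input"])


print(request_cost("gpt-3.5-turbo", 1000, 500))        # 1K tokens in, 500 out
print(request_cost("text-embedding-ada-002", 1_000_000))  # embedding 1M tokens
print(input_tokens_per_dollar("gpt-3.5-turbo"))
```

At these prices a chat request with 1,000 input and 500 output tokens costs a quarter of a cent, and embedding a million tokens costs ten cents.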

