The amount of text data used to train Google's PaLM 2 is nearly five times that of the previous generation

According to a report on May 17, Google launched its latest large language model, PaLM 2, at its 2023 I/O developer conference last week. Internal company documents show that the new model was trained on almost five times as much text data as its predecessor, released in 2022.

Google's newly released PaLM 2 can reportedly perform more advanced coding, math and creative writing tasks. According to the internal documents, PaLM 2 was trained on 3.6 trillion tokens.

A token is a string of text. The sentences and paragraphs used to train a model are segmented into these strings, which are usually whole words or pieces of words, and each one is called a token. This is an important part of training large language models, which learn to predict which word will come next in a sequence.
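To make the idea of tokens and next-word prediction concrete, here is a minimal toy sketch. It assumes a simple word-and-punctuation splitter and a bigram frequency table; production models such as PaLM use learned subword tokenizers and neural networks, so the function names and approach below are purely illustrative, not Google's method.

```python
from __future__ import annotations

import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word and punctuation tokens (toy stand-in for a subword tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def train_bigram(tokens: list[str]) -> dict[str, Counter]:
    """For each token, count which tokens immediately follow it in the training sequence."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], token: str) -> str | None:
    """Return the most frequent follower of `token`, or None if the token was never seen."""
    counts = model.get(token)  # .get() does not create new entries in the defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


tokens = tokenize("the model reads the text. the model predicts the next word.")
model = train_bigram(tokens)
print(len(tokens), predict_next(model, "the"))  # prints: 13 model
```

The "training" here is just counting: after seeing "the" followed by "model" twice, the toy model predicts "model" as the next token. Real language models learn far richer statistics over trillions of such tokens.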

The previous generation of the model, PaLM, released by Google in 2022, was trained on 780 billion tokens.
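The "nearly five times" figure in the headline follows directly from the two reported token counts. A quick check, using only the numbers attributed to the internal documents:

```python
# Training-token counts as reported from Google's internal documents
palm_tokens = 780e9     # PaLM (2022): 780 billion tokens
palm2_tokens = 3.6e12   # PaLM 2 (2023): 3.6 trillion tokens

ratio = palm2_tokens / palm_tokens
print(f"PaLM 2 was trained on {ratio:.2f}x as many tokens as PaLM")  # prints 4.62x
```

A ratio of about 4.6 is what the report rounds to "almost five times".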

Although Google has been keen to show off its prowess in artificial intelligence, demonstrating how the technology can be embedded in search, email, word processing and spreadsheets, it has been reluctant to disclose the size of its training data or other details. Microsoft-backed OpenAI has likewise kept the details of its newly released large language model, GPT-4, secret.

Both companies say they are withholding this information because of fierce competition in the artificial intelligence industry. Google and OpenAI are both vying for users who may prefer to look up information with chatbots rather than traditional search engines.

But as competition in the field of artificial intelligence heats up, the research community is demanding more transparency.

Since launching PaLM 2, Google has said that the new model is smaller than its previous large language models, which means the company's technology is becoming more efficient while handling more complex tasks. Parameters are often used to describe a language model's complexity. According to the internal documents, PaLM 2 has 340 billion parameters, compared with 540 billion for the original PaLM.

Google had no immediate comment.

In a blog post about PaLM 2, Google said that the new model uses a "new technique" called "compute-optimal scaling," which makes PaLM 2 "more efficient, with better overall performance, such as faster inference, fewer parameters to serve, and lower serving costs."
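Google has not published the details of its scaling method, but the reported figures hint at the trade-off involved: PaLM 2 has fewer parameters yet saw far more training tokens. The sketch below compares tokens per parameter for the two models and estimates training compute with the widely used rule of thumb C ≈ 6·N·D FLOPs (N parameters, D tokens). That approximation comes from the scaling-law literature and is an assumption here, not a figure Google has disclosed; the `Model` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    """Hypothetical container for a model's reported size and training data."""
    name: str
    params: float  # N: number of parameters
    tokens: float  # D: number of training tokens

    def tokens_per_param(self) -> float:
        """How many training tokens the model saw per parameter."""
        return self.tokens / self.params

    def approx_train_flops(self) -> float:
        """Rough training compute via the C ≈ 6·N·D rule of thumb (an assumption)."""
        return 6 * self.params * self.tokens


# Figures as reported from Google's internal documents
palm = Model("PaLM", params=540e9, tokens=780e9)
palm2 = Model("PaLM 2", params=340e9, tokens=3.6e12)

for m in (palm, palm2):
    print(f"{m.name}: {m.tokens_per_param():.1f} tokens/param, "
          f"~{m.approx_train_flops():.1e} FLOPs")
```

Running it prints about 1.4 tokens per parameter (~2.5e+24 FLOPs) for PaLM and about 10.6 tokens per parameter (~7.3e+24 FLOPs) for PaLM 2. Under these assumptions, the newer model shifts its budget from model size toward training data, which is consistent with Google's description of a smaller but more efficient model.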

When releasing PaLM 2, Google said the new model was trained on 100 languages and can perform a wide range of tasks. PaLM 2 already powers 25 features and products, including Google's experimental chatbot Bard. It comes in four sizes, from smallest to largest: Gecko, Otter, Bison and Unicorn.

According to information Google has disclosed publicly, PaLM 2 is more powerful than any existing model. Facebook launched a large language model called LLaMA in February this year; it was trained on 1.4 trillion tokens. OpenAI disclosed its training scale when it released GPT-3, saying at the time that the model had been trained on 300 billion tokens. In March this year, OpenAI released its new model, GPT-4, and said it shows "human-level" performance on many professional tests.

According to the latest documents, the language model launched by Google two years ago was trained on 1.5 trillion tokens.

As new generative AI applications quickly become mainstream in the technology industry, the controversy surrounding the underlying technology is becoming increasingly fierce.

In February of this year, El Mahdi El Mhamdi, a senior scientist in Google's research division, resigned over the company's lack of transparency. On Tuesday, OpenAI CEO Sam Altman testified at a hearing of the U.S. Senate Judiciary Subcommittee on Privacy, Technology and the Law and agreed that a new system is needed to deal with artificial intelligence.

“For a very new technology, we need a new framework,” Altman said. “Of course, companies like ours have a lot of responsibility for the tools they put out.”

The above is the detailed content of The amount of text data used for training Google PaLM 2 is nearly 5 times that of the original generation. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

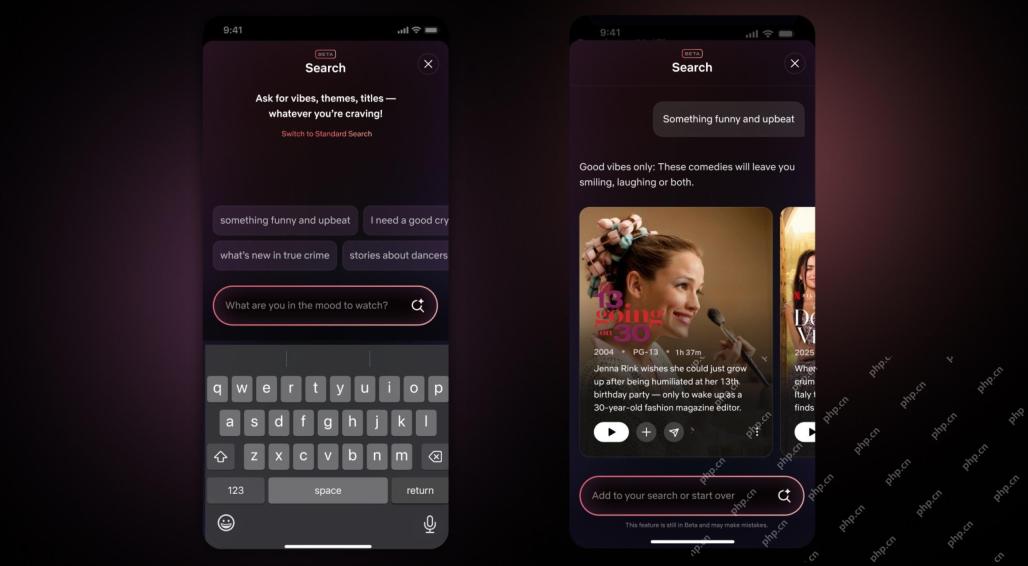

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Zend Studio 13.0.1

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft