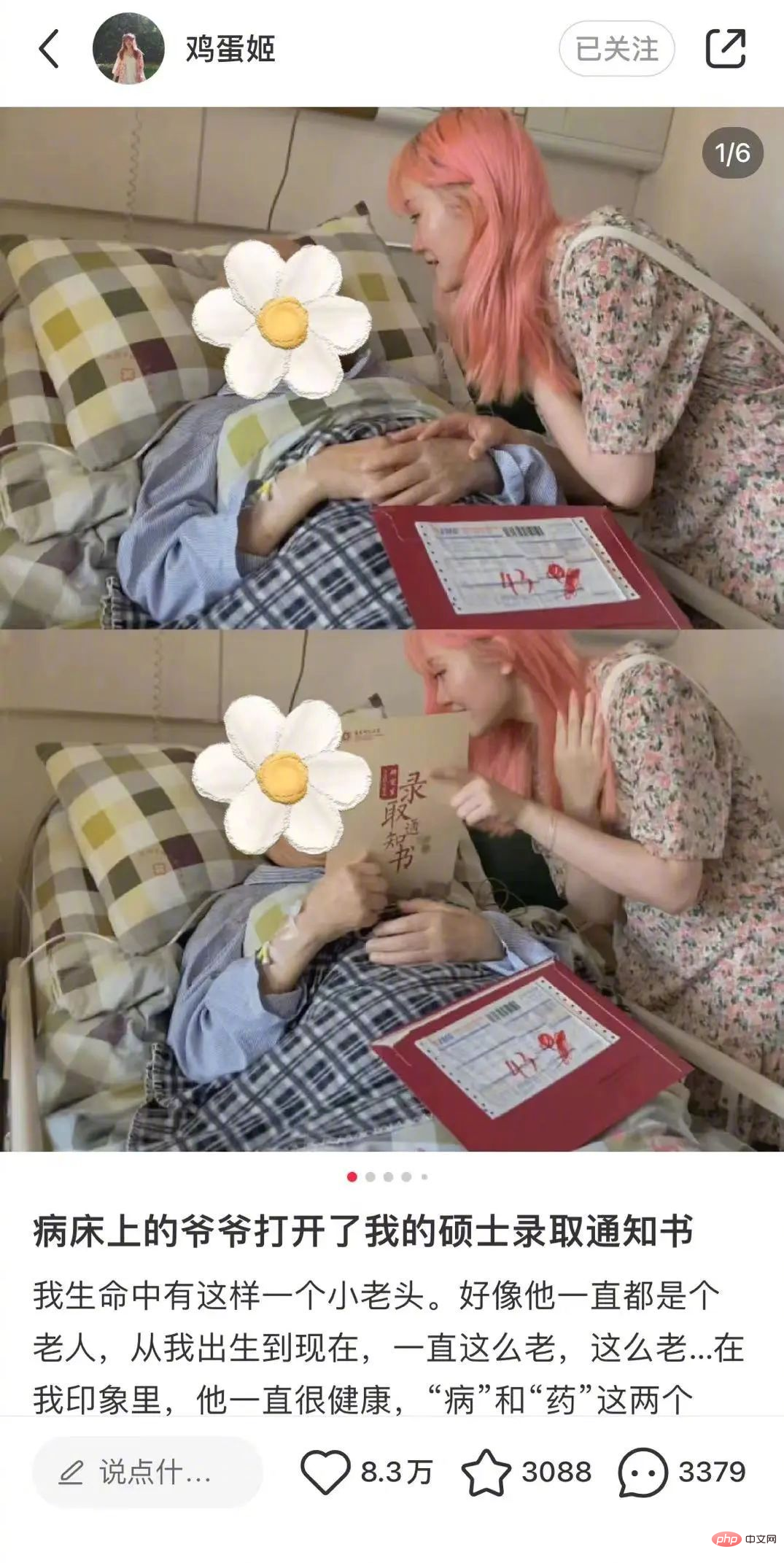

A young woman born in 1995 took her own life after being harassed over a post she had shared online.

After she published this heartwarming post, its author, Zheng Linghua, faced a flood of rumors and abuse.

Some people questioned her identity and, simply because her hair was pink, spread rumors that she was a "barmaid"; others spread rumors of an "old-and-young romance" and called her a "cha-flow" (a derogatory slang term); still others branded her a "liar" who was exploiting her grandfather's illness to make money.

It was ugly behavior. Although many media outlets debunked the rumors, Zheng Linghua never received an apology. Even after she took legal action to defend her rights, the harassment continued.

In the end, Zheng Linghua developed depression and, not long ago, took her own life.

Incidents like this have happened many times online. The vicious language that pervades the Internet is not only repugnant; it can even cost people their lives. This behavior has its own name: cyber violence.

So now that AI can converse fluently with humans, can it help identify cyber violence?

## Overcoming two major challenges: F-scores above 90% in identifying abusive comments

Most Internet platforms already run dedicated systems for detecting abusive speech, but most of these systems simply detect and delete keywords, which is clearly not smart enough: with a little care, anyone can get around them.
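To make the weakness concrete, here is a minimal Python sketch of the kind of keyword filter described above. The blocklist, the function name `keyword_filter`, and the sample comments are all hypothetical; real platform systems are more elaborate, but they fail in the same two ways: lightly obfuscated words slip through, and a comment that merely mentions a word (here, one defending the target) is flagged regardless of context.

```python
import re

# Hypothetical blocklist; real platforms maintain much larger, curated lists.
BLOCKLIST = {"liar", "idiot"}

def keyword_filter(comment: str) -> bool:
    """Return True if any lowercase alphabetic token is on the blocklist."""
    tokens = re.findall(r"[a-z]+", comment.lower())
    return any(tok in BLOCKLIST for tok in tokens)

print(keyword_filter("You are a liar"))               # True: exact keyword match
print(keyword_filter("You are a l1ar"))               # False: one swapped character evades it
print(keyword_filter("You are a l-i-a-r"))            # False: punctuation splits the word
print(keyword_filter("Calling her a liar is cruel"))  # True: flagged despite defending her
```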

To identify cyber violence accurately, a system has to solve two problems:

- How can abusive remarks be identified accurately, taking their context into account?
- How can abusive comments be identified efficiently?

The first concern is context. Messages on the Internet are usually fragmented, and cyber violence often builds up over the course of a conversation, escalating gradually until it leads to serious consequences.
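A rough sketch of why conversation-level context matters: in the made-up thread below, no single message reaches the alert threshold on its own, but a sliding window over recent messages catches the escalation. The word-counting scorer `message_score`, its word list, and the window and threshold values are hypothetical placeholders; a real system would use a trained classifier in place of the scorer.

```python
from collections import deque

# Placeholder scorer (hypothetical): counts words from a tiny, made-up list.
# A real system would replace this with a trained classifier.
HOSTILE_WORDS = {"fake", "liar", "shameless"}

def message_score(text: str) -> int:
    return sum(w.strip(".,!?").lower() in HOSTILE_WORDS for w in text.split())

def flag_thread(messages, window=2, threshold=2):
    """Return indices where the summed score of the last `window` messages
    reaches `threshold`, i.e. where the conversation as a whole turns hostile."""
    recent = deque(maxlen=window)  # automatically drops the oldest score
    flagged = []
    for i, msg in enumerate(messages):
        recent.append(message_score(msg))
        if sum(recent) >= threshold:
            flagged.append(i)
    return flagged

thread = [
    "Nice photo with your grandpa.",
    "Pink hair? Looks fake.",
    "Probably a liar too.",
    "Good luck with your studies!",
]
print(flag_thread(thread))  # [2]: messages 1 and 2 together cross the threshold
```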

The second concern is speed. The volume of data on the Internet is enormous; if detection takes too long, the system has no practical value and will not be adopted.

A team of researchers in the UK has developed a new AI model, BiCapsHate, that addresses both challenges. The results were published on January 19 in a paper in "IEEE Transactions on Computational Social Systems".

The model is a five-layer deep neural network: an input layer that takes in the text; an embedding layer that maps the text to a numerical representation; a BiCaps layer that learns sequential and linguistic context representations; a dense layer that prepares the features for final classification; and an output layer that produces the result.
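To show how the five layers hand data to one another, here is a toy, pure-Python pipeline with one function per layer. It is not the BiCapsHate model: the vocabulary, the 4-dimensional random embeddings, and the untrained weights are invented for illustration, and layer 3 substitutes simple forward and backward running averages for the capsule-based BiCaps layer. The output is therefore an arbitrary number between 0 and 1; only the data flow is meant to be illustrative.

```python
import math
import random

DIM = 4                 # embedding size (arbitrary, for illustration)
rng = random.Random(0)  # fixed seed so the toy weights are reproducible

def input_layer(text):
    """Layer 1: turn raw text into tokens."""
    return text.lower().split()

# Hypothetical toy vocabulary with random (untrained) embeddings.
VOCAB = ["she", "is", "a", "liar", "congratulations", "on", "your", "offer"]
EMBED = {w: [rng.uniform(-1, 1) for _ in range(DIM)] for w in VOCAB}

def embedding_layer(tokens):
    """Layer 2: map each token to a vector; unknown tokens get zeros."""
    return [EMBED.get(t, [0.0] * DIM) for t in tokens]

def running_means(vectors):
    """Mean of all vectors seen so far, at each position."""
    out, total = [], [0.0] * DIM
    for i, v in enumerate(vectors, start=1):
        total = [a + b for a, b in zip(total, v)]
        out.append([x / i for x in total])
    return out

def bidirectional_layer(vectors):
    """Layer 3 (stand-in for BiCaps): at each position, concatenate context
    from the left (fwd) and from the right (bwd), then average over positions."""
    fwd = running_means(vectors)
    bwd = running_means(vectors[::-1])[::-1]
    states = [f + b for f, b in zip(fwd, bwd)]  # list concat -> 2*DIM features
    return [sum(col) / len(states) for col in zip(*states)]

W_DENSE = [rng.uniform(-1, 1) for _ in range(2 * DIM)]

def dense_layer(features):
    """Layer 4: weighted sum of the features (a single unit, for brevity)."""
    return sum(w * x for w, x in zip(W_DENSE, features))

def output_layer(z):
    """Layer 5: sigmoid, giving a score in (0, 1) for the 'hateful' class."""
    return 1 / (1 + math.exp(-z))

def predict(text):
    tokens = input_layer(text)
    return output_layer(dense_layer(bidirectional_layer(embedding_layer(tokens))))

print(predict("She is a liar"))  # some value in (0, 1); weights are untrained
```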

The BiCaps layer is the key component: using capsule networks, it captures context in both directions, from the text before and after each position in the input. The model is further enriched by a large set of hand-crafted shallow and deep auxiliary features, including features drawn from the Hatebase lexicon. The researchers ran extensive experiments on five benchmark datasets to demonstrate the effectiveness of BiCapsHate. Overall, it outperforms existing state-of-the-art methods, including fBERT, HateBERT and ToxicBERT.
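Capsule networks replace scalar neurons with vectors whose length encodes how strongly a feature is present. As a sketch of the general mechanism, the code below implements the squash non-linearity and routing-by-agreement from Sabour et al. (2017). This standard formulation is assumed only for illustration; the BiCapsHate paper's BiCaps layer may use a different routing variant, and the prediction vectors in the example are made up.

```python
import math

def squash(s):
    """Capsule non-linearity: keeps the direction, maps the length into [0, 1)."""
    sq = sum(x * x for x in s)
    if sq == 0:
        return [0.0] * len(s)
    scale = sq / (1 + sq) / math.sqrt(sq)
    return [scale * x for x in s]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dynamic_routing(u_hat, iterations=3):
    """Routing-by-agreement. u_hat[i][j] is input capsule i's prediction
    vector for output capsule j; returns the output capsule vectors."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits
    v = []
    for _ in range(iterations):
        c = [softmax(row) for row in b]  # coupling coefficients per input capsule
        v = []
        for j in range(n_out):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)]
            v.append(squash(s_j))
        # Raise the logit where an input's prediction agrees with the output.
        for i in range(n_in):
            for j in range(n_out):
                b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v

# Made-up predictions: all three inputs agree on output 0, disagree on output 1.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, -1.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
]
v = dynamic_routing(u_hat)
print([round(math.hypot(*vec), 3) for vec in v])
```

Output capsule 0, on which the inputs agree, ends up with the longer vector, which is how a capsule signals that a feature is present.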

BiCapsHate achieved F-scores of 94% on balanced datasets and 92% on imbalanced ones.
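The F-score (commonly F1, the harmonic mean of precision and recall) is reported instead of plain accuracy because hateful comments are usually rare, and accuracy rewards a model that never flags anything. The sketch below illustrates this with hypothetical counts; it assumes the standard binary F1, whereas the paper may average F-scores across classes.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = harmonic mean of precision and recall = 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    return (tp + tn) / (tp + fp + fn + tn)

# Hypothetical imbalanced test set: 50 hateful comments, 950 benign ones.
# A "model" that labels everything benign never flags anything:
print(accuracy(tp=0, fp=0, fn=50, tn=950))  # 0.95, which looks impressive
print(f1_score(tp=0, fp=0, fn=50))          # 0.0, which exposes the problem

# A model that catches 45 of the 50 with 5 false alarms:
print(round(f1_score(tp=45, fp=5, fn=5), 2))  # 0.9
```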

## Fast, and able to run on a CPU

Tarique Anwar, a lecturer in the Department of Computer Science at the University of York, took part in the research. He noted that online debates often produce negative, hateful and abusive trolling comments that existing content moderation practices on social media platforms cannot keep under control.

"In addition, online hate speech sometimes spills over into the real world, leading to crime and violence," he said. Anwar added that there have been several instances of online hate speech leading to physical violence and riots.

To help tackle this problem, Anwar's team developed BiCapsHate, with very good results.

As Anwar points out, language can be ambiguous: a word can be positive in one context and negative in another. Earlier models such as HateBERT, ToxicBERT and fBERT capture context to some extent, but in Anwar's view "these are still not good enough."

Another advantage of BiCapsHate is that it can run on limited hardware. "[Other models] require high-end hardware resources like GPUs, and high-end systems for computing," Anwar explained. "In contrast, BiCapsHate... can be executed on a CPU machine, even if you only have 8GB of memory."

It is worth noting that the model has so far been developed and tested only on English text, so it would need to be adapted for other languages. It is also less effective at spotting offensive remarks with a mild or subtle hateful tone than at detecting more intense hate speech.

Next, the researchers hope to explore ways to assess the mental health of users who post hateful content online. If there is concern that a person is mentally unstable and might become physically violent toward others in the real world, early intervention could be considered to reduce the likelihood of that happening.
