Home >Technology peripherals >AI >'Call for innovation: UCL Wang Jun discusses the theory and application prospects of ChatGPT general artificial intelligence'

'Call for innovation: UCL Wang Jun discusses the theory and application prospects of ChatGPT general artificial intelligence'

- WBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBOYWBforward

- 2023-04-24 19:55:071336browse

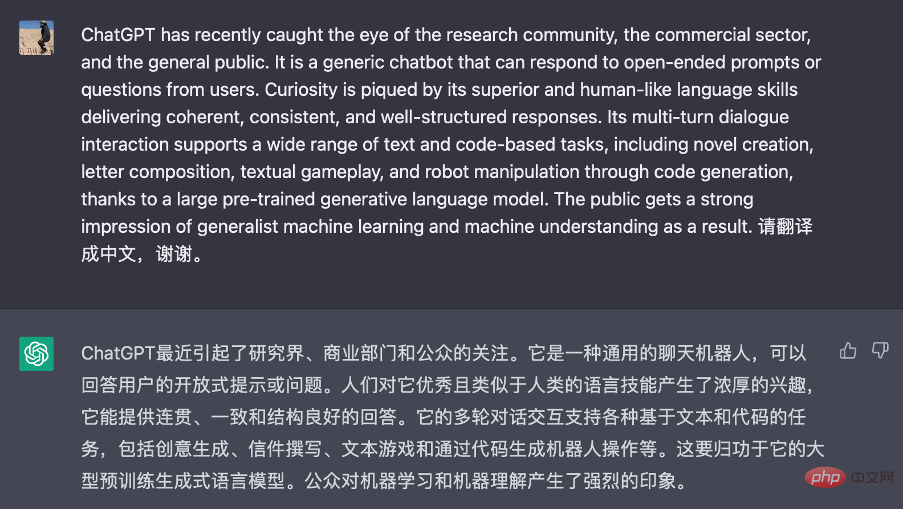

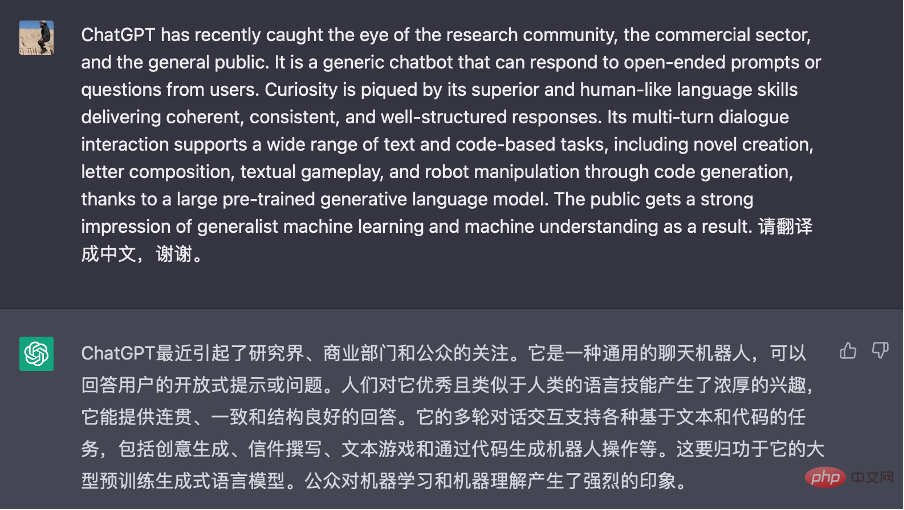

*This article was originally written in English, Chinese translation was completed by ChatGPT, and is presented as it is, with a few ambiguities marked for correction (red and yellow parts). Please see the appendix for the English manuscript. The author found that the inadequacies in the ChatGPT translation are often due to the lack of fluent expression in the original English manuscript. Interested readers should read it carefully.

ChatGPT has recently attracted attention from the research community, business community, and the general public. It is a general chatbot that can answer open-ended prompts or questions from users. Curiosity arose about its remarkable, human-like language skills, which allow it to provide coherent, consistent and well-structured responses. Thanks to a large pre-trained generative language model, its multi-turn conversational interactions support a variety of text- and code-based tasks, including novel creation, word play, and even robot manipulation through code generation. This leads the public to believe that general machine learning and machine understanding will soon be achievable.

If one digs deeper, one may find that when programming code is added as training data, certain reasoning abilities, common sense understanding, and even thought chains (a series of Intermediate reasoning steps) may emerge. While this new discovery is exciting and opens up new possibilities for artificial intelligence research and applications, it raises more questions than it solves. For example, could these emergingemergent abilities serve as early indicators of advanced intelligence, or are they simply naive imitations of human behavior? Could continuing to expand already massive models lead to the creation of artificial general intelligence (AGI), or are these models just ostensibly AI with limited capabilities? If these questions are answered, they could lead to fundamental shifts in the theory and application of artificial intelligence.

Therefore, we urge not only to replicate the success of ChatGPT, but more importantly to drive groundbreaking research and new application development in the following areas of artificial intelligence (this is not an exhaustive list):

1. New machine learning theory, beyond based on TaskSpecific Established Paradigm of Machine Learning

Inductive reasoning is a type of reasoning in which we draw conclusions about the world based on past observations in conclusion. Machine learning can be loosely thought of as inductive reasoning in that it uses past (training) data to improve performance on new tasks. Taking machine translation as an example, a typical machine learning process includes the following four main steps:

1. Define a specific problem, for example needs to translate English sentences into Chinese : E → C,

2. Collect data, for example, sentence pairs {E → C},

3. Train the model, for example using Deep neural network with input {E} and output {C},

4. Apply the model to unknown data points, for example, input a new English sentence E' and output Chinese translation C ' and evaluate the results.

As shown above, traditional machine learning isolates the training of each specific task. Therefore, for each new task, the process must be reset and re-executed from step 1 to step 4, losing all acquired knowledge (data, models, etc.) from previous tasks. For example, if you want to translate French to Chinese, you need a different model.

Under this paradigm, the work of machine learning theorists focuses on understanding the ability of a learning model to generalize from training data to unseen test data. For example, a common question is how many samples are needed in training to achieve a certain error bound on predicting unseen test data. We know that inductive biasbias (i.e., prior knowledge or prior assumptions) is necessary for a learning model to predict outputs that it has not encountered. This is because the output value in the unknown situation is completely arbitrary and it is impossible to solve the problem without making certain assumptions. The famous no free lunch theorem further illustrates that any inductive bias has limitations; it will only work for certain sets of problems, and it may fail elsewhere if the a priori knowledge assumed is incorrect.

Figure 1 Screenshot of ChatGPT used for machine translation. User prompts contain instructions only and do not require demonstration examples.

#While the above theory still applies, the emergence of basic language models may have changed our approach to machine learning. The new machine learning process can be as follows (taking the machine translation problem as an example, see Figure 1):

1. API accesses basic language models trained by others, for example, training includes English/ Models for diverse documents including Chinese paired corpora.

2. Based on few or no examples, design an appropriate textual description (called a prompt) for the task at hand, such as PromptPrompt = {A few examples E ➔ C}.

3. Conditioned on the prompt and given a new test data point, the language model generates an answer, such as appending E’ to the prompt and generating C’ from the model.

4. Interpret the answer as a prediction.

As shown in step 1, the basic language model serves as a universalone-size-fits-all knowledge base. The hints and context provided in step 2 allow the base language model to be customized to solve a specific goal or problem based on a small number of demonstration examples. While the above pipeline is primarily limited to text-based problems, it is reasonable to assume that as base pre-trained models evolve across modalities (see Section 3), it will become the standard for machine learning. This could break down necessary mission barriers and pave the way for Artificial General Intelligence (AGI).

However, it is still in the early stages of determining how the demo examples in the prompt text will operate. From some earlier work, we now understand that the format of the demo sample is more important than the correctness of the labels (e.g., as shown in Figure 1, we do not need to provide translated examples, but Only need to provide language description), but is there any theoretical limit to its adaptability, as stated in the "no free lunch" theorem? Can knowledge about the context and imperatives stated in the prompts be integrated into the model for future use? These questions only begin to be explored. Therefore, we call for new understanding and new principles of this new form of contextual learning and its theoretical limits and properties, such as investigating where the boundaries of generalization lie.

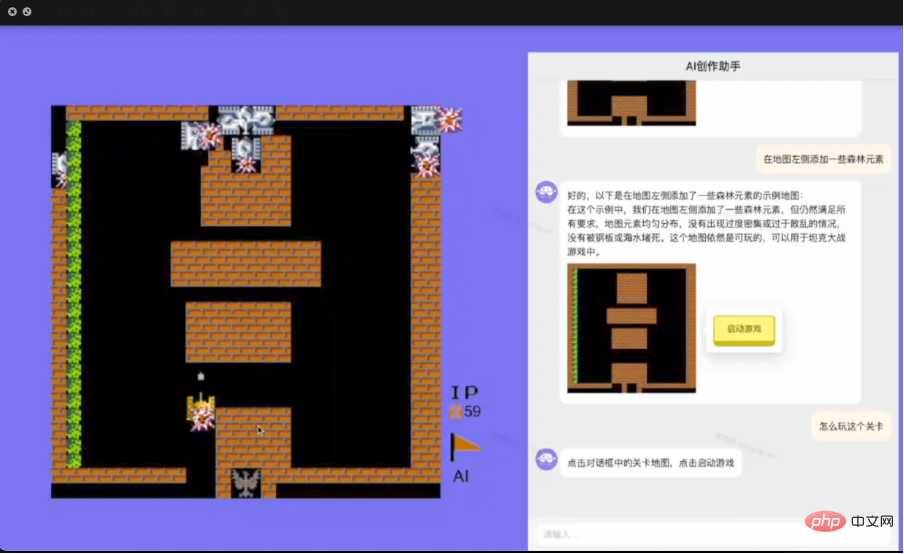

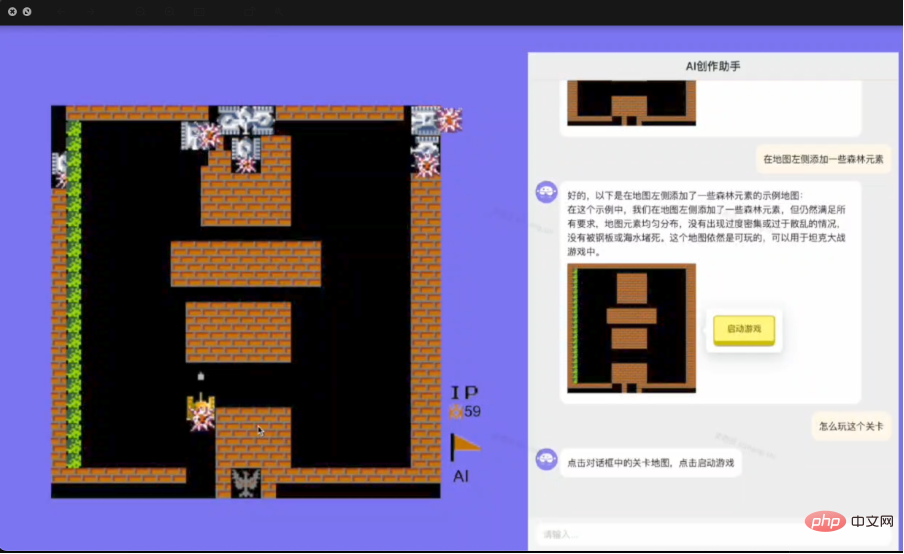

Figure 2 Illustration of Artificial Intelligence Decision Generation (AIGA) used to design computer games.

2. Hone your reasoning skills

We are on the edge of an exciting era in which all of our language and behavioral data can be mined for training (and assimilated by giant computerized models). This is a huge achievement because our entire collective experience and civilization can be digested into a (hidden) knowledge base (in the form of artificial neural networks) for later use. In fact, ChatGPT and the large base model are thought to exhibit some form of reasoning ability and perhaps even some degree of understanding of the state of mind of others (theory of mind). This is achieved through data fitting (masked language token predictions as training signals) and imitation (human behavior). However, whether this entirely data-driven strategy will lead to greater intelligence is debatable.

To illustrate this point, take the example of instructing an agent (agent) how to play chess. Even if the agent (agent) had access to an unlimited amount of human chess-playing data, it would be very difficult to generate a new strategy that is better than the existing data just by imitating the existing strategy. However, using this data, one can build an understanding of the world (e.g., the rules of a game) and use it to "think" (build a simulator in its brain to gather feedback to create better strategies). This highlights the importance of inductive bias; instead of simply using a brute force approach, the learning agent (agent) is required to have a certain world model in order to improve itself.

Therefore, there is an urgent need to deeply study and understand the emerging capabilities of the underlying model. In addition to language skills, we advocate the acquisition of practical reasoning abilities through the study of underlying mechanisms. One promising approach is to draw inspiration from neuroscience and brain science to decipher the mechanisms of human reasoning and advance the development of language models. At the same time, building a solid theory of mind may also require a deep understanding of multi-agent learning and its underlying principles.

3. From AI Generated Content (AIGC) to AI Generated Action (AIGA)

Development of human language The implicit semantics are crucial to the basic language model. How to exploit this is a key topic in general machine learning. For example, once the semantic space is aligned with other media (such as photos, videos, and sounds) or other forms of human and machine behavioral data (such as robot trajectories/actions), we can obtain semantic interpretation capabilities for them at no additional cost. In this way, machine learning (prediction, generation, and decision-making) becomes general and decomposable. However, handling cross-modal alignment is a significant difficulty we face because annotating relationships is labor-intensive. Furthermore, the alignment of human values becomes difficult when many interested parties are in conflict.

One fundamental disadvantage of ChatGPT is that it can only communicate directly with humans. However, once sufficient alignment with the external world is established, the underlying language model should be able to learn how to interact with a wide variety of actors and environments. This is important because it will give its reasoning abilities and language-based semantics broader applications and capabilities beyond just having a conversation. For example, it could be developed into a universal agent (intelligent agent), capable of browsing the Internet, controlling computers, and manipulating robots. Therefore, it is even more important to implement procedures that ensure that the agent (agent) 's responses (usually in the form of generated actions) are safe, reliable, unbiased, and trustworthy.

Figure 2 shows an example of AIGA interacting with a game engine to automate the process of designing a video game.

4. The theory of multi-agent interaction with basic language models

ChatGPT uses context learning and hint engineering to Drive multiple rounds of conversations with a person in a single session, i.e. given a question or prompt, the entire previous conversation (questions and answers) is sent to the system as additional context to build the response. This is a simple conversation-driven Markov Decision Process (MDP) model:

{state = context, action = response, reward = like/dislike rating}.

Although effective, this strategy has the following disadvantages: First, the prompt only provides a description of the user's response, but the user's true intention may not be explicitly stated and must be inferred. Perhaps a powerful model, such as the Partially Observable Markov Decision Process (POMDP) previously proposed for conversational bots, can accurately model hidden user intentions.

Secondly, ChatGPT first targets the generation of fitted language uses language adaptability for training, and then Training/fine-tuning of conversational objectives using human labels. Due to the open nature of the platform, actual user goals and objectives may not align with training/fine-tuning rewards. In order to examine the equilibria and conflicts of interest between humans and agents(agents), it may be worthwhile to use a game theory perspective.

5. New Applications

As demonstrated by ChatGPT, we believe that the basic language model has two unique characteristics, they will become the driving force for future machine learning and basic language model applications. The first is its superior language skills, while the second is its embedded semantic and early reasoning abilities (in the form of human language). As an interface, the former will greatly reduce the entry barrier for applying machine learning, while the latter will significantly promote the application scope of machine learning.

As shown in the new learning process introduced in Part 1, prompts and contextual learning eliminate the bottleneck of data engineering and the effort required to build and train models. Additionally, leveraging reasoning capabilities allows us to automatically break down and solve each sub-task of a difficult task. Therefore, it will significantly change many industries and application areas. In Internet enterprises, conversation-based interfaces are obvious applications for web and mobile search, recommendation systems, and advertising. However, since we are accustomed to keyword-based URL inverted index search systems, change is not easy. People have to be retaught to use longer queries and natural language as queries. Furthermore, underlying language models are often rigid and inflexible. They lack current information about recent events. They often conjure up facts and do not provide retrieval capabilities and verification. Therefore, we need an instant base model that can evolve dynamically over time.

Therefore, we call for the development of new applications, including but not limited to the following areas:

-

Innovation

Noveltip engineering, process and software support. - Model-based web search, recommendation and ad generation; a new business model for conversational advertising.

- Technology for conversation-based IT services, software systems, wireless communications (personalized messaging systems) and customer service systems.

- Generate Robotic Process Automation (RPA) and software testing and validation from underlying language models.

- AI assisted programming.

- A new content generation tool for the creative industry.

-

Integrating language models with Operations Research

Operations Research, enterprise intelligence and optimization are unified. - A way to efficiently and cost-effectively serve large base models in cloud computing.

-

For reinforcement learning, multi-agent learning and other artificial intelligencedecision-making

Develop the basic model of thedomain. - Language-assisted robotics technology.

- Basic models and reasoning for combinatorial optimization, Electronic Design Automation (EDA), and chip design.

About the Author

Wang Jun, Professor of Computer Science at University College London (UCL), co-founder and dean of Shanghai Digital Brain Research Institute, mainly researches decision-making intelligence and large models, including machine learning, reinforcement learning, and multi-agent , data mining, computational advertising, recommendation systems, etc. He has published more than 200 academic papers and two academic monographs, won multiple best paper awards, and led the team to develop the world's first multi-agent decision-making model and the world's first-tier multi-modal decision-making model.

Appendix:

Call for Innovation: Post-ChatGPT Theories of Artificial General Intelligence and Their Applications

ChatGPT has recently caught the eye of the research community, the commercial sector, and the general public. It is a generic chatbot that can respond to open-ended prompts or questions from users. Curiosity is piqued by its superior and human-like language skills delivering coherent, consistent, and well-structured responses. Its multi-turn dialogue interaction supports a wide range of text and code-based tasks, including novel creation, letter composition, textual gameplay, and even robot manipulation through code generation, thanks to a large pre-trained generative language model. This gives the public faith that generalist machine learning and machine understanding are achievable very soon.

If one were to dig deeper, they may discover that when programming code is added as training data, certain reasoning abilities, common sense understanding, and even chain of thought (a series of intermediate reasoning steps) may appear as emergent abilities [1] when models reach a particular size. While the new finding is exciting and opens up new possibilities for AI research and applications, it, however, provokes more questions than it resolves. Can these emergent abilities, for example, serve as an early indicator of higher intelligence, or are they simply naive mimicry of human behavior hidden by data? Would continuing the expansion of already enormous models lead to the birth of artificial general intelligence (AGI), or are these models simply superficially intelligent with constrained capability? If answered, these questions may lead to fundamental shifts in artificial intelligence theory and applications.

We therefore urge not just replicating ChatGPT's successes but most importantly, pushing forward ground-breaking research and novel application development in the following areas of artificial intelligence ( by no means an exhaustive list):

##1.New machine learning theory that goes beyond the established paradigm of task-specific machine learning

Inductive reasoning is a type of reasoning in which we draw conclusions about the world based on past observations. Machine learning can be loosely regarded as inductive reasoning in the sense that it leverages past (training) data to improve performance on new tasks. Taking machine translation as an example, a typical machine learning pipeline involves the following four major steps:

1.define the specific problem, e.g., translating English sentences to Chinese: E →C,

##2.collect the data, e.g., sentence pairs { E→C },3.train a model, e.g. , a deep neural network with inputs {E} and outputs {C},

4.apply the model to an unseen data point, e.g., input a new English sentence E' and output a Chinese translation C' and evaluate the result.

As shown above, traditional machine learning isolates the training for each specific task. Hence, for each new task, one must reset and redo the process from step 1 to step 4, losing all acquired knowledge (data, models, etc.) from previous tasks. For instance, you would need a different model if you want to translate French into Chinese, rather than English to Chinese.

Under this paradigm, the job of machine learning theorists is focused chiefly on understanding the generalisation ability of a learning model from the training data to the unseen test data [2, 3]. For instance, a common question would be how many samples we need in training to achieve a certain error bound of predicting unseen test data. We know that inductive bias (i.e.prior knowledge or prior assumption) is required for a learning model to predict outputs that it has not encountered. This is because the output value in unknown circumstances is completely arbitrary, making it impossible to address the problem without making certain assumptions. The celebrated no-free-lunch theorem [5] further says that any inductive bias has a limitation; it is only suitable for a certain group of problems, and it may fail elsewhere if the prior knowledge assumed is incorrect.

Figure 1 A screenshot of ChatGPT used for machine translation. The prompt contains instruction only, and no demonstration example is necessary.

While the above theories still hold, the arrival of foundation language models may have altered our approach to machine learning. The new machine learning pipeline could be the following (using the same machine translation problem as an example; see Figure 1):

1.API access to a foundation language model trained elsewhere by others, e.g., a model trained with diverse documents, including paring corpus of English/Chinese,

2.with a few examples or no example at all, design a suitable text description (known as a prompt) for the task at hand, e.g., Prompt = {a few examples E→C },

3.conditioned on the prompt and a given new test data point, the language model generates the answer, e.g., append E’ to the prompt and generate C’ from the model,

4.interpret the answer as the predicted result.

As shown in step 1, the foundation language model serves as a one-size-fits-all knowledge repository. The prompt (and context) presented in step 2 allow the foundation language model to be customised to a specific goal or problem with only a few demonstration instances. While the aforementioned pipeline is primarily limited to text-based problems, it is reasonable to assume that, as the development of cross-modality (see Section 3) foundation pre-trained models continues, it will become the standard for machine learning in general. This could break down the necessary task barriers to pave the way for AGI.

But, it is still early in the process of determining how the demonstration examples in a prompt text operate. Empirically, we now understand, from some early work [2], that the format of demonstration samples is more significant than the correctness of the labels (for instance, as illustrated in Figure 1, we don’t need to provide example translation but are required to provide language instruction), but are there any theoretical limits to its adaptability as stated in the no-free-lunch theorem? Can the context and instruction-based knowledge stated in prompts (step 2) be integrated into the model for future usage? We're only scratching the surface with these inquiries. We therefore call for a new understanding and new principles behind this new form of in-context learning and its theoretical limitations and properties, such as generalisation bounds.

Figure 2 An illustration of AIGA for designing computer games.

2.Developing reasoning skills

We are on the edge of an exciting era in which all our linguistic and behavioural data can be mined to train (and be absorbed by) an enormous computerised model. It is a tremendous accomplishment as our whole collective experience and civilisation could be digested into a single (hidden) knowledge base (in the form of artificial neural networks) for later use. In fact, ChatGPT and large foundation models are said to demonstrate some form of reasoning capacity. They may even arguably grasp the mental states of others to some extent (theory of mind) [6]. This is accomplished by data fitting (predicting masked language tokens as training signals) and imitation (of human behaviours). Yet, it is debatable if this entirely data-driven strategy will bring us greater intelligence.

To illustrate this notion, consider instructing an agent how to play chess as an example. Even if the agent has access to a limitless amount of human play data, it will be very difficult for it, by only imitating existing policies, to generate new policies that are more optimal than those already present in the data. Using the data, one can, however, develop an understanding of the world (e.g., the rules of the game) and use it to “think” (construct a simulator in its brain to gather feedback in order to create more optimal policies). This highlights the importance of inductive bias; rather than simple brute force, a learning agent is demanded to have some model of the world and infer it from the data in order to improve itself.

Thus, there is an urgent need to thoroughly investigate and understand the emerging capabilities of foundation models. Apart from language skills, we advocate research into acquiring of actual reasoning ability by investigating the underlying mechanisms [9]. One promising approach would be to draw inspiration from neuroscience and brain science to decipher the mechanics of human reasoning and advance language model development. At the same time, building a solid theory of mind may also necessitate an in-depth knowledge of multiagent learning [10,11] and its underlying principles.

3.From AI Generating Content (AIGC) to AI Generating Action (AIGA)

The implicit semantics developed on top of human languages is integral to foundation language models. How to utilise it is a crucial topic for generalist machine learning. For example, once the semantic space is aligned with other media (such as photos, videos, and sounds) or other forms of data from human and machine behaviours, such as robotic trajectory/actions, we acquire semantic interpretation power for them with no additional cost [7, 14]. In this manner, machine learning (prediction, generation, and decision-making) would be generic and decomposable. Yet, dealing with cross-modality alignment is a substantial hurdle for us due to the labour-intensive nature of labelling the relationships. Additionally, human value alignment becomes difficult when numerous parties have conflicting interests.

A fundamental drawback of ChatGPT is that it can communicate directly with humans only. Yet, once a sufficient alignment with the external world has been established, foundation language models should be able to learn how to interact with various parties and environments [7, 14]. This is significant because it will bestow its power on reasoning ability and semantics based on language for broader applications and capabilities beyond conversation. For instance, it may evolve into a generalist agent capable of navigating the Internet [7], controlling computers [13], and manipulating robots [12]. Thus, it becomes more important to implement procedures that ensure responses from the agent (often in the form of generated actions) are secure, reliable, unbiased, and trustworthy.

Figure 2 provides a demonstration of AIGA [7] for interacting with a game engine to automate the process of designing a video game.

4.Multiagent theories of interactions with foundation language models

ChatGPT uses in-context learning and prompt engineering to drive multi-turn dialogue with people in a single session, i.e., given the question or prompt, the entire prior conversation (questions and responses) is sent to the system as extra context to construct the response. It is a straightforward Markov decision process (MDP) model for conversation:

{State = context, Action = response, Reward = thumbs up/down rating}.

While effective, this strategy has the following drawbacks: first, a prompt simply provides a description of the user's response, but the user's genuine intent may not be explicitly stated and must be inferred. Perhaps a robust model, as proposed previously for conversation bots, would be a partially observable Markov decision process (POMDP) that accurately models a hidden user intent.

Second, ChatGPT is first trained using language fitness and then human labels for conversation goals. Due to the platform's open-ended nature, actual user's aim and objective may not align with the trained/fined-tuned rewards. In order to examine the equilibrium and conflicting interests of humans and agents, it may be worthwhile to use a game-theoretic perspective [9].

5.Novel applications

As proven by ChatGPT, there are two distinctive characteristics of foundation language models that we believe will be the driving force behind future machine learning and foundation language model applications. The first is its superior linguistic skills, while the second is its embedded semantics and early reasoning abilities (in the form of human language). As an interface, the former will greatly lessen the entry barrier to applied machine learning, whilst the latter will significantly generalise how machine learning is applied.

As demonstrated in the new learning pipeline presented in Section 1, prompts and in-context learning eliminate the bottleneck of data engineering and the effort required to construct and train a model. Moreover, exploiting the reasoning capabilities could enable us to automatically dissect and solve each subtask of a hard task. Hence, it will dramatically transform numerous industries and application sectors. In internet-based enterprises, the dialogue-based interface is an obvious application for web and mobile search, recommender systems, and advertising. Yet, as we are accustomed to the keyword-based URL inverted index search system, the change is not straightforward. People must be retaught to utilise longer queries and natural language as queries. In addition, foundation language models are typically rigid and inflexible. It lacks access to current information regarding recent events. They typically hallucinate facts and do not provide retrieval capabilities and verification. Thus, we need a just-in-time foundation model capable of undergoing dynamic evolution over time.

We therefore call for novel applications including but not limited to the following areas:

- Novel prompt engineering, its procedure, and software support.

- Generative and model-based web search, recommendation and advertising; novel business models for conversational advertisement.

- Techniques for dialogue-based IT services, software systems, wireless communications (personalised messaging systems) and customer service systems.

- Automation generation from foundation language models for Robotic process automation (RPA) and software test and verification.

- AI-assisted programming.

- Novel content generation tools for creative industries.

- Unifying language models with operations research and enterprise intelligence and optimisation.

- Efficient and cost-effective methods of serving large foundation models in Cloud computing.

- Foundation models for reinforcement learning and multiagent learning and, other decision-making domains.

- Language-assisted Robotics.

- Foundation models and reasoning for combinatorial optimisation, EDA and chip design.

The above is the detailed content of 'Call for innovation: UCL Wang Jun discusses the theory and application prospects of ChatGPT general artificial intelligence'. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- Technology trends to watch in 2023

- How Artificial Intelligence is Bringing New Everyday Work to Data Center Teams

- Can artificial intelligence or automation solve the problem of low energy efficiency in buildings?

- OpenAI co-founder interviewed by Huang Renxun: GPT-4's reasoning capabilities have not yet reached expectations

- Microsoft's Bing surpasses Google in search traffic thanks to OpenAI technology