
Yi Tay: A scientist who left Google and embarked on the road of entrepreneurship, publishing 16 high-quality papers in three years

- Reposted by PHPz

- 2023-04-21 13:46:09

On the day after GPT-4 was released, Turing Award winner Geoffrey Hinton offered a striking metaphor: "A caterpillar extracts nutrients from its food and then turns into a butterfly. People have extracted billions of clues to understanding, and GPT-4 is humanity's butterfly."

In just two weeks, this butterfly seems to have set off a hurricane across many fields, and the AI industry has entered a new wave of entrepreneurship. Many of the new founders come from big companies such as Google.

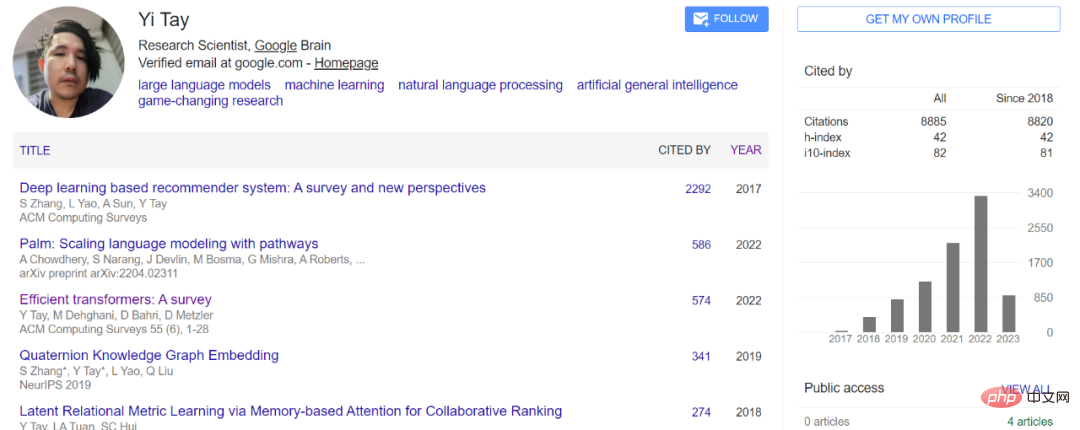

If you often read papers on large AI models, Yi Tay is probably a familiar name. As a Senior Research Scientist at Google Brain, Yi Tay contributed to many well-known large language models and multimodal models, including PaLM, UL2, Flan-U-PaLM, LaMDA/Bard, ViT-22B, PaLI, MUM, and others.

By Yi Tay's own count, during his 3.3 years at Google Brain he contributed to about 45 papers in total and was the first author of 16 of them. His first-author papers include UL2, U-PaLM, DSI, Synthesizer, Charformer, and Long Range Arena.

Following the authors of the Transformer paper, most of whom have left Google to start their own companies, Yi Tay also chose the right moment to leave and begin a new journey.

In a blog post, Yi Tay officially announced his departure, and his updated profile revealed his next move: he has co-founded a company called Reka, where he serves as chief scientist, focusing on large language models.

Yi Tay also revealed that Reka is based in the San Francisco Bay Area and was co-founded by a strong team of former DeepMind, FAIR, and Google Brain researchers and engineers.

Besides Yi Tay, Liu Qi, an assistant professor in the Department of Computer Science at the University of Hong Kong, also mentioned on his personal homepage that he is helping to found a company called "Reka", dedicated to developing multimodal foundation models. During his PhD, Liu Qi interned at Google for a period of time and co-authored papers with Yi Tay, such as "Quaternion Knowledge Graph Embedding".

Because Reka's official website is not yet accessible, we have not been able to obtain more information about the company.

In his farewell blog post, Yi Tay expressed his gratitude, writing that leaving Google really does feel like graduating, because he learned a great deal from Google and from his outstanding colleagues, mentors, and managers. He wrote:

Google will always be special to me because it's where I learned to do truly great research. I think back to when I first joined: seeing the authors of so many famous and influential papers up close, I felt like a fan meeting their favorite stars. It was a huge thrill for me, very motivating and inspiring. To this day, I am grateful that I got to work with and learn from many of them, at least before most of them left.

I have learnt a lot.

More broadly, I learned the importance of doing research that matters and how to steer research toward concrete impact. In school, we are mostly told that we need to publish N conference papers (to graduate or for other reasons). At Google, work has to land, and it has to have real impact.

For me, the biggest lesson here was how to own the research innovation process end to end: from idea to paper/patent, to production, and finally to serving users. To a large extent, I think this process has made me a better researcher.

If I had to describe my growth, I would say it was "smooth" rather than "sudden." My research abilities improved roughly linearly over time as I got better and became more immersed in Google's culture. It was like a diffusion process. To this day, I still believe the research environment is very important.

Everyone says that "people" are Google's greatest perk. I totally agree. I am forever grateful to all of my close collaborators and mentors, who played a huge role in my growth as a researcher and as a person.

From the bottom of my heart, I want to thank my current manager (Quoc Le) and my previous manager (Don Metzler) for the opportunity to work with them and for always helping me and looking out for me, not just as a report but as a human being. I would also like to thank senior leaders such as Ed Chi, Denny Zhou, and Slav Petrov for supporting me throughout this journey. Finally, I want to thank Andrew Tomkins, who took an interest in me and hired me into Google.

I would also like to thank my closest friends and collaborators (Mostafa Dehghani, Vinh Tran, Jason Wei, Hyung Won, Steven Zheng, Siamak Shakeri) for all the great times we spent together: trading thoughts on hot topics, learning from each other, writing papers together, and discussing research.

It is worth noting that as the wave of large-model startups takes off abroad, startups in China have also begun a fierce battle for talent. For example, media reports say that Wang Huiwen, who aims to build China's OpenAI, is assembling a team and plans to acquire two Tsinghua NLP companies: Shenyan Technology and Wall-Facing Intelligence. Heart of the Machine's AI talent column has also learned that top Chinese AI labs, several startups, and a number of quantitative investment firms are actively recruiting large-model talent.
