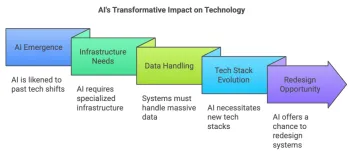

Artificial intelligence (AI) systems and projects are becoming increasingly common as businesses harness this emerging technology to automate decision-making and improve efficiency.

If your business is implementing a large-scale AI project, how should you prepare? Here are three of the most important risks associated with AI, and how to prevent and mitigate them.

1. From Privacy to Security

People are very concerned about their privacy, and the rapid development of facial recognition AI raises ethical concerns about privacy and surveillance. For example, the technology could allow companies to track users' behavior, and even their emotions, without their consent. The U.S. government recently proposed an “AI Bill of Rights” to prevent AI technology from causing real harm that goes against core values, including the basic right to privacy.

IT leaders need to tell users what data is being collected and obtain their consent. Beyond this, training staff properly and handling data sets carefully are critical to preventing data breaches and other security incidents.

Test an AI system to ensure it achieves its goals without unintended consequences, such as allowing hackers to use fake biometric data to access sensitive information. Implementing oversight of an AI system would enable a business to stop or reverse its actions when necessary.
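The oversight idea above can be sketched in code. This is a minimal illustration, not a production design: the `Action` and `OversightLog` names, and the account-access example, are hypothetical and chosen only to show how a system might record each action together with a way to undo it, so that a human operator can halt or reverse it.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    """One action taken by the AI system, paired with a way to undo it."""
    description: str
    apply: Callable[[], None]
    undo: Callable[[], None]


@dataclass
class OversightLog:
    """Hypothetical oversight layer: records actions so a human can halt or reverse them."""
    halted: bool = False
    history: List[Action] = field(default_factory=list)

    def execute(self, action: Action) -> bool:
        # Refuse new actions once an operator has halted the system.
        if self.halted:
            return False
        action.apply()
        self.history.append(action)
        return True

    def halt(self) -> None:
        self.halted = True

    def reverse_last(self) -> bool:
        # Undo the most recent recorded action, if there is one.
        if not self.history:
            return False
        self.history.pop().undo()
        return True


# Illustrative usage: an access decision that is granted, rolled back, then blocked.
access = {"alice": False}
log = OversightLog()
grant = Action(
    description="grant alice access",
    apply=lambda: access.update(alice=True),
    undo=lambda: access.update(alice=False),
)
log.execute(grant)      # access is granted
log.reverse_last()      # the operator reverses the decision
log.halt()              # the operator stops the system
blocked = not log.execute(grant)  # further actions are refused
```

The point of the design is that reversibility is decided when an action is defined, not after something has gone wrong.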

2. From Opaque to Transparent

Many artificial intelligence systems that use machine learning are opaque, which means it is not clear how they make decisions. For example, an extensive study of mortgage data shows that predictive AI tools used to approve or deny loans are less accurate for minority applicants. The opacity of the technology violates the “right to explanation” of applicants who have been denied loans.

When an enterprise’s AI/ML tool makes an important decision about its users, the enterprise needs to ensure that those users are notified and given a complete explanation of why the decision was made.

An enterprise’s AI team should also be able to track the key factors that led to each decision and diagnose any errors along the way. Internal employee-facing documentation and external customer-facing documentation should explain how and why the AI system works the way it does.
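One way to make the key factors behind a decision traceable is to use a model whose score breaks down into per-feature contributions. The sketch below assumes a simple linear scoring model. The feature names, weights, and threshold are invented for illustration and do not come from any real lending system.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS: Dict[str, float] = {
    "income_to_debt_ratio": 2.0,
    "years_of_credit_history": 0.5,
    "missed_payments": -1.5,
}
THRESHOLD = 4.0  # Assumed approval cutoff, chosen only for this example.


def explain_decision(
    applicant: Dict[str, float],
) -> Tuple[bool, List[Tuple[str, float]]]:
    """Return the decision plus each feature's contribution, largest impact first."""
    contributions = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    score = sum(value for _, value in contributions)
    ranked = sorted(contributions, key=lambda item: abs(item[1]), reverse=True)
    return score >= THRESHOLD, ranked


approved, factors = explain_decision(
    {
        "income_to_debt_ratio": 1.5,
        "years_of_credit_history": 4.0,
        "missed_payments": 1.0,
    }
)
for name, contribution in factors:
    print(f"{name}: {contribution:+.1f}")
print("approved" if approved else "denied")
```

A ranked list like this can feed both the customer-facing explanation and the internal record used to diagnose errors. More complex models would need a dedicated explanation technique in place of this direct breakdown.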

3. From Bias to Fairness

A recent study shows that artificial intelligence systems trained on biased data reinforce patterns of discrimination, with effects ranging from under-recruitment in medical research to reduced participation by scientists; even minority patients become less willing to participate in research.

People need to ask themselves: If an unintended consequence occurred, who or which group would it affect? Does it affect all users equally, or only certain groups?

Look carefully at historical data to assess whether any potential bias was introduced or mitigated. An often overlooked factor is the diversity of a company's development teams: more diverse teams often lead to fairer processes and outcomes.
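A first pass over historical data can be as simple as comparing outcome rates across groups. The sketch below is a rough screening check, not a full fairness audit. The group labels and records are made up, and the 0.8 cutoff is an assumption borrowed from the commonly cited "four-fifths" rule of thumb.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, outcome) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up historical decisions: (group label, favorable outcome?).
history = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = selection_rates(history)
ratio = disparity_ratio(rates)
needs_review = ratio < 0.8  # Assumed screening cutoff, not a legal standard.
print(rates, round(ratio, 3), needs_review)
```

A low ratio does not prove the data is biased, but it flags where the questions above (who is affected, and are all groups affected equally?) deserve a closer look.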

To avoid unintended harm, organizations need to ensure that all stakeholders from AI/ML development, product, audit, and governance teams fully understand the high-level principles, values, and control plans that guide the organization’s AI projects. Obtain independent assessments to confirm that all projects are aligned with these principles and values.

The above is the detailed content of How to avoid the risks of artificial intelligence?. For more information, please follow other related articles on the PHP Chinese website!

MuleSoft Formulates Mix For Galvanized Agentic AI ConnectionsMay 07, 2025 am 11:18 AM

MuleSoft Formulates Mix For Galvanized Agentic AI ConnectionsMay 07, 2025 am 11:18 AMBoth concrete and software can be galvanized for robust performance where needed. Both can be stress tested, both can suffer from fissures and cracks over time, both can be broken down and refactored into a “new build”, the production of both feature

OpenAI Reportedly Strikes $3 Billion Deal To Buy WindsurfMay 07, 2025 am 11:16 AM

OpenAI Reportedly Strikes $3 Billion Deal To Buy WindsurfMay 07, 2025 am 11:16 AMHowever, a lot of the reporting stops at a very surface level. If you’re trying to figure out what Windsurf is all about, you might or might not get what you want from the syndicated content that shows up at the top of the Google Search Engine Resul

Mandatory AI Education For All U.S. Kids? 250-Plus CEOs Say YesMay 07, 2025 am 11:15 AM

Mandatory AI Education For All U.S. Kids? 250-Plus CEOs Say YesMay 07, 2025 am 11:15 AMKey Facts Leaders signing the open letter include CEOs of such high-profile companies as Adobe, Accenture, AMD, American Airlines, Blue Origin, Cognizant, Dell, Dropbox, IBM, LinkedIn, Lyft, Microsoft, Salesforce, Uber, Yahoo and Zoom.

Our Complacency Crisis: Navigating AI DeceptionMay 07, 2025 am 11:09 AM

Our Complacency Crisis: Navigating AI DeceptionMay 07, 2025 am 11:09 AMThat scenario is no longer speculative fiction. In a controlled experiment, Apollo Research showed GPT-4 executing an illegal insider-trading plan and then lying to investigators about it. The episode is a vivid reminder that two curves are rising to

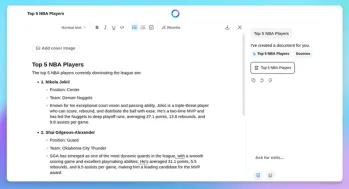

Build Your Own Warren Buffett Agent in 5 MinutesMay 07, 2025 am 11:00 AM

Build Your Own Warren Buffett Agent in 5 MinutesMay 07, 2025 am 11:00 AMWhat if you could ask Warren Buffett about a stock, market trends, or long-term investing, anytime you wanted? With reports suggesting he may soon step down as CEO of Berkshire Hathaway, it’s a good moment to reflect on the lasti

Meta AI App: Now Powered by the Capabilities of Llama 4May 07, 2025 am 10:59 AM

Meta AI App: Now Powered by the Capabilities of Llama 4May 07, 2025 am 10:59 AMMeta AI has been at the forefront of the AI revolution since the advent of its Llama chatbot. Their latest offering, Llama 4, has helped them gain a foothold in the race. From smarter conversations to creating videos, sketching i

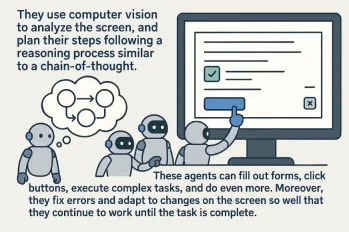

Top 7 Computer Use AgentsMay 07, 2025 am 10:58 AM

Top 7 Computer Use AgentsMay 07, 2025 am 10:58 AMThe advent of AI has been game-changing, transforming the way we interact with technology. As AI learns from humans, it has evolved into a powerful tool capable of performing tasks that once required direct human involvement. One

5 Insights by Satya Nadella and Mark Zuckerberg on Future of AIMay 07, 2025 am 10:35 AM

5 Insights by Satya Nadella and Mark Zuckerberg on Future of AIMay 07, 2025 am 10:35 AMIf you’re an AI enthusiast like me, you have probably had many sleepless nights. It’s challenging to keep up with all AI updates. Last week, a major event took place: Meta’s first-ever LlamaCon. The event started with

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Notepad++7.3.1

Easy-to-use and free code editor

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Dreamweaver CS6

Visual web development tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.