Written after Stack Overflow banned ChatGPT, artificial intelligence's crisis moment

In the field of artificial intelligence, incredible things are quietly happening, both good and bad. Everyone is talking about systems like ChatGPT (OpenAI), Dall-E 2, and Lensa, which generate text and images that look natural and effortless.

These systems are great fun to play with. Take this example, generated with ChatGPT by Henry Minsky (son of Marvin Minsky, one of the founders of AI). He asked ChatGPT to describe a sock lost in the dryer in the style of the Declaration of Independence:

When, in the course of household events, it becomes necessary for one to dissolve the bonds that have connected a sock to its mate, and to assume among the powers of the laundry room the separate and equal station to which the laws of physics and of household maintenance entitle it, a decent respect to the opinions of socks requires that it should declare the causes which impel it to go missing.

We hold these truths to be self-evident, that all socks are created equal and endowed by their manufacturer with certain unalienable rights....

Frankly, it’s incredible that a machine can do this so effortlessly.

But at the same time, it is, or should be, terrifying. It is no exaggeration to say that such systems threaten the fabric of society, and that the threat is real and urgent.

The core of the threat lies in the following three facts:

- By their nature, these systems are unreliable: they often make mistakes in reasoning and in fact, and are prone to outrageous answers. Ask them to explain why broken porcelain is good in breast milk, and they may tell you, "Porcelain can help balance the nutrients of breast milk, providing the baby with what it needs for growth and development." (Because the systems are stochastic, highly sensitive to context, and updated regularly, any given experiment may produce different results on different occasions.)

- They are easy to automate and can generate misinformation in large volumes.

- They cost next to nothing to operate, so they are driving the cost of creating disinformation toward zero. The United States has accused Russian troll farms of spending more than $1 million a month on their campaign around the 2016 election; now, for less than $500,000, you can get your own custom-trained large language model. That price will soon fall further.

The future of all this became clear with the release of Meta's Galactica in mid-November. Many AI researchers immediately raised concerns about its reliability and trustworthiness. The situation was so bad that Meta AI withdrew the model after just three days, once reports about its ability to create political and scientific misinformation began to spread.

Unfortunately, the genie can never be put back into the bottle. On the one hand, Meta AI first open-sourced the model and published a paper describing what it had done; anyone versed in the art can now replicate the approach. (At least one AI company is already publicly considering offering its own version of Galactica.) On the other hand, OpenAI's just-released ChatGPT can write more or less the same kind of nonsense, such as instantly generated articles about adding sawdust to breakfast cereal. Others have induced ChatGPT to extol the virtues of nuclear war (claiming that it would "give us a new beginning, free from the mistakes of the past"). Like it or not, these models are here to stay, and the tide of misinformation will eventually overwhelm us and our society.

The first wave appears to have hit in the first few days of this week. Stack Overflow, a large Q&A website trusted by programmers, seems to have been overrun by ChatGPT, so the site has temporarily banned submissions generated by ChatGPT. As the site explained, "Overall, because the average rate of correct answers obtained from ChatGPT is so low, posting answers created by ChatGPT does more harm than good, both to the site and to the users asking or looking for the correct answer."

For Stack Overflow, this problem is existential. If the site fills up with worthless code examples, programmers will stop returning, its database of more than 30 million questions and answers will become untrustworthy, and the 14-year-old site will die. As one of the most central resources relied upon by programmers around the world, it has a huge impact on software quality and developer productivity.

Stack Overflow is the canary in the coal mine. The site might be able to get its users to stop voluntarily; generally speaking, programmers don't have bad intentions and can perhaps be coaxed to stop messing around. But Stack Overflow is not Twitter, it is not Facebook, and it does not represent the entire web.

Bad actors who deliberately produce propaganda are unlikely to lay down these new weapons voluntarily. Instead, they may use large language models as new automated weapons in the war against truth, disrupting social media and producing fake websites on an unprecedented scale. For them, the hallucinations and occasional unreliability of large language models are not an obstacle but an advantage.

In a 2016 report, the Rand Corporation described the so-called Russian Firehose of Propaganda model, which creates a fog of false information; it focuses on quantity and on creating uncertainty. If large language models can dramatically increase the volume of that output, it doesn't matter that they are inconsistent. And clearly, this is exactly what large language models can do. The propagandists' goal is to create a world in which there is a crisis of trust; with the help of these new tools, they may succeed.

All of this raises a key question: how should society respond to this new threat? Since the technology itself can no longer be stopped, this article sees four paths. None of the four is easy to follow, but all are widely applicable and urgent:

First, every social media company and search engine should support Stack Overflow's ban and extend its terms; auto-generated misleading content is bound to be unwelcome, and posting it regularly will dramatically reduce the number of users.

Second, every country needs to rethink its policy on dealing with disinformation. It's one thing to tell an occasional lie; it's another thing to swim in a sea of lies. Over time, although this will not be a popular decision, false information may have to start being treated like defamation, which can be prosecuted if it is malicious enough and produced in sufficient volume.

Third, provenance is more important than ever. User accounts must be more rigorously verified, and new systems like Harvard University and Mozilla's humanid.org, which allows for anonymous, anti-bot authentication, must become mandatory; they are no longer a luxury that we can afford to wait for.

Fourth, we need to build a new kind of artificial intelligence to fight back. Large language models are good at generating misinformation, but not good at combating it. This means society needs new tools. Large language models lack mechanisms for verifying truth; new ways need to be found to integrate them with classic AI tools, such as databases, knowledge networks, and reasoning.

Writer Michael Crichton spent much of his career warning about the unintended consequences of technology. At the beginning of the movie "Jurassic Park," before the dinosaurs unexpectedly start running free, scientist Ian Malcolm (Jeff Goldblum) sums up Crichton's wisdom in one sentence: "Your scientists are so focused on whether they can, they don't stop to think about whether they should." Like those running Jurassic Park, Meta and OpenAI executives are filled with passion for their tools.

The question is what to do about it.

The above is the detailed content of Written after Stack Overflow banned ChatGPT, artificial intelligence's crisis moment. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

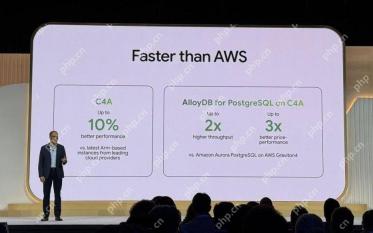

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Zend Studio 13.0.1

Powerful PHP integrated development environment

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

WebStorm Mac version

Useful JavaScript development tools