Training a Chinese version of ChatGPT is not that difficult: you can do it with the open source Alpaca-LoRA and an RTX 4090, no A100 required

In 2023, there seem to be only two camps left in the chatbot field: "OpenAI's ChatGPT" and "Others".

ChatGPT is powerful, but OpenAI is very unlikely to open source it. The "others" camp lags behind in performance, but many of its members are working in the open. One example is LLaMA, which Meta released some time ago.

LLaMA is the general name for a series of models with parameter counts ranging from 7 billion to 65 billion. Among them, the 13-billion-parameter LLaMA model outperforms the 175-billion-parameter GPT-3 "on most benchmarks". However, the model has not undergone instruction tuning, so its raw generation quality is poor.

To improve the model's performance, researchers from Stanford instruction-tuned it and trained a new 7-billion-parameter model called Alpaca (based on LLaMA 7B). Specifically, they asked OpenAI's text-davinci-003 model to generate 52K instruction-following samples in a self-instruct manner and used them as Alpaca's training data. Experimental results show that many of Alpaca's behaviors are similar to those of text-davinci-003. In other words, the lightweight Alpaca model, with only 7B parameters, performs comparably to very large language models such as GPT-3.5.

For ordinary researchers, this is a practical and cheap way to fine-tune, but it still requires a large amount of computation (the authors say they fine-tuned for 3 hours on eight 80GB A100s). Moreover, Alpaca's seed tasks are all in English, and the collected data are also in English, so the trained model is not optimized for Chinese.

In order to further reduce the cost of fine-tuning, another researcher from Stanford, Eric J. Wang, used LoRA (low-rank adaptation) technology to reproduce the results of Alpaca. Specifically, Eric J. Wang used an RTX 4090 graphics card to train a model equivalent to Alpaca in only 5 hours, reducing the computing power requirements of such models to consumer levels. Furthermore, the model can be run on a Raspberry Pi (for research).

Technical principles of LoRA. The idea of LoRA is to add a bypass next to the original pretrained language model (PLM) that performs a dimensionality reduction followed by a dimensionality increase, simulating the so-called intrinsic rank. During training, the parameters of the PLM are frozen, and only the dimensionality-reduction matrix A and the dimensionality-increase matrix B are trained. The input and output dimensions of the model remain unchanged, and the output of BA is added to the output of the PLM. A is initialized from a random Gaussian distribution and B is initialized as a zero matrix, which ensures that the bypass BA is still a zero matrix at the beginning of training (quoted from: https://finisky.github.io/lora/). The biggest advantage of LoRA is that it is faster and uses less memory, so it can run on consumer-grade hardware.
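The bypass described above can be illustrated with a minimal pure-Python sketch. It is not code from the Alpaca-LoRA project: the dimensions, the helper names (`matmul`, `add`, `lora_forward`), and the Gaussian scale of 0.01 are all assumptions chosen for illustration. The sketch shows that, with B initialized to zero, the output of the adapted layer equals the output of the frozen layer at the start of training, and that the bypass has fewer trainable parameters than the full weight matrix.

```python
import random

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def add(X, Y):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def lora_forward(x, W, A, B):
    """Frozen path W x plus low-rank bypass B (A x), for a column vector x."""
    return add(matmul(W, x), matmul(B, matmul(A, x)))

random.seed(0)
d, k, r = 6, 6, 2  # output dim, input dim, bypass rank (illustrative values)

W = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]     # frozen pretrained weight
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # down-projection, Gaussian init
B = [[0.0] * r for _ in range(d)]                                  # up-projection, zero init
x = [[random.gauss(0, 1)] for _ in range(k)]                       # one input column vector

base_out = matmul(W, x)
lora_out = lora_forward(x, W, A, B)

# Because B is all zeros, the bypass contributes nothing before training starts.
print("identical at initialization:", lora_out == base_out)

full_params = d * k        # parameters updated by full fine-tuning of W
lora_params = r * (d + k)  # parameters updated by LoRA (A and B only)
print("full:", full_params, "lora:", lora_params)
```

With realistic layer sizes (thousands of dimensions, rank in the single or low double digits), the gap between the two parameter counts becomes far larger than in this toy example.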

Alpaca-LoRA project posted by Eric J. Wang.

Project address: https://github.com/tloen/alpaca-lora

This is undoubtedly a pleasant surprise for researchers who want to train their own ChatGPT-like models (including Chinese versions of ChatGPT) but do not have top-tier computing resources. As a result, tutorials and training results built around Alpaca-LoRA have kept appearing since the project was released, and this article introduces several of them.

How to use Alpaca-LoRA to fine-tune LLaMA

In the Alpaca-LoRA project, the author mentions that they used Hugging Face's PEFT to fine-tune cheaply and efficiently. PEFT is a library that lets you take various Transformer-based language models and fine-tune them with parameter-efficient methods, LoRA being one of the techniques it supports. The benefit is that you can fine-tune a model cheaply and efficiently on modest hardware, and the outputs are smaller (and perhaps composable).
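To give a feel for how small those outputs can be, here is a rough back-of-the-envelope sketch. None of the numbers below come from the article: the hidden size (4096), the layer count (32), the rank (8), and the choice of adapting two square projection matrices per layer are assumptions about a typical LLaMA 7B LoRA setup, and `lora_param_count` is a hypothetical helper.

```python
def lora_param_count(hidden_size, num_layers, rank, targets_per_layer):
    """Count trainable LoRA parameters when each adapted matrix is hidden x hidden."""
    # Each adapted matrix gets A (rank x hidden) and B (hidden x rank).
    per_matrix = rank * hidden_size + hidden_size * rank
    return num_layers * targets_per_layer * per_matrix

HIDDEN_SIZE = 4096       # assumption: LLaMA 7B hidden dimension
NUM_LAYERS = 32          # assumption: LLaMA 7B transformer layers
RANK = 8                 # assumption: LoRA rank
TARGETS_PER_LAYER = 2    # assumption: two attention projections adapted per layer
BYTES_PER_PARAM = 2      # assumption: adapter stored in 16-bit floats

adapter_params = lora_param_count(HIDDEN_SIZE, NUM_LAYERS, RANK, TARGETS_PER_LAYER)
adapter_mib = adapter_params * BYTES_PER_PARAM / 2**20
share_of_base = adapter_params / 7e9  # base model taken as roughly 7 billion parameters

print(f"adapter parameters: {adapter_params:,}")
print(f"adapter size: {adapter_mib:.1f} MiB")
print(f"share of base model: {share_of_base:.4%}")
```

Under these assumptions the adapter is only a few megabytes, which is why LoRA checkpoints are easy to share and to swap on top of the same base weights.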

In a recent blog, several researchers introduced how to use Alpaca-LoRA to fine-tune LLaMA.

Before using Alpaca-LoRA, you need to meet a few prerequisites. The first is the choice of GPU. Thanks to LoRA, you can now complete fine-tuning on a low-spec GPU such as an NVIDIA T4 or on a consumer GPU such as the RTX 4090. In addition, you need to apply for access to the LLaMA weights, because they are not public.

With the prerequisites in place, the next step is to use Alpaca-LoRA. First, clone the Alpaca-LoRA repository:

git clone https://github.com/daanelson/alpaca-lora
cd alpaca-lora

Second, get the LLaMA weights. Store the downloaded weights in a folder named unconverted-weights. The folder hierarchy is as follows:

unconverted-weights
├── 7B
│   ├── checklist.chk
│   ├── consolidated.00.pth
│   └── params.json
├── tokenizer.model
└── tokenizer_checklist.chk
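Before running the conversion, it may help to confirm that the folder matches the layout above. The following is a small sketch, not part of the tutorial; the helper name `missing_weight_files` is hypothetical, and the file list is copied from the hierarchy shown above.

```python
from pathlib import Path

# Relative paths taken from the folder hierarchy shown above.
EXPECTED_FILES = [
    "7B/checklist.chk",
    "7B/consolidated.00.pth",
    "7B/params.json",
    "tokenizer.model",
    "tokenizer_checklist.chk",
]

def missing_weight_files(root="unconverted-weights"):
    """Return the expected files that are not present under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]

missing = missing_weight_files()
if missing:
    print("Missing files:", ", ".join(missing))
else:
    print("All expected weight files are present.")
```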

Once the weights are in place, use the following command to convert the PyTorch checkpoint weights into a format compatible with the transformers library:

cog run python -m transformers.models.llama.convert_llama_weights_to_hf --input_dir unconverted-weights --model_size 7B --output_dir weights

The final directory structure should be like this:

weights
├── llama-7b
└── tokenizer

The third step is to install Cog, which the conversion command above already relies on, so do this first if Cog is not yet on your machine:

sudo curl -o /usr/local/bin/cog -L "https://github.com/replicate/cog/releases/latest/download/cog_$(uname -s)_$(uname -m)"
sudo chmod +x /usr/local/bin/cog

The fourth step is to fine-tune the model. By default, the fine-tuning script is configured for a less powerful GPU, but if you have a GPU with more capacity, you can increase MICRO_BATCH_SIZE to 32 or 64 in finetune.py. Additionally, if you have your own instruction-tuning dataset, you can edit DATA_PATH in finetune.py to point to it. Make sure your data uses the same format as alpaca_data_cleaned.json. Then run the fine-tuning script:

cog run python finetune.py
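In scripts of this kind, the micro batch size usually controls how many examples fit on the GPU at once, while gradient accumulation keeps the effective batch size constant. The sketch below illustrates that relationship under stated assumptions: the effective batch size of 128 and the helper `gradient_accumulation_steps` are hypothetical, so check finetune.py for the values the script actually uses.

```python
def gradient_accumulation_steps(batch_size, micro_batch_size):
    """Number of micro-batches accumulated before each optimizer step."""
    if batch_size % micro_batch_size != 0:
        raise ValueError("batch_size must be a multiple of micro_batch_size")
    return batch_size // micro_batch_size

BATCH_SIZE = 128  # assumption: effective batch size per optimizer step

for micro_batch_size in (4, 32, 64):
    steps = gradient_accumulation_steps(BATCH_SIZE, micro_batch_size)
    print(f"MICRO_BATCH_SIZE={micro_batch_size}: {steps} accumulation steps")
```

A larger micro batch means fewer accumulation steps per update, which generally shortens training as long as the batch still fits in GPU memory.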

The fine-tuning process took 3.5 hours on a 40GB A100 GPU and more time on less powerful GPUs.
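If you point DATA_PATH at your own dataset, a quick format check can catch problems before a multi-hour run. The sketch below assumes that each record is a JSON object with string fields `instruction`, `input`, and `output`, which is the commonly used Alpaca layout; verify this against your copy of alpaca_data_cleaned.json. The helper `invalid_record_indices` and the sample records are hypothetical.

```python
import json

# Assumed schema: every record carries these three string fields.
REQUIRED_KEYS = {"instruction", "input", "output"}

def invalid_record_indices(records):
    """Return the indices of records that do not match the assumed schema."""
    bad = []
    for index, record in enumerate(records):
        if not isinstance(record, dict) or not REQUIRED_KEYS.issubset(record):
            bad.append(index)
        elif not all(isinstance(record[key], str) for key in REQUIRED_KEYS):
            bad.append(index)
    return bad

# Hypothetical sample: the second record is missing the "input" field.
sample_json = """
[
  {"instruction": "Translate the sentence into Chinese.",
   "input": "Good morning.",
   "output": "早上好。"},
  {"instruction": "Name an animal in the family Camelidae.",
   "output": "The alpaca."}
]
"""

records = json.loads(sample_json)
print("invalid records:", invalid_record_indices(records))
```

To check a real file, load it with `json.load` and pass the resulting list to the same helper.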

The last step is to run the model with Cog:

$ cog predict -i prompt="Tell me something about alpacas."
Alpacas are domesticated animals from South America. They are closely related to llamas and guanacos and have a long, dense, woolly fleece that is used to make textiles. They are herd animals and live in small groups in the Andes mountains. They have a wide variety of sounds, including whistles, snorts, and barks. They are intelligent and social animals and can be trained to perform certain tasks.

The authors of the tutorial say that after completing the steps above, there are many more things to try, including but not limited to:

- Bring your own dataset and fine-tune your own LoRA, for example fine-tuning LLaMA to speak like a cartoon character. See: https://replicate.com/blog/fine-tune-llama-to-speak-like-homer-simpson

- Deploy the model to a cloud platform;

- Combine it with other LoRAs, such as Stable Diffusion LoRAs, and apply them to images;

- Use the Alpaca dataset (or other datasets) to fine-tune the larger LLaMA models and see how they perform. This should be possible with PEFT and LoRA, although it will require a larger GPU.

Alpaca-LoRA derivative projects

Although Alpaca's performance is comparable to GPT-3.5, its seed tasks are all in English, and the collected data are also in English, so the trained model does not handle Chinese well. Let's look at some of the better projects that aim to improve dialogue models in Chinese.

The first is Luotuo (Chinese for "camel"), an open source Chinese language model from three individual developers at Central China Normal University and other institutions. The project builds on LLaMA, Stanford Alpaca, Alpaca-LoRA, and Japanese-Alpaca-LoRA, and both training and deployment can be done on a single GPU. Interestingly, the developers named the model "camel" because both the llama (LLaMA) and the alpaca belong to the family Camelidae in the order Artiodactyla. Seen that way, the name is a natural choice.

This model is based on Meta’s open source LLaMA and was trained on Chinese with reference to the two projects Alpaca and Alpaca-LoRA.

Project address: https://github.com/LC1332/Chinese-alpaca-lora

Currently, the project has released two models, luotuo-lora-7b-0.1 and luotuo-lora-7b-0.3, and another model is being planned.

The project page shows sample outputs from both models.

However, there is still a gap between luotuo-lora-7b-0.1 (0.1) and luotuo-lora-7b-0.3 (0.3). When a user asked for the address of Central China Normal University, 0.1 answered incorrectly.

Beyond simple conversations, some people have also optimized models for insurance-related domains. According to one Twitter user, he used the Alpaca-LoRA project to train on Chinese insurance question-and-answer data, and the final results were good.

Specifically, the author used a corpus of more than 3K Chinese insurance question-and-answer pairs to train a Chinese version of Alpaca-LoRA. The process used the LoRA method to fine-tune the Alpaca 7B model and took 240 minutes, with a final loss of 0.87.

Source: https://twitter.com/nash_su/status/1639273900222586882

The following is the training process and results:

The above is the detailed content of Training a Chinese version of ChatGPT is not that difficult: you can do it with the open source Alpaca-LoRA+RTX 4090 without A100. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

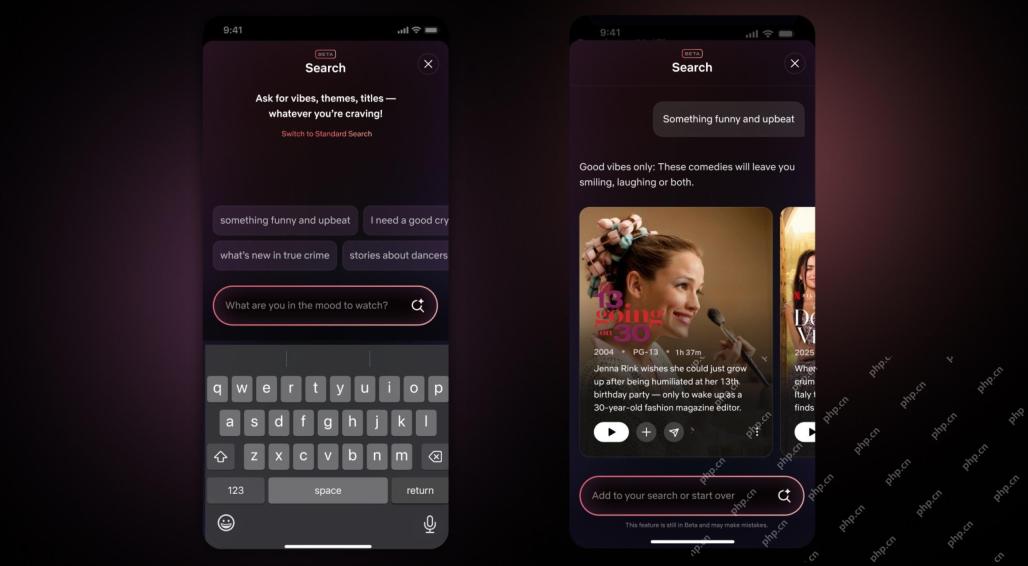

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Linux new version

SublimeText3 Linux latest version

WebStorm Mac version

Useful JavaScript development tools