Yann LeCun: Regardless of social norms, ChatGPT is far from a real person

- WBOY (reposted)

- 2023-04-15 10:25:02, 1423 views

OpenAI launched ChatGPT at the end of 2022, and its popularity has not faded since. The model draws attention wherever it goes and reliably sets off a frenzy of discussion.

Major technology companies, institutions, and even individuals have stepped up their research and development of ChatGPT-like products. At the same time, Microsoft connected ChatGPT to Bing, and Google released Bard to power its search engine. NVIDIA CEO Jensen Huang praised ChatGPT highly, calling it the iPhone moment of artificial intelligence and one of the greatest technologies in the history of computing.

Many people are convinced that conversational AI has arrived, but are these models really perfect? Not necessarily. They still produce uncanny moments, such as making wildly uninhibited remarks or rambling about plans to take over the world.

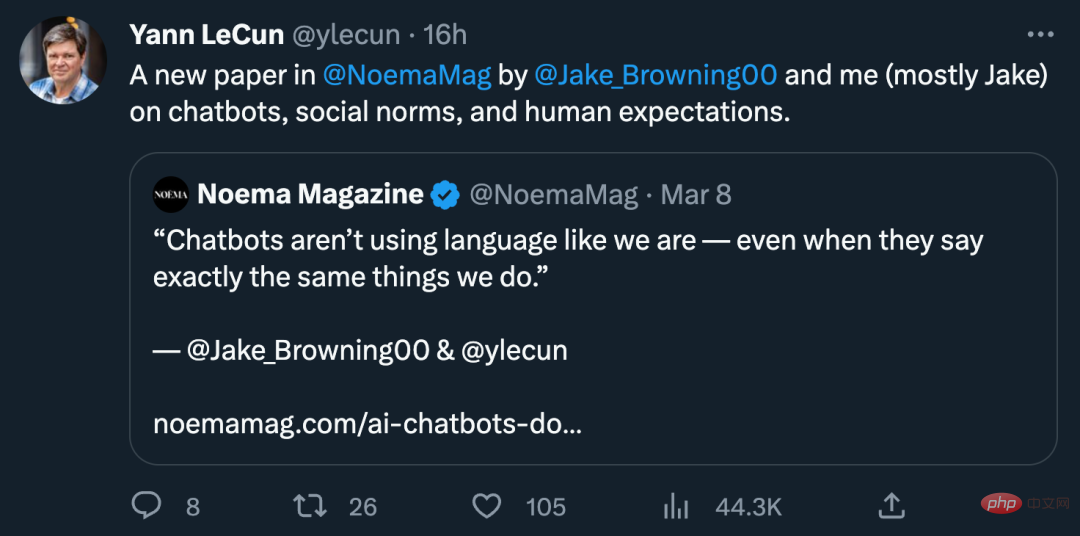

To make sense of these absurd moments, Yann LeCun, one of the three giants of deep learning, and Jacob Browning, a postdoctoral fellow in the Department of Computer Science at New York University, co-wrote an article, "AI Chatbots Don't Care About Your Social Norms", which discusses three aspects of conversational AI: chatbots, social norms, and human expectations.

The article notes that human beings are very good at avoiding slips of the tongue and at keeping themselves from making mistakes or saying and doing rude things. Chatbots, by contrast, often get things wrong. Understanding why humans are so good at avoiding mistakes can therefore help us understand why chatbots currently cannot be trusted.

Chatbots add human feedback to keep the model from saying the wrong thing

For GPT-3, going wrong mainly means getting the statistics wrong. GPT-3 leans heavily on the user's prompt: its grasp of context and situation extends only to what it can pick up from that prompt. The same is true of ChatGPT, but with a new and interesting twist. In addition to statistics, the model's responses were also shaped by human evaluators, who reinforced the system's output so that it would produce good responses. The end result is a system that not only says something plausible but (ideally) also says something a human would judge to be appropriate. Even if the model says something wrong, at least it will not offend anyone.

But this approach feels too mechanical. There are countless ways to say the wrong thing in human conversation: we can say something inappropriate, dishonest, confusing, or just plain stupid. We can even be criticized for using the wrong tone or intonation while saying the right thing. In dealing with others, we pass through countless "conversational minefields." Keeping yourself from saying the wrong thing is not just an important part of a conversation; it is often more important than the conversation itself. Sometimes keeping your mouth shut is the only right course of action.

This raises two questions: How do humans manage to navigate the dangerous terrain of not saying the wrong thing? And why can't chatbots reliably keep themselves from saying it?

How should the conversation proceed?

Human conversation can be about anything, yet much of it runs as if scripted: ordering food at a restaurant, making small talk, apologizing for being late, and so on. These are not written scripts, however, and they leave plenty of room for improvisation. The human model of conversation is therefore a looser, more general one, and its rules are not strict.

This scripted human behavior is not limited to words. The same script can work even if you don't speak the language, for example by gesturing to show what you want. Social norms govern these scripts and help us navigate life. They dictate how everyone should behave in a given situation, assign a role to each person, and give broad guidance on how to act. Following norms is useful: it standardizes and streamlines our interactions, making it easier for us to predict one another's intentions.

Humans have developed routines and norms that govern every aspect of our social lives, from which fork to use to how long to wait before honking the horn. This is crucial for survival in a world of billions of people, where most of those we encounter are complete strangers whose beliefs may not align with our own. Having these shared norms in place makes conversations not only possible but fruitful, outlining what we should talk about and everything we should not.

The other side of norms

Human beings tend to sanction those who violate norms, sometimes openly and sometimes covertly. Social norms make it easy to size up a stranger, for example on a first date. Through conversation and questions, each party evaluates the other's behavior, and if the other person violates a norm, for example by behaving rudely or inappropriately, we often judge them and turn down a second date.

For humans, these judgments rest not only on calm analysis but also on our emotional response to the world. Part of our education as children is emotional training, which ensures we show the right emotion at the right time in a conversation: anger when someone violates etiquette, disgust when someone says something offensive, shame when we tell a lie. Our moral conscience lets us react quickly to anything inappropriate in a conversation and anticipate how others will react to our words.

More than that, a person's entire character is called into question when he violates even a simple norm. If he lied about one thing, would he lie about others? Calling out a violation is therefore meant to shame the other person and, in the process, force them to apologize for (or at least defend) their behavior. The norm is reinforced along the way.

In short, humans are expected to adhere closely to social norms; otherwise, speaking carries a high risk. We are held responsible for everything we say, so we choose our words carefully and expect those around us to do the same.

Unconstrained Chatbots

The high stakes of human conversation reveal what makes chatbots so unsettling. By merely predicting how a conversation will go, they end up loosely adhering to human norms, but they are not bound by those norms. When we chat with chatbots casually or test their ability to solve language puzzles, they often give plausible answers and behave like normal humans. Someone might even mistake a chatbot for a human.

But if we change the prompt slightly or use a different script, the chatbot will suddenly spew conspiracy theories, racist tirades, or nonsense. This may be because they are trained on content written by conspiracy theorists, trolls, etc. on Reddit and other platforms.

Any one of us could talk like a troll, but we shouldn't, because what trolls say is full of nonsense, offensive remarks, cruelty, and dishonesty. Most of us don't say these things because we don't believe them. Norms of decency have pushed offensive behavior to the margins of society, so much so that most of us wouldn't dare say such things.

In contrast, chatbots don't realize that there are things they shouldn't say, no matter how statistically likely those things are. They are unaware of the social norms that mark the boundary between what may and may not be said, and unaware of the deep social pressures that shape our use of language. Even when chatbots admit they messed up and apologize, they don't understand why. If we tell them they are wrong, chatbots will even apologize for a correct answer.

This illuminates a deeper issue: we expect human speakers to stand by what they say, and we hold them accountable for it. We don't need to examine their brains or understand anything about psychology to do this; we only need to know that they are consistently reliable, follow the rules, and behave respectfully in order to trust them. The problem with chatbots isn't that they are "black boxes" or that the technology is unfamiliar, but that they have long been unreliable and off-putting, showing no effort to improve or even any awareness that there is a problem.

The developers are certainly aware of these issues. They, along with the companies that want their AI technology to be widely used, worry about their chatbots' reputations and spend a great deal of time restructuring their systems to avoid difficult conversations or eliminate inappropriate responses. While this helps make chatbots safer, developers have to work hard to stay ahead of the people trying to break them. As a result, the developers' approach is reactive and always a step behind: there are too many ways for things to go wrong to predict them all.

Smart but Not Human

None of this should make us complacent about how smart humans are and how stupid chatbots are. On the contrary, their ability to talk about anything reveals a deep (or superficial) understanding of human social life and the world at large. Chatbots are smart enough to do well on tests, or at least to provide useful information. The panic they have caused among educators speaks volumes about their impressive ability to absorb book learning.

The problem is that chatbots don't care. They have no intrinsic goals they want to achieve through dialogue, and they are not motivated by the thoughts or reactions of others. They don't feel bad about lying, and they are not rewarded for honesty. They are shameless in a way that even Trump is not: he cares enough about his reputation to at least claim to be honest.

Therefore, chatbot conversations are meaningless. For humans, conversation is a way to get what we want, like making a connection, getting help on a project, passing time, or learning something. Conversation requires that we be interested in, and ideally care about, the person we are talking to.

Even if we don’t care about the person we’re talking to, at least we care about what the other person thinks of us. We deeply understand that success in life (such as having close relationships, doing a good job, etc.) depends on having a good reputation. If our social status drops, we risk losing everything. Conversations shape how others see us, and many of us use internal monologues to shape our perceptions of ourselves.

But chatbots have no stories of their own to tell and no reputations to defend, and they don't feel the same pull to act responsibly that we do. Chatbots can be, and are, useful in many highly scripted situations, from playing Dungeon Master to writing sensible copy to helping authors explore ideas. But they lack the knowledge of themselves or of others needed to be trustworthy social agents, the kind of people we want to talk to most of the time.

Because they don't understand the norms around honesty and decency and don't care about their reputation, chatbots are of limited usefulness, and relying on them carries real dangers.

Weird Conversation

Chatbots, then, are not conversing in a human-like manner, and they will never get there merely by saying things that seem statistically plausible. Without a real understanding of the social world, these AI systems are just tiresome chatterboxes, no matter how witty or eloquent they appear.

This helps explain why these AI systems are just very interesting tools and why humans shouldn't anthropomorphize them. Humans are not merely dispassionate thinkers or speakers; we are essentially norm-abiding creatures, emotionally bound to one another through shared, enforced expectations. Human thinking and speech grow out of our social nature.

Pure dialogue, cut off from broad engagement with the world, has little in common with how humans communicate. Chatbots don't use language the way we do, even if they sometimes sound exactly like us. Ultimately, and quite plainly, they don't understand why we talk the way we do.
