A guide to training image classification models using TensorFlow

- PHPz (reposted)

- 2023-04-13 17:13:03

Translator | Chen Jun

Reviewer | Sun Shujuan

Humans learn to identify and label the things they see from a very young age. Thanks to steady advances in machine learning and deep learning algorithms, computers can now also classify images at large scale and with very high accuracy. Applications of these algorithms include interpreting lung scans to determine whether they are healthy, performing facial recognition on mobile devices, and distinguishing different types of consumer products for retailers.

Below, I discuss one application of computer vision, image classification, and show step by step how to use TensorFlow to train a model on a small image dataset.

1. Dataset and Target

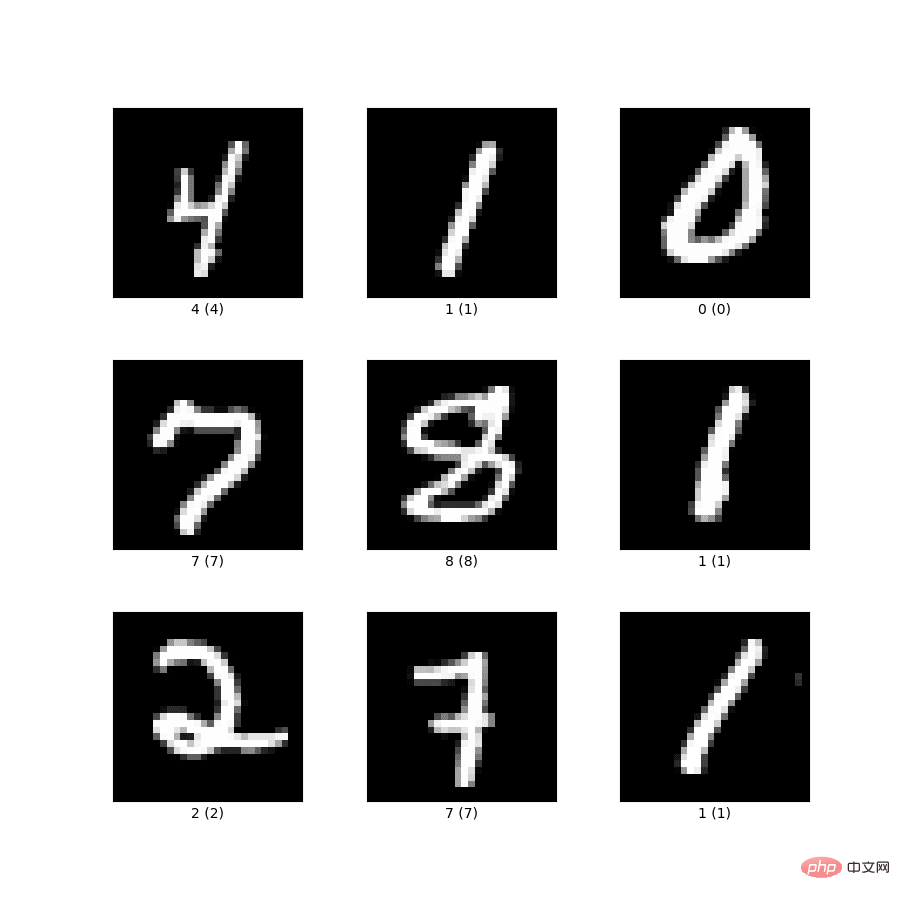

In this example, we will use the MNIST dataset of digit images from 0 to 9. Its shape is as shown in the figure below:

The goal of training this model is to classify each image under its respective label, that is, the digit it shows in the figure above. The deep neural network used here consists of an input layer, two hidden layers, a Dropout layer, and an output layer. For larger images, a convolutional neural network (CNN) is usually the preferred choice, because it can capture the relevant spatial information while reducing the size of the input.

2. Preparation work

First, let's import all the relevant libraries: TensorFlow; to_categorical, which converts numeric class values into categorical (one-hot) vectors; and Sequential, Flatten, Dense, and Dropout, which are used to build the neural network architecture. Some of these may be unfamiliar to you, but I explain them in detail below.

3. Hyperparameters

Here is how I chose the set of hyperparameters:

- First, let's define some hyperparameters as a starting point; you can adjust them later to suit different needs. Here, I chose a relatively small batch size of 128. The batch size can in principle take any value, but powers of 2 often use memory more efficiently, so they are a good first choice. The main consideration when picking a batch size is that one that is too small makes convergence slow, while one that is too large may not fit in your computer's memory.

- Let's set the number of epochs (one epoch is one complete pass over every sample in the training set) to 50 so that the model trains quickly. A small number of epochs is usually enough for small, simple datasets.

- Next come the hidden layers. Here, I give each hidden layer 128 neurons; you can also experiment with 64 or 32. For a simple dataset like MNIST, I would not recommend larger values.

- You can try different learning rates, such as 0.01, 0.05 and 0.1. In this case, I keep it at 0.01.

- For the remaining hyperparameters, I set the decay steps to 2000 and the decay rate to 0.9. Together they control how the learning rate is reduced as training proceeds.

- Here, I choose Adamax as the optimizer. Of course, you can also choose other optimizers such as Adam, RMSProp, SGD, etc.
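To see how these values interact, here is a small back-of-the-envelope sketch in plain Python (not from the original article; the helper steps_per_epoch is a hypothetical name). It assumes the 60,000-sample MNIST training set and that Keras performs one optimizer step, and therefore one learning-rate-schedule step, per batch.

```python
import math


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batches (optimizer steps) needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


num_samples = 60_000  # size of the MNIST training set
batch_size = 128      # params['batch-size']
epochs = 50           # params['epochs']
decay_steps = 2000    # params['decay-steps']

steps = steps_per_epoch(num_samples, batch_size)
last_batch = num_samples - (steps - 1) * batch_size  # the final batch is smaller
total_steps = steps * epochs

print(steps, last_batch, total_steps)  # 469 96 23450
print(round(decay_steps / steps, 2))   # epochs per 2000-step decay period: 4.26
```

With these settings, the learning rate shrinks by a factor of 0.9 over roughly every 4.3 epochs, so it decays gradually over the 50 epochs.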

```python
import tensorflow as tf
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Flatten, Dense, Dropout

params = {
    'dropout': 0.25,
    'batch-size': 128,
    'epochs': 50,
    'layer-1-size': 128,
    'layer-2-size': 128,
    'initial-lr': 0.01,
    'decay-steps': 2000,
    'decay-rate': 0.9,
    'optimizer': 'adamax'
}

mnist = tf.keras.datasets.mnist
num_class = 10

# split between train and test sets
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# reshape and normalize the data
x_train = x_train.reshape(60000, 784).astype("float32")/255
x_test = x_test.reshape(10000, 784).astype("float32")/255

# convert class vectors to binary class matrices
y_train = to_categorical(y_train, num_class)
y_test = to_categorical(y_test, num_class)
```

4. Create training and test sets

Because TensorFlow ships with the MNIST dataset, you can access it through tf.keras.datasets.mnist and call its load_data() method to obtain the training set (60,000 samples) and the test set (10,000 samples).

Next, reshape and normalize the training and test images. Reshaping turns each 28×28 image into a 784-element vector, and normalization divides every pixel intensity by 255, scaling it from the range 0 to 255 down to between 0 and 1.
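The reshaping and scaling can be sketched with NumPy on a few synthetic images (random pixel values standing in for MNIST, which in practice comes from load_data()). This is an illustration under that assumption, not the article's code; reshape(len(images), 784) is equivalent to the hard-coded reshape(60000, 784) used for the real training set.

```python
import numpy as np

# Synthetic stand-in for MNIST: 5 random 28x28 grayscale images with
# uint8 pixel values in [0, 255]. Real data comes from mnist.load_data().
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

# Flatten each 28x28 image into a 784-element row vector.
flat = images.reshape(len(images), 28 * 28)

# Convert to float32 and scale pixel intensities from [0, 255] to [0, 1].
normalized = flat.astype("float32") / 255

print(flat.shape)                          # (5, 784)
print(normalized.min(), normalized.max())  # both within [0, 1]
```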

Finally, we use the previously imported to_categorical function to convert the training and test labels into one-hot encoded vectors. This is important because it tells TensorFlow that the labels (0 to 9) are classes rather than numeric values: for example, the label 3 becomes a 10-element vector with a 1 at index 3 and 0 everywhere else.
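For intuition, here is a minimal NumPy version of what to_categorical does for integer labels. The function one_hot is a hypothetical helper written for illustration; in the article's code you would simply call to_categorical.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Illustrative NumPy equivalent of to_categorical for integer labels."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.size, num_classes), dtype="float32")
    encoded[np.arange(labels.size), labels] = 1.0  # put a 1 at each label's index
    return encoded


y = one_hot([3, 0, 9], num_classes=10)
print(y[0])  # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
```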

5. Design the neural network architecture

Now let's look at how to design the neural network architecture in detail.

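We define the structure of the DNN (deep neural network) starting with a Flatten layer, which converts a 2D image matrix into a vector. Each input neuron corresponds to one element of that vector, that is, one pixel. Because the images were already reshaped into 784-element vectors in the previous step, Flatten here mainly declares the input shape (784,).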

Next, I use Dense() to add two fully connected hidden layers, reading each layer's size from the params dictionary defined earlier. Both use "relu" (Rectified Linear Unit) as their activation function, one of the most commonly used activation functions in the hidden layers of neural networks.
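The computation a Dense layer with ReLU performs can be sketched in NumPy as activation(x @ W + b). The helpers dense and relu, and the randomly drawn weights, are illustrative assumptions: in the real model Keras creates and trains these parameters itself.

```python
import numpy as np


def relu(x):
    """Rectified Linear Unit: max(0, x), applied element-wise."""
    return np.maximum(0.0, x)


def dense(x, weights, bias, activation=None):
    """Forward pass of a fully connected layer: activation(x @ W + b)."""
    out = x @ weights + bias
    return activation(out) if activation is not None else out


rng = np.random.default_rng(0)
x = rng.random((2, 784), dtype=np.float32)  # a batch of 2 flattened, normalized images

# Randomly initialized parameters for two 128-unit hidden layers (illustration only).
w1, b1 = rng.normal(0, 0.05, (784, 128)), np.zeros(128)
w2, b2 = rng.normal(0, 0.05, (128, 128)), np.zeros(128)

h1 = dense(x, w1, b1, relu)
h2 = dense(h1, w2, b2, relu)
print(h1.shape, h2.shape)  # (2, 128) (2, 128)
```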

Then we add a Dropout layer, which helps avoid overfitting during training: at each training step it randomly sets a fraction of its inputs (here 25%, the dropout value in params) to zero. An overfitted model tends to memorize the training set and fails to generalize to unseen data.
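Keras documents its Dropout layer as zeroing inputs with probability rate during training and scaling the remaining inputs by 1 / (1 - rate), while leaving inputs unchanged at inference time. The following NumPy sketch (a hypothetical dropout helper, not the Keras implementation) mimics that behavior with the article's rate of 0.25.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: during training, zero out roughly a fraction `rate`
    of the inputs and scale the survivors by 1 / (1 - rate) so the expected
    value is unchanged. At inference time the inputs pass through untouched."""
    if not training or rate == 0.0:
        return x
    keep_mask = rng.random(x.shape) >= rate
    return np.where(keep_mask, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(42)
activations = np.ones((1, 10_000))
dropped = dropout(activations, rate=0.25, training=True, rng=rng)

print(round(float((dropped == 0).mean()), 2))  # fraction dropped, close to 0.25
print(round(float(dropped.mean()), 2))         # mean stays close to 1.0
```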

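The output layer, the last layer in our network, is also defined with Dense(). Note that it has 10 neurons, one for each class (digit). Because no activation function is specified, it outputs raw scores (logits), which is why the loss function is created with from_logits=True.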
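As a quick check on the size of this architecture, the parameter count can be worked out by hand: each Dense layer has inputs × units weights plus one bias per unit, while Flatten and Dropout have no trainable parameters. A short Python sketch of that arithmetic (dense_params is a hypothetical helper):

```python
def dense_params(inputs: int, units: int) -> int:
    """A Dense layer has one weight per (input, unit) pair plus one bias per unit."""
    return inputs * units + units


# (inputs, units) for the three Dense layers: 784 -> 128 -> 128 -> 10
layers = [(784, 128), (128, 128), (128, 10)]
counts = [dense_params(i, u) for i, u in layers]

print(counts)       # [100480, 16512, 1290]
print(sum(counts))  # 118282
```

The total should match the trainable-parameter count that model.summary() reports for this model.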

```python
# Model Definition
# Read the hyperparameters from the params dictionary defined earlier
model = Sequential([
    Flatten(input_shape=(784, )),
    Dense(params['layer-1-size'], activation='relu'),
    Dense(params['layer-2-size'], activation='relu'),
    Dropout(params['dropout']),
    Dense(10)  # 10 output logits, one per digit class
])

# The learning rate decays exponentially as training proceeds
lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate=params['initial-lr'],
    decay_steps=params['decay-steps'],
    decay_rate=params['decay-rate']
)

# The output layer has no activation, so the loss works on raw logits
loss_fn = tf.keras.losses.CategoricalCrossentropy(from_logits=True)

# Pass the schedule to the Adamax optimizer so that it is actually used
model.compile(optimizer=tf.keras.optimizers.Adamax(learning_rate=lr_schedule),
              loss=loss_fn,
              metrics=['accuracy'])

model.fit(x_train, y_train,
          batch_size=params['batch-size'],
          epochs=params['epochs'],
          validation_data=(x_test, y_test))

score = model.evaluate(x_test, y_test)

# Save the trained model
model.save('tf-mnist-comet.h5')
```

6. Training

At this point, we have defined the architecture. Next, let's compile the neural network and train it on the given training data.

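First, we define a learning rate schedule with ExponentialDecay (an exponentially decaying learning rate), passing the initial learning rate, decay steps, and decay rate as arguments.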
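According to the TensorFlow documentation, ExponentialDecay (with its default staircase=False) computes initial_learning_rate * decay_rate ^ (step / decay_steps). A plain-Python sketch of that formula using the article's values, with a hypothetical exponential_decay helper, looks like this:

```python
import math


def exponential_decay(step, initial_lr=0.01, decay_steps=2000, decay_rate=0.9,
                      staircase=False):
    """Learning rate after `step` optimizer updates, following the formula
    documented for tf.keras.optimizers.schedules.ExponentialDecay."""
    exponent = step / decay_steps
    if staircase:
        exponent = math.floor(exponent)  # decay in discrete jumps instead
    return initial_lr * decay_rate ** exponent


for step in (0, 2000, 4000, 23450):
    print(step, round(exponential_decay(step), 6))
```

With 60,000 training samples and a batch size of 128, one epoch is 469 steps, so 50 epochs is 23,450 steps; by the end of training the rate has fallen from 0.01 to roughly 0.0029.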

Second, we define the loss function as CategoricalCrossentropy, which is used for multi-class classification.
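With from_logits=True, CategoricalCrossentropy applies a softmax to the raw outputs before computing the cross-entropy, which for a one-hot label reduces to the negative log-probability assigned to the correct class. A NumPy sketch of that computation (the helper name is hypothetical; it averages over the batch, as Keras does by default):

```python
import numpy as np


def categorical_crossentropy_from_logits(one_hot_labels, logits):
    """Mean cross-entropy between one-hot labels and softmax(logits),
    using the log-sum-exp trick for numerical stability."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    return float(-(one_hot_labels * log_probs).sum(axis=-1).mean())


labels = np.array([[1.0, 0.0, 0.0]])
logits = np.array([[2.0, 1.0, 0.1]])
print(round(categorical_crossentropy_from_logits(labels, logits), 4))  # 0.417
```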

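Next, we compile the model, passing the optimizer (Adamax), the loss function, and the metrics as arguments. For the metric I chose accuracy, because all classes are equally important and roughly evenly distributed.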
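Since argmax is unaffected by the softmax, accuracy can be computed directly from the logits by comparing the highest-scoring class with the true class. The NumPy sketch below (hypothetical accuracy helper) illustrates roughly what Keras reports for the 'accuracy' metric in this setup.

```python
import numpy as np


def accuracy(one_hot_labels, logits):
    """Fraction of samples whose highest-scoring class matches the true class."""
    predicted = np.argmax(logits, axis=-1)
    actual = np.argmax(one_hot_labels, axis=-1)
    return float((predicted == actual).mean())


labels = np.eye(10)[[3, 7, 1, 0]]         # one-hot labels for digits 3, 7, 1, 0
logits = np.zeros((4, 10))
logits[[0, 1, 2, 3], [3, 7, 2, 0]] = 5.0  # the third prediction (2) is wrong
print(accuracy(labels, logits))  # 0.75
```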

Then we fit the model by calling its fit method with x_train, y_train, batch_size, epochs, and validation_data.
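By default, fit shuffles the training data at the start of every epoch (shuffle=True) and then processes it in batches of batch_size. A simplified NumPy sketch of that loop, with a hypothetical iterate_minibatches generator and toy data, is shown below; the actual Keras data pipeline is more involved.

```python
import numpy as np


def iterate_minibatches(x, y, batch_size, rng):
    """Yield shuffled (x_batch, y_batch) pairs covering every sample once,
    i.e. one epoch; the last batch may be smaller than batch_size."""
    order = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]


rng = np.random.default_rng(0)
x = np.arange(10).reshape(10, 1)  # 10 toy samples with one feature each
y = np.arange(10)                 # matching toy labels

for epoch in range(2):
    sizes = [len(xb) for xb, _ in iterate_minibatches(x, y, batch_size=4, rng=rng)]
    print(epoch, sizes)  # batch sizes are [4, 4, 2] in every epoch
```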

We then call the model's evaluate method to measure its performance (loss and accuracy) on the unseen test set.

Finally, you can call the save method on the model object to save the model for deployment in a production environment.

7. Summary

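In summary, we have covered some introductory knowledge of training a deep neural network for an image classification task. You can use it as a starting point for getting familiar with neural networks for image classification, and as a guide to choosing the right set of parameters and understanding the reasoning behind the architecture.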

Original article: https://www.kdnuggets.com/2022/12/guide-train-image-classification-model-tensorflow.html
