
Image takes ages to load and is still a mosaic? Google's open-source model shows the most interesting parts of an image first

- WBOY

- 2023-04-13 17:10:03

When you look at an image, which content do you notice first, and which regions draw your attention first? Can a machine learn this form of human attention? Google has open-sourced an attention center model that does just that, and the model can be used with the JPEG XL image format.

For example, the figure below shows some example predictions of the attention center model; the green dot marks the predicted attention center of each image.

Images from the Kodak image dataset: http://r0k.us/graphics/kodak/

The attention center model is a 2 MB TensorFlow Lite model. It takes an RGB image as input and outputs a 2D point: the predicted center of attention on the image.

To train a model to predict attention centers, you first need ground-truth attention center data. Given an image, attention points can be collected with an eye tracker or approximated by having people click on the image with a mouse. The study first filters these points temporally, keeping only the initial attention points, and then filters them spatially to remove noise. Finally, the center of the remaining points is taken as the ground-truth attention center. An example illustration of this process is shown below.
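The filtering pipeline described above can be sketched in a few lines. This is a minimal illustration, not the study's actual code: the time window `t_max`, the noise radius `max_dist`, the use of the coordinate-wise median as the reference for spatial filtering, and the normalized `(x, y, t)` point format are all hypothetical choices made for the example.

```python
from statistics import median
from typing import List, Tuple

# Hypothetical point format: (x, y, timestamp in seconds), x and y normalized to [0, 1].
Point = Tuple[float, float, float]


def attention_center(points: List[Point], t_max: float = 1.0,
                     max_dist: float = 0.2) -> Tuple[float, float]:
    """Estimate a ground-truth attention center (assumed thresholds, see lead-in)."""
    # Temporal filtering: keep only the early attention points (t <= t_max).
    early = [(x, y) for x, y, t in points if t <= t_max]
    if not early:
        raise ValueError("no attention points within the time window")

    # Spatial filtering: treat points far from the median location as noise.
    mx = median(x for x, _ in early)
    my = median(y for _, y in early)
    kept = [(x, y) for x, y in early
            if ((x - mx) ** 2 + (y - my) ** 2) ** 0.5 <= max_dist]
    if not kept:
        # Assumption: if every point looks like noise, fall back to the early points.
        kept = early

    # The center (mean) of the remaining points is the ground-truth attention center.
    cx = sum(x for x, _ in kept) / len(kept)
    cy = sum(y for _, y in kept) / len(kept)
    return cx, cy
```

With three clicks clustered near the middle, one stray early click in a corner, and one late click, only the cluster survives both filters and the center lands at roughly (0.5, 0.5).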

Project address: https://github.com/google/attention-center

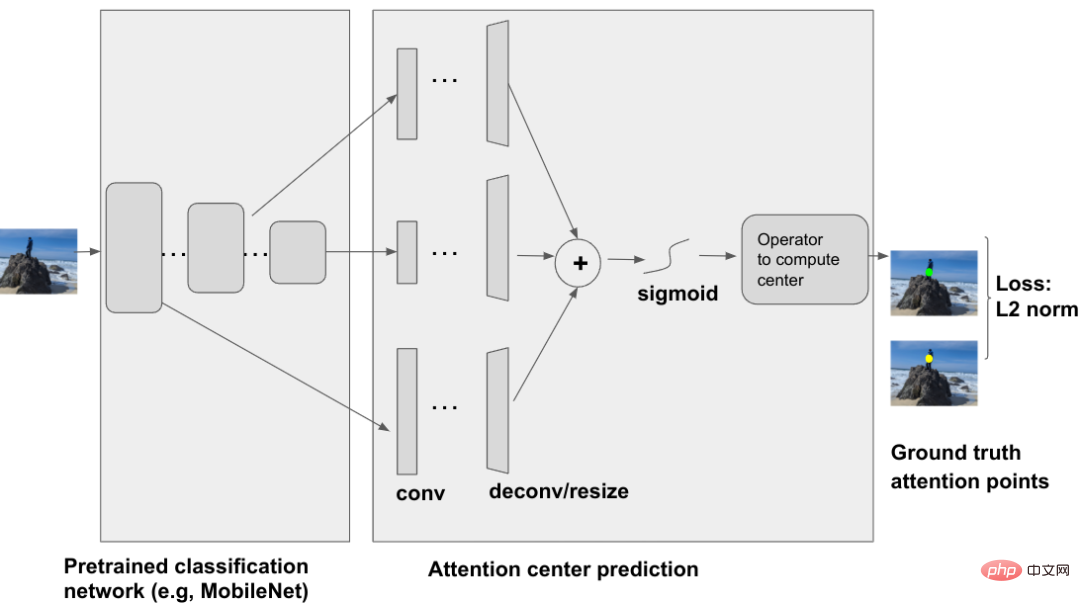

## Attention center model architecture

The attention center model is a deep neural network that takes an image as input and uses a pre-trained classification network, such as ResNet or MobileNet, as its backbone. The outputs of several intermediate layers of the backbone are fed into the attention center prediction module. These layers carry different information: shallow layers usually capture low-level features such as intensity, color, and texture, while deeper layers usually capture higher-level, more semantic features such as shapes and objects.
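Because the intermediate layers have different spatial resolutions, their outputs have to be brought to a common size before they can be combined. The sketch below is a simplified stand-in, not the model's actual layers: it assumes each layer's output has already been reduced to a single-channel 2D map, and uses nearest-neighbor upsampling plus summation in place of the learned deconvolution/resizing and aggregation operators. The function names are hypothetical.

```python
import numpy as np


def upsample_nearest(fmap: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbor upsampling of a 2D map by integer factors."""
    h, w = fmap.shape
    if out_h % h or out_w % w:
        raise ValueError("output size must be an integer multiple of the input size")
    # Repeat each row, then each column, to reach the target resolution.
    return np.repeat(np.repeat(fmap, out_h // h, axis=0), out_w // w, axis=1)


def aggregate_layers(fmaps: list) -> np.ndarray:
    """Resize maps from several layers to the largest resolution and sum them."""
    out_h = max(f.shape[0] for f in fmaps)
    out_w = max(f.shape[1] for f in fmaps)
    total = np.zeros((out_h, out_w))
    for f in fmaps:
        total += upsample_nearest(f, out_h, out_w)
    return total
```

For example, a 4 x 4 map from a shallow layer and a 2 x 2 map from a deep layer combine into one 4 x 4 map in which each deep-layer value covers a 2 x 2 block.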

The attention center prediction module applies convolution, deconvolution and/or resizing operators, together with aggregation and a sigmoid function, to generate a weight map for the attention center. An operator (here, the Einstein summation operator) then computes the center from the weight map. The L2 norm between the predicted and ground-truth attention centers is used as the training loss.
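The last two steps, turning a weight map into a center with Einstein summation and scoring it with an L2 norm, can be illustrated with NumPy. This is a sketch under stated assumptions: the input is a single-channel logit map, the sigmoid weights are normalized to sum to 1, and coordinates are expressed in normalized `[0, 1]` units at pixel centers. None of these details are confirmed by the source, and the function names are hypothetical.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def center_from_weight_map(logits: np.ndarray) -> np.ndarray:
    """Return the (x, y) center, in normalized coordinates, of an H x W logit map."""
    h, w = logits.shape
    weights = sigmoid(logits)
    weights = weights / weights.sum()  # assumption: normalize weights to sum to 1
    xs = (np.arange(w) + 0.5) / w      # pixel-center x coordinates in [0, 1]
    ys = (np.arange(h) + 0.5) / h      # pixel-center y coordinates in [0, 1]
    # grid has shape (h, w, 2); grid[..., 0] is x and grid[..., 1] is y.
    grid = np.stack(np.meshgrid(xs, ys), axis=-1)
    # Einstein summation: weighted sum of coordinates over every position.
    return np.einsum("hw,hwc->c", weights, grid)


def l2_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """L2 norm between predicted and ground-truth centers."""
    return float(np.linalg.norm(pred - target))
```

A uniform map yields the image center (0.5, 0.5), while a map that is strongly activated in a single cell pulls the center onto that cell. Using a weighted sum rather than an argmax keeps the center differentiable, which is what allows the L2 loss to be trained end to end.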

Additionally, JPEG XL is a new image format that lets users encode an image so that its interesting parts are shown first. The advantage is that when users browse images online, the most attractive parts of an image, which are the parts they look at first, can be displayed first. Ideally, by the time the user looks at the rest of the image, those parts are already in place and decoded.

In JPEG XL, an image is usually divided into groups of 256 x 256 pixels. The JPEG XL encoder selects a starting group in the image and then grows concentric squares around that group. Chrome has supported progressive decoding of JPEG XL images since version 107. JPEG XL support is still experimental and can be enabled by searching for jxl in chrome://flags.
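The group ordering described above can be sketched as follows: split the image into 256 x 256 groups, start from the group that contains the predicted attention center, and emit the remaining groups ring by ring, where each ring is one concentric square (Chebyshev distance) further out. This is an illustration of the idea, not libjxl's implementation; the function name is hypothetical, and the row-major tie-breaking within a ring is an arbitrary assumption.

```python
from typing import List, Tuple


def group_order(width: int, height: int, center_x: float, center_y: float,
                group_size: int = 256) -> List[Tuple[int, int]]:
    """Return (row, col) groups ordered by concentric squares around the center."""
    cols = -(-width // group_size)   # ceiling division
    rows = -(-height // group_size)
    # Group containing the attention center, clamped to the grid.
    start_row = min(max(int(center_y) // group_size, 0), rows - 1)
    start_col = min(max(int(center_x) // group_size, 0), cols - 1)

    def ring(rc: Tuple[int, int]) -> int:
        r, c = rc
        # Chebyshev distance = index of the concentric square around the start group.
        return max(abs(r - start_row), abs(c - start_col))

    groups = [(r, c) for r in range(rows) for c in range(cols)]
    # Sort by ring; ties within a ring are broken in row-major order (assumption).
    return sorted(groups, key=lambda rc: (ring(rc), rc))
```

For a 768 x 768 image whose attention center is at (400, 400), the center group (1, 1) comes first, followed by the eight groups of the first ring.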

To see the effect of progressive loading of JPEG XL images, visit:

https://google.github.io/attention-center/

