
The super-evolved version of Meta's "Segment Anything" is here! IDEA leads a top domestic team to build a project that detects, segments, and generates anything, earning 2k stars

- WBOY (reposted)

- 2023-04-13 14:40:03 (1988 views)

After Meta released its Segment Anything Model (SAM), people in the industry exclaimed that computer vision (CV) no longer exists.

Just one day after SAM was released, a domestic team built an evolved version on top of it: Grounded-SAM.

Note: The team spent an hour making the project logo with Midjourney.

Grounded-SAM integrates SAM with BLIP and Stable Diffusion, combining the three capabilities of image segmentation, detection, and generation in one project and becoming the most powerful zero-shot vision application.

Netizens remarked that the competition is getting far too intense!

Wenhu Chen, a research scientist at Google Brain and an assistant professor of computer science at the University of Waterloo, said "This is too fast."

AI veteran Shen Xiangyang also recommended this latest project to everyone:

Grounded-Segment-Anything: Automatically detect, segment, and generate anything with image and text input. Edge segmentation can be further improved.

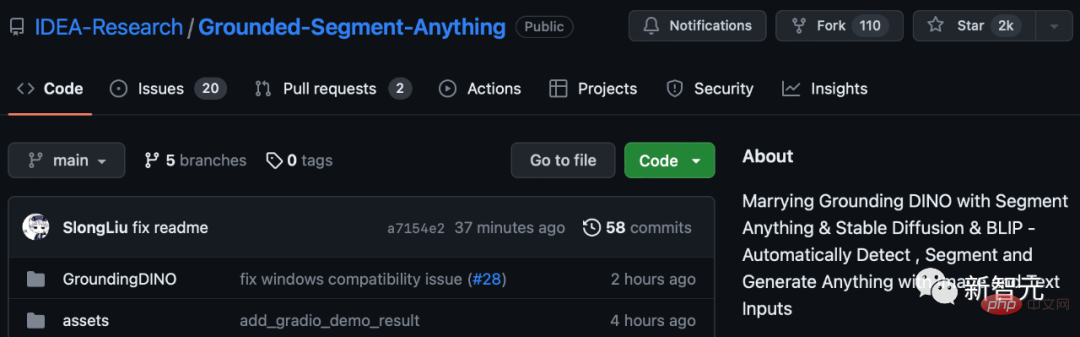

So far, this project has garnered 2k stars on GitHub.

Detect everything, segment everything, generate everything

Last week's release of SAM brought CV its GPT-3 moment. Meta AI even claims that this is the first foundation model for image segmentation.

Within a unified prompt-encoder framework, the model accepts a point, a bounding box, or a sentence as a prompt and segments any object with one click.

SAM generalizes broadly: its zero-shot transfer ability is enough to cover a wide range of use cases. Without additional training, it can be used out of the box in new imaging domains, whether underwater photos or cell microscopy.

In short, SAM is extremely capable.

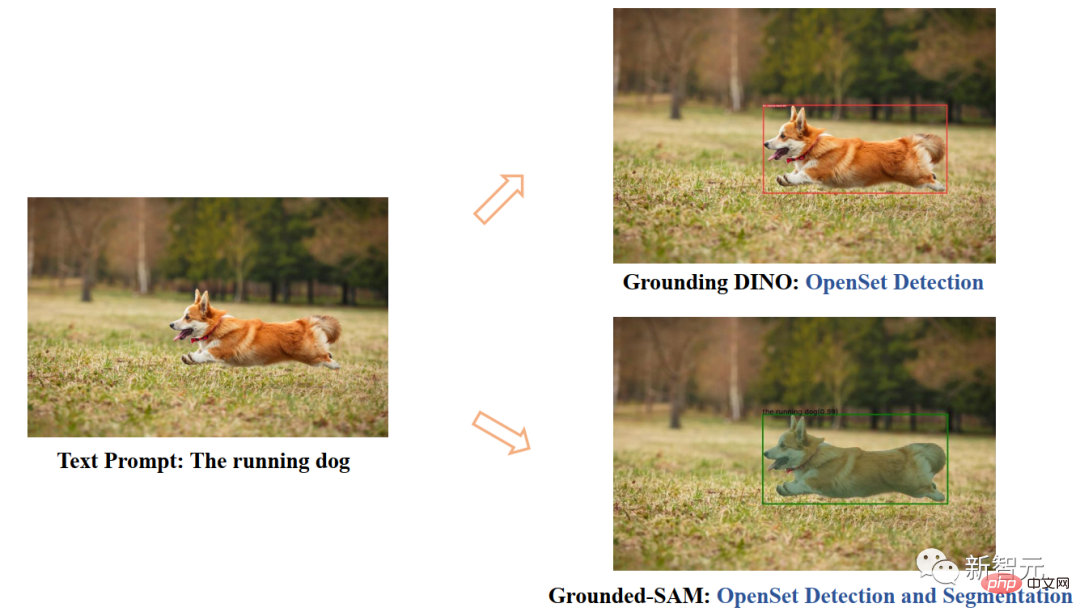

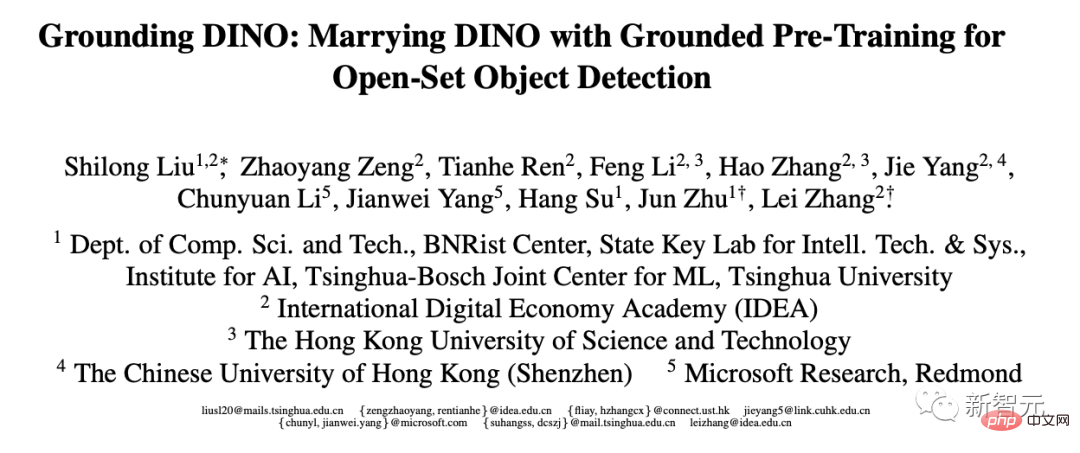

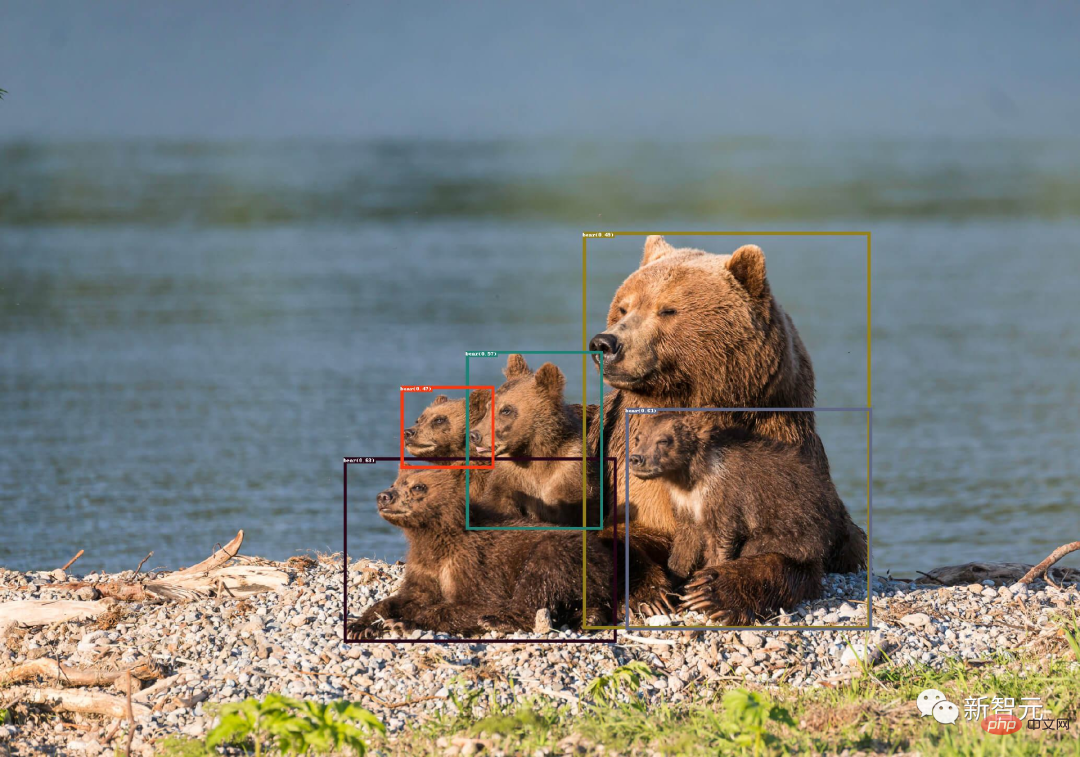

Now, domestic researchers have built on this model with a new idea: by combining it with Grounding DINO, a powerful zero-shot object detector, they can detect and segment everything from text input.

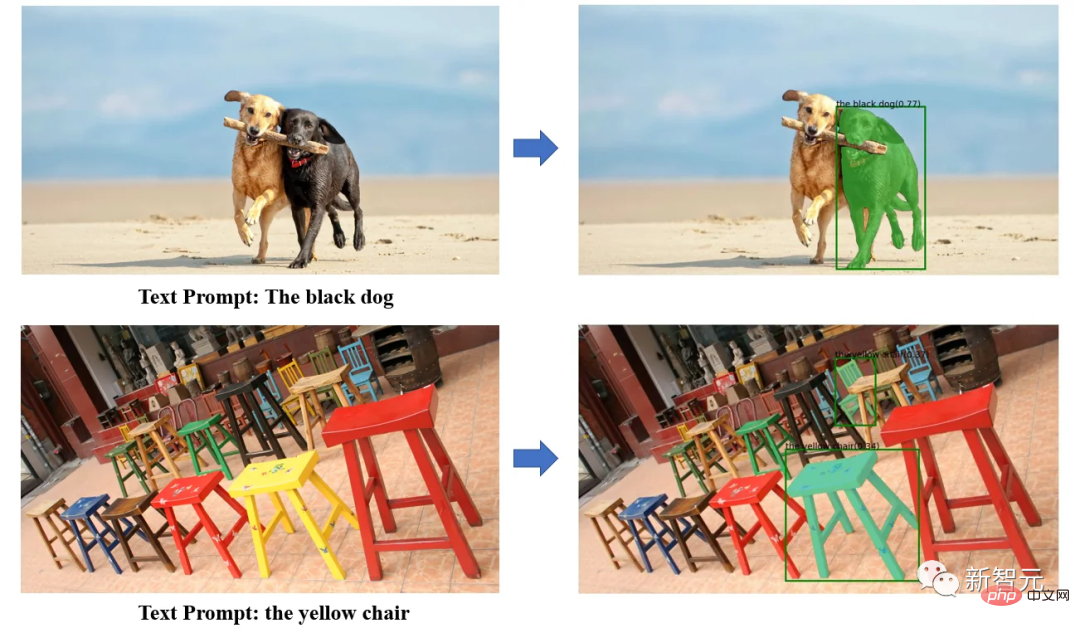

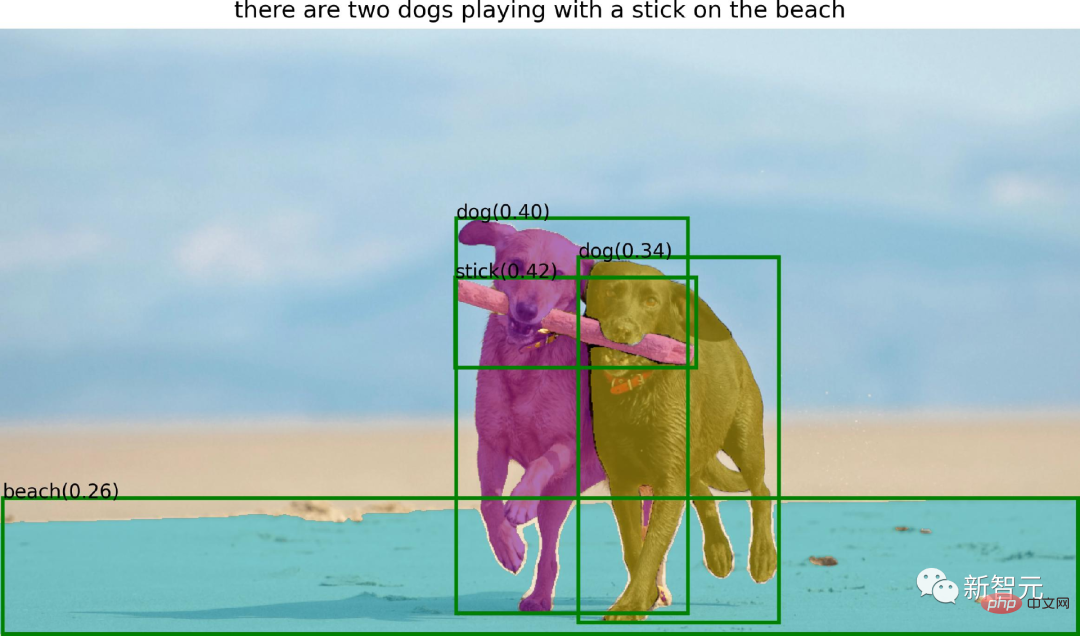

With Grounding DINO's powerful zero-shot detection capability, Grounded-SAM can find any object in an image from a text description, and then use SAM's powerful segmentation capability to produce fine-grained masks.

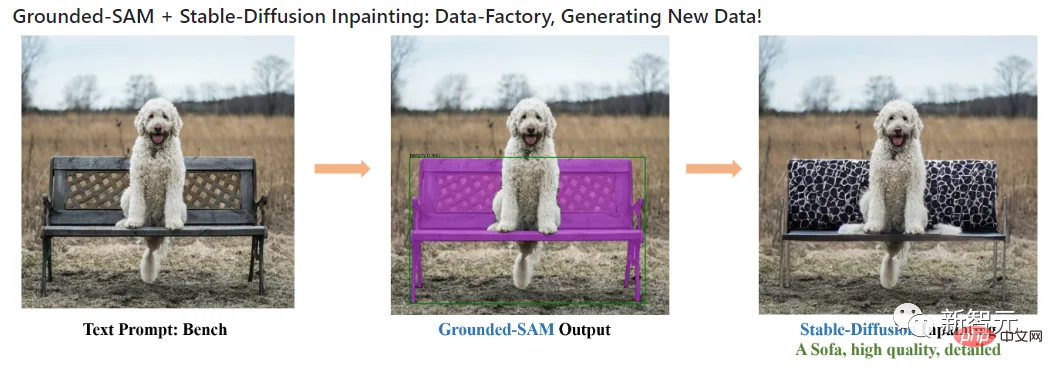

Finally, Stable Diffusion can be used for controllable text-to-image generation in the segmented regions.

In practice, the researchers combined Segment-Anything with three powerful zero-shot models to build an automatic labeling pipeline, and demonstrated very impressive results!

This project combines the following models:

· BLIP: Powerful image captioning model

· Grounding DINO: State-of-the-art zero-shot detector

· Segment-Anything: Powerful zero-shot segmentation model

· Stable-Diffusion: Excellent generative model

The models can be used in combination or independently to build a powerful visual workflow. The entire workflow can detect everything, segment everything, and generate everything.

Features of the system include:

BLIP + Grounded-SAM = Automatic Labeler

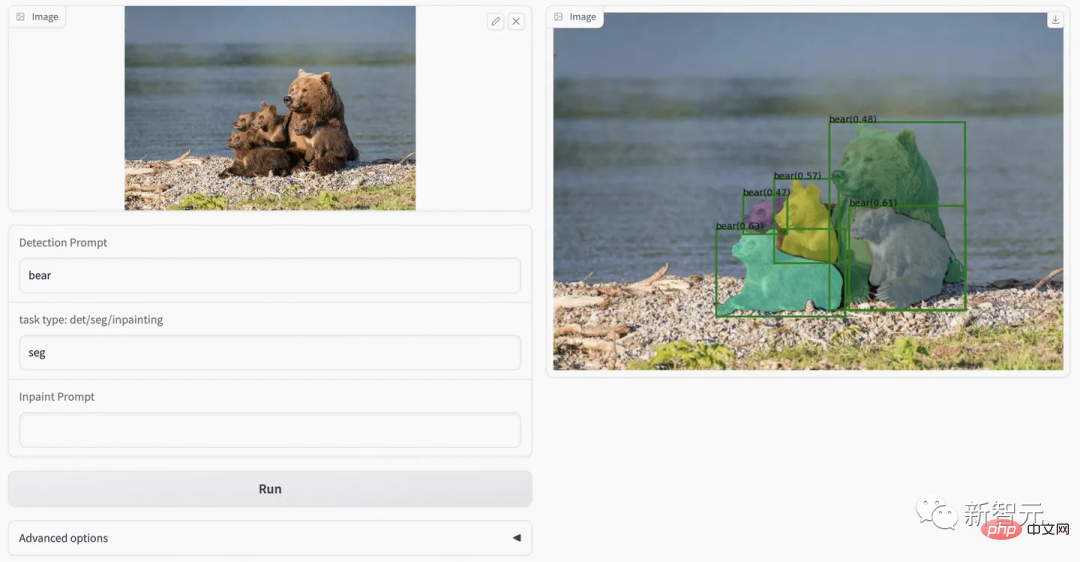

Use the BLIP model to generate captions and extract tags, then use Grounded-SAM to generate boxes and masks:

· Semi-automatic annotation system: detects objects from the input text and provides precise box and mask annotations.

· Fully automatic annotation system:

First use the BLIP model to generate a reliable caption for the input image, then let Grounding DINO detect the entities in the caption, and finally let SAM perform instance segmentation using their boxes as prompts.
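The fully automatic flow described above can be sketched as a chain of three stages. This is a minimal sketch under stated assumptions, not the project's code: the `caption_fn`, `detect_fn`, and `segment_fn` callables and the `Annotation` type are hypothetical stand-ins for BLIP, Grounding DINO, and SAM, and the tag extraction is a naive stop-word filter chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


@dataclass
class Annotation:
    tag: str   # word taken from the caption
    box: Box   # box proposed by the detector for that word
    mask: Any  # whatever the segmenter returns, e.g. a boolean array


# Hypothetical stop-word list; a real system would use a parser or a language model.
STOP_WORDS = {"a", "an", "the", "on", "in", "of", "with", "and", "is", "are", "at", "to"}


def extract_tags(caption: str) -> List[str]:
    """Naively turn a caption into candidate tags by dropping stop words."""
    tags: List[str] = []
    for word in caption.lower().replace(",", " ").replace(".", " ").split():
        if word not in STOP_WORDS and word not in tags:
            tags.append(word)
    return tags


def auto_label(
    image: Any,
    caption_fn: Callable[[Any], str],
    detect_fn: Callable[[Any, str], List[Box]],
    segment_fn: Callable[[Any, Box], Any],
) -> List[Annotation]:
    """Caption the image, detect each tag, then segment each detected box."""
    caption = caption_fn(image)            # stage 1: the captioner's role
    annotations: List[Annotation] = []
    for tag in extract_tags(caption):      # stage 2: the detector's role
        for box in detect_fn(image, tag):
            mask = segment_fn(image, box)  # stage 3: the segmenter's role (box prompt)
            annotations.append(Annotation(tag, box, mask))
    return annotations
```

Passing the models in as callables keeps the sketch independent of any particular library; each stage can be swapped or tested with a fake.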

Stable Diffusion + Grounded-SAM = Data Factory

· Used as a data factory to generate new data: diffusion inpainting models can generate new data conditioned on masks.
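The role of the mask in generating new data can be illustrated without any model. Below is a minimal sketch in plain Python, assuming images are nested lists of pixel values and the mask is a same-sized grid of booleans; `composite` is a hypothetical helper, not part of the project. It mirrors the contract of mask-guided inpainting: only masked pixels change, and everything outside the mask is preserved.

```python
from typing import Any, List, Sequence


def composite(
    original: Sequence[Sequence[Any]],
    generated: Sequence[Sequence[Any]],
    mask: Sequence[Sequence[bool]],
) -> List[List[Any]]:
    """Take generated pixels where mask is True, original pixels elsewhere."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated, and mask must have the same height")
    out: List[List[Any]] = []
    for o_row, g_row, m_row in zip(original, generated, mask):
        if not (len(o_row) == len(g_row) == len(m_row)):
            raise ValueError("original, generated, and mask must have the same width")
        out.append([g if m else o for o, g, m in zip(o_row, g_row, m_row)])
    return out
```

In a data-factory setting, the mask would come from the segmentation step and the generated pixels from an inpainting model; the surrounding annotations remain valid because unmasked pixels are untouched.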

Segment Anything + HumanEditing

In this branch, the author uses Segment Anything to edit people's hair/face.

· SAM Hair Editor

· SAM Fashion Editor

Automatically generating images to build new datasets; a more powerful foundation model pre-trained for segmentation; collaboration with (Chat-)GPT models; and a complete pipeline that automatically annotates images (including bounding boxes and masks) and generates new images.

Author introduction

One of the researchers on the Grounded-SAM project is Liu Shilong, a third-year doctoral student in the Department of Computer Science at Tsinghua University. He recently introduced the latest project he and his team built on GitHub, and said it is still being improved.

Liu Shilong is currently an intern at the Computer Vision and Robotics Research Center of the Guangdong-Hong Kong-Macao Greater Bay Area Digital Economy Research Institute (IDEA Research Institute), advised by Professor Zhang Lei. His research interests include object detection and multimodal learning. Before that, he received a bachelor's degree in Industrial Engineering from Tsinghua University in 2020 and interned at Megvii for a period in 2019.

Personal homepage: http://www.lsl.zone/

Liu Shilong is also the first author of Grounding DINO, the object detection model released in March this year. In addition, 4 of his papers were accepted by CVPR 2023, 2 by ICLR 2023, and 1 by AAAI 2023.

Paper address: https://arxiv.org/pdf/2303.05499.pdf

Ren Tianhe, whom Liu Shilong called the "big boss", is currently a computer vision algorithm engineer at the IDEA Research Institute, also advised by Professor Zhang Lei. His main research directions are object detection and multimodal learning.

In addition, the project's collaborators include Li Kunchang, a third-year doctoral student at the University of Chinese Academy of Sciences, whose main research directions are video understanding and multimodal learning; Cao He, an intern at the Computer Vision and Robotics Research Center of the IDEA Research Institute, whose main research direction is generative models; and Chen Jiayu, a senior algorithm engineer at Alibaba Cloud.

Ren Tianhe, Liu Shilong

The automatic labeling workflow has three steps:

1. Use BLIP (or another captioning model) to generate a caption.
2. Extract tags from the caption, using ChatGPT to handle potentially complex sentences.
3. Use Grounded-Segment-Anything to generate boxes and masks.

Install and run

Install Segment Anything:

python -m pip install -e segment_anything

python -m pip install -e GroundingDINO

pip install --upgrade diffusers[torch]

pip install opencv-python pycocotools matplotlib onnxruntime onnx ipykernel

Download the Grounding DINO checkpoint:

cd Grounded-Segment-Anything

wget https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha/groundingdino_swint_ogc.pth

Run the Grounding DINO demo:

export CUDA_VISIBLE_DEVICES=0

python grounding_dino_demo.py --config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py --grounded_checkpoint groundingdino_swint_ogc.pth --input_image assets/demo1.jpg --output_dir "outputs" --box_threshold 0.3 --text_threshold 0.25 --text_prompt "bear" --device "cuda"
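For scripted or batched runs, the same invocation can be assembled in Python. This is a sketch only: `build_demo_command` is a hypothetical helper, and the script name, flag names, and default values are copied from the command above rather than checked against the script itself.

```python
import shlex
import sys
from typing import List


def build_demo_command(
    text_prompt: str,
    input_image: str = "assets/demo1.jpg",
    output_dir: str = "outputs",
    box_threshold: float = 0.3,
    text_threshold: float = 0.25,
    device: str = "cuda",
) -> List[str]:
    """Build the argument list for the Grounding DINO demo shown in the article."""
    return [
        sys.executable, "grounding_dino_demo.py",
        "--config", "GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py",
        "--grounded_checkpoint", "groundingdino_swint_ogc.pth",
        "--input_image", input_image,
        "--output_dir", output_dir,
        "--box_threshold", str(box_threshold),
        "--text_threshold", str(text_threshold),
        "--text_prompt", text_prompt,
        "--device", device,
    ]


if __name__ == "__main__":
    cmd = build_demo_command("bear")
    # Print the command for inspection; to execute it from the repository root,
    # pass the list to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Building the command as a list avoids shell quoting problems when the text prompt contains spaces.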

Grounded-Segment-Anything + BLIP Demonstration

Automatically generating pseudo-labels is simple:

export CUDA_VISIBLE_DEVICES=0

python automatic_label_demo.py --config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py --grounded_checkpoint groundingdino_swint_ogc.pth --sam_checkpoint sam_vit_h_4b8939.pth --input_image assets/demo3.jpg --output_dir "outputs" --openai_key your_openai_key --box_threshold 0.25 --text_threshold 0.2 --iou_threshold 0.5 --device "cuda"

The pseudo-labels and the model prediction visualizations are saved in output_dir.
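The thresholds passed to the labeling command are easier to reason about with a small example. The sketch below is an assumption about how such flags are typically used, not the script's actual code: detections scoring at or below `box_threshold` are discarded, and greedy non-maximum suppression then drops any box that overlaps a higher-scoring kept box by more than `iou_threshold`. The `text_threshold` flag, which presumably governs how boxes are matched to words in the prompt, is not modeled. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(
    boxes: Sequence[Box],
    scores: Sequence[float],
    box_threshold: float = 0.25,
    iou_threshold: float = 0.5,
) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    # Step 1: keep only boxes scoring above the box threshold.
    candidates = sorted(
        (i for i, s in enumerate(scores) if s > box_threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    # Step 2: greedy NMS, keeping a box only if it does not overlap
    # an already kept box by more than the IoU threshold.
    kept: List[int] = []
    for i in candidates:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept
```

Raising `box_threshold` trades recall for precision, while lowering `iou_threshold` removes near-duplicate boxes more aggressively.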

Grounded-Segment-Anything + Inpainting Demonstration

CUDA_VISIBLE_DEVICES=0 python grounded_sam_inpainting_demo.py --config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py --grounded_checkpoint groundingdino_swint_ogc.pth --sam_checkpoint sam_vit_h_4b8939.pth --input_image assets/inpaint_demo.jpg --output_dir "outputs" --box_threshold 0.3 --text_threshold 0.25 --det_prompt "bench" --inpaint_prompt "A sofa, high quality, detailed" --device "cuda"

Grounded-Segment-Anything + Inpainting Gradio App

python gradio_app.py

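The authors provide a visual web page here that makes it easier to try out various examples.

Netizen comments

The project logo also has a deeper meaning:

It shows a mosaic-style bear sitting on the ground. The bear sits on the ground because "ground" also means the ground; a segmented image can be regarded as a kind of mosaic style, and the Chinese word for "mosaic" (马赛克) sounds like "mask". A bear is the main subject of the logo because the image the authors mainly use as their example is a bear.

After seeing Grounded-SAM, netizens said they knew something like it was coming, but did not expect it to arrive so quickly.

Project author Ren Tianhe said, "The zero-shot detector we use is the best available at present."

A web demo will also go online in the future.

Finally, the authors said that the project can be extended to more applications based on generative models in the future, such as fine-grained editing across multiple domains and building high-quality, trustworthy data factories. People from all fields are welcome to take part.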
