With only so much computing power, how to improve language model performance? Google has a new idea

In recent years, language models (LMs) have become more prominent in natural language processing (NLP) research and increasingly influential in practice. In general, increasing the size of a model has been shown to improve performance across a range of NLP tasks.

However, scaling up a model has an obvious cost: training new, larger models requires a lot of computing resources. In addition, new models are often trained from scratch and cannot reuse the trained weights of previous models.

To address this problem, Google researchers explored two complementary methods that significantly improve the performance of existing language models without consuming a lot of additional computing resources.

First, in the paper "Transcending Scaling Laws with 0.1% Extra Compute", the researchers introduced UL2R, a lightweight second stage of pre-training that uses a mixture-of-denoisers objective. UL2R improves performance on a range of tasks and even unlocks sudden gains on tasks where performance was previously near random.

Paper link: https://arxiv.org/pdf/2210.11399.pdf

In addition, in "Scaling Instruction-Finetuned Language Models", we explore fine-tuning a language model on a collection of datasets phrased as instructions, a process we call "Flan". This approach not only improves performance but also makes the language model more usable with respect to user input.

Paper link: https://arxiv.org/abs/2210.11416

Finally, Flan and UL2R can be combined as complementary techniques in a model called Flan-U-PaLM 540B, which outperforms the untuned PaLM 540B model by 10% on a range of challenging evaluation benchmarks.

Training of UL2R

Traditionally, most language models are pre-trained either on a causal language modeling objective, where the model predicts the next word in a sequence (like GPT-3 or PaLM), or on a denoising objective, where the model learns to recover the original sentence from a corrupted sequence of words (like T5).

Each objective has trade-offs: causal language models are better at long-form generation, while language models trained on a denoising objective are better at fine-tuning. In previous work, however, the researchers showed that a mixture-of-denoisers objective that includes both achieves better performance in both scenarios.
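To make the difference between these objectives concrete, here is a minimal Python sketch of how a training example might be built under each one, and how a mixture could pick between them per example. It is an illustration under stated assumptions, not the UL2 implementation: the function names, the single-span corruption, the three-way choice, and the span lengths are all hypothetical. The `<extra_id_0>` sentinel follows the naming used by T5-style tokenizers.

```python
import random
from typing import List, Tuple

Example = Tuple[List[str], List[str]]  # (model input, prediction target)

def prefix_lm_example(tokens: List[str], split: int) -> Example:
    """Causal-style view: condition on a prefix and predict the continuation."""
    return tokens[:split], tokens[split:]

def span_corruption_example(tokens: List[str], start: int, length: int) -> Example:
    """Denoising view: hide one span behind a sentinel and predict the hidden tokens."""
    sentinel = "<extra_id_0>"
    inputs = tokens[:start] + [sentinel] + tokens[start + length:]
    targets = [sentinel] + tokens[start:start + length]
    return inputs, targets

def mixture_example(tokens: List[str], rng: random.Random) -> Example:
    """Mixture: choose one objective at random for each training example.

    The three choices and the span lengths are illustrative assumptions.
    """
    kind = rng.choice(["prefix_lm", "short_span", "long_span"])
    if kind == "prefix_lm":
        return prefix_lm_example(tokens, rng.randint(1, len(tokens) - 1))
    length = 1 if kind == "short_span" else max(2, len(tokens) // 2)
    start = rng.randint(0, len(tokens) - length)
    return span_corruption_example(tokens, start, length)

tokens = "the cat sat on the mat".split()

# (['the', 'cat', 'sat'], ['on', 'the', 'mat'])
print(prefix_lm_example(tokens, 3))

# (['the', '<extra_id_0>', 'on', 'the', 'mat'], ['<extra_id_0>', 'cat', 'sat'])
print(span_corruption_example(tokens, 1, 2))

# A few examples drawn from the mixture with a seeded generator
rng = random.Random(0)
for _ in range(3):
    print(mixture_example(tokens, rng))
```

In this sketch the model sees the same text under different input/target splits, which is the intuition behind training one model on both kinds of objective.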

However, pre-training a large language model from scratch on a different objective is computationally prohibitive. Therefore, we propose UL2 Repair (UL2R), an additional stage that continues pre-training with the UL2 objective and requires only a relatively small amount of compute.

We apply UL2R to PaLM and call the resulting new language model U-PaLM.

In our empirical evaluation, we found that with only a small amount of UL2 training, the model improved significantly.

For example, by applying UL2R to an intermediate checkpoint of PaLM 540B, we reach the performance of the final PaLM 540B checkpoint while using roughly half the compute. Applying UL2R to the final PaLM 540B checkpoint also brings large improvements.

Comparison of compute and model performance of PaLM 540B and U-PaLM 540B on 26 NLP benchmarks. U-PaLM 540B continues training PaLM with a very small amount of additional compute but a large improvement in performance.

Another benefit of UL2R is that it performs much better on some tasks than models trained purely on the causal language modeling objective. For example, many BIG-Bench tasks exhibit so-called "emergent abilities", which are abilities that only appear in sufficiently large language models.

While the most common way to discover emergent abilities is to scale up the model, UL2R can actually elicit emergent abilities without increasing the model size.

For example, on the Navigate task of BIG-Bench, which measures the model's ability to perform state tracking, all models with fewer than 10^23 training FLOPs except U-PaLM achieve near-random performance. Another example is BIG-Bench's Snarks task, which measures a model's ability to detect sarcastic language.

On both of these BIG-Bench tasks, which demonstrate emergent performance, U-PaLM achieves emergence at a smaller model size because of the UL2R objective.

Instruction fine-tuning

In the second paper, we explore instruction fine-tuning, which involves fine-tuning an LM on a collection of NLP datasets phrased as instructions.

In previous work, we applied instruction fine-tuning to a 137B-parameter model on 62 NLP tasks, such as answering a short question, classifying the sentiment expressed about a movie, or translating a sentence into Spanish.

In this work, we fine-tune a 540B-parameter language model on over 1.8K tasks. Furthermore, previous work fine-tuned language models either with few-shot examples (e.g., MetaICL) or zero-shot with no examples (e.g., FLAN, T0), whereas we fine-tune on a combination of both.

We also include chain-of-thought fine-tuning data, which enables the model to perform multi-step reasoning. We call our improved method for fine-tuning language models "Flan".

It is worth noting that even when fine-tuning on 1.8K tasks, Flan uses only a fraction of the compute required for pre-training (for PaLM 540B, Flan requires only 0.2% of the pre-training compute).

The language model is fine-tuned on 1.8K tasks formulated as instructions and evaluated on new tasks that are not included in fine-tuning. Fine-tuning is performed with and without examples (i.e., few-shot and zero-shot) and with and without chain-of-thought, allowing the model to generalize across a range of evaluation scenarios.
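The four fine-tuning settings described above (with or without examples, with or without chain-of-thought) can be sketched as a single prompt-formatting function. This is a minimal illustration, not the template used in the paper: the `format_prompt` name, the `Q:`/`A:` layout, and the step-by-step trigger phrase are assumptions made for this example.

```python
from typing import Sequence, Tuple

# (question, rationale, answer) for one worked example; hypothetical structure
Exemplar = Tuple[str, str, str]

def format_prompt(
    instruction: str,
    question: str,
    exemplars: Sequence[Exemplar] = (),
    chain_of_thought: bool = False,
) -> str:
    """Build a zero-shot or few-shot prompt, optionally with reasoning steps."""
    lines = [instruction]
    for ex_question, ex_rationale, ex_answer in exemplars:
        lines.append(f"Q: {ex_question}")
        if chain_of_thought:
            # Few-shot exemplars show the reasoning before the final answer
            lines.append(f"A: {ex_rationale} So the answer is {ex_answer}.")
        else:
            lines.append(f"A: {ex_answer}")
    lines.append(f"Q: {question}")
    lines.append("A: Let's think step by step." if chain_of_thought else "A:")
    return "\n".join(lines)

exemplars = [("What is 1 + 1?", "1 plus 1 makes 2.", "2")]

zero_shot = format_prompt("Answer the question.", "What is 2 + 3?")
few_shot_cot = format_prompt(
    "Answer the question.", "What is 2 + 3?", exemplars, chain_of_thought=True
)
print(zero_shot)
print("---")
print(few_shot_cot)
```

Mixing all four variants in the fine-tuning data is what lets one model handle each prompting style at evaluation time.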

In this paper, we instruction fine-tune LMs of a range of sizes in order to study the joint effect of scaling the size of the language model and increasing the number of fine-tuning tasks.

For example, for the PaLM family of language models, this includes 8B, 62B and 540B parameters. We evaluate our models on four challenging benchmark suites (MMLU, BBH, TyDiQA, and MGSM) and find that both scaling the number of parameters and increasing the number of fine-tuning tasks improve performance on new, previously unseen tasks.

Scaling the model to 540B parameters and using 1.8K fine-tuning tasks both improve performance. The y-axis of the figure is the normalized mean over the four evaluation suites (MMLU, BBH, TyDiQA and MGSM).
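As a rough illustration of what a normalized mean over several suites could look like, the sketch below rescales each raw score against a random-guess baseline and then averages the results. The exact normalization used in the paper is not given in this article, so the formula here is an assumption, and the suite names and numbers are hypothetical placeholders rather than reported results.

```python
from typing import Dict, Tuple

def normalize(score: float, random_baseline: float, max_score: float = 100.0) -> float:
    """Rescale so that random guessing maps to 0 and a perfect score maps to 100."""
    return 100.0 * (score - random_baseline) / (max_score - random_baseline)

def normalized_mean(results: Dict[str, Tuple[float, float]]) -> float:
    """Average the normalized scores, giving each suite equal weight."""
    normalized = [normalize(score, baseline) for score, baseline in results.values()]
    return sum(normalized) / len(normalized)

# Hypothetical (raw score, random baseline) pairs, NOT numbers from the paper
results = {
    "suite_a": (62.5, 25.0),  # e.g. a four-way multiple-choice suite
    "suite_b": (50.0, 0.0),   # e.g. a free-form generation suite
}

print(normalized_mean(results))  # 50.0
```

Normalizing before averaging keeps a suite with a high random baseline from inflating the aggregate score.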

In addition to better performance, an instruction fine-tuned LM is able to respond to user instructions at inference time without few-shot examples or prompt engineering. This makes LMs more user-friendly across a range of inputs. For example, LMs without instruction fine-tuning sometimes repeat the input or fail to follow instructions, but instruction fine-tuning mitigates such errors.

Our instruction fine-tuned language model Flan-PaLM responds better to instructions than the PaLM model without instruction fine-tuning.

Combining the two to achieve "1+1>2"

Finally, we show that UL2R and Flan can be combined to train the Flan-U-PaLM model.

Since Flan uses new data from NLP tasks and enables zero-shot instruction following, we apply Flan as the second method, after UL2R.

We again evaluate on the four benchmark suites and find that the Flan-U-PaLM model outperforms PaLM models with only UL2R (U-PaLM) or only Flan (Flan-PaLM). Furthermore, when combined with chain-of-thought prompting and self-consistency, Flan-U-PaLM reaches a new SOTA on the MMLU benchmark with a score of 75.4%.
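Self-consistency, as commonly described, samples several chain-of-thought completions for the same question and keeps the final answer that appears most often. The sketch below shows only that voting step. The `sample_answer` callable is a hypothetical stand-in for a model call that returns the final answer extracted from one sampled reasoning chain, and the fixed answer list is made up for illustration.

```python
from collections import Counter
from typing import Callable

def self_consistency(sample_answer: Callable[[], str], num_samples: int) -> str:
    """Sample several answers and return the most frequent one (majority vote)."""
    answers = [sample_answer() for _ in range(num_samples)]
    return Counter(answers).most_common(1)[0][0]

# Stand-in for a model: replays fixed final answers from five sampled
# reasoning chains (hypothetical values, not model output)
fake_samples = iter(["42", "41", "42", "24", "42"])

def sample_answer() -> str:
    return next(fake_samples)

voted = self_consistency(sample_answer, num_samples=5)
print(voted)  # 42
```

The idea is that different reasoning paths that reach the same answer reinforce each other, while isolated mistakes are outvoted.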

Compared with using only UL2R (U-PaLM) or only Flan (Flan-PaLM), combining UL2R and Flan (Flan-U-PaLM) leads to the best performance, measured as the normalized average over the four evaluation suites (MMLU, BBH, TyDiQA and MGSM).

In general, UL2R and Flan are two complementary methods for improving pre-trained language models. UL2R uses the same data to adapt the LM to a mixture-of-denoisers objective, while Flan leverages training data from over 1.8K NLP tasks to teach the model to follow instructions.

As language models get larger, techniques like UL2R and Flan, which improve general performance without requiring heavy computation, may become increasingly attractive.

The above is the detailed content of With only so much computing power, how to improve language model performance? Google has a new idea. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

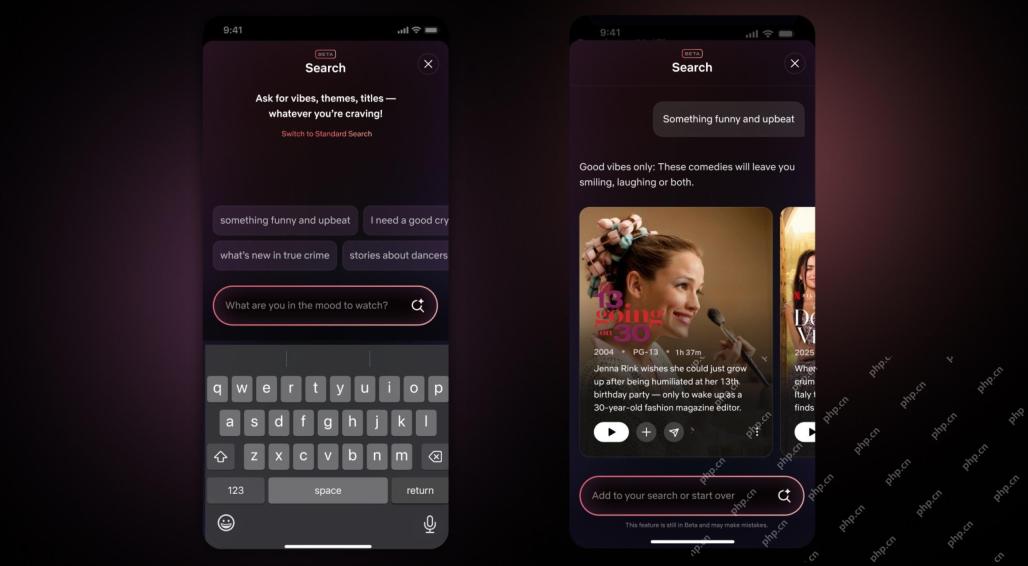

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Dreamweaver Mac version

Visual web development tools

Dreamweaver CS6

Visual web development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.