US media: Musk and others are right to call for a suspension of AI training and need to slow down for safety

According to news on March 30, Tesla CEO Elon Musk, Apple co-founder Steve Wozniak, and more than 1,000 others recently signed an open letter calling for a moratorium on training AI systems more powerful than GPT-4. Business Insider (BI), a mainstream American online media outlet, argues that AI development needs to slow down for the benefit of society as a whole.

In the open letter, Wozniak, Musk and others requested that as AI technology becomes increasingly powerful, safety guardrails be set up and the training of more advanced AI models be suspended. They believe that for powerful AI models like OpenAI's GPT-4, "they should only be developed when we are confident that their impact is positive and the risks are controllable."

Of course, this is not the first time people have called for safety guardrails for AI. However, as AI becomes more complex and advanced, calls for caution are rising.

James Grimmelmann, a professor of digital and information law at Cornell University, said: “Slowing down the development of new AI models is a very good idea, because if AI ends up being beneficial to us, then there is no harm in waiting a few months or years; we will get there anyway. And if it is detrimental, then we have also bought ourselves extra time to work out the best way to respond and to understand how to fight against it.”

The rise of ChatGPT highlights the potential dangers of moving too fast

Last November, when OpenAI’s chatbot ChatGPT was launched for public testing, it caused a huge sensation. Understandably, people began pushing ChatGPT's capabilities, and its destructive effect on society quickly became apparent. ChatGPT began passing medical professional exams, giving instructions on how to make bombs, and even created an alter ego for itself.

The more we use AI, especially so-called generative artificial intelligence (AIGC) tools like ChatGPT or the text-to-image tool Stable Diffusion, the more we see its shortcomings, its potential to create bias, and how powerless we humans appear to be in harnessing its power.

BI editor Hasan Chowdhury wrote that AI has the potential to “become a turbocharger, accelerating the spread of our mistakes.” Like social media, it taps into the best and worst of humanity. But unlike social media, AI will be more integrated into people's lives.

ChatGPT and other similar AI products already tend to distort information and make mistakes, something Wozniak has spoken about publicly. They are prone to so-called "hallucinations" (untruthful information), and even OpenAI CEO Sam Altman has admitted that the company's models can produce racist, sexist and biased answers. Stable Diffusion has also run into copyright issues and been accused of stealing inspiration from the work of digital artists.

As AI becomes integrated into more everyday technologies, we may introduce more misinformation into the world on a larger scale. Even seemingly benign tasks for an AI, such as helping plan a vacation, may not yield completely trustworthy results.

It’s difficult to develop AI technology responsibly when the free market demands rapid development

To be clear, AI is an incredibly transformative technology, especially AIGC tools like ChatGPT. There's nothing inherently wrong with developing machines to do most of the tedious work that people hate.

While the technology has created an existential crisis among the workforce, it has also been hailed as an equalizing tool for the tech industry. There is also no evidence that ChatGPT is preparing to lead a bot insurgency in the coming years.

Many AI companies have ethicists involved to develop this technology responsibly. But if the rush to release a product outweighs concern for its social impact, teams focused on creating AI safely won’t be able to get the job done in peace.

Speed seems to be a factor that cannot be ignored in this AI craze. OpenAI believes that if the company moves fast enough, it can fend off the competition and become a leader in the AIGC space. That prompted Microsoft, Google and just about every other company to follow suit.

Releasing powerful AI models to the public before they are ready does not make the technology better. The best use cases for AI have yet to be found because developers have to cut through the noise generated by the technology they create, and users are distracted by the noise.

Not everyone wants to slow down

The open letter from Musk and others has also been criticized by others, who believe that it misses the point.

Emily M. Bender, a professor at the University of Washington, said on Twitter that Musk and other technology leaders only focus on the power of AI in the hype cycle, rather than the actual damage it can cause.

Cornell University digital and information law professor James Grimmelmann added that the tech leaders who signed the open letter were "belatedly arriving" and were opening a Pandora's box that could bring trouble upon themselves. He said: "Now that they have signed this letter, they can't turn around and apply the same policy to other technologies such as self-driving cars."

Suspending development or imposing more regulations may also fail to achieve results. But now, the conversation seems to have turned. AI has been around for decades; maybe we can wait a few more years.

The above is the detailed content of US media: Musk and others are right to call for a suspension of AI training and need to slow down for safety. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

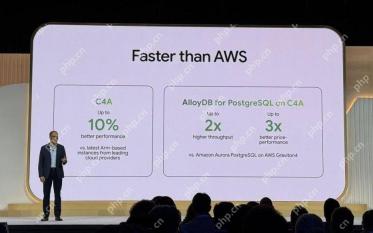

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Dreamweaver CS6

Visual web development tools