
Are AI tools becoming a hot commodity for cybercriminals? Study finds Russian hackers are bypassing OpenAI restrictions to access ChatGPT

- WBOY (reprinted)

- 2023-04-12 13:19:05

According to industry media reports, OpenAI has restricted access to ChatGPT, its powerful chatbot, in some countries and regions over concerns that it could be used for criminal activity. However, a study found that some Russian cybercriminals have been looking for new ways around these restrictions in order to use ChatGPT, including cases of bypassing IP address, payment card, and phone number checks.

Researchers found evidence on hacker forums that Russian hackers were trying to bypass restrictions to access ChatGPT

Since its launch in November 2022, ChatGPT has become an important workflow tool for developers, writers, and students. It has also become a powerful weapon for cybercriminals, with evidence that hackers use it to write malicious code and improve phishing emails.

Because ChatGPT can be put to malicious use, and hackers who use it can carry out attacks more quickly, OpenAI needs to limit how the tool is deployed. It uses geo-blocking to prevent users in Russia from accessing the system.

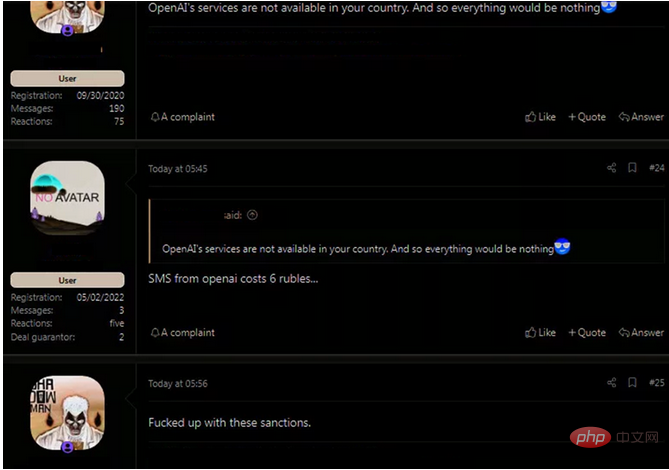

However, Check Point Software researchers found multiple instances of Russian hackers discussing on underground forums how to circumvent these restrictions. For example, one Russian hacker group asked on a forum how, if they could not access ChatGPT normally, they could use a stolen payment card to pay for an OpenAI account and reach the system through the API. ChatGPT itself is currently a free-to-use preview, while the API, which offers text and code generation, is paid for according to the tokens consumed during a session.

Sergey Shykevich, threat intelligence group manager at Check Point, said that bypassing OpenAI's restrictions is not particularly difficult. "We are now seeing some Russian hackers already discussing and examining how to bypass geofencing to use ChatGPT for their malicious purposes. We believe these hackers are most likely trying to test and adopt ChatGPT in their cybercriminal activities. Cybercriminals are increasingly interested in ChatGPT because the artificial intelligence technology behind it can make their cyberattacks more efficient."

These underground forums also offer Russian-language tutorials on semi-legitimate online SMS services and how to use them to register for ChatGPT, making it appear that the account is being used from a country where ChatGPT is not blocked.

From these forums, hackers learn that a virtual number costs as little as 2 cents and can then be used to receive verification codes from OpenAI. These temporary numbers can come from anywhere in the world, and new ones can be generated as needed.

Infostealers and the malicious use of ChatGPT

Earlier investigations found cybercriminals posting on these forums about how to use ChatGPT for illegal activities, including creating a very simple infostealer. As artificial intelligence tools become more widely used, such infostealers are likely to become more advanced.

This infostealer is one example of such "simple tools" being adopted. It appeared on a hacker forum in a post titled "ChatGPT - The Benefits of Malware". The post's author explains that hackers can use ChatGPT to recreate malware by feeding descriptions and reports into the tool, and then shares Python-based stealer code that searches for common file types, copies them to a random folder, and uploads them to a hardcoded FTP server.

Shykevich said: "Cybercriminals are finding ChatGPT attractive. In recent weeks, we have discovered evidence that hackers have begun using ChatGPT to write malicious code. ChatGPT has the potential to give hackers a good starting point and to speed up cyberattacks. Just as ChatGPT can be used to help developers write code, it can also be used for malicious purposes."

OpenAI said it has taken steps to limit misuse of ChatGPT, including restricting the types of requests it will fulfil, but some requests may slip through. There is also evidence that prompts are being used to "trick" ChatGPT into providing potentially harmful code examples under the guise of research or as fictional examples.

ChatGPT and potential misinformation

Meanwhile, OpenAI is working with Georgetown University's Center for Security and Emerging Technology and the Stanford Internet Observatory to study how large language models (such as GPT-3, the basis of ChatGPT) might be used to spread disinformation.

Such models could reduce the cost of running influence operations, putting them within reach of new kinds of cybercriminals, whereas previously only large actors could produce such content. Likewise, propagandists for hire who can automate text production may gain new competitive advantages.

Hackers' use of ChatGPT could also introduce new behaviors, increase the scale of cyberattacks, and allow more personalized and targeted content than manual writing. Finally, the researchers found that text generation tools could produce more impactful and persuasive messages than most human-written propaganda, whose authors may lack the necessary knowledge of their targets' language or culture.

The OpenAI researchers state: "Our bottom-line judgment is that language models will be useful for propagandists and may transform online influence operations. Even if the most advanced models are kept private or access to them is controlled through an application programming interface (API), propagandists may also turn to open-source alternatives."
