The past and present of ChatGPT: OpenAI's technology “stubbornness” and “big gamble”

- WBOY

- 2023-04-12 12:16:03

1. ChatGPT, artificial intelligence that is no longer “stupid”

ChatGPT is arguably the hottest topic in artificial intelligence since AlphaGo. Put simply, it is a chatbot that converses in natural language. You can ask it any question (it may answer incorrectly, but you can guide and correct it), and it will reply in fluent, well-formed natural language. Beyond that, it can answer coding questions, math questions, and more, and you can hold a good conversation with it on almost any topic.

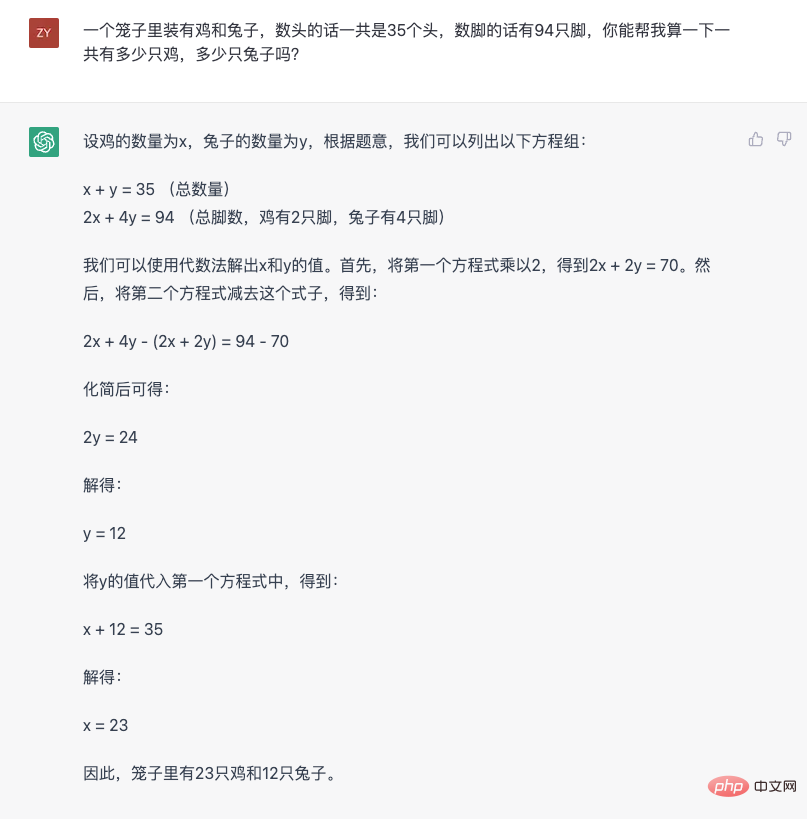

A classic chickens-and-rabbits problem gives an intuitive feel for ChatGPT's capabilities:

Several characteristics can be observed in this answer. First, it can understand natural language and convert it into a mathematical problem. Second, it breaks a fairly complex reasoning problem into steps and works toward the final answer one step at a time. The industry calls this ability "chain of thought." Next, let's change the question and see how it responds.

This picture shows that ChatGPT is aware of what it has said and can give its reasons for saying it. It also shows that ChatGPT does make mistakes (its first count of the ears was wrong; as a bit of trivia, chickens do have functional organs similar to "ears"), but with guidance it can give the correct answer and explain where it went wrong.
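The step-by-step decomposition noted above (turn the words into equations, then solve them one step at a time) can be mirrored in a few lines of Python. This is only a sketch: the numbers used (35 heads, 94 legs) are a hypothetical instance of the classic puzzle, not necessarily the one shown in the screenshot, and the function name is made up for illustration.

```python
def solve_chickens_and_rabbits(heads, legs):
    """Return (chickens, rabbits) for the given head and leg counts."""
    # Step 1: translate the words into equations.
    #   chickens + rabbits = heads
    #   2 * chickens + 4 * rabbits = legs
    # Step 2: substitute chickens = heads - rabbits into the second equation:
    #   2 * heads + 2 * rabbits = legs
    extra_legs = legs - 2 * heads
    if extra_legs < 0 or extra_legs % 2 != 0:
        raise ValueError("no whole-number solution")
    # Step 3: solve for each animal in turn.
    rabbits = extra_legs // 2
    chickens = heads - rabbits
    if chickens < 0:
        raise ValueError("no whole-number solution")
    return chickens, rabbits


# Hypothetical puzzle instance: 35 heads and 94 legs.
print(solve_chickens_and_rabbits(35, 94))  # (23, 12)
```

The comments play the role of the intermediate reasoning steps; ChatGPT produces the equivalent of those comments in natural language before stating the answer.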

If you were not told in advance that this is an artificial intelligence model, ChatGPT would feel like a real person with logical thinking and language skills. Its arrival made people feel, for the first time, that artificial intelligence could communicate normally with humans. It sometimes makes mistakes, but at least there are no language or logic barriers in the conversation: it can "understand" what you are talking about and respond according to human patterns of thought and norms of language. This very intelligent experience is why it broke out of the small circles of the industry and made such an impact on the public.

This question of experience is worth emphasizing again, because in the past, constrained by technical limitations, the industry tended to overlook it in order to get specific tasks done in specific scenarios. The emergence of ChatGPT means that artificial intelligence is no longer in its former "useful, but stupid" form.

To better understand where ChatGPT's sense of intelligence comes from, we have to start with the "stupid" artificial intelligence of the past. To be precise, ChatGPT still uses natural language processing (NLP) technology, but it breaks the original paradigm.

To see this, first look at the current mainstream practice. Human communication relies on language, and many people even believe that human thinking is based on language too. Understanding and using natural language has therefore always been an important topic in artificial intelligence. But language is too complex, so to let computers understand and use it, the process is usually split into many sub-problems. These are what the technical field calls "tasks." A few examples:

- The sentiment analysis task aims to understand the emotional tendency expressed in language;

- The syntactic analysis task aims to analyze the linguistic structure of a text;

- The entity recognition task aims to locate entity spans in a text, such as addresses and names;

- The entity relation task aims to extract the relationships between entities from a text;

There are many such tasks, each of which analyzes and processes natural language from one particular angle. This has many benefits. For example, with these splits you can examine the capabilities of a natural language processing system along different dimensions, and you can design a system or model specifically for one sub-task. From a technical perspective, splitting a complex task (understanding and using natural language) into many simple tasks (the various NLP tasks) is a typical way of solving complex problems, and it is the current mainstream practice. With hindsight, however, after the emergence of ChatGPT, this split may not be the most effective way to let computers understand and use natural language.

Excellent performance on a single task does not mean a system has mastered natural language. People's "sense of intelligence" about an AI system comes from its overall ability to use natural language, which ChatGPT shows clearly. OpenAI has not yet opened an API for ChatGPT, so the outside world cannot evaluate it on individual NLP tasks, but past external tests of its predecessors, such as GPT-3 and InstructGPT, have shown that for some specific tasks a small model fine-tuned on specialized data can indeed achieve better results (for a detailed analysis, see "In-depth Understanding of the Emergent Capabilities of Language Models"). Yet these small models that do better on a single task never broke into the mainstream. Ultimately this is because they have only one ability. One outstanding ability does not amount to understanding and using natural language, so such a model cannot do a job on its own in a real application. For this reason, a real application usually combines several hand-designed modules, each with a single capability. This artificial patchwork is one of the reasons past artificial intelligence systems felt unintelligent.
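A toy sketch may make the "patchwork" concrete. Everything below is hypothetical and deliberately crude: the keyword list, the order-number pattern and the reply templates are made up for illustration, and a real system would use trained models for each module. The point is only the shape of the design, in which separate single-task modules feed a hand-written rule that assembles the reply.

```python
import re

# Hypothetical keyword list standing in for a trained sentiment model.
NEGATIVE_WORDS = {"late", "broken", "refund"}


def classify_sentiment(text):
    """Single-task module 1: sentiment analysis (keyword lookup)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return "negative" if words & NEGATIVE_WORDS else "neutral"


def extract_order_ids(text):
    """Single-task module 2: entity recognition (order numbers like #1234)."""
    return re.findall(r"#(\d+)", text)


def reply(text):
    """Hand-written glue that stitches the module outputs into a response."""
    sentiment = classify_sentiment(text)
    order_ids = extract_order_ids(text)
    if sentiment == "negative" and order_ids:
        return f"Sorry about order {order_ids[0]}. We will look into it."
    if order_ids:
        return f"Order {order_ids[0]} is being processed."
    return "Sorry, I did not understand. Please provide an order number."


print(reply("My order #1234 arrived broken"))
print(reply("Can you write me a poem?"))
```

The second request falls outside what the modules were built for, so the system can only fall back to a canned reply, which is the kind of behavior that made such systems feel unintelligent.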

From the perspective of how humans understand and use natural language, this is easy to see. When ordinary people understand and use language, they do not split it into many different tasks in their minds, analyze each one, and then combine the results. When a person hears a sentence, he does not analyze its syntactic structure, entities and relations, emotional tendency and so on one by one, and then piece together the meaning. Human understanding of language is a holistic process. Moreover, a person's overall understanding of the sentence is expressed in full, in natural language, through the reply. This is unlike an AI system that splits off single tasks, outputs sentiment labels, entity fragments or other single-task results one by one, and then pieces a response together from them.

What the GPT series of models, represented by ChatGPT, does is genuinely close to the human way of understanding and using language: it takes natural language in directly and replies in natural language directly, while keeping the language fluent and logical. This is how people communicate, which is why everyone experiences it as "very smart." Many people may think: of course it would be great to do things the ChatGPT way, and the task splitting of the past was a last resort forced by technical limitations. From the perspective of applying the technology, such a roundabout approach was indeed necessary. It has been used for a long time and does solve many problems in practice. But if you look back at the development of the GPT series, you will find that OpenAI "bet" on another path, and the bet paid off.

2. OpenAI’s “Gamble”

The first generation of GPT, where everything begins

As early as 2018, OpenAI released the first version of the GPT model. From OpenAI's public paper (Improving Language Understanding by Generative Pre-Training), we learn that this model uses a 12-layer Transformer decoder structure and was trained on a language modeling task with approximately 5GB of unsupervised text data. Although the first-generation GPT already used generative pre-training (the origin of the name GPT, Generative Pre-Training), it followed the paradigm of unsupervised pre-training plus downstream task fine-tuning. This paradigm was not a new invention. It had long been widely used in CV (computer vision), and it was brought back into NLP that year thanks to the outstanding performance of the ELMo model.

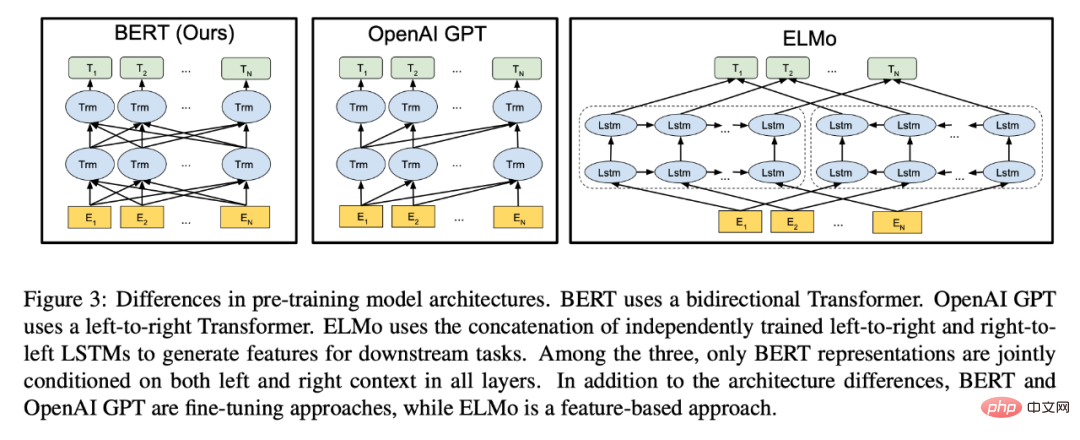

The GPT model did attract some attention in the industry that year, but it was not the star of the show. In the same year, Google's BERT model came out and drew almost all the attention with its excellent results (a scene a bit like today's ChatGPT; one can't help but sigh that the "rivalry" between Google and OpenAI really does come full circle).

The picture comes from the BERT paper. From the illustration, you can see that BERT was benchmarked against GPT, and that its bidirectional encoding capability is proudly noted.

Although BERT uses the same Transformer model structure as GPT, it is almost tailor-made for the paradigm of "unsupervised pre-training plus downstream task fine-tuning." Compared with GPT, the Masked Language Model used by BERT loses the ability to generate text directly, but it gains bidirectional encoding, which gives the model stronger text-encoding performance. This shows up directly as a substantial improvement on downstream tasks. GPT, in order to keep the ability to generate text, can only use one-way encoding.
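The difference between one-way and bidirectional encoding can be pictured as a visibility pattern: which positions each token is allowed to look at. The sketch below is a simplified illustration in plain Python, with a made-up helper name. It shows only the visibility pattern and leaves out everything else (for example, BERT additionally hides some input tokens during training and learns to recover them).

```python
def attention_mask(num_tokens, bidirectional):
    """Build a visibility matrix: mask[i][j] == 1 means token i may look at token j."""
    return [
        [1 if bidirectional or j <= i else 0 for j in range(num_tokens)]
        for i in range(num_tokens)
    ]


for name, flag in [("GPT-style (one-way)", False), ("BERT-style (two-way)", True)]:
    print(name)
    for row in attention_mask(4, flag):
        print(" ", row)
```

In the one-way case each row only has ones up to its own position, so a token never sees what comes after it. That restriction is what allows the model to generate text left to right, and it is also why its encoding of each token has less context than in the two-way case.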

From the perspective of that year, BERT was clearly the better model. Both BERT and GPT followed the "pre-training plus fine-tuning" paradigm, and the downstream tasks were still "classic" NLP task forms such as classification, matching and sequence labeling. BERT focuses on the quality of feature encoding, and each downstream task is fine-tuned with a loss function chosen to fit it. This is obviously more direct than GPT's "roundabout" way of completing these tasks through text generation.

After BERT came out, the paradigm of "unsupervised pre-training plus downstream task fine-tuning" established its dominance. Methods that followed BERT's line of thinking and asked "how to obtain better text feature encodings" emerged in large numbers, so that GPT, a model aimed at generative tasks, looked like an outlier. In hindsight, if OpenAI had "followed the trend" and abandoned generative pre-training, we might have had to wait longer to see models like ChatGPT.

The hope brought by GPT-2

Of course, we have now seen ChatGPT, so OpenAI did not give up on the generative pre-training route. In fact, the "reward" for persistence began to show in the second year, 2019. OpenAI released the GPT-2 model with a 48-layer Transformer structure. In the accompanying paper (Language Models are Unsupervised Multitask Learners), they found that with unsupervised data and generative training, GPT showed zero-shot multi-task capabilities. The remarkable thing is that these capabilities were not explicitly or deliberately added to the training data. To give an example in plain terms, one of the capabilities GPT-2 showed is translation. Models built specifically for translation normally need a large parallel corpus (paired data between two languages) for supervised training. GPT-2 used no such data. It was only trained generatively on a large corpus, and then it could "suddenly" translate. This discovery was fairly disruptive. It showed people three important phenomena:

- If you want the model to complete an NLP task, you may not need annotated data that matches the task. For example, GPT-2 used no annotated translation data during training, yet it can translate;

- If you want the model to complete an NLP task, you may not need a training objective that matches the task. For example, GPT-2 had no translation task or related loss function during training. It only performed the language modeling task;

- A model trained only on the language modeling task (i.e., a generative task) can also have multi-task capabilities. For example, GPT-2 demonstrated capabilities in translation, question answering, reading comprehension and more.

From today's perspective, the capabilities GPT-2 demonstrated were still rudimentary, and there was an obvious gap between its results and those of models fine-tuned on supervised data. But this did not stop OpenAI from having high expectations for its potential, so much so that in the last sentence of the paper's abstract they set out their hopes for the future of the GPT series:

“These findings suggest a promising path towards building language processing systems which learn to perform tasks from their naturally occurring demonstrations.”

Later events showed that they did keep moving along this promising path. When the first-generation GPT came out in 2018, generative pre-training was being outperformed in every respect by pre-trained models such as BERT that aimed to "extract features." With GPT-2, it was found that generative pre-training has a potential advantage that BERT-type models cannot replace: the multi-task capability that comes from the language modeling task, which requires no labeled data.

Of course, at that point the generative path still faced risks and challenges. GPT-2's performance on individual tasks was still worse than that of fine-tuned models, so although it could translate, summarize and so on, the results were too poor for practical use. If you wanted a usable translation model at the time, the best option was still to train a dedicated translation model on annotated data.

GPT-3, the beginning of the data flywheel

Looking back from the ChatGPT era, the findings in GPT-2 probably did strengthen OpenAI's confidence in the GPT series and led them to increase their research and development investment. In 2020 they released GPT-3 with 175 billion parameters, a model that is strikingly large even by today's standards. OpenAI did not disclose the cost of training this model, but outside estimates at the time put it at about $12 million. Released alongside it was a paper of more than 60 pages (Language Models are Few-Shot Learners), which details the new capabilities of this behemoth. The most important discovery is the one stated in the paper's title: the language model is capable of few-shot learning.

Few-shot learning is a technical term in machine learning, but the concept is very simple: "humans can learn a new language task from a small number of examples." Imagine a Chinese class on how to turn a "把" (ba) sentence into a "被" (bei) sentence (roughly, "the rain soaked the clothes" becomes "the clothes were soaked by the rain"). The teacher gives a few examples, and the students master the skill.

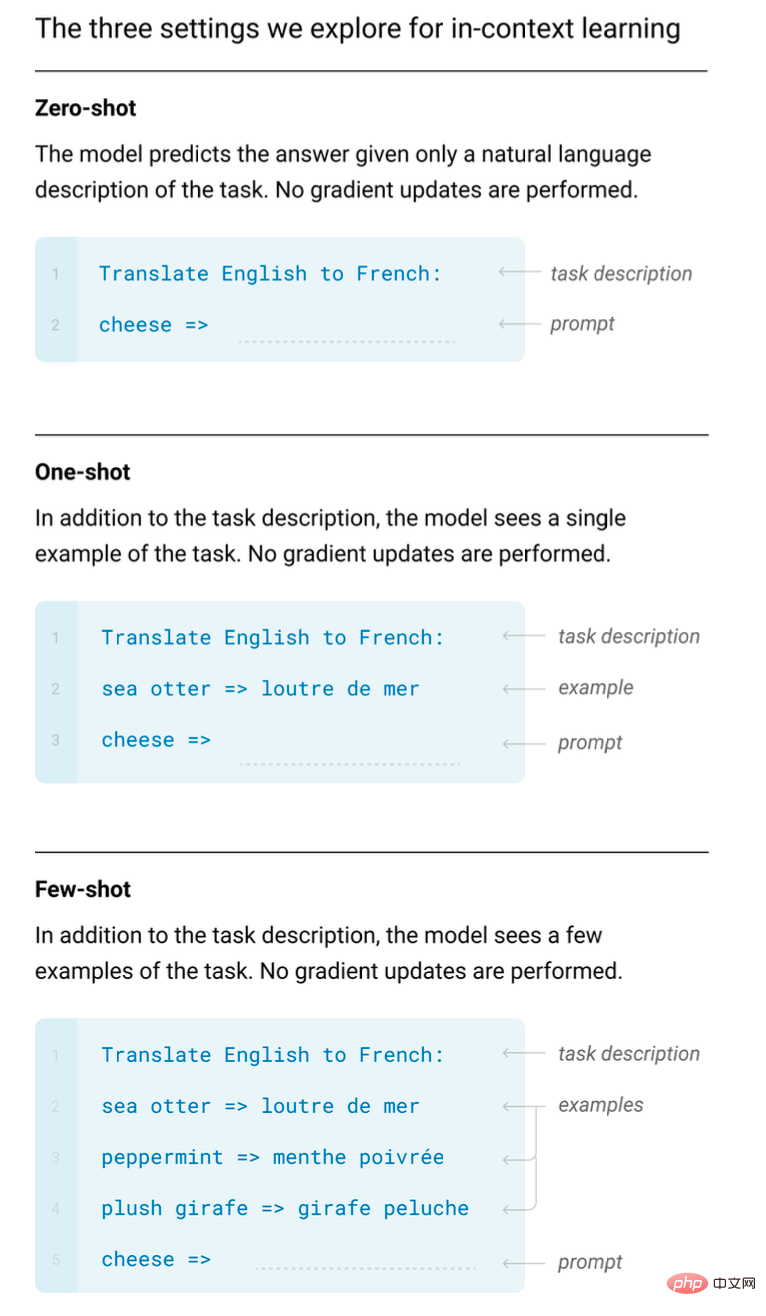

A deep learning model usually has to learn (train on) thousands of examples to master a new ability, but people found that GPT-3 has an ability similar to that of humans. The key point is that you only need to show it a few examples, and it will "imitate" the task the examples illustrate, with no additional training (that is, no gradient backpropagation or parameter updates as in conventional training). Later research showed that this ability appears only in very large models. The industry calls it "in-context learning."

The in-context learning ability for English-to-French translation demonstrated in the GPT-3 paper.

Few-shot learning ability in itself is not a very surprising discovery. The industry had been studying few-shot learning all along, and many models specialized for it perform very well. What was unexpected was the few-shot ability GPT-3 showed through "in-context learning." The reason is the same as for the multi-task ability shown by GPT-2:

- GPT-3 has no special design in its training data or training method aimed at few-shot ability. It is still just a generative model trained on the language modeling task;

- GPT-3's few-shot ability is shown through "in-context learning." In other words, to give it a new ability you do not need to retrain it. You only need to show it a few demonstration examples.
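To make "showing it a few demonstration examples" concrete, the sketch below assembles the kind of prompt a text completion model would receive. It is a minimal illustration: the helper name, the `=>` separator and the word pairs are assumptions for this example, loosely modeled on the English-to-French figure in the GPT-3 paper, and no model is actually called.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Join a task description, a few demonstrations, and the new input."""
    lines = [instruction]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # The last line is left unfinished; the model is expected to continue it.
    lines.append(f"{query} =>")
    return "\n".join(lines)


# Illustrative demonstration pairs (assumed for this sketch).
demo = [("sea otter", "loutre de mer"), ("cheese", "fromage")]
prompt = build_few_shot_prompt("Translate English to French:", demo, "peppermint")
print(prompt)
```

Nothing in the model changes between tasks. Swapping the demonstrations for, say, question and answer pairs turns the same frozen model to a different task, and passing an empty `examples` list yields a zero-shot prompt.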

Beyond this ability, GPT-3 also shows excellent text generation. Compared with GPT-2, the content it generates is more fluent, and it can generate very long text. With all these capabilities in one model, GPT-3 became the focus of attention at the time. It also became the model with which OpenAI officially began offering services to the outside world.

Once the model service opened, more and more people began trying the model. Opening it to the public also allowed OpenAI to collect more diverse data (user input may be used for model training, as written in the terms of use), and this data played an important role in later model iterations. From then on, the data flywheel of the GPT series was turning: the more high-quality user data, the better the next iteration of the model.

Unlike ChatGPT, GPT-3 is not a conversational model but a text continuation model (that is, it continues writing from the text you enter), so it does not have the multi-turn conversation ability that ChatGPT has today. But it could already do a lot, such as writing stories and auto-completing emails. At the same time, people gradually discovered problems. For example, it outputs content that is inconsistent with the facts, and it outputs harmful remarks. These are the most prominent drawbacks of this kind of text generation model. Despite many iterations, ChatGPT still faces similar problems today.

Codex, letting the computer write code itself

While studying GPT-3, OpenAI made another unexpected discovery: it could generate very simple code from comments. So in 2021 they invested in dedicated research on code generation and released the Codex model. It can be seen as a GPT model specialized for code, able to generate fairly complex code from natural language input.

From an external perspective, we cannot tell whether the research on code generation and the development of the GPT series models are being carried out at the same time. But at that time, it was indeed more meaningful from a practical perspective to give the model the ability to generate code. After all, GPT-3 did not yet have the powerful capabilities of ChatGPT today. On the other hand, letting the model generate code also avoids the risk of it generating harmful text content.

The Codex paper makes several key points. First, taking a GPT model pre-trained on text and continuing to train it on dedicated code data (open-source code from GitHub, 159 GB in total) does significantly improve the model's ability to understand and output code. Second, the paper uses a "small" model with 12 billion parameters. This indirectly shows that besides the 175-billion-parameter GPT-3 model behind the public interface, OpenAI has other model versions of different sizes internally.

The original motive for adding code training so that the model could understand and generate code may simply have been to give GPT one more application scenario. It does not seem closely related to the GPT series' ability to understand and use natural language. But according to later research (for a detailed analysis, see the article "Dismantling and Tracing the Origins of GPT-3.5 Capabilities"), the added training on code data is likely what triggered the later GPT models' ability to do complex reasoning and chain-of-thought in natural language.

Perhaps OpenAI did not expect this result when they started on Codex. But just as sticking with the text generation task had "unlocked" multi-task ability in GPT-2 and in-context learning in GPT-3, introducing code data once again brought them unexpected gains. It may look a little accidental, but a forward-looking understanding of the technical route, together with persistence and sustained investment, was clearly a crucial factor.

InstructGPT, let GPT speak properly

As mentioned earlier, although GPT-3 already had strong capabilities, many problems were discovered after it went online and more and more people used it. The most serious were "talking nonsense with a straight face" and "outputting harmful content." Although OpenAI seemed to focus for a while in 2021 on getting models to understand and generate code, they were presumably trying to solve these problems with GPT-3 all along.

In early 2022, OpenAI published the InstructGPT paper (Training language models to follow instructions with human feedback), from which we can get a glimpse of how to solve these problems. The core idea of the paper is to allow the model to accept human teaching (feedback), which is clearly stated in the title.

GPT-3's problems of "talking nonsense with a straight face" and "outputting harmful content" come from its training data. A behemoth like GPT-3 needs huge amounts of data. Its data sources are listed in the GPT-3 paper and fall roughly into three categories: web content, encyclopedia content, and books. Web content is very large in volume, but it is also very messy and of uneven quality, and it naturally contains a lot of untrue and harmful content. GPT-3 trained on this data and naturally learned these things.

But as a product offered to the outside world, GPT-3 should be more careful in its answers. One difficulty in solving this problem is defining how the model should speak. The output of a generative model is natural language itself, not something with a clear, objective right or wrong such as a classification label or an entity name. Without a clear right or wrong, it is impossible to design a training task directly for the goal, as is done when training classic NLP models.

The solution InstructGPT gives is very direct. Since many different factors go into judging a "good answer," and these factors are intertwined, let people teach the model how to write answers. Humans are better at problems that have "clear requirements but fuzzy boundaries," so let real people write some "excellent examples" and let the model learn from them. This is the general idea InstructGPT proposed.

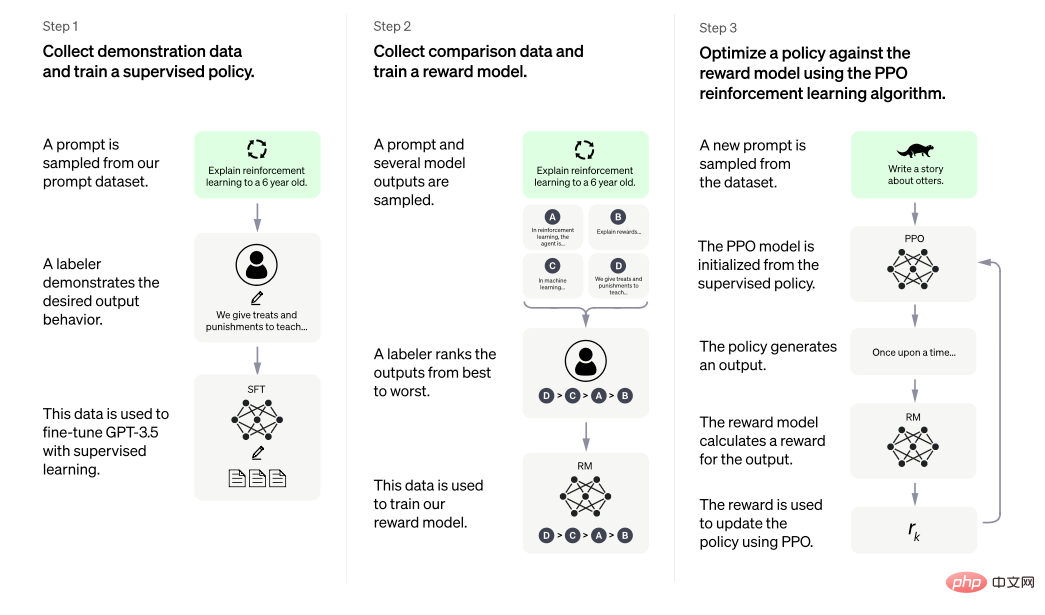

Specifically, InstructGPT proposes a two-stage path for GPT to learn from the "excellent examples" given by humans. The first stage is supervised learning and the second is reinforcement learning. In the first stage (the leftmost Step 1 in the figure), real people write truthful, harmless and helpful answers to a variety of prompts (roughly, a prompt is the text we type into the dialog box when using ChatGPT; the industry also calls it an instruction). In practice, to ensure the quality of this content, the annotators who write the answers are given guidelines to follow. The pre-trained GPT model then continues training on this human-written data. This stage can be seen as a kind of "discipline" for the model. To use a loose analogy, it is like a Chinese teacher asking you to write out excellent essays from memory.

The picture comes from the InstructGPT paper, which proposes making the model's output well-behaved through supervised instruction fine-tuning and reinforcement learning from human feedback.

The second stage is reinforcement learning, which technically has two steps. The first step (Step 2 in the middle of the figure) is to have the "disciplined" model generate several different answers to various prompts and have humans rank these answers from best to worst. This labeled data is then used to train a scoring model that can automatically rank and score more data. The second step (Step 3 on the right of the figure) uses this scoring model as the environment feedback in reinforcement learning and trains the already "disciplined" GPT model with policy gradients (to be precise, the PPO algorithm). The whole second stage can be seen as a "strengthening" of the model. To use a loose analogy, it is like a Chinese teacher grading your essays and asking you to work out from the scores what is good and what is not, and then keep improving.
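How human rankings become a training signal for the scoring model can be sketched with a pairwise comparison loss, the general form the InstructGPT paper describes for its reward model: for every pair of answers, the score of the answer humans ranked higher should exceed the score of the one ranked lower. The code below is only a minimal illustration. The scores are made-up numbers standing in for the output of a neural scoring model, and the function name is hypothetical.

```python
import math
from itertools import combinations


def pairwise_ranking_loss(scores_best_to_worst):
    """Average of -log(sigmoid(better - worse)) over all answer pairs.

    The input lists the scoring model's outputs for several answers to one
    prompt, ordered by the human ranking (best answer first).
    """
    pairs = list(combinations(scores_best_to_worst, 2))
    total = 0.0
    for better, worse in pairs:
        margin = better - worse
        # log(1 + exp(-margin)) is the same quantity as -log(sigmoid(margin)).
        total += math.log(1.0 + math.exp(-margin))
    return total / len(pairs)


# Made-up scores: the first list agrees with the human ranking, the second reverses it.
agree = pairwise_ranking_loss([2.0, 0.5, -1.0])
disagree = pairwise_ranking_loss([-1.0, 0.5, 2.0])
print(agree < disagree)  # True
```

Minimizing this loss pushes the scoring model to reproduce human preferences. The PPO step that then uses those scores to update the GPT model is not shown here.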

So, to put it very loosely but in a way a general reader can follow: InstructGPT first has an "unruly" GPT learn to "speak properly" by "writing out excellent human essays from memory," and then "scores what it writes on its own, so that it can reflect on the scores and keep improving." Technically, of course, more is involved. Data questions such as the exact guidelines for and number of "excellent essays," and algorithmic questions such as the choice of scoring model and the parameter settings in reinforcement learning, all affect the final result. But the final results show that this method is very effective. The paper also points out that a small model with 1.3 billion parameters trained in this way can outperform a larger model that has not been trained in this way.

A few other points in the paper are worth mentioning. The first concerns prompts. The prompts used in InstructGPT training come from two sources: some were written by dedicated AI trainers, and the rest were written by users of OpenAI's online model service. This is where the data flywheel shows its value. Either way, these prompts were written by real people. The paper does not analyze in detail their coverage, distribution or style of questioning, but it is reasonable to guess that they are fairly diverse and of high quality. In fact, the paper compares a model trained on these human-written prompts with models trained on prompts built from open-source NLP task data sets (such as the T0 and FLAN data sets). The conclusion is that the answers from the model trained on human-written prompts are more acceptable to reviewers.

The second point concerns how well the trained model generalizes to new prompts. If the trained model could not generalize across prompts, ChatGPT's current ability to answer almost any question would be unlikely. However much data there is in the fine-tuning stage, it cannot cover everything people might type. The InstructGPT paper points out that its method does produce generalization across prompts.

InstructGPT gets this much space here because, according to the official ChatGPT page, the methods in InstructGPT are exactly the methods used to train ChatGPT. The only difference is that ChatGPT uses data organized as conversations.

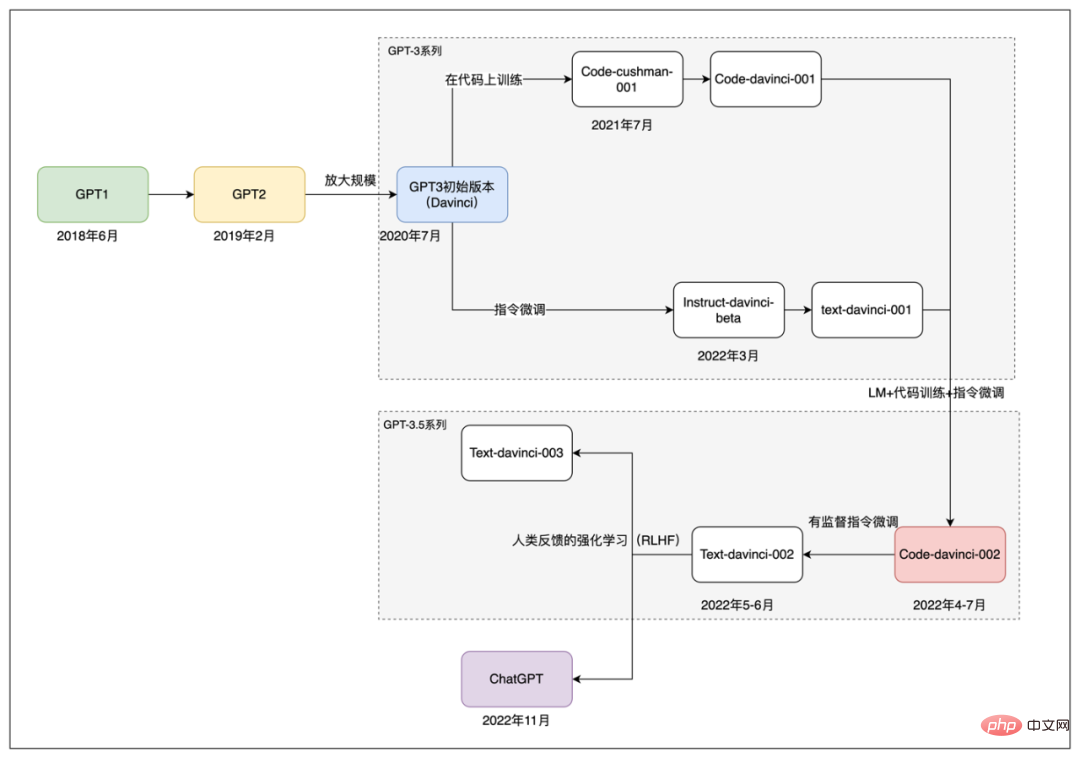

GPT-3.5 era and the birth of ChatGPT

After that, OpenAI released several models known as the GPT-3.5 series. No papers were published for these models, but according to outside analysis, the GPT-3.5 series was developed by combining the technology, data and experience OpenAI accumulated in the GPT-3 era. Since there is no detailed official public information to refer to, the outside world mainly infers the details of these models from hands-on use, related technical papers, and the descriptions in OpenAI's API documentation.

According to this analysis, the GPT-3.5 series may not have been fine-tuned from GPT-3. Instead, a base model may have been trained from scratch on a mix of code and natural language data. This model, which may be larger than GPT-3's 175 billion parameters, is named code-davinci-002 in OpenAI's API. A series of later models, including ChatGPT, was then derived from this base model through instruction fine-tuning and human feedback.

The development path of GPT series models.

Briefly, starting from the code-davinci-002 model, text-davinci-002 was obtained through supervised instruction fine-tuning. The later text-davinci-003 and ChatGPT were also obtained from a GPT-3.5 series model, through instruction fine-tuning and reinforcement learning from human feedback. Both were released in November 2022. The difference is that text-davinci-003, like GPT-3, is a text completion model, whereas ChatGPT, according to its official introduction, was trained by converting past data into conversational form and adding new conversational data.

So far, we have roughly reviewed the development and iteration of the OpenAI GPT series, from the first-generation GPT in 2018 to today's ChatGPT. Throughout, OpenAI has stayed "stubborn" about the technical path of generative pre-trained models, while also absorbing new methods from the evolving field of NLP, from the original Transformer model structure to later techniques such as instruction fine-tuning. Together, these factors produced the success of ChatGPT today. With this understanding of the GPT series, we can go back and look at today's ChatGPT.

3. Take a closer look at ChatGPT

In the first chapter, we explained that the main reason ChatGPT broke into the mainstream is that it responds to human natural-language input with fluent, logical natural language, giving the people who talk to it a strong sense of intelligence. In the second chapter, we traced ChatGPT's path to success by reviewing the development of the GPT series. This chapter tries to go a little deeper into the technology, in a way that stays as accessible as possible to outsiders, and to explore why some other current large text-generation models fail to achieve the same effect. The main references for this part are the two articles "In-depth Understanding of the Emergent Capabilities of Language Models" and "Dismantling and Tracing the Origins of GPT-3.5 Capabilities," along with some related papers. Readers who want more detail are encouraged to read the originals.

Although Chapter 1 pointed out that the amazing effect of ChatGPT is the combined result of many different NLP tasks, the technology behind it is easier to analyze if its capabilities are broken down. Overall, the capabilities embodied by ChatGPT can be roughly divided into the following dimensions:

- Text generation capabilities: All of ChatGPT's output is text generated on the fly, so the ability to generate text is its most basic requirement.

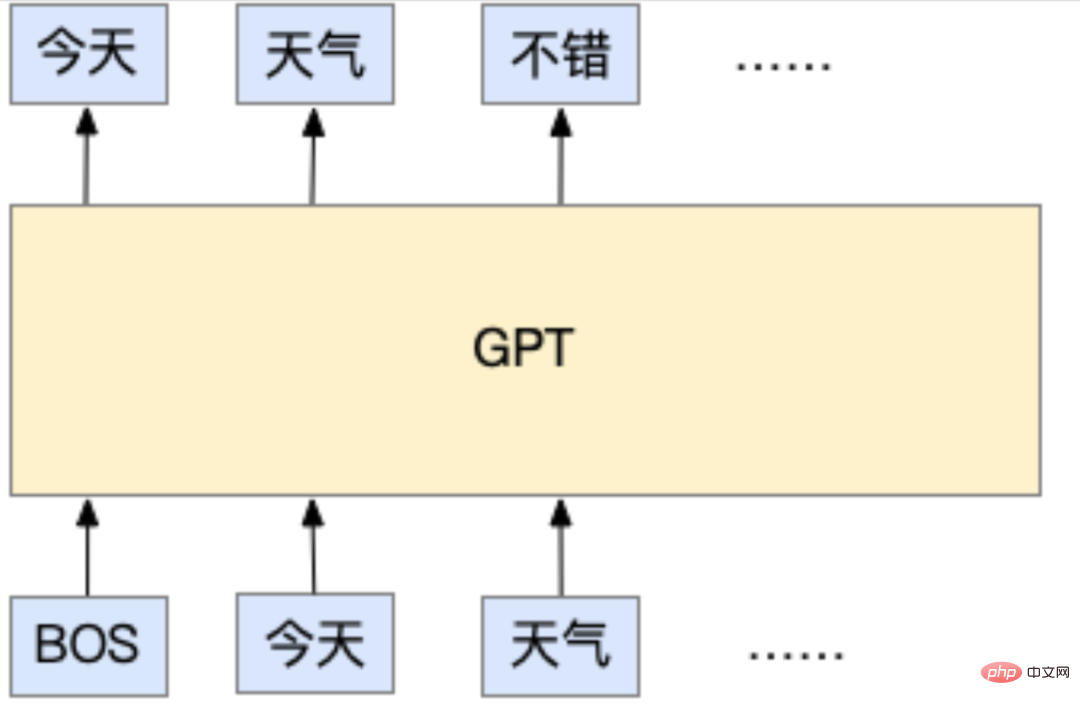

This capability comes from its training method. ChatGPT is pre-trained on a standard autoregressive language modeling task, which is the basis of all OpenAI GPT series models. Put simply, an autoregressive language model predicts what the next token should be based on the text that has already been input. A token is the smallest unit of text the model works with. It can be a character (common in Chinese), a word (English words are naturally separated by spaces, so words are often used), or even a letter. Current methods usually use subwords, units between letters and words whose main purpose is to reduce the vocabulary size. Whichever unit is used, the basic idea of the autoregressive language modeling task is to predict the next piece of text from the text already input, as in the example below:

In this example, BOS marks the beginning of the input, and each token is a word. Given the two input words "today" and "weather", the GPT model predicts that the next output will be "good".
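To make the training task concrete, here is a minimal sketch of how one tokenized sentence can be turned into (context, next token) pairs. The `<BOS>` spelling and the function name `next_token_pairs` are assumptions made for illustration. Real GPT models work on subword IDs rather than whole words.

```python
# Toy illustration: turn one tokenized sentence into (context, next-token) pairs.
BOS = "<BOS>"  # hypothetical marker for the beginning of the input


def next_token_pairs(tokens):
    """Return every (context, target) pair an autoregressive model would learn from."""
    sequence = [BOS] + list(tokens)
    # At position i, the model sees sequence[:i] and must predict sequence[i].
    return [(sequence[:i], sequence[i]) for i in range(1, len(sequence))]


pairs = next_token_pairs(["today", "weather", "good"])
for context, target in pairs:
    print(context, "->", target)
```

The last pair corresponds to the example above: given the context "today", "weather", the target is "good". Every position in a text yields one such pair, which is why ordinary text provides training signal without any extra annotation.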

For training, a large amount of text data is prepared, such as web articles and books of all kinds. Any normal text content can be used. Notably, this kind of data requires no additional manual annotation, because it was written by people in the first place. What the model has to learn from this human-written text is the answer to the question "given the preceding text, what should come next?" This is what the industry calls "unsupervised training". Strictly speaking, the model is not really unsupervised (otherwise, what would it learn?); its data simply requires no additional manual annotation. Precisely because the task needs no extra annotation, a large amount of data can be obtained "for free". Thanks to the spread of the Internet, a huge amount of text written by real people can be "easily" collected for training. This is one of the hallmarks of the GPT series: massive amounts of data are used to train large models.

So how does ChatGPT work when we use it? In fact, it works the same way it was trained. The model predicts the next token based on the content we enter in the dialog box. That token is then appended to the previous content to form a new text, which is fed back to the model to predict the following token, and so on, until a stopping condition is met. This stopping condition can be designed in many ways: for example, the output reaches a specific length, or the model predicts a special token that signals the end. It is also worth mentioning that predicting the next token is actually a sampling process. The model outputs a probability for every possible token and then samples one token from this probability distribution. This is why, when using ChatGPT, the output for the same input can differ from one run to the next: different tokens are sampled behind the scenes.
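The loop described above can be sketched in a few lines. Everything here is a toy assumption: `TOY_MODEL` is a hand-written lookup table that only looks at the last token, whereas a real model computes the distribution from the whole context, and `<EOS>` is a hypothetical stop token. The sketch is only meant to show the two stopping conditions and the sampling step.

```python
import random

EOS = "<EOS>"  # hypothetical special token that signals "stop"

# Hypothetical toy "model": maps the last token to a probability distribution
# over possible next tokens.
TOY_MODEL = {
    "<BOS>": {"today": 1.0},
    "today": {"weather": 0.7, "is": 0.3},
    "weather": {"good": 0.6, "bad": 0.4},
    "is": {"sunny": 1.0},
    "good": {EOS: 1.0},
    "bad": {EOS: 1.0},
    "sunny": {EOS: 1.0},
}


def generate(prompt, max_new_tokens=10, rng=None):
    """Repeatedly sample a next token and append it to the context."""
    rng = rng or random.Random()
    tokens = list(prompt)
    for _ in range(max_new_tokens):      # stopping condition 1: length limit
        dist = TOY_MODEL[tokens[-1]]
        candidates = list(dist)
        weights = [dist[t] for t in candidates]
        next_token = rng.choices(candidates, weights=weights, k=1)[0]
        if next_token == EOS:            # stopping condition 2: stop token
            break
        tokens.append(next_token)        # splice the token onto the context
    return tokens


for _ in range(3):
    print(generate(["<BOS>"]))
```

Because the next token is sampled rather than always taken as the most probable one, repeated calls with the same prompt can produce different continuations.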

With this in mind, go back and think about what the model is actually learning. Is it learning how to answer questions? Is it learning to understand the information, logic, and emotion contained in natural language? Or is it learning a huge amount of knowledge? Judging from the design of the training task, none of these is an explicit goal. The model only learns from massive text data "what a human would write next, given the input text". Yet by the time this kind of model "evolves" into ChatGPT, it has mastered rich knowledge, complex logical reasoning, and more, and seems to have acquired almost all the abilities a human needs to use language. This is quite remarkable, and its "evolution" process will be introduced in more depth in the next chapter.

- Rich knowledge reserve: ChatGPT can correctly answer a lot of questions, including history, literature, mathematics, physics, programming, etc. Because the current version of ChatGPT does not utilize external knowledge, the content of this knowledge is "stored" within the model.

ChatGPT's rich knowledge comes from its training data and from being large enough to absorb that content. Although the details of ChatGPT's training data have not been officially disclosed, the paper on its predecessor GPT-3 suggests that the data falls into three broad categories: web content, book content, and encyclopedia content. These sources naturally contain a great deal of knowledge. That is obvious for encyclopedias and books, but web content also includes news, comments, and opinions, as well as many specialized Q&A sites. These are ChatGPT's knowledge sources. The official introduction notes that ChatGPT cannot answer questions about events after 2021, so a reasonable guess is that the training data was collected up to 2021.

But the amount of data is only one aspect. For the model to "master" this data, the model itself cannot be small. Take GPT-3 as an example: it has 175 billion parameters. Roughly speaking, the content of the data and the model's various capabilities are fixed in the trained model as the specific values of these parameters. Intuitively, a model with only one parameter could not do anything. For a more rigorous analysis and comparison, see the evaluation in the paper "Holistic Evaluation of Language Models". Its directional conclusion is that the larger the model, the better it performs on tasks that require knowledge.

Paper address: https://arxiv.org/pdf/2211.09110.pdf

- Logical reasoning and chain-of-thought capabilities: As the chicken-and-rabbit problem in Chapter 1 shows, ChatGPT has strong logical reasoning capabilities. It can break complex content down into multiple small steps, reason step by step, and arrive at the final answer. This ability is called chain of thought.

We know from the previous introduction that the model has no design specific to logical reasoning or chain of thought during training. The current mainstream view is that these abilities are likely related to two factors: the size of the model, and whether the model has been trained on code data.

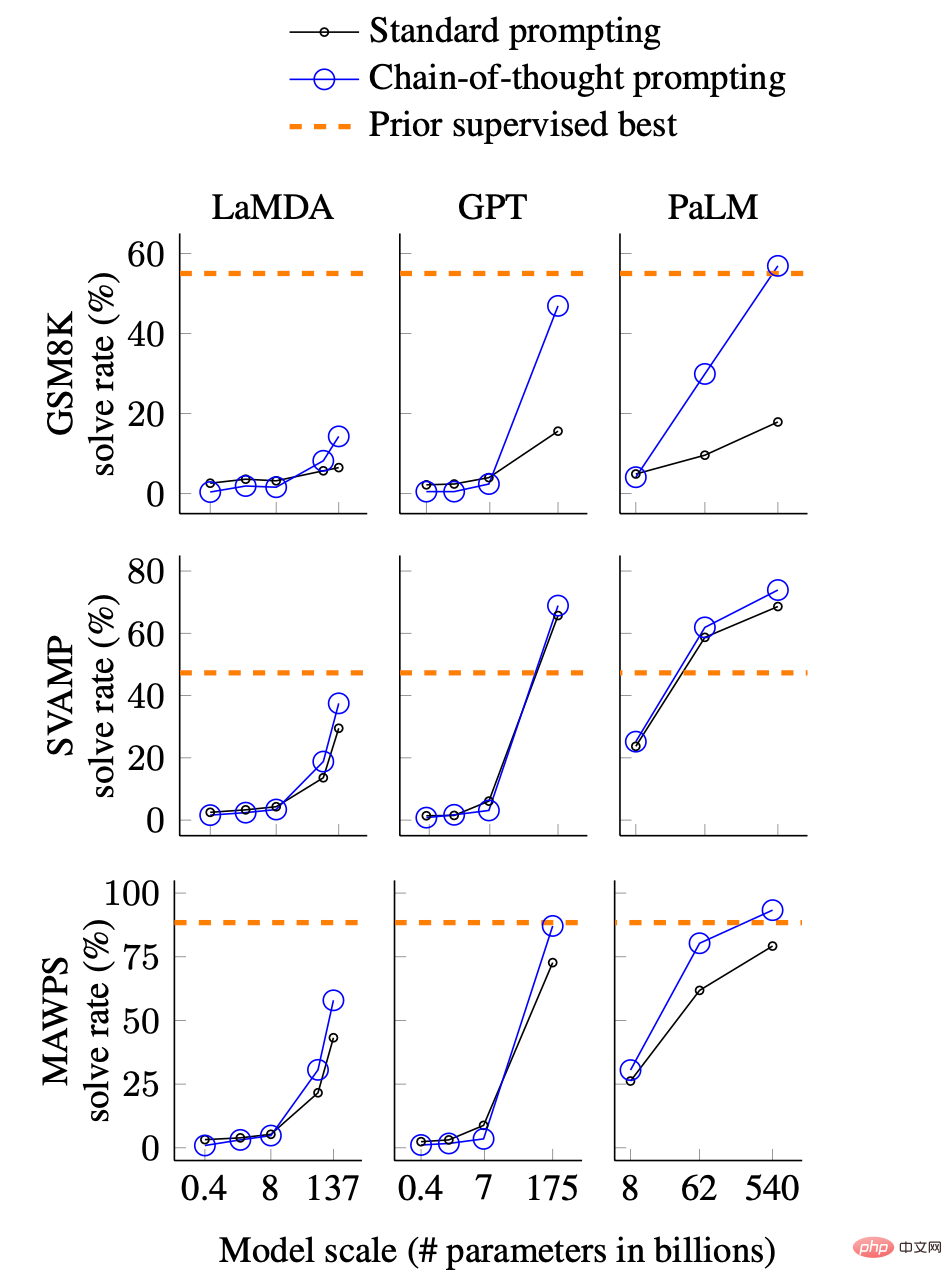

The relationship between model size and reasoning and chain-of-thought capabilities is discussed in "In-depth Understanding of the Emergent Capabilities of Language Models". The accompanying figure shows how chain-of-thought capability varies with model size.

Comparison of chain-of-thought performance across different models and model sizes (figure taken from the paper). GSM8K, SVAMP, and MAWPS are three math word problem datasets that require logical reasoning; LaMDA, GPT, and PaLM are three different models.

Briefly, the chart shows the correct answer rates of three different models on three mathematical word problem data sets. What deserves attention are the following aspects:

- Chain-of-thought prompting (blue solid line) shows a sudden jump in performance once the model is large enough;

- Chain-of-thought prompting outperforms standard prompting (black solid line) once the model is large enough;

- Chain-of-thought prompting can approach or even exceed the supervised method (orange dotted line) once the model is large enough.

In layman's terms, once the model is large enough, chain-of-thought ability appears suddenly, matching or even surpassing models trained with supervision specifically on reasoning datasets. This figure may partly explain the excellent reasoning and chain-of-thought capabilities we now see in ChatGPT.
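To illustrate the difference between the two prompting styles compared above, here is a small sketch that builds a standard prompt and a chain-of-thought prompt for a chicken-and-rabbit question. The exemplar wording, the `Q:`/`A:` layout, and the function names are assumptions made for illustration, not the exact prompts used in the paper. The small solver is included only to check that the worked exemplar is arithmetically correct.

```python
def solve_chicken_rabbit(heads, legs):
    """Ground-truth solver, used only to check the worked exemplar."""
    rabbits = (legs - 2 * heads) // 2   # each rabbit adds 2 legs over a chicken
    chickens = heads - rabbits
    return chickens, rabbits


# Hypothetical exemplar shown to the model before the real question.
EXEMPLAR_Q = "A cage holds chickens and rabbits: 5 heads and 14 legs. How many of each?"
EXEMPLAR_ANSWER = "3 chickens and 2 rabbits."
EXEMPLAR_REASONING = (
    "If all 5 animals were chickens there would be 10 legs. "
    "There are 4 extra legs and each rabbit adds 2, so there are 2 rabbits. "
    "That leaves 3 chickens. The answer is 3 chickens and 2 rabbits."
)


def build_prompt(question, chain_of_thought):
    """Standard prompting shows only the answer; chain of thought shows the steps."""
    demo = EXEMPLAR_REASONING if chain_of_thought else EXEMPLAR_ANSWER
    return f"Q: {EXEMPLAR_Q}\nA: {demo}\n\nQ: {question}\nA:"


question = "A cage holds chickens and rabbits: 10 heads and 28 legs. How many of each?"
print(build_prompt(question, chain_of_thought=False))
print("-" * 40)
print(build_prompt(question, chain_of_thought=True))
```

The only difference between the two prompts is whether the demonstration spells out its intermediate steps. According to the figure, that difference starts to pay off only once the model is large enough.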

The other factor behind reasoning and chain-of-thought capabilities is whether the model has been trained on code data. At present, this is only an inference. The analysis in "Dismantling and Tracing the Origins of GPT-3.5 Capabilities" shows that large models of similar size have very weak or almost no chain-of-thought and reasoning ability if they have not been trained on code. ChatGPT was indeed trained on code data, as its ability to understand and generate code also shows. As mentioned in the development review in Chapter 2, OpenAI released Codex, a model specifically for code, in 2021. Code data was probably added to GPT's training data from that point on.

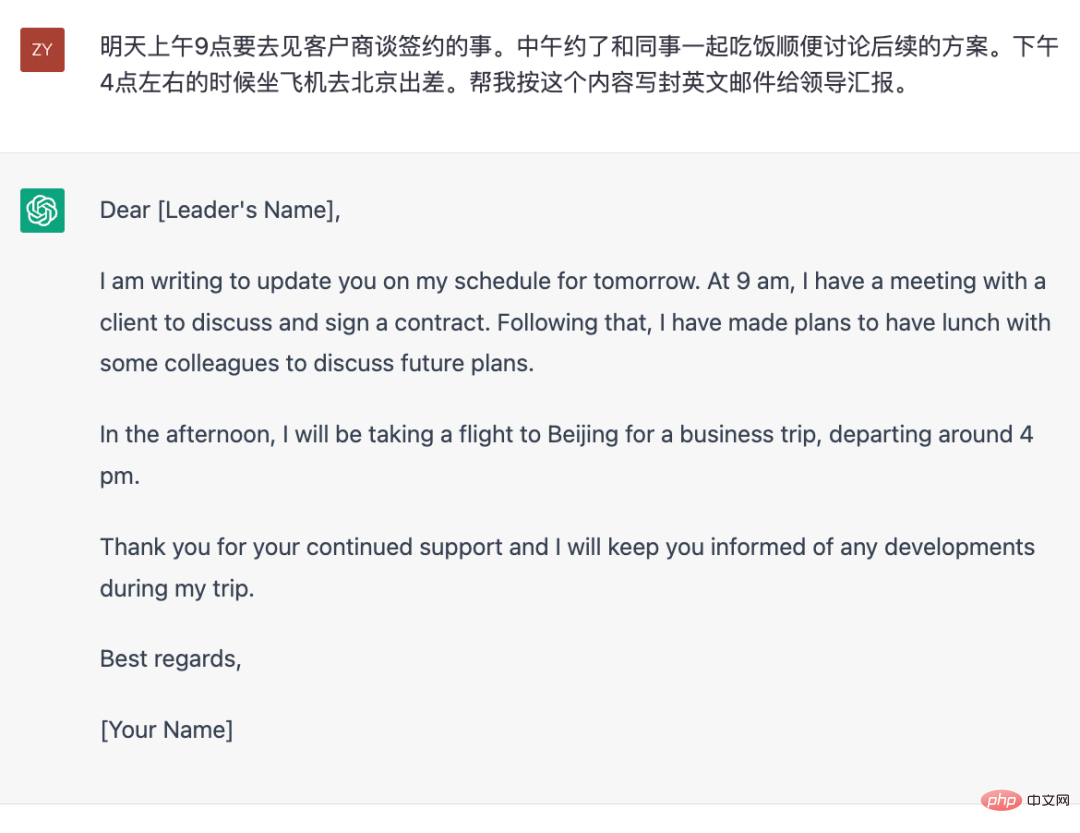

- The ability to respond to people's questions or instructions: Beyond the narrow "question and answer" form of interaction, ChatGPT can also respond to requests. For example, it performs very well on instruction-style requests such as "Write a letter for me." This ability makes it not only an "advanced search engine" that provides answers, but also a text-processing tool that can be operated through natural language.

Although the public currently focuses on ChatGPT as a search-engine-like tool, retrieving knowledge and giving answers is not its only ability. In fact, answering knowledge questions is not ChatGPT's strong point. After all, its training data ends in 2021, and even if it were retrained on newer data, it could not keep up with current events. To use it as a knowledge Q&A tool, it therefore still needs to be combined with external knowledge sources such as search engines, as Bing does now.

From another perspective, ChatGPT is like a "language-complete" text tool: it can carry out any task that can be expressed in text, according to the requirements you give it, as in the example below.

ChatGPT generates an English reporting email from the given plan content.

The "language completeness" mentioned here refers to the ability to use language. In the example above, no knowledge content is actually involved, because what needs to be written has already been provided. But writing this email does draw on the ability to use language: choosing words and sentences, switching languages, following email format, and so on.

Now let's go back and analyze how it acquired this ability to "complete tasks according to instructions". In academia, this kind of instruction is called a prompt. User input in a conversation and questions in Q&A are also prompts, so roughly speaking, everything entered in the chat box is a prompt. If you followed the introduction to language models earlier in this chapter, a more rigorous statement is that the preceding text fed to the model is the prompt.

ChatGPT's ability to reply according to the input instruction (prompt) comes from a training method called instruction fine-tuning. The principle is simple: the model is still "predicting the next token based on the input content". In the instruction fine-tuning stage, however, the input is a pre-written prompt, and the content to be generated after the prompt is an answer written in advance. The biggest difference between this stage and the unsupervised autoregressive language model training described earlier therefore lies in the data. Here the data, that is, the prompts and their corresponding responses, is all written by humans. In other words, this stage is supervised training on manually labeled data.
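As a rough sketch of what one instruction fine-tuning example might look like, the snippet below concatenates a human-written prompt with its human-written response and marks which positions are used as prediction targets. Scoring only the response tokens is a common choice and is an assumption here; it has not been confirmed for ChatGPT. The function names and the `<EOS>` token are hypothetical.

```python
def build_sft_example(prompt_tokens, response_tokens):
    """Concatenate prompt and response and mark the positions that are scored."""
    tokens = list(prompt_tokens) + list(response_tokens)
    # Assumption: only response tokens contribute to the loss (mask value 1).
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask


def training_targets(tokens, loss_mask):
    """List the (context, target) pairs for positions where the mask is 1."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens)) if loss_mask[i]]


prompt = ["Write", "a", "greeting", ":"]
response = ["Hello", "there", "<EOS>"]   # "<EOS>" is a hypothetical stop token

tokens, mask = build_sft_example(prompt, response)
for context, target in training_targets(tokens, mask):
    print(context, "->", target)
```

The task is still next-token prediction, exactly as in pre-training. What changes is that the text being predicted is an answer a human wrote for that specific prompt.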

Manually labeled data naturally raises the question of quantity, because every labeled example has a cost. When no labeling is needed (as in the first stage of training), a massive amount of text is available, but when labeling is required, how much data is needed? Having an annotator handwrite a prompt and then handwrite a truthful, detailed answer of several hundred words is very expensive. According to the paper "Training language models to follow instructions with human feedback", not much data is actually required (compared with the data used in the unsupervised stage). Although the exact amount used for ChatGPT has not been disclosed, it is certainly orders of magnitude smaller than the web, encyclopedia, and book datasets used for unsupervised training.

Paper address: https://arxiv.org/pdf/2203.02155.pdf

That only a relatively small amount of manually annotated prompt data is enough to make the model respond according to instructions implies a phenomenon known in academia as prompt generalization. People all over the world are asking ChatGPT questions all the time, and those questions are bound to be of every imaginable kind. These questions are all prompts, yet the prompts used for ChatGPT's instruction fine-tuning were certainly far fewer. This shows that after learning a certain number of prompts and corresponding answers, the model can "draw inferences" and answer prompts it has never seen. This is prompt generalization. The analysis in "Dismantling and Tracing the Origins of GPT-3.5 Capabilities" points out that this generalization ability is closely related to the amount and diversity of labeled data the model learns from during the instruction fine-tuning stage.

There is another conjecture behind the fact that a small amount of prompt data can fine-tune a model into something as capable as ChatGPT: the various abilities the model displays may already exist in the model after the unsupervised training stage. This is easy to understand. Compared with the unsupervised data, the number of manually labeled prompts is tiny, and it is hard to imagine that the model developed its abilities by learning from this labeled data alone. From this perspective, instruction fine-tuning is more about teaching the model to respond according to certain conventions, while its knowledge, logic, and other capabilities are already there.

- The ability to be "objective and impartial": If you ask ChatGPT harmful or controversial questions, its answers are very "cautious", much like those of a trained press spokesperson. Although still not good enough, this ability is the core factor that lets OpenAI dare to release it publicly as a product.

Making the model's output consistent with human values is something OpenAI has been working on all along. As early as GPT-3 in 2020, OpenAI found that a model trained on Internet data would generate discriminatory, dangerous, and controversial content. For a product that serves the public, such harmful content is clearly inappropriate. The current ChatGPT has improved significantly in this respect. The main method behind this "behavior change" also comes from the InstructGPT paper. More precisely, it is achieved through supervised instruction fine-tuning combined with reinforcement learning from human feedback, which was also introduced in Chapter 2.

The analysis above shows that, in terms of technical methods, everything behind ChatGPT is already known. So why is it currently the only model with such amazing performance? The NLP community has been analyzing the reasons ever since ChatGPT launched. Although many of the conclusions are speculative, they offer some guidance for building comparable home-grown models.

Model size

The premise for emergent capabilities is that the model reaches a certain scale. Although there is no specific threshold, current evidence suggests that relatively "advanced" capabilities such as chain of thought only perform well enough in models with tens of billions of parameters or more.

Data volume

Model size is not the only factor. In the paper "Training Compute-Optimal Large Language Models", DeepMind concludes that the amount of training data needs to grow along with the size of the model. More precisely, the number of tokens "seen" during training needs to increase with model size.

Paper address: https://arxiv.org/pdf/2203.15556.pdf
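As a back-of-the-envelope illustration of "tokens should grow with model size", the sketch below uses two widely quoted approximations, both of which are assumptions here rather than exact statements from the paper: about 20 training tokens per parameter, and about 6 floating-point operations per parameter per token. The function names are hypothetical.

```python
TOKENS_PER_PARAM = 20  # assumed rule of thumb, not an exact figure from the paper


def compute_optimal_tokens(n_params, tokens_per_param=TOKENS_PER_PARAM):
    """Rough number of training tokens for a model with n_params parameters."""
    return n_params * tokens_per_param


def training_flops(n_params, n_tokens):
    """Common approximation: about 6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


for n_params in (1_000_000_000, 10_000_000_000, 70_000_000_000, 175_000_000_000):
    n_tokens = compute_optimal_tokens(n_params)
    flops = training_flops(n_params, n_tokens)
    print(f"{n_params / 1e9:>5.0f}B params -> {n_tokens / 1e12:.2f}T tokens, ~{flops:.2e} FLOPs")
```

Under these assumptions, doubling the number of parameters doubles the tokens needed and roughly quadruples the training compute, which is why data volume becomes a bottleneck as models grow.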

Data quality

For unsupervised data, quantity is not a big obstacle, but quality is more easily overlooked. The GPT-3 paper devotes a section to data processing: to clean GPT-3's training data, OpenAI trained a dedicated filtering model to extract higher-quality data from massive web data. In comparison, open-source models of comparable size to GPT-3, such as Meta's OPT and BigScience's BLOOM, do not seem to have undergone this cleaning step. This may be one of the reasons these two open-source models perform worse than GPT-3.

In addition, data quality is not measured along a single dimension. Data diversity, content duplication, and data distribution all need to be considered. For example, although web data is by far the largest of the three categories used by GPT-3 (web pages, encyclopedias, and books), the three types are not sampled during training in proportion to their actual volume.
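The idea of sampling data sources by assigned weights rather than by raw volume can be sketched as follows. The corpus sizes and weights below are made-up illustrative numbers, not the real GPT-3 figures, and the function names are hypothetical.

```python
import random

# Hypothetical corpus sizes (arbitrary token units) and sampling weights.
# These are illustrative values only, NOT the real GPT-3 data mix.
CORPUS_TOKENS = {"web": 400, "books": 60, "encyclopedia": 3}
SAMPLING_WEIGHT = {"web": 0.6, "books": 0.3, "encyclopedia": 0.1}


def proportional_share(sizes):
    """Share each source would get if sampled purely by volume."""
    total = sum(sizes.values())
    return {name: size / total for name, size in sizes.items()}


def sample_sources(weights, n, rng):
    """Draw n training documents' sources according to the assigned weights."""
    names = list(weights)
    return rng.choices(names, weights=[weights[s] for s in names], k=n)


def epochs_seen(sizes, weights, total_training_tokens):
    """How many times each source is traversed for a fixed token budget."""
    return {s: weights[s] * total_training_tokens / sizes[s] for s in sizes}


print(proportional_share(CORPUS_TOKENS))
print(sample_sources(SAMPLING_WEIGHT, 10, random.Random(0)))
print(epochs_seen(CORPUS_TOKENS, SAMPLING_WEIGHT, total_training_tokens=300))
```

With these made-up numbers, the small encyclopedia source is read many times over while the large web source is not even read once in full. This is the kind of effect that weighting sources by perceived quality rather than size produces.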

It is also worth mentioning that in the instruction fine-tuning stage, using manually written instructions may be an important factor. The InstructGPT paper states that, in its evaluation, models trained on manually written instructions performed better than models trained on instructions constructed from existing NLP datasets through templates. This may explain why models such as T0 and FLAN, which were trained on instruction datasets built from NLP datasets, are less effective.

Training process

Giant models of this kind are trained on clusters using data parallelism, model parallelism, and the ZeRO optimizer (a method for reducing memory usage during training) at the same time. These methods introduce more variables that affect training stability. The analysis linked below points out that even whether the model uses bfloat16 precision has a significant impact on the results.

Analysis link: https://jingfengyang.github.io/gpt

With the above in mind, readers should have a general idea of how to build a ChatGPT-like model and the problems that will be encountered. Fortunately, OpenAI has proven that this technical path is feasible, and the emergence of ChatGPT is indeed changing the direction of NLP technology.

4. Future Outlook

ChatGPT has attracted enormous attention since its launch in November 2022. Even non-professionals, and even people who have little contact with computers, are probably more or less aware of its existence. This phenomenon alone shows that its arrival is unusual. The general public mostly experiences it intuitively, with curiosity, surprise, or admiration. For practitioners, its emergence is more a prompt to think about future technological trends.

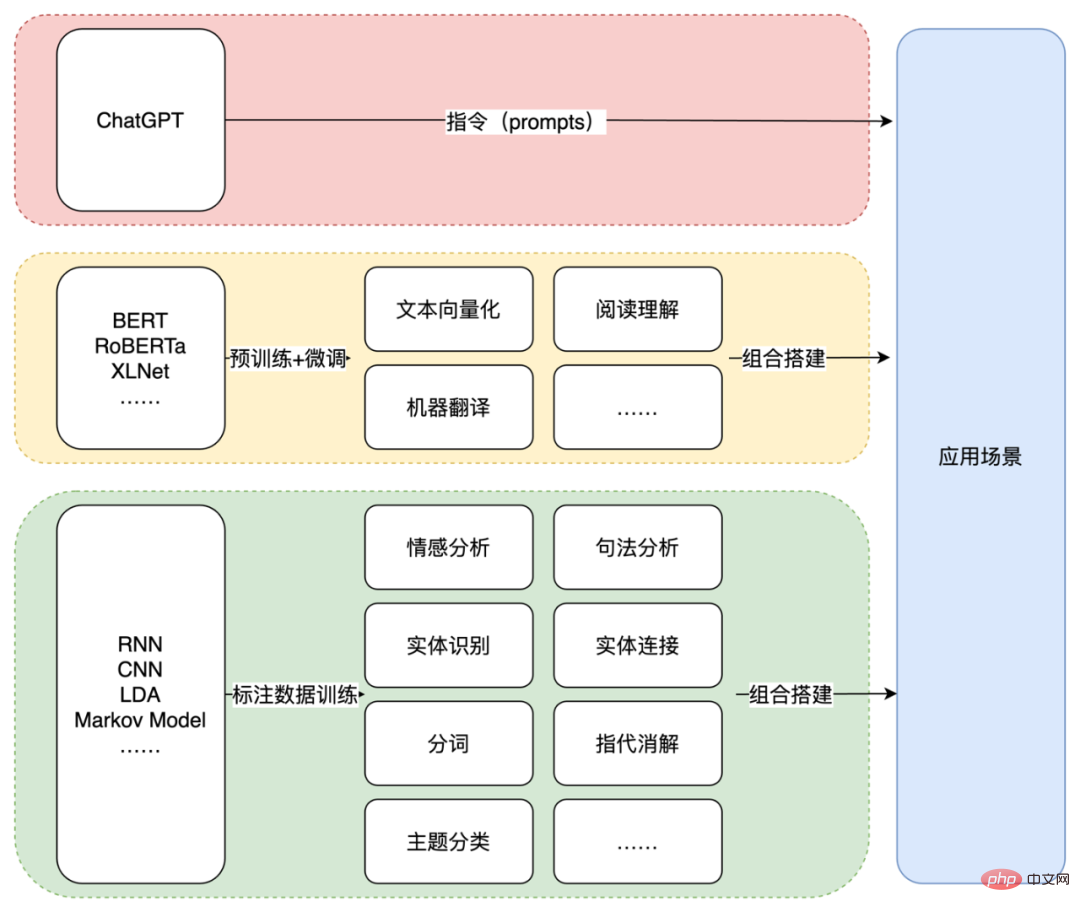

From a technical perspective, the emergence of ChatGPT marks another paradigm shift in the field of NLP. We say "another" because in 2018, the year the first-generation GPT was released, the BERT model released that same year ushered in the "pre-training + fine-tuning" paradigm of NLP with its excellent performance, as described in Chapter 2. Here we focus on the "text generation + instruction" paradigm enabled by ChatGPT. Specifically, a trained ChatGPT or similar text generation model is used to handle a specific scenario by giving it an appropriate instruction (prompt).

This paradigm is very different from earlier ways of applying NLP technology. Whether in the early era of statistical models such as LDA and small deep learning models such as RNNs, or in the later era of pre-training plus fine-tuning represented by BERT, the capabilities the technology provided were relatively atomized and still some distance from actual application scenarios.

Take the earlier example of asking ChatGPT to write an English email from given requirements. With previous practice, you might first need to extract entities, events, and other content (time, location, events, and so on), then use templates or a model to produce the email format, and finally convert it into English with a translation model. Of course, with enough data to train an end-to-end model, some intermediate steps could be skipped. But either way, the final scenario has to be broken down into atomic NLP tasks, or corresponding labeled data is required. For ChatGPT, all it takes is a suitable instruction.

Three stages of NLP technology paradigm.

A generative model combined with prompts skips the various intermediate NLP capability components and solves the application scenario's problem in the most direct way. Under this paradigm, the technical path to an end application is no longer a building-block combination of single-point NLP capability modules.

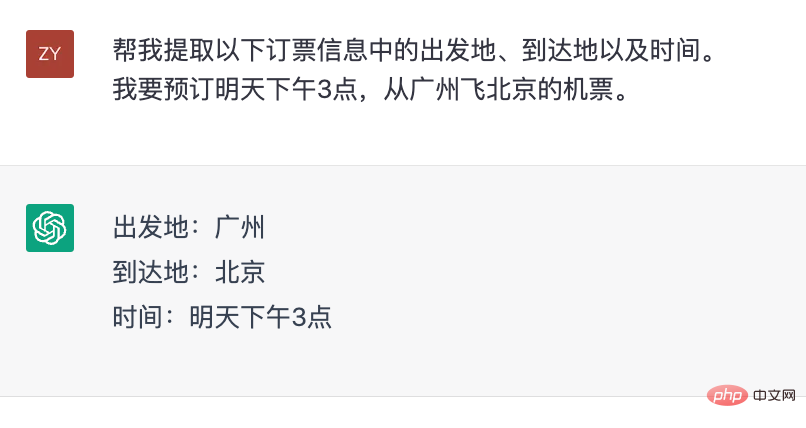

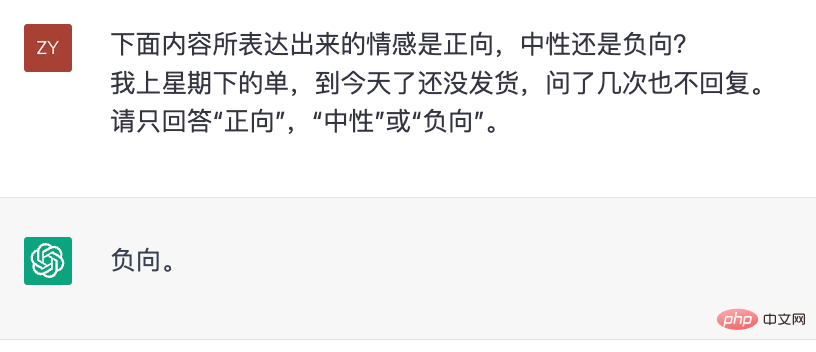

Of course, this will not happen overnight, nor does it mean that single-point NLP capabilities have become unimportant. From an evaluation perspective, the quality of each single-point capability can still serve as an indicator of a model's effectiveness. Moreover, in some scenarios single-point capabilities are still in strong demand. A ticket booking system, for example, needs to extract times and locations. Unlike before, however, ChatGPT can perform these single-point tasks itself, without additional functional modules.

ChatGPT performs information extraction.

ChatGPT makes emotional judgments.

From this perspective, ChatGPT can be regarded as an NLP tool that uses natural language as its interface. In the past, we achieved a given NLP capability by designing a training task and pairing it with training data for a model; with ChatGPT, we achieve it by designing instructions.
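The contrast can be sketched in code: instead of one trained model per task, each task becomes an instruction wrapped around the same text-generation call. In this sketch the instruction wording, the `build_task_prompt` and `run_task` helpers, and the `generate` callable are all hypothetical; `generate` stands in for whatever function sends a prompt to a text-generation model and returns its output.

```python
# Hypothetical instructions: one per "single-point" NLP capability.
TASK_INSTRUCTIONS = {
    "extract": (
        "Extract the departure time and destination from the text below. "
        "Answer as JSON with the keys 'time' and 'destination'."
    ),
    "sentiment": (
        "Classify the sentiment of the text below as positive, negative, or neutral."
    ),
}


def build_task_prompt(task, text):
    """Turn a task name and an input text into a single prompt string."""
    return f"{TASK_INSTRUCTIONS[task]}\n\nText: {text}\nAnswer:"


def run_task(task, text, generate):
    """`generate` is any callable mapping a prompt string to the model's text output."""
    return generate(build_task_prompt(task, text)).strip()


# Stand-in for a real model call, used only so the sketch runs end to end.
def fake_generate(prompt):
    return " positive "


print(build_task_prompt("extract", "I want to fly to Shanghai at 9 am tomorrow."))
print(run_task("sentiment", "The service was wonderful.", fake_generate))
```

Adding a new capability in this setup means writing a new instruction rather than collecting labeled data and training another model.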

As you can imagine, the emergence of ChatGPT has greatly lowered the application threshold of NLP technology. But it is not yet omnipotent. The most important point is that it lacks accurate and reliable vertical domain knowledge. In order to make its answers reliable, the most direct way is to provide it with external sources of knowledge, just like Microsoft uses Bing's search results as the source of information for its answers.

Therefore, "traditional" NLP technology will not die out completely, but will play an "auxiliary" role that compensates for ChatGPT's current shortcomings. This may be the new paradigm for applying NLP technology in the future.
