The parameters increase slightly, and performance metrics explode! Google: Large language models hide "mysterious skills"

Because they can do things they were not trained to do, large language models seem to have a kind of magic, and have therefore become a focus of hype and attention from the media and researchers.

When a large language model is scaled up, new capabilities occasionally appear that are not present in smaller models. This "creativity"-like property is called "emergent" ability, and some take it to represent a giant step towards general artificial intelligence.

Now, researchers from Google, Stanford, DeepMind and the University of North Carolina are exploring the "emergent" abilities of large language models.


Magical "emergent" abilities

Natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data. Scaling up language models often improves performance and sample efficiency on a range of downstream NLP tasks.

In many cases, we can predict the performance of a large language model by extrapolating the performance trends of smaller models. For example, the effect of scale on language model perplexity has been demonstrated across more than seven orders of magnitude.
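The extrapolation described above can be sketched as a straight-line fit in log-log space. This is only an illustration, assuming performance follows a power law in model size; the function names, the coefficients and the data points are made up for the sketch and are not taken from the paper.

```python
import math

def fit_power_law(sizes, losses):
    """Least-squares fit of log(loss) = log(a) + b * log(size); returns (a, b)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                        # slope in log-log space
    a = math.exp(mean_y - b * mean_x)    # intercept, mapped back from log space
    return a, b

def predict(a, b, size):
    """Evaluate the fitted power law a * size**b at a new model size."""
    return a * size ** b

# Synthetic, made-up measurements generated from loss = 10 * N**-0.05
small_sizes = [1e6, 1e7, 1e8, 1e9]
small_losses = [10.0 * s ** -0.05 for s in small_sizes]

a, b = fit_power_law(small_sizes, small_losses)
extrapolated = predict(a, b, 1e11)  # predicted loss for a much larger model
```

A fit like this only works when the trend is smooth. It cannot anticipate a task whose performance stays flat and then jumps.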

However, performance on some other tasks does not improve in a predictable way.

For example, the GPT-3 paper shows that the ability of language models to perform multi-digit addition has a flat scaling curve, with approximately random performance, for models from 100M to 13B parameters, after which performance jumps sharply.

Given the increasing use of language models in NLP research, it is important to better understand these capabilities that may arise unexpectedly.

In a recent paper, "Emergent Abilities of Large Language Models," published in Transactions on Machine Learning Research (TMLR), the researchers present dozens of examples of "emergent" abilities that result from scaling up language models.

The existence of this "emergent" capability raises the question of whether additional scaling can further expand the range of capabilities of language models.

Certain prompting and fine-tuning methods only produce improvements in larger models

"Emergent" prompted tasks

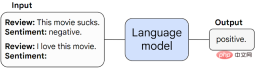

First, we discuss the "emergent" abilities that may appear in prompted tasks.

In this type of task, a pre-trained language model is given a prompt for a task framed as next-word prediction, and it performs the task by completing the response.

Without any further fine-tuning, language models can often perform tasks not seen during training.

We call a prompted task "emergent" when its performance unpredictably surges from random to well above random at a specific scale threshold.

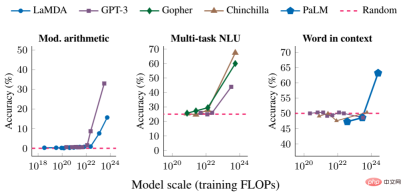

Below we present three examples of prompted tasks with "emergent" performance: multi-step arithmetic, taking a college-level exam, and identifying the intended meaning of a word.

In each case, language models perform poorly, with little dependence on model size, until a certain threshold is reached, at which point their performance spikes.

Performance on these tasks becomes non-random only for models of sufficient scale: for example, above 10^22 training FLOPs (total floating-point operations used in training, not operations per second) for the arithmetic and multi-task NLU tasks, and above 10^24 training FLOPs for the word-in-context task.
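One way to make the idea of a scale threshold concrete is to look for the smallest training budget at which accuracy clearly exceeds the random baseline. The sketch below is a simplified illustration, not the paper's procedure; the function name, the `margin` parameter and the accuracy values are all hypothetical.

```python
def emergence_threshold(scales, accuracies, random_baseline, margin=0.05):
    """Return the smallest scale whose accuracy exceeds random_baseline + margin.

    Returns None if no scale clears the bar. The margin is an arbitrary
    choice made for this sketch.
    """
    for scale, acc in sorted(zip(scales, accuracies)):
        if acc > random_baseline + margin:
            return scale
    return None

# Made-up accuracies on a hypothetical 4-way multiple-choice task,
# where random guessing scores 0.25.
train_flops = [1e20, 1e21, 1e22, 1e23, 1e24]
accuracy = [0.25, 0.26, 0.24, 0.45, 0.70]

threshold = emergence_threshold(train_flops, accuracy, random_baseline=0.25)
```

With these illustrative numbers the curve is flat up to 10^22 training FLOPs, and the first clearly non-random result is at 10^23.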

"Emergent" prompt strategy

The second category of "emergent" capabilities includes prompt strategies that enhance language model capabilities.

Prompting strategies are a broad paradigm for prompting that can be applied to a range of different tasks. They are considered "emergent" when they fail for small models and can only be used by sufficiently large models.

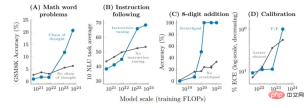

Chain-of-thought prompting is a typical example of an "emergent" prompting strategy, in which the model is prompted to generate a series of intermediate steps before giving the final answer.

Chain-of-thought prompting enables language models to perform tasks that require complex reasoning, such as multi-step math word problems.

It is worth mentioning that the model can acquire the ability to do chain-of-thought reasoning without explicit training. The figure below shows an example of a chain-of-thought prompt.

The empirical results of chain-of-thought prompting are as follows.

For smaller models, applying chain-of-thought prompting is no better than standard prompting, for example when applied to GSM8K, a challenging benchmark of math word problems.

However, for large models, chain-of-thought prompting achieved a 57% solve rate on GSM8K, significantly improving performance in our tests.
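The difference between standard prompting and chain-of-thought prompting can be sketched as plain string construction. This is a minimal illustration, assuming a few-shot "Q:/A:" format; the helper names, the exemplar and the question are written for this sketch and do not come from the paper or from GSM8K, and no model is called.

```python
def standard_prompt(examples, question):
    """Few-shot prompt in which each exemplar shows only the final answer."""
    parts = [
        f"Q: {ex['question']}\nA: The answer is {ex['answer']}."
        for ex in examples
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

def chain_of_thought_prompt(examples, question):
    """Few-shot prompt in which each exemplar spells out intermediate steps first."""
    parts = [
        f"Q: {ex['question']}\nA: {ex['steps']} The answer is {ex['answer']}."
        for ex in examples
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

# Hypothetical exemplar and question, invented for this sketch.
examples = [{
    "question": "A shelf holds 3 boxes with 4 books each. 2 books are removed. "
                "How many books remain?",
    "steps": "3 boxes times 4 books is 12 books. 12 minus 2 is 10.",
    "answer": "10",
}]
question = ("A farmer has 5 crates with 6 apples each and sells 7 apples. "
            "How many apples are left?")

print(chain_of_thought_prompt(examples, question))
```

Both prompts end with an open "A:" for the model to complete. The only difference is whether the exemplars demonstrate the intermediate reasoning.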

The significance of studying "emergent" abilities

So what is the significance of studying "emergent" abilities?

Identifying “emergent” capabilities in large language models is the first step in understanding this phenomenon and its potential impact on future model capabilities.

For example, because "emergent" few-shot prompting abilities and strategies are not explicitly encoded in pre-training, researchers may not know the full range of few-shot prompting abilities of current language models.

In addition, the question of whether further scaling could give larger models additional "emergent" abilities is also very important.

- Why do "emergent" abilities appear?

- When certain abilities emerge, will new real-world applications of language models be unlocked?

- Since computing resources are expensive, can emergent abilities be unlocked by other means (such as better model architectures or training techniques) without increasing scale?

The researchers say the answers to these questions are not yet known.

However, as the field of NLP continues to develop, it is very important to analyze and understand the behavior of language models, including the "emergent" capabilities produced by scaling.

The above is the detailed content of The parameters are slightly improved, and the performance index explodes! Google: Large language models hide 'mysterious skills”. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

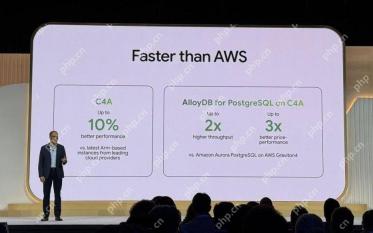

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Linux new version

SublimeText3 Linux latest version

Notepad++7.3.1

Easy-to-use and free code editor

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software