For the first time, AI edits pictures from a single sentence without relying on a generative model!

2022 was the breakout year for AI-generated content (AIGC). One popular direction is editing pictures through text descriptions (text prompts). Existing methods usually rely on generative models trained on large-scale datasets, which brings high data acquisition and training costs as well as large model sizes. These factors raise the barrier to developing and deploying the technology in practice, and limit the development and creativity of AIGC.

To address these pain points, NetEase Interactive Entertainment AI Lab collaborated with Shanghai Jiao Tong University and proposed CLIPVG, a solution based on a differentiable vector renderer that, for the first time, achieves text-guided image editing without relying on any generative model. The solution uses the characteristics of vector elements to constrain the optimization process, so it avoids massive data requirements and high training overhead while reaching state-of-the-art generation quality. The corresponding paper, "CLIPVG: Text-Guided Image Manipulation Using Differentiable Vector Graphics", has been accepted to AAAI 2023.

- Paper address: https://arxiv.org/abs/2212.02122

- Open source code: https://github.com/NetEase-GameAI/clipvg

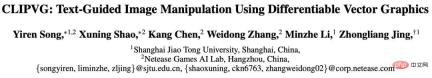

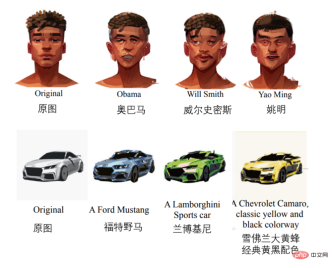

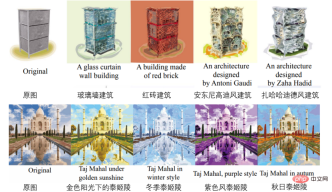

Some example results, in order: face editing, car model modification, building generation, color change, pattern modification, and font modification.

Ideas and technical background

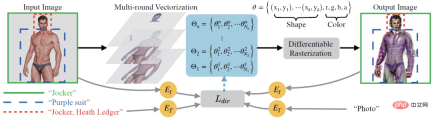

In terms of the overall process, CLIPVG first proposes a multi-round vectorization method that robustly converts pixel images into the vector domain and suits subsequent image editing. It then defines an ROI CLIP loss that supports guiding each region of interest (ROI) with different text. The entire optimization uses a differentiable vector renderer to compute gradients with respect to the vector parameters (such as fill colors and control points).

CLIPVG combines techniques from two fields: text-guided image editing in the pixel domain, and the generation of vector images. The relevant technical background is introduced in turn below.
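To make "vector parameters" concrete, here is a minimal sketch of how a filled vector element might be represented and how its shape and color parameters could be collected for an optimizer. The names `FilledCurve` and `trainable_parameters` are hypothetical and chosen for illustration; this is not the CLIPVG or Diffvg data model.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FilledCurve:
    """One vector element: a closed shape with a single fill color (illustrative only)."""
    control_points: List[Tuple[float, float]]  # shape parameters
    rgba: Tuple[float, float, float, float]    # color and opacity parameters


def trainable_parameters(elements, optimize_shape=True, optimize_color=True):
    """Flatten the parameters an optimizer would be allowed to update."""
    params = []
    for element in elements:
        if optimize_shape:
            for x, y in element.control_points:
                params.extend([x, y])
        if optimize_color:
            params.extend(element.rgba)
    return params


# A toy scene: one square and one semi-transparent triangle.
scene = [
    FilledCurve([(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)], (0.9, 0.1, 0.1, 1.0)),
    FilledCurve([(0.2, 0.2), (0.8, 0.2), (0.5, 0.8)], (0.1, 0.1, 0.9, 0.5)),
]

all_params = trainable_parameters(scene)
color_only = trainable_parameters(scene, optimize_shape=False)
shape_only = trainable_parameters(scene, optimize_color=False)
```

In a real pipeline these values would be tensors that receive gradients through the differentiable renderer; plain floats are used here only to show which numbers belong to which group.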

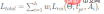

Text-guided image translation

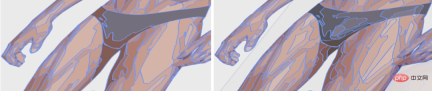

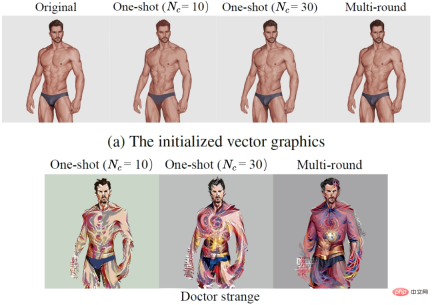

A typical way to let AI "understand" text guidance during image editing is to use the Contrastive Language-Image Pre-Training (CLIP) model. CLIP encodes text and images into a shared latent space and provides cross-modal similarity information about whether an image matches a text description, thereby establishing a semantic connection between text and images. In practice, however, it is difficult to guide image editing effectively with CLIP alone. CLIP mainly captures the high-level semantics of an image and places no constraints on pixel-level details, so the optimization easily falls into local minima or adversarial solutions.

The common existing approach is to combine CLIP with a pixel-domain generative model based on a GAN or diffusion, such as StyleCLIP (Patashnik et al. 2021), StyleGAN-NADA (Gal et al. 2022), Disco Diffusion (alembics 2022), DiffusionCLIP (Kim, Kwon, and Ye 2022), and DALL·E 2 (Ramesh et al. 2022). These schemes use the generative model to constrain image details, making up for the shortcomings of CLIP alone. At the same time, the generative models rely heavily on training data and computing resources, and they restrict the effective range of image editing to what the training set covers. Constrained by the capacity of the generative model, methods such as StyleCLIP, StyleGAN-NADA, and DiffusionCLIP can only apply a single model to a specific domain, such as face images. Methods such as Disco Diffusion and DALL·E 2 can edit arbitrary images, but they require massive data and computing resources to train the corresponding generative models.

Very few existing solutions avoid generative models; CLIPstyler (Kwon and Ye 2022) is one. During optimization, CLIPstyler divides the image to be edited into random patches and applies CLIP guidance to each patch to strengthen the constraints on image details.
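The patch-based guidance just described can be pictured with a small sketch of random patch sampling. This is an illustrative assumption about how patches could be drawn, not CLIPstyler's actual implementation; `sample_patches` and `crop` are hypothetical helper names, and the image is a plain nested list rather than a tensor.

```python
import random


def sample_patches(height, width, patch_size, num_patches, seed=0):
    """Return (top, left, bottom, right) boxes for random square patches."""
    if patch_size > min(height, width):
        raise ValueError("patch_size must fit inside the image")
    rng = random.Random(seed)
    boxes = []
    for _ in range(num_patches):
        # randint is inclusive on both ends, so the patch always stays in bounds.
        top = rng.randint(0, height - patch_size)
        left = rng.randint(0, width - patch_size)
        boxes.append((top, left, top + patch_size, left + patch_size))
    return boxes


def crop(image, box):
    """Crop a nested-list image (rows of pixels) to the given box."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


# Toy 8x8 "image" whose pixel value records its own (row, column) position.
image = [[(y, x) for x in range(8)] for y in range(8)]
boxes = sample_patches(height=8, width=8, patch_size=4, num_patches=3)
patches = [crop(image, box) for box in boxes]
```

Each cropped patch would then be scored against the text by CLIP; that step is omitted here because it needs the pretrained model.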
The problem is that each patch will independently reflect the semantics defined by the input text. As a result, this solution can only perform style transfer, but cannot perform overall high-level semantic editing of the image. Different from the above pixel domain methods, the CLIPVG solution proposed by NetEase Interactive Entertainment AI Lab uses the characteristics of vector graphics to constrain image details to replace the generative model. CLIPVG can support any input image and can perform general-purpose image editing. Its output is a standard svg format vector graphic, which is not limited by resolution. Some existing works consider text-guided vector graphics generation, such as CLIPdraw (Frans, Soros, and Witkowski 2021), StyleCLIPdraw (Schaldenbrand, Liu, and Oh 2022) et al. A typical approach is to combine CLIP with a differentiable vector renderer, and start from randomly initialized vector graphics and gradually approximate the semantics represented by the text. The differentiable vector renderer used is Diffvg (Li et al. 2020), which can rasterize vector graphics into pixel images through differentiable rendering. CLIPVG also uses Diffvg to establish the connection between vector images and pixel images. Different from existing methods, CLIPVG focuses on how to edit existing images rather than directly generating them. Since most of the existing images are pixel images, they need to be vectorized before they can be edited using the characteristics of vector graphics. Existing vectorization methods include Adobe Image Trace (AIT), LIVE (Ma et al. 2022), etc., but these methods do not consider subsequent editing needs. CLIPVG introduces multiple rounds of vectorization enhancement methods based on existing methods to specifically improve the robustness of image editing. Technical implementation The overall process of CLIPVG is shown in the figure below. 
First, the input pixel image is subjected to multi-round vectorization (Multi-round Vectorization) with different precisions, where the set of vector elements obtained in the i-th round is marked as Θi. The results obtained in each round will be superimposed together as an optimization object, and converted back to the pixel domain through differentiable vector rendering (Differentiable Rasterization). The starting state of the output image is the vectorized reconstruction of the input image, and then iterative optimization is performed in the direction described in the text. The optimization process will calculate the ROI CLIP loss ( The entire iterative optimization process can be seen in the following example, in which the guide text is "Jocker, Heath Ledger" (Joker, Heath Ledger) . Vectorization Vector graphics can be defined as a collection of vector elements, where each vector element is controlled by a series of parameters. The parameters of the vector element depend on its type. Taking a filled curve as an example, its parameters are , where is the control Point parameters, are parameters for RGB color and opacity. There are some natural constraints when optimizing vector elements. For example, the color inside an element is always consistent, and the topological relationship between its control points is also fixed. These features make up for CLIP's lack of detailed constraints and can greatly enhance the robustness of the optimization process. Theoretically, CLIPVG can be vectorized using any existing method. But research has found that doing so can lead to several problems with subsequent image editing. First of all, the usual vectorization method can ensure that the adjacent vector elements of the image are perfectly aligned in the initial state, but each element will move with the optimization process, causing "cracks" to appear between the elements. 
Secondly, sometimes the input image is relatively simple and only requires a small number of vector elements to fit, while the effect of text description requires more complex details to express, resulting in the lack of necessary raw materials (vector elements) during image editing. In response to the above problems, CLIPVG proposed a multi-round vectorization strategy. In each round, existing methods will be called to obtain a vectorized result, which will be superimposed in sequence. Each round improves accuracy relative to the previous round, i.e. vectorizes with smaller blocks of vector elements. The figure below reflects the difference in different precisions during vectorization. The set of vector elements obtained by the i-th round of vectorization can be expressed as Loss function Similar to StyleGAN-NADA and CLIPstyler, CLIPVG uses a directional CLIP loss to Measures the correspondence between generated images and description text, which is defined as follows, where Ai is the i-th ROI area, which is The total loss is the sum of ROI CLIP losses in all areas, that is, Here is the one The region can be a ROI, or a patch cropped from the ROI. CLIPVG will optimize the vector parameter set Θ based on the above loss function. When optimizing, you can also target only a subset of Θ, such as shape parameters, color parameters, or some vector elements corresponding to a specific area. In the experimental part, CLIPVG first verified the effectiveness of multiple rounds of vectorization strategies and vector domain optimization through ablation experiments, and then compared it with the existing baseline A comparison was made, and unique application scenarios were finally demonstrated. Ablation experiment The study first compared the multi-round vectorization (Multi-round) strategy and only one-round vectorization (One- shot) effect. The first line in the figure below is the initial result after vectorization, and the second line is the edited result. 
where Nc represents the accuracy of vectorization. It can be seen that multiple rounds of vectorization not only improve the reconstruction accuracy of the initial state, but also effectively eliminate the cracks between vector elements after editing and enhance the performance of details. #In order to further study the characteristics of vector domain optimization, the paper compares CLIPVG (vector domain method) and CLIPstyler (pixel domain method) using different patch sizes The effect of enhancement. The first line in the figure below shows the effect of CLIPVG using different patch sizes, and the second line shows the effect of CLIPstyler. Its textual description is "Doctor Strange". The resolution of the entire image is 512x512. It can be seen that when the patch size is small (128x128 or 224x224), both CLIPVG and CLIPstyler will display the representative red and blue colors of "Doctor Strange" in small local areas, but the semantics of the entire face do not change significantly. . This is because the CLIP guidance at this time is not applied to the entire image. When CLIPVG increases the patch size to 410x410, you can see obvious changes in character identity, including hairstyles and facial features, which are effectively edited according to text descriptions. If patch enhancement is removed, the semantic editing effect and detail clarity will be reduced, indicating that patch enhancement still has a positive effect. Unlike CLIPVG, CLIPstyler still cannot change the character's identity when the patch is larger or the patch is removed, but only changes the overall color and some local textures. The reason is that the method of enlarging the patch size in the pixel domain loses the underlying constraints and falls into a local optimum. 
This set of comparisons shows that CLIPVG can effectively utilize the constraints on details in the vector domain and achieve high-level semantic editing combined with the larger CLIP scope (patch size), which is difficult to achieve with pixel domain methods. Comparative experiment In the comparative experiment, the study first used CLIPVG and two methods to edit any picture. The pixel domain methods were compared, including Disco Diffusion and CLIPstyler. As you can see in the figure below, for the example of "Self-Portrait of Vincent van Gogh", CLIPVG can edit the character identity and painting style at the same time, while the pixel domain method only can achieve one of them. For "Gypsophila", CLIPVG can edit the number and shape of petals more accurately than the baseline method. In the examples of "Jocker, Heath Ledger" and "A Ford Mustang", CLIPVG can also robustly change the overall semantics. Relatively speaking, Disco Diffusion is prone to local flaws, while CLIPstyler generally only adjusts the texture and color. (Top down: Van Gogh painting, gypsophila, Heath Ledger Joker , Ford Mustang) #The researchers then compared pixel domain methods for images in specific fields (taking human faces as an example), including StyleCLIP, DiffusionCLIP and StyleGAN-NADA. Due to the restricted scope of use, the generation quality of these baseline methods is generally more stable. In this set of comparisons, CLIPVG still shows that the effect is not inferior to existing methods, especially the degree of consistency with the target text is often higher. (Top to bottom: Doctor Strange, White Walkers, Zombies) Using the characteristics of vector graphics and ROI-level loss functions, CLIPVG can support a series of innovative gameplay that are difficult to achieve with existing methods. For example, the editing effect of the multi-person picture shown at the beginning of this article is achieved by defining different ROI level text descriptions for different characters. 
The left side of the picture below is the input, the middle is the editing result of the ROI level text description, and the right side is the result of the entire picture having only one overall text description. The descriptions corresponding to areas A1 to A7 are 1. "Justice League Six", 2. "Aquaman", 3. "Superman", 4. "Wonder Woman" ), 5. "Cyborg" (Cyborg), 6. "Flash, DC Superhero" (The Flash, DC) and 7. "Batman" (Batman). It can be seen that the description at the ROI level can be edited separately for each character, but the overall description cannot generate effective individual identity characteristics. Since the ROIs overlap with each other, it is difficult for existing methods to achieve the overall coordination of CLIPVG even if each character is edited individually. CLIPVG can also achieve a variety of special editing effects by optimizing some vector parameters. The first line in the image below shows the effect of editing only a partial area. The second line shows the font generation effect of locking the color parameters and optimizing only the shape parameters. The third line is the opposite of the second line, achieving the purpose of recoloring by optimizing only the color parameters. (Top-down: sub-area editing, font stylization, image color change)Vector image generation

in the figure below) based on the area range and associated text of each ROI, and optimize each vector element according to the gradient, including color parameters and shape parameters.

in the figure below) based on the area range and associated text of each ROI, and optimize each vector element according to the gradient, including color parameters and shape parameters.

, and the results produced by all rounds The set of vector elements obtained after superposition is denoted as

, and the results produced by all rounds The set of vector elements obtained after superposition is denoted as  , which is the total optimization object of CLIPVG.

, which is the total optimization object of CLIPVG.

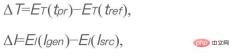

represents the input text description.

represents the input text description.  is a fixed reference text, set to "photo" in CLIPVG, and

is a fixed reference text, set to "photo" in CLIPVG, and  is the generated image (the object to be optimized).

is the generated image (the object to be optimized).  is the original image.

is the original image.  and

and  are the text and image codecs of CLIP respectively. ΔT and ΔI represent the latent space directions of text and image respectively. The purpose of optimizing this loss function is to make the semantic change direction of the image after editing conform to the description of the text. The fixed t_ref is ignored in subsequent formulas. In CLIPVG, the generated image is the result of differentiable rendering of vector graphics. In addition, CLIPVG supports assigning different text descriptions to each ROI. At this time, the directional CLIP loss will be converted into the following ROI CLIP loss,

are the text and image codecs of CLIP respectively. ΔT and ΔI represent the latent space directions of text and image respectively. The purpose of optimizing this loss function is to make the semantic change direction of the image after editing conform to the description of the text. The fixed t_ref is ignored in subsequent formulas. In CLIPVG, the generated image is the result of differentiable rendering of vector graphics. In addition, CLIPVG supports assigning different text descriptions to each ROI. At this time, the directional CLIP loss will be converted into the following ROI CLIP loss,

The associated text description. R is a differentiable vector renderer, and R(Θ) is the entire rendered image.

The associated text description. R is a differentiable vector renderer, and R(Θ) is the entire rendered image.  is the entire input image.

is the entire input image.  represents a cropping operation, which means cropping the area

represents a cropping operation, which means cropping the area  from image I. CLIPVG also supports a patch-based enhancement scheme similar to that in CLIPstyler, that is, multiple patches can be further randomly cropped from each ROI, and the CLIP loss is calculated for each patch based on the text description corresponding to the ROI.

from image I. CLIPVG also supports a patch-based enhancement scheme similar to that in CLIPstyler, that is, multiple patches can be further randomly cropped from each ROI, and the CLIP loss is calculated for each patch based on the text description corresponding to the ROI.

is the loss weight corresponding to each area.

is the loss weight corresponding to each area. Experimental results

More applications
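The ROI-level guidance behind these applications rests on the directional CLIP loss described earlier. As a rough illustration, the sketch below computes a weighted sum of per-region directional losses, assuming the common form 1 - cos(ΔI, ΔT) and using small toy vectors in place of real CLIP embeddings. The function names and the dictionary layout are hypothetical; in practice the embeddings would come from CLIP encoders applied to cropped regions of the rendered and source images.

```python
import math


def sub(a, b):
    """Element-wise difference of two equal-length vectors."""
    return [x - y for x, y in zip(a, b)]


def cosine(a, b):
    """Cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def directional_loss(e_gen, e_src, e_tgt, e_ref):
    """1 - cos(delta_I, delta_T) for precomputed image and text embeddings."""
    delta_i = sub(e_gen, e_src)   # direction of change in image space
    delta_t = sub(e_tgt, e_ref)   # direction of change in text space
    return 1.0 - cosine(delta_i, delta_t)


def total_roi_loss(rois, e_ref):
    """Weighted sum of directional losses, one term per region."""
    return sum(
        roi["weight"] * directional_loss(roi["e_gen"], roi["e_src"], roi["e_tgt"], e_ref)
        for roi in rois
    )


# Toy 3-D embeddings standing in for CLIP outputs (assumed values, not real data).
e_ref = [0.0, 0.0, 1.0]  # stands in for the reference text "photo"
rois = [
    # Image change parallel to the text direction: this term contributes 0.
    {"weight": 1.0, "e_src": [0.0, 0.0, 1.0], "e_gen": [2.0, 0.0, 1.0], "e_tgt": [1.0, 0.0, 1.0]},
    # Image change orthogonal to the text direction: this term contributes 0.5 * 1.
    {"weight": 0.5, "e_src": [0.0, 0.0, 1.0], "e_gen": [0.0, 1.0, 1.0], "e_tgt": [1.0, 0.0, 1.0]},
]
loss = total_roi_loss(rois, e_ref)
```

Giving each region its own target embedding and weight is what lets different characters in one picture follow different text descriptions while sharing a single optimization objective.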

The above is the detailed content of For the first time, you don't rely on a generative model, and let AI edit pictures in just one sentence!. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

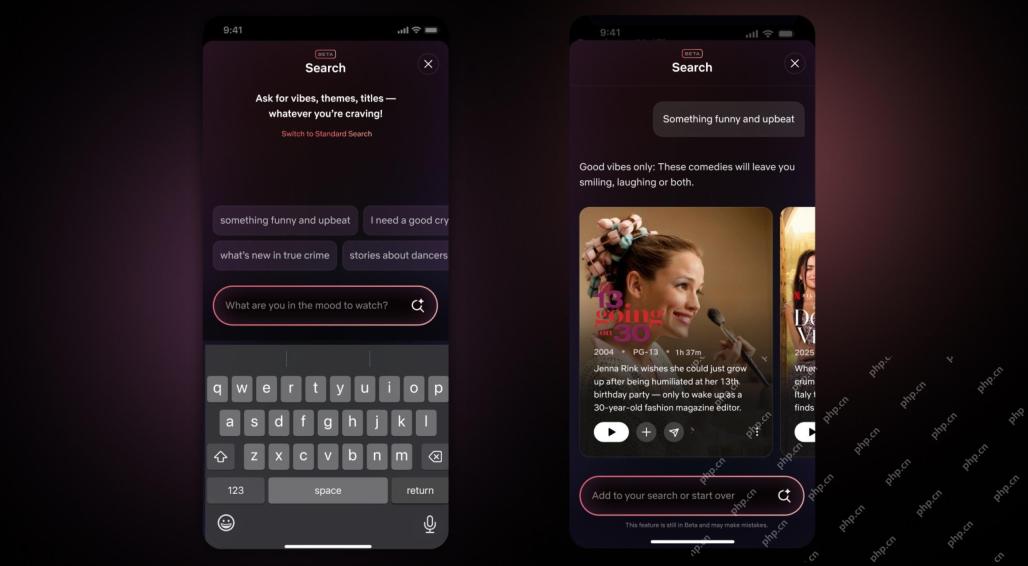

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

WebStorm Mac version

Useful JavaScript development tools

Dreamweaver CS6

Visual web development tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.