Xiaozha spent a lot of money! Meta has developed an AI model specifically for the Metaverse


Artificial intelligence will become the backbone of the virtual world.

Artificial intelligence can be combined with a variety of related technologies in the metaverse, such as computer vision, natural language processing, blockchain and digital twins.

In February, Zuckerberg showed off what the Metaverse would look like at Inside The Lab, the company’s first virtual event. He said the company is developing a new series of generative AI models that will allow users to generate their own virtual reality avatars simply by describing them.

Zuckerberg announced a series of upcoming projects, such as Project CAIRaoke, a fully end-to-end neural model for building on-device voice assistants that lets users talk to those assistants more naturally. Meanwhile, Meta is working to build a universal speech translator that provides direct speech-to-speech translation for all languages.

A few months later, Meta fulfilled its promise. However, Meta isn't the only tech company with skin in the game. Companies such as NVIDIA have also released their own AI models to provide a richer Metaverse experience.

Open Pre-trained Transformer (OPT, 175 billion parameters)

GANverse3D

GANverse3D, developed by NVIDIA AI Research, is a model that uses deep learning to turn 2D images into animatable 3D versions. The tool, described in a research paper published at ICLR and CVPR last year, can produce simulations faster and at a lower cost.

This model uses StyleGAN to automatically generate multiple views from a single image. The application can be imported as an extension to NVIDIA Omniverse to accurately render 3D objects in virtual worlds. NVIDIA's Omniverse helps users create simulations of their final ideas in a virtual environment.

The production of 3D models has become a key factor in building the metaverse. Retailers such as Nike and Forever 21 have set up virtual stores in the Metaverse to drive e-commerce sales.

Visual Acoustic Matching Model (AViTAR)

Meta’s Reality Labs team collaborated with the University of Texas to build an artificial intelligence model that improves sound quality in the metaverse. This model helps match audio and video in a scene: it transforms audio clips to make them sound as if they were recorded in a specific environment. The model is trained with self-supervised learning on data extracted from random online videos. Ideally, users should be able to view their favorite memories on their AR glasses and hear the exact sounds produced by the actual experience.

Meta AI has released AViTAR as open source, along with two other acoustic models. Such releases are rare, since sound is an often overlooked part of the metaverse experience.

Visually-Informed Dereverberation of Audio (VIDA)

The second acoustic model released by Meta AI removes reverberation from audio.

The model is trained on a large-scale dataset with various realistic audio renderings from 3D models of homes. Reverb not only reduces the quality of the audio, making speech harder to understand, but also lowers the accuracy of automatic speech recognition.

VIDA is unique in that it uses visual cues in addition to the observed audio. Improving on typical audio-only approaches, VIDA can enhance speech and help with recognizing speech and identifying speakers.
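To make the reverberation problem concrete, here is a minimal sketch of the standard signal-processing view that a reverberant recording is the dry signal convolved with a room impulse response. This is not VIDA's method; the helper names (`synthetic_impulse_response`, `convolve`) and the toy decay value are assumptions chosen for illustration only.

```python
import math
import random


def synthetic_impulse_response(length, decay, seed=0):
    """Toy room impulse response (hypothetical): direct path plus decaying random echoes."""
    rng = random.Random(seed)
    ir = [1.0]  # the direct sound arrives first at full strength
    for n in range(1, length):
        # later reflections are random in sign and shrink exponentially
        ir.append(rng.uniform(-1.0, 1.0) * math.exp(-decay * n))
    return ir


def convolve(signal, ir):
    """Direct-form linear convolution; output has len(signal) + len(ir) - 1 samples."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[i + j] += s * h
    return out


# A short "dry" click: one sample of energy surrounded by silence.
dry = [0.0] * 8
dry[2] = 1.0

ir = synthetic_impulse_response(length=16, decay=0.3)
wet = convolve(dry, ir)  # the reverberant version of the click

# Count samples carrying non-negligible energy before and after reverb.
active_dry = sum(1 for x in dry if abs(x) > 1e-3)
active_wet = sum(1 for x in wet if abs(x) > 1e-3)
```

The single click is smeared across many samples once the echoes are added. Dereverberation is the inverse problem: recovering `dry` when only `wet` is observed and the impulse response is unknown, which is where extra information about the room, such as visual cues, can plausibly help.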

VisualVoice

VisualVoice, the third acoustic model released by Meta AI, can extract speech from videos. Like VIDA, VisualVoice is trained on audio-visual cues from unlabeled videos. The model separates speech automatically.

This model has important application scenarios, such as assistive technology for the hearing-impaired, enhancing the sound of wearable AR devices, and transcribing speech from online videos recorded in noisy environments.

Audio2Face

Last year, NVIDIA released an open beta of Omniverse Audio2Face to generate AI-driven facial animations to match any voiceover. This tool simplifies the long and tedious process of animating games and visual effects. The app also allows users to issue commands in multiple languages.

At the beginning of this year, NVIDIA released an update to the tool, adding features such as BlendShape Generation to help users create a set of blendshapes from a neutral avatar. A streaming audio player has also been added, allowing audio data to be streamed from text-to-speech applications. Audio2Face sets up a 3D character model that can be animated with audio tracks. The audio is then fed into a deep neural network. Users can also edit characters in post-processing to change their performance.
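For readers unfamiliar with blendshapes, the sketch below shows the common linear formulation used in animation tools: each blendshape is a deformed copy of the neutral mesh, and a pose is the neutral mesh plus a weighted sum of per-vertex offsets. This is an illustration of the general idea, not Audio2Face's implementation; the `blend` function, the two-vertex "mouth", and the shape names are hypothetical.

```python
def blend(neutral, targets, weights):
    """Return neutral + sum_k w_k * (target_k - neutral), vertex by vertex."""
    result = []
    for i, base in enumerate(neutral):
        vertex = list(base)
        for name, weight in weights.items():
            target = targets[name][i]
            for axis in range(3):
                # add this blendshape's offset from neutral, scaled by its weight
                vertex[axis] += weight * (target[axis] - base[axis])
        result.append(tuple(vertex))
    return result


# Hypothetical two-vertex "mouth": upper and lower lip positions (x, y, z).
neutral = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
targets = {
    "jaw_open": [(0.0, 1.0, 0.0), (0.0, -3.0, 0.0)],  # lower lip drops
    "smile": [(0.5, 1.5, 0.0), (0.5, -0.5, 0.0)],     # both lips shift
}

# A weight of 0.5 moves the lower lip halfway toward the jaw_open target.
half_open = blend(neutral, targets, {"jaw_open": 0.5})
```

In an audio-driven pipeline, a network would plausibly output one weight per blendshape for each frame of audio, and the renderer would apply a blend like this to the character mesh.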

The above is the detailed content of Xiaozha spent a lot of money! Meta has developed an AI model specifically for the Metaverse. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

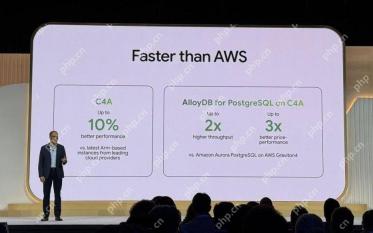

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Atom editor mac version download

The most popular open source editor

WebStorm Mac version

Useful JavaScript development tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function