On November 28, NeurIPS 2022 officially opened.

As one of the most prestigious artificial intelligence events in the world, NeurIPS is the focus of attention in the field of computer science at the end of every year. Papers accepted by NeurIPS represent the highest level of current neuroscience and artificial intelligence research, and also reflect changes in industry trends.

What’s interesting is that this year’s “contestants” seem to have a special liking for “games” in their research.

For example, MineDojo, from Li Feifei's team and built on the Minecraft game environment, won an outstanding paper award in the Datasets and Benchmarks track. Thanks to the game's open-endedness, researchers can train agents on many types of tasks in MineDojo, giving AI more general capabilities.

Another game-related paper that made it past the strict acceptance rate may feel familiar to many gamers.

After all, who hasn't played Honor of Kings?

Paper: "Honor of Kings Arena: an Environment for Generalization in Competitive Reinforcement Learning"

Address: https://openreview.net/pdf?id=7e6W6LEOBg3

In the paper, the researchers propose a test environment based on the MOBA game "Honor of Kings". Its purpose is similar to MineDojo's: training AI.

Why are MOBA game environments so popular?

Since DeepMind launched AlphaGo, games, as simulated environments with a high degree of freedom and high complexity, have become an important choice for AI research and experiments.

However, unlike humans, who can continuously learn from open-ended tasks, agents trained in lower-complexity games cannot generalize their abilities beyond the specific tasks they were trained on. Put simply, these AIs can only play chess or old Atari games.

To develop more "general-purpose" AI, the focus of academic research has gradually shifted from board games to more complex games, including imperfect-information games (such as poker) and strategy games (such as MOBA and RTS games).

At the same time, as Li Feifei's team said in the award-winning paper, for an agent to generalize to more tasks, the training environment needs to provide enough tasks.

DeepMind, which relied on AlphaGo and its successor AlphaZero to defeat the top players in the Go world, quickly realized this.

In 2016, DeepMind teamed up with Blizzard to launch the "StarCraft II Learning Environment" (SC2LE), based on "StarCraft II", a game with a space complexity of 10 to the power of 1685. SC2LE provides researchers with specifications for agent actions and rewards, and an open source Python interface for communicating with the game engine.

China also has an "AI training ground" with excellent qualifications:

"Honor of Kings" is a well-known MOBA game in which the player's state-action space is as large as 10 to the 20,000th power, far larger than that of Go and other games, and even exceeding the total number of atoms in the entire universe (10 to the 80th power).
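To put the exponents quoted above side by side, here is a minimal sketch that compares them in orders of magnitude. It only uses the figures given in this article; the quantities are not measured in exactly the same way (space complexity versus state-action space), so treat the result as a rough illustration rather than a precise comparison.

```python
# Base-10 exponents quoted in the article.
SPACE_EXPONENTS = {
    "atoms in the universe": 80,
    "StarCraft II": 1685,
    "Honor of Kings": 20_000,
}


def magnitude_gap(larger: str, smaller: str) -> int:
    """Return how many orders of magnitude separate two quoted sizes."""
    return SPACE_EXPONENTS[larger] - SPACE_EXPONENTS[smaller]


for name in ("StarCraft II", "atoms in the universe"):
    gap = magnitude_gap("Honor of Kings", name)
    print(f"Honor of Kings exceeds '{name}' by a factor of 10^{gap}")
```

Working with the exponents directly avoids building the huge integers themselves.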

Like DeepMind, Tencent’s AI Lab also teamed up with “Honor of Kings” to jointly develop the “Honor of Kings AI Open Research Environment” that is more suitable for AI research.

Currently, the "Honor of Kings AI Open Research Environment" includes a 1v1 battle environment and a baseline algorithm model, and supports both mirror and non-mirror battle tasks for 20 heroes.

Specifically, the "Honor of Kings AI Open Research Environment" supports 20×20=400 battle sub-tasks when only the hero choices of both sides are considered. If summoner skills are included, there are 40,000 sub-tasks.

To better understand the generalization challenges that an agent faces in the "Honor of Kings AI Open Research Environment", we can look at two tests from the paper:

First, build a behavior-tree AI (BT) at the entry-level "gold" rank. Its opponent is an agent (RL) trained with a reinforcement learning algorithm.

In the first experiment, the agent was trained only on Diao Chan (RL) versus Diao Chan (BT), and the trained RL agent (Diao Chan) was then used to challenge different heroes (BT).
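The sub-task counts above can be checked with a few lines of arithmetic. The sketch below enumerates the hero pairings and then works backwards from the reported total of 40,000. Note that the figure of 10 summoner skills per side is an inference from 40,000 / 400 = 100 = 10×10, not a number stated in this article, and the hero identifiers are placeholders.

```python
import math
from itertools import product

NUM_HEROES = 20          # stated in the article
REPORTED_TOTAL = 40_000  # stated in the article (heroes plus summoner skills)

# Placeholder hero identifiers; the real hero names are not needed for counting.
heroes = [f"hero_{i}" for i in range(NUM_HEROES)]

pairings = list(product(heroes, repeat=2))           # (my hero, opponent hero)
mirror = [p for p in pairings if p[0] == p[1]]       # same hero on both sides
non_mirror = [p for p in pairings if p[0] != p[1]]

# Assumption: both sides choose from the same number of summoner skills.
skill_factor = REPORTED_TOTAL // len(pairings)       # 100
skills_per_side = math.isqrt(skill_factor)           # 10 (inferred)

print(len(pairings), len(mirror), len(non_mirror))   # 400 20 380
print(len(pairings) * skills_per_side ** 2)          # 40000
```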

The results after 98 rounds of testing are shown in the figure below:

When the opponent hero changes, the performance of the same trained policy drops sharply. Because a change of opponent hero makes the test environment different from the training environment, the policies learned by existing methods fail to generalize.

Figure 1 Generalization challenge across opponents

In the second experiment, the agent was again trained only on Diao Chan (RL) versus Diao Chan (BT), and the trained RL model was then used to control other heroes to challenge Diao Chan (BT).

The results after 98 rounds of testing are shown below:

When the hero controlled by the model changes from Diao Chan to another hero, the performance of the same trained policy drops sharply, because the change of target hero makes the meaning of each action different from that of Diao Chan's actions in the training environment.

Figure 2 Cross-target generalization challenge
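The two test protocols described above can be sketched as a small evaluation harness. This is only an illustration of the procedure, not the paper's code: `play_match` is a hypothetical callback that would run one real round, `hero_b` and `hero_c` are placeholder hero names, and `placeholder_match` is a stub outcome rule used purely to make the example runnable. It does not reproduce any results from the paper.

```python
from typing import Callable, Dict, Iterable

# play_match(rl_hero, bt_hero) returns True if the RL side wins one round.
MatchFn = Callable[[str, str], bool]


def win_rate(play_match: MatchFn, rl_hero: str, bt_hero: str, rounds: int = 98) -> float:
    """Play a fixed number of rounds and return the RL side's win rate."""
    wins = sum(1 for _ in range(rounds) if play_match(rl_hero, bt_hero))
    return wins / rounds


def cross_opponent(play_match: MatchFn, trained_hero: str,
                   opponents: Iterable[str], rounds: int = 98) -> Dict[str, float]:
    """Experiment 1: the RL side keeps its training hero; the BT opponent changes."""
    return {opp: win_rate(play_match, trained_hero, opp, rounds) for opp in opponents}


def cross_target(play_match: MatchFn, bt_hero: str,
                 targets: Iterable[str], rounds: int = 98) -> Dict[str, float]:
    """Experiment 2: the BT opponent stays fixed; the RL model controls other heroes."""
    return {tgt: win_rate(play_match, tgt, bt_hero, rounds) for tgt in targets}


def placeholder_match(rl_hero: str, bt_hero: str) -> bool:
    # Stub rule, NOT real game data: a real harness would run the game here.
    return rl_hero == "diaochan" and bt_hero == "diaochan"


heroes = ["diaochan", "hero_b", "hero_c"]  # hero_b / hero_c are placeholders
print(cross_opponent(placeholder_match, "diaochan", heroes))
print(cross_target(placeholder_match, "diaochan", heroes))
```

Keeping the match function as a parameter means the same harness could be pointed at a real environment later without changing the two protocols.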

The reason for this result is simple. Each hero has its own unique mechanics. When an agent trained on a single hero is handed a new one, it does not know how to use it and is left at a loss.

The same goes for human players. Players who can dominate the mid lane may not achieve a good KDA after switching to the jungle.

It is not difficult to see that this actually goes back to the question we raised at the beginning. It is difficult to train "universal" AI in a simple environment. MOBA games with high complexity just provide an environment that is convenient for testing the generalization of the model.

Of course, the game cannot be used directly to train AI, so a specially optimized "training ground" came into being.

Thus, researchers can test and train their own models in environments such as the "StarCraft II Learning Environment" and the "Honor of Kings AI Open Research Environment."

How do domestic researchers access appropriate platform resources?

The development of DeepMind is inseparable from the strong support of Google. MineDojo proposed by Li Feifei's team not only uses the resources of Stanford, a top university, but also has strong support from NVIDIA.

The current domestic artificial intelligence industry is still not solid enough at the infrastructure level, especially for ordinary companies and universities, which are facing a shortage of research and development resources.

In order to allow more researchers to participate, Tencent officially opened the "Honor of Kings AI Open Research Environment" to the public on November 21 this year.

Users only need to register an account on the official website of Enlightenment Platform, submit information and pass the platform review to download it for free.

Website link: https://aiarena.tencent.com/aiarena/zh/open-gamecore

It is worth mentioning that, to better support scholars and algorithm developers in their research, the Enlightenment Platform not only packages the "Honor of Kings AI Open Research Environment" for ease of use, but also provides standard code and a training framework.

Next, let’s have a “shallow” experience on how to start an AI training project on the Enlightenment Platform!

Since we want AI to "play" "Honor of Kings", the first thing we have to do is to make the "intelligent agent" used to control the hero.

Sounds a bit complicated? In the "Honor of Kings AI Open Research Environment", it is actually very simple.

First, start the gamecore server:

    cd gamecore
    gamecore-server.exe server --server-address :23432

Install the hok_env package:

    git clone https://github.com/tencent-ailab/hok_env.git
    cd hok_env/hok_env/
    pip install -e .

And run the test script:

    cd hok_env/hok_env/hok/unit_test/
    python test_env.py

Now, you can import hok and call hok.HoK1v1.load_game to create the environment:

    import hok

    # Both sides play Diao Chan with the same summoner skill (a mirror match).
    env = hok.HoK1v1.load_game(
        runtime_id=0,
        game_log_path="./game_log",
        gamecore_path="~/.hok",
        config_path="config.dat",
        config_dicts=[{"hero": "diaochan", "skill": "rage"} for _ in range(2)],
    )
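The `config_dicts` argument above is a list with one dictionary per side. As a minimal sketch of how mirror and non-mirror sub-tasks could be described, the helper below builds that list from two hero names. `make_config_dicts` and `is_mirror` are hypothetical helpers written for this illustration, not part of hok_env, and `"another_hero"` is a placeholder; only `"diaochan"` and `"rage"` come from the example above.

```python
from typing import Dict, List


def make_config_dicts(hero_a: str, hero_b: str,
                      skill_a: str = "rage", skill_b: str = "rage") -> List[Dict[str, str]]:
    """Build one {"hero", "skill"} dictionary per side, in the shape shown above."""
    return [
        {"hero": hero_a, "skill": skill_a},
        {"hero": hero_b, "skill": skill_b},
    ]


def is_mirror(config_dicts: List[Dict[str, str]]) -> bool:
    """A mirror match is one where both sides pick the same hero."""
    return config_dicts[0]["hero"] == config_dicts[1]["hero"]


mirror_cfg = make_config_dicts("diaochan", "diaochan")
non_mirror_cfg = make_config_dicts("diaochan", "another_hero")  # placeholder name

print(mirror_cfg, is_mirror(mirror_cfg))
print(non_mirror_cfg, is_mirror(non_mirror_cfg))
```

The resulting list could then be passed as `config_dicts` when creating the environment, assuming the hero and skill identifiers are ones the environment actually supports.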

Next, we obtain the first observation by resetting the environment:

    obs, reward, done, infos = env.reset()

obs is a list of NumPy arrays describing each agent's observation of the environment.

reward is a list of floating point scalars describing the immediate reward received from the environment.

done is a Boolean list describing the state of the game.

infos is a tuple of dictionaries whose length is the number of agents.

Then perform operations in the environment until time runs out or the agent is killed.

Here, just use the env.step method.

    done = False
    while not done:
        action = env.get_random_action()
        obs, reward, done, state = env.step(action)
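To try the interaction loop without installing the gamecore, the sketch below uses a small stand-in environment. `MockHoK1v1` is a hypothetical class written for this illustration; it only mimics the `reset` / `step` / `get_random_action` shape described above and is not the real hok_env implementation. Plain Python lists stand in for NumPy arrays, and the episode length and action range are arbitrary assumptions. Because `done` is described as a list of Booleans, the loop here stops when any entry is true.

```python
import random


class MockHoK1v1:
    """Hypothetical stand-in mimicking the reset/step interface described above."""

    def __init__(self, num_agents: int = 2, max_steps: int = 50, seed: int = 0):
        self.num_agents = num_agents
        self.max_steps = max_steps      # arbitrary episode length for the mock
        self.rng = random.Random(seed)
        self.t = 0

    def _outputs(self):
        # One observation, reward, done flag and info dict per agent.
        obs = [[self.rng.random() for _ in range(4)] for _ in range(self.num_agents)]
        reward = [0.0] * self.num_agents
        done = [self.t >= self.max_steps] * self.num_agents
        infos = tuple({"step": self.t} for _ in range(self.num_agents))
        return obs, reward, done, infos

    def reset(self):
        self.t = 0
        return self._outputs()

    def get_random_action(self):
        # One arbitrary discrete action per agent.
        return [self.rng.randrange(3) for _ in range(self.num_agents)]

    def step(self, action):
        assert len(action) == self.num_agents
        self.t += 1
        return self._outputs()


def run_episode(env) -> int:
    """Step with random actions until any agent reports done; return the step count."""
    obs, reward, done, infos = env.reset()
    steps = 0
    while not any(done):
        action = env.get_random_action()
        obs, reward, done, infos = env.step(action)
        steps += 1
    return steps


print(run_episode(MockHoK1v1(max_steps=50)))  # 50
```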

As with the "StarCraft II Learning Environment", you can also use visualization tools to view replays of the agent in the "Honor of Kings AI Open Research Environment".

At this point, your first agent has been created.

Next, you can put "her/him" through all kinds of training!

By now it is probably easy to see that the "Honor of Kings AI Open Research Environment" is more than just an environment for training AI: it makes the entire process simple and easy to understand through familiar operations and rich documentation.

This will allow more people who are interested in entering the AI field to get started easily.

Game AI, what other possibilities are there?

Seeing this, there is actually a question that remains unanswered - as a research platform led by enterprises, why does Tencent Enlightenment Platform choose to open it up on a large scale?

In August this year, the Chengdu Artificial Intelligence Industry Ecological Alliance and the think tank Yuqian Consultants jointly released the country’s first game AI report. It is not difficult to see from the report that games are one of the key points in promoting the development of artificial intelligence. Specifically, games can improve the application of AI in three aspects.

First of all, the game is an excellent training and testing ground for AI.

- Rapid iteration: A game can be interacted with and explored through trial and error at will, without any real-world cost. At the same time, it has an obvious reward mechanism, which can fully demonstrate the effectiveness of an algorithm.

- Rich tasks: There are many types of games with various difficulties and complexities. Artificial intelligence must adopt complex strategies to deal with them. Conquering different types of games reflects the improvement of algorithm level.

- Clear success or failure criteria: Calibrate the ability of artificial intelligence through game scores to facilitate further optimization of artificial intelligence.

Secondly, games can train different abilities of AI and lead to different applications.

For example, chess games train AI in sequential decision-making and long-term deduction; card games train AI to adapt dynamically; real-time strategy games train AI in machine memory, long-term planning, multi-agent collaboration, and action coherence.

In addition, the game can also break environmental constraints and promote intelligent decision-making.

For example, games can promote virtual simulation real-time rendering and virtual simulation information synchronization, and upgrade virtual simulation interactive terminals.

The Enlightenment Platform relies on the strengths of Tencent AI Lab and Honor of Kings in algorithms, computing power, and complex scenarios. Once opened, it can build a bridge for effective cooperation between games and AI development, linking university discipline construction, competition organization, and industry talent incubation. When the talent pool is sufficient, scientific research progress and commercial applications will spring up like mushrooms after rain.

In the past two years, the Enlightenment Platform ("Kaiwu") has taken many steps across industry, academia and research: it held the "Kaiwu Multi-Agent Reinforcement Learning Competition", which attracted teams from a group of top universities, including the "TOP2", Tsinghua University and Peking University; it formed a university science and education consortium; and the School of Information Science and Technology of Peking University launched a popular elective course, "Algorithms in Game AI", whose homework was to conduct experiments in the Honor of Kings 1v1 environment...

Looking forward to the future, we can expect that these talents who have gone global with the help of the "Enlightenment" platform will radiate into various fields of the AI industry and realize the full bloom of the platform's upstream and downstream ecology.

The above is the detailed content of What is the use of letting AI learn to beat the king?. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

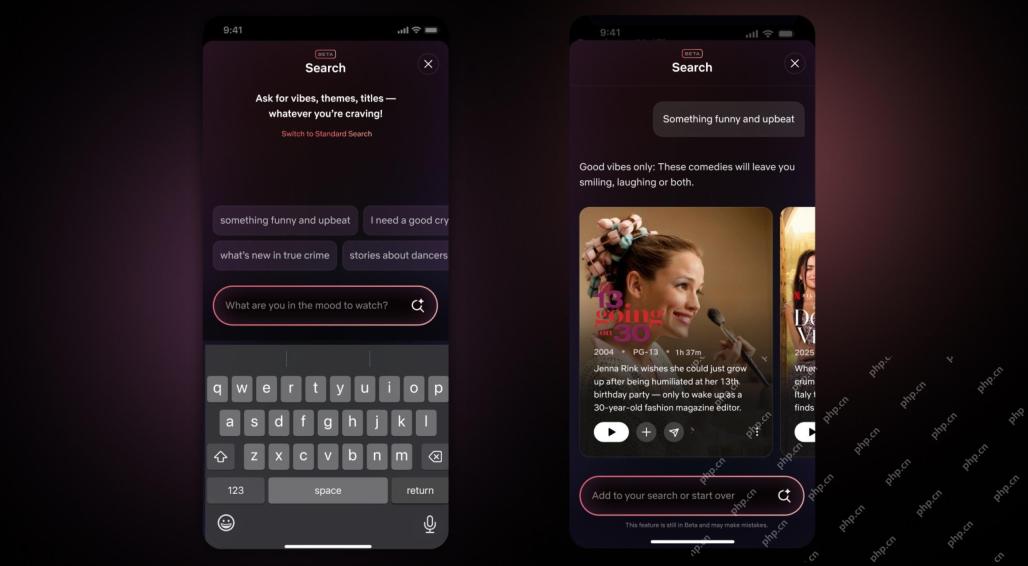

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Dreamweaver Mac version

Visual web development tools

Dreamweaver CS6

Visual web development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.