
Meta's second-generation digital humans are here! Say goodbye to VR headsets, just scan with an iPhone

- 王林

- 2023-04-09 14:21:06

Meta's realistic digital human 2.0 has evolved again: it can now be generated with an iPhone. Just take out your phone and scan your face!

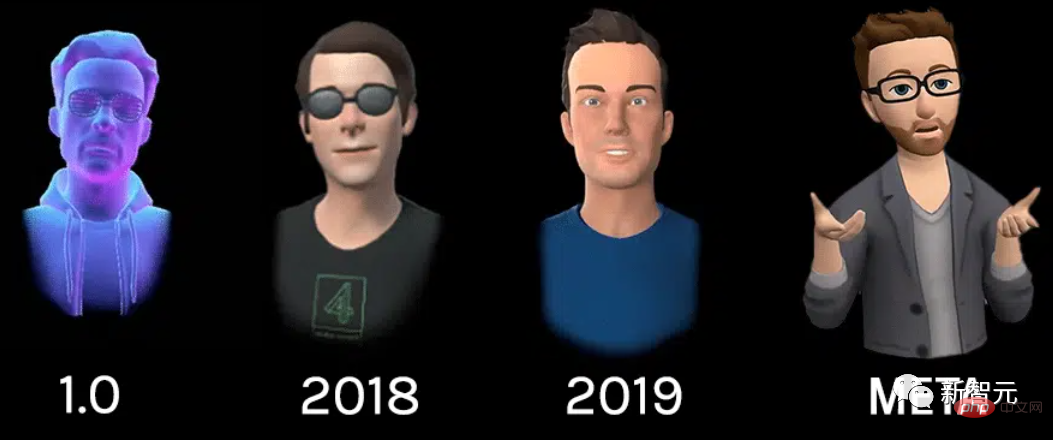

As early as March 2019, Facebook demonstrated its first-generation digital human (Codec Avatar 1.0) at an event. First-generation avatars were generated by multiple neural networks from data captured on a dedicated rig with 132 cameras.

Once generated, the avatar is driven by five cameras on the VR headset: two internal views of the eyes and three external views of the lower face.

Since then, Facebook has continued to improve the realism of these avatars; for example, a more realistic image can be obtained with only a microphone and eye-tracking technology. This work culminated in Codec Avatar 2.0 in August 2020. The biggest improvement of version 2.0 over version 1.0 is that the cameras no longer need to scan and track the whole face; they only need to track eye movements.

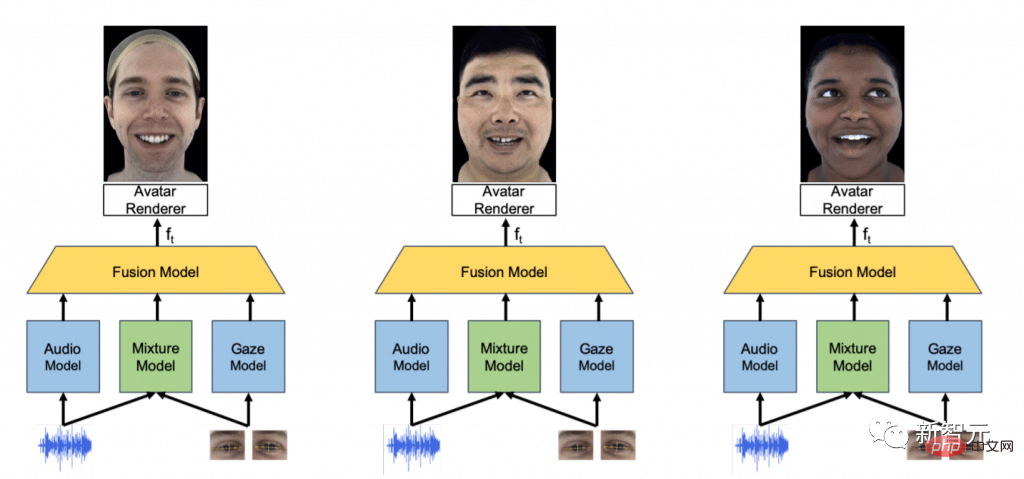

A new neural network fuses eye-tracking data from the VR headset with the audio feed from the microphone to infer the wearer's likely facial expressions. The outputs of the audio model and the eye-movement model are fed into the fusion model, and once the fusion model has processed them, the renderer outputs the avatar image.
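The data flow described above can be sketched in a few lines of Python. This is only an illustrative toy, not Meta's model: the layer sizes, the random untrained weights, and the names `gaze_encoder`, `audio_encoder`, `fusion_layer`, and `infer_expression` are all assumptions made for the example. It shows two per-modality encoders whose features are concatenated and passed through a fusion layer to produce an expression code that a renderer could consume.

```python
import math
import random

def linear(weights, vec):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]

def random_weights(rows, cols, rng):
    """Untrained placeholder weights; a real system would learn these."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
# Hypothetical feature sizes, chosen only for illustration.
GAZE_DIM, AUDIO_DIM, HIDDEN, EXPR_DIM = 4, 6, 5, 3

gaze_encoder = random_weights(HIDDEN, GAZE_DIM, rng)      # eye-movement model
audio_encoder = random_weights(HIDDEN, AUDIO_DIM, rng)    # audio model
fusion_layer = random_weights(EXPR_DIM, 2 * HIDDEN, rng)  # fusion model

def infer_expression(gaze, audio):
    """Fuse eye-tracking and audio features into one expression code."""
    gaze_feat = [math.tanh(v) for v in linear(gaze_encoder, gaze)]
    audio_feat = [math.tanh(v) for v in linear(audio_encoder, audio)]
    fused = gaze_feat + audio_feat  # concatenate the two modalities
    return [math.tanh(v) for v in linear(fusion_layer, fused)]

gaze_sample = [0.1, -0.2, 0.05, 0.3]
audio_sample = [0.4, 0.0, -0.1, 0.2, 0.6, -0.3]
expression_code = infer_expression(gaze_sample, audio_sample)
print(expression_code)  # this code would be handed to the renderer
```

Concatenation is the simplest possible fusion strategy; it is used here only because it makes the two input streams easy to see.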

In May of this year, the team further announced that version 2.0 of the avatar had achieved a "completely realistic" effect. "I would say one of the big challenges over the next decade is whether we can achieve remote Avatar interactions that are indistinguishable from face-to-face interactions," said Sheikh, one of the project leaders.

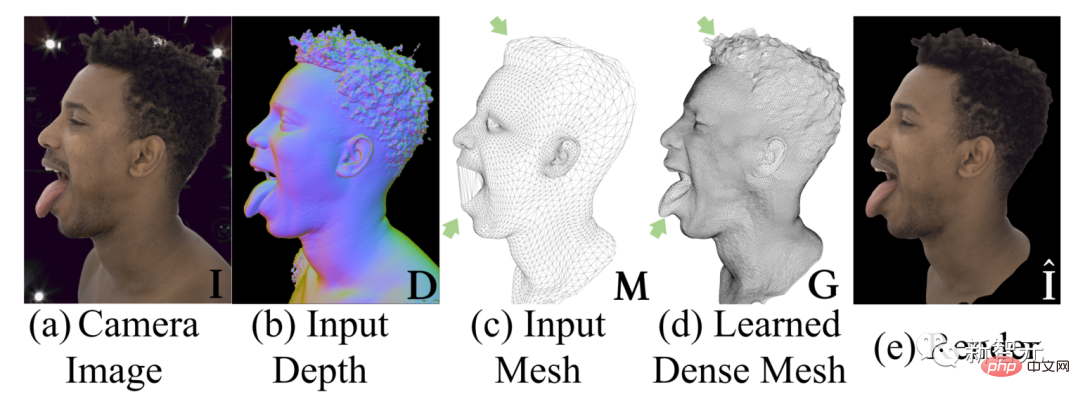

In the comparison above, (a) is a real photo and (e) is the final rendered virtual human. It does not look like much of an exaggeration, does it? Not so fast: the above is a comparison from the experiments. In real applications, Meta's virtual humans currently look like this.

Even the "most realistic" image on the far right is still a cartoon, which is a long way from reality. "Completely lifelike" is probably still some way off, but Meta is talking about a ten-year horizon, and judging from the images in the demo, there is good reason to hope the goal can be reached.

Moreover, progress in virtual human technology is not limited to realism. There is no need to keep following a single path, and Meta is exploring other directions too. For example, how about taking off the VR headset?

In the past, generating an individual Codec Avatar required a special capture device called "MUGSY", which has 171 high-resolution cameras. It is the rig shown in the picture (trypophobes, look away).

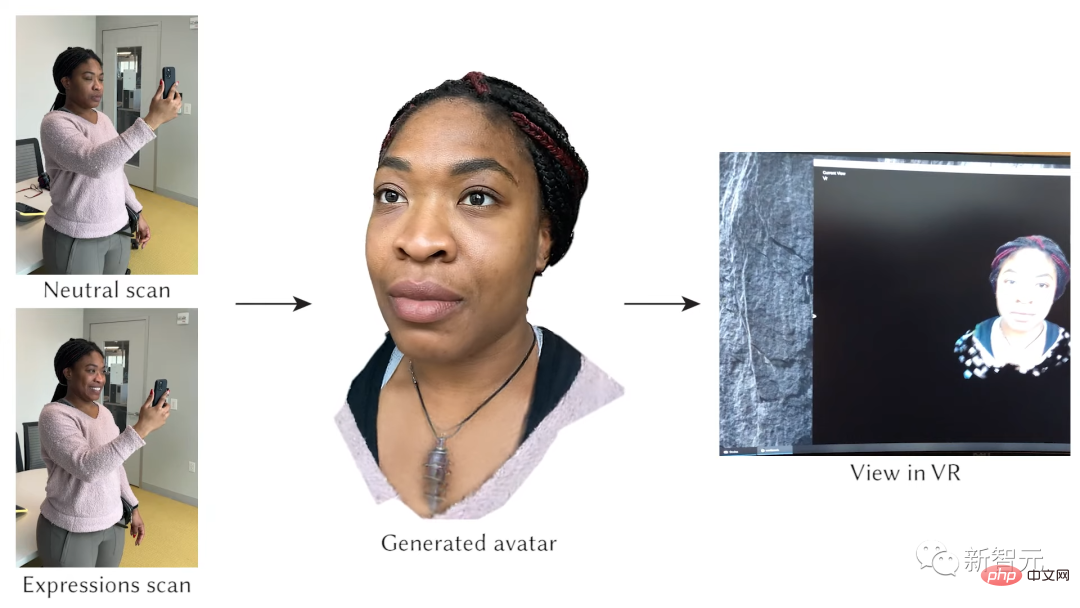

Meta now says you no longer need that rig: an iPhone is enough! Any smartphone with a front-facing depth sensor (such as an iPhone with Face ID) can scan the face directly (dozens of scans, to be exact) to generate a realistic virtual human avatar.

First, keep your face neutral and scan it once; then make a series of expressions. Up to 65 different expressions are supported.

Meta said that a facial expression scan with a phone takes about 3-4 minutes on average. Of course, this also requires computing power: generating the final realistic avatar takes about 6 hours on a machine with four high-end GPUs. If the technology ships in a product, this computation would be handed off to cloud GPUs rather than using the user's own computing resources.

So why can something that previously required more than 100 cameras now be done with a single phone? The secret is a universal model called a hypernetwork: a neural network that generates the weights of another neural network. In this case, it generates the Codec Avatar for a specific person.
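To make the hypernetwork idea concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not Meta's architecture: the `HyperNetwork` class, the identity codes, the tiny layer sizes, and the untrained random projection are all hypothetical. The point it illustrates is only the mechanism: one network takes a per-person code and emits the weights of a second, person-specific network.

```python
import math
import random

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

class HyperNetwork:
    """Maps an identity code to the weights of a small target network."""

    def __init__(self, code_dim, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        # One linear map that emits every weight and bias of the target layer.
        n_params = in_dim * out_dim + out_dim
        self.proj = [[rng.gauss(0.0, 0.5) for _ in range(code_dim)]
                     for _ in range(n_params)]

    def generate(self, identity_code):
        flat = matvec(self.proj, identity_code)
        split = self.in_dim * self.out_dim
        # Reshape the first part into out_dim rows of in_dim weights each.
        weights = [flat[i * self.in_dim:(i + 1) * self.in_dim]
                   for i in range(self.out_dim)]
        biases = flat[split:]
        return weights, biases

def target_forward(weights, biases, x):
    """The generated, person-specific network: one tanh layer."""
    return [math.tanh(v + b) for v, b in zip(matvec(weights, x), biases)]

hyper = HyperNetwork(code_dim=4, in_dim=3, out_dim=2)
person_a = [1.0, 0.0, 0.5, -0.5]  # hypothetical identity codes,
person_b = [0.0, 1.0, -0.5, 0.5]  # e.g. derived from a phone scan
expression = [0.2, -0.1, 0.7]     # shared input to both avatars

w_a, b_a = hyper.generate(person_a)
w_b, b_b = hyper.generate(person_b)
out_a = target_forward(w_a, b_a, expression)
out_b = target_forward(w_b, b_b, expression)
print(out_a, out_b)  # same input, different person-specific outputs
```

The design choice worth noting is that only the hypernetwork would need training on many faces; a new person then needs just an identity code rather than a network trained from scratch.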

The researchers trained the model on scans of 255 different faces, captured with an advanced rig much like MUGSY but with only 90 cameras.

Although other researchers have demonstrated avatars generated from smartphone scans, Meta states that its results are state of the art (SOTA).

However, the current system still cannot cope with glasses or long hair, and it covers only the head, not the rest of the body.

Of course, Meta's current avatars are still a long way from this level of fidelity. They have a cartoon style, and their realism has actually decreased over time. The current look may be better suited to people playing Horizon Worlds on Quest 2.

However, Codec Avatar may end up being a separate option rather than an upgrade of the current cartoon style. Meta CEO Zuckerberg described the future like this: you might use an expressionistic avatar for ordinary games and a more realistic one for work meetings.

In April of this year, Yaser Sheikh, who leads the Codec Avatar team, said, "It is impossible to predict how long it will take for Codec Avatar to be put into use." However, he made it clear that he believes the project has made great progress.

