Scientists and engineers are constantly developing new materials with special properties that could be used in 3D printing, but figuring out how to print with them can be a challenging and costly task.

To find the parameters that consistently produce the best print quality for a new material, expert operators often have to run manual trial-and-error experiments, sometimes producing thousands of prints. Print speed and the amount of material the printer deposits are among the variables they must tune.

Now, MIT researchers are using AI to simplify this process. They developed a machine-learning system that uses computer vision to monitor the manufacturing process and correct processing errors in real time.

After training a neural network in simulation to learn how to adjust printing parameters to reduce errors, they deployed the controller on a real 3D printer.

This approach avoids having to print thousands or millions of real objects to train the neural network. It could also make it easier for engineers to incorporate novel materials into their designs, allowing them to create products with special chemical or electrical properties. And it could help technicians adjust the printing process quickly if conditions or the material being printed change unexpectedly.

Choosing the best parameters for a digital manufacturing process can be one of its most expensive steps because of the trial and error involved. Moreover, once a technician finds a combination that works well, those parameters are optimal only for that specific situation, because little is known about how the material behaves in other environments, on other equipment, or whether a new batch has different properties.

Using a machine-learning system brings its own challenges. First, the researchers needed to measure what was happening on the printer in real time.

To do this, they built a machine-vision system with two cameras aimed at the printer's nozzle. The system shines light on the material as it is deposited and calculates its thickness from how much light passes through.
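The article does not say how transmitted light is converted into thickness. As a minimal sketch, assuming the material attenuates light according to the Beer-Lambert law (I = I0 · exp(-alpha · t)), thickness can be recovered as t = ln(I0 / I) / alpha. The attenuation coefficient `alpha` and the helper names `estimate_thickness` and `thickness_profile` are hypothetical and are not taken from the researchers' system.

```python
import math
from typing import List


def estimate_thickness(i_transmitted: float, i_incident: float, alpha: float) -> float:
    """Estimate layer thickness from transmitted light.

    ASSUMPTION: Beer-Lambert attenuation, I = I0 * exp(-alpha * t), so
    t = ln(I0 / I) / alpha. `alpha` is a hypothetical per-material attenuation
    coefficient (1/mm) that would have to be calibrated; it is not from the source.
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    if i_incident <= 0 or i_transmitted <= 0:
        raise ValueError("intensities must be positive")
    if i_transmitted > i_incident:
        raise ValueError("transmitted intensity cannot exceed incident intensity")
    return math.log(i_incident / i_transmitted) / alpha


def thickness_profile(row: List[float], i_incident: float, alpha: float) -> List[float]:
    """Convert one row of camera pixel intensities into a thickness profile."""
    return [estimate_thickness(i, i_incident, alpha) for i in row]


if __name__ == "__main__":
    # Hypothetical pixel row: bright where there is no material, darker where it is thicker.
    row = [200.0, 120.0, 60.0, 200.0]
    print([round(t, 3) for t in thickness_profile(row, i_incident=200.0, alpha=2.0)])
```

With two cameras, a real system would also have to register the views and calibrate `alpha` per material; this sketch covers only the intensity-to-thickness step.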

Training a neural-network-based controller to understand this manufacturing process on a real printer would be data-intensive, requiring millions of prints, so the researchers trained it in simulation instead.

The controller is trained using reinforcement learning, which teaches the model through trial and error guided by a reward. In the virtual environment, the model must choose printing parameters that produce a specific object. After being shown the expected result, the model is rewarded when the parameters it selects minimize the difference between its printed result and that expected result.

In this case, an "error" means the model either dispensed too much material, filling spaces that should have stayed empty, or too little, leaving gaps that should have been filled.
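The article does not give the exact reward the researchers used. A minimal sketch, assuming the print and the target are both represented as grids of material heights, splits the error into the two cases described above (excess material and missing material) and turns them into a negative, weighted reward. The grid representation, the weights, and the names `deposition_errors` and `reward` are all hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]


def deposition_errors(printed: Grid, target: Grid) -> Tuple[float, float]:
    """Return (excess, deficit) summed over a grid of material heights.

    excess:  material placed above the target, e.g. in space that should stay empty.
    deficit: material missing below the target, e.g. gaps that should be filled.
    """
    if len(printed) != len(target):
        raise ValueError("grids must have the same number of rows")
    excess = 0.0
    deficit = 0.0
    for p_row, t_row in zip(printed, target):
        if len(p_row) != len(t_row):
            raise ValueError("grids must have the same shape")
        for p, t in zip(p_row, t_row):
            diff = p - t
            if diff > 0:
                excess += diff   # over-deposition
            else:
                deficit -= diff  # under-deposition (diff <= 0, so this adds |diff|)
    return excess, deficit


def reward(printed: Grid, target: Grid,
           w_excess: float = 1.0, w_deficit: float = 1.0) -> float:
    """Hypothetical reward: 0 for a perfect print, more negative as errors grow."""
    excess, deficit = deposition_errors(printed, target)
    return -(w_excess * excess + w_deficit * deficit)
```

Keeping excess and deficit separate lets a designer weight one failure mode more heavily than the other, for example penalizing overfill harder if it tends to make parts bulge.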

However, the real world is messier than a simulation. In practice, conditions often change because of small variations or noise in the printing process. The researchers therefore modeled this noise and added it to the simulation, which produced more realistic results.
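The article does not describe the researchers' noise model. As a minimal sketch of the general idea, assuming a toy one-dimensional simulator in which the nominal deposit per cell is `flow_rate / speed`, each cell can be perturbed by an independent multiplicative Gaussian factor so that no two simulated prints are identical. The deposition formula, the noise form, and the name `simulate_line` are hypothetical.

```python
import random
from typing import List, Optional


def simulate_line(flow_rate: float, speed: float, n_cells: int,
                  noise_std: float = 0.05,
                  rng: Optional[random.Random] = None) -> List[float]:
    """Toy 1D simulator of material deposited along a straight printing path.

    ASSUMPTIONS (hypothetical, not the researchers' model):
    - nominal deposit per cell is flow_rate / speed;
    - printer noise is a multiplicative Gaussian factor, drawn independently per cell.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    if rng is None:
        rng = random.Random()
    nominal = flow_rate / speed
    deposits = []
    for _ in range(n_cells):
        factor = 1.0 + rng.gauss(0.0, noise_std)     # perturb each cell independently
        deposits.append(max(0.0, nominal * factor))  # material height cannot be negative
    return deposits


if __name__ == "__main__":
    clean = simulate_line(2.0, 4.0, 5, noise_std=0.0)
    # A seeded generator makes noisy training episodes reproducible.
    noisy = simulate_line(2.0, 4.0, 5, noise_std=0.1, rng=random.Random(0))
    print(clean)
    print([round(d, 3) for d in noisy])
```

During training, drawing fresh noise for each episode would keep a controller from relying on a perfectly repeatable printer, which is the point of adding noise to the simulation.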

When the controller was tested, it printed objects more accurately than any other control method the researchers evaluated. It performed especially well when printing infill, the material that fills the inside of an object. The researchers' controller adjusted the printing path so the object stayed level, while some other controllers deposited so much material that the printed object bulged upward.

The control policy can even learn how the material spreads after it is deposited and adjust the parameters accordingly.

Having demonstrated the effectiveness of this approach for 3D printing, the researchers want to develop controllers for other manufacturing processes. They also plan to study how to adapt the approach to situations with multiple layers of material or several materials printed at the same time. In addition, their method currently assumes that each material has a constant viscosity; future versions could use AI to identify and estimate viscosity in real time.

The above is the detailed content of How to use AI to control digital manufacturing?. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

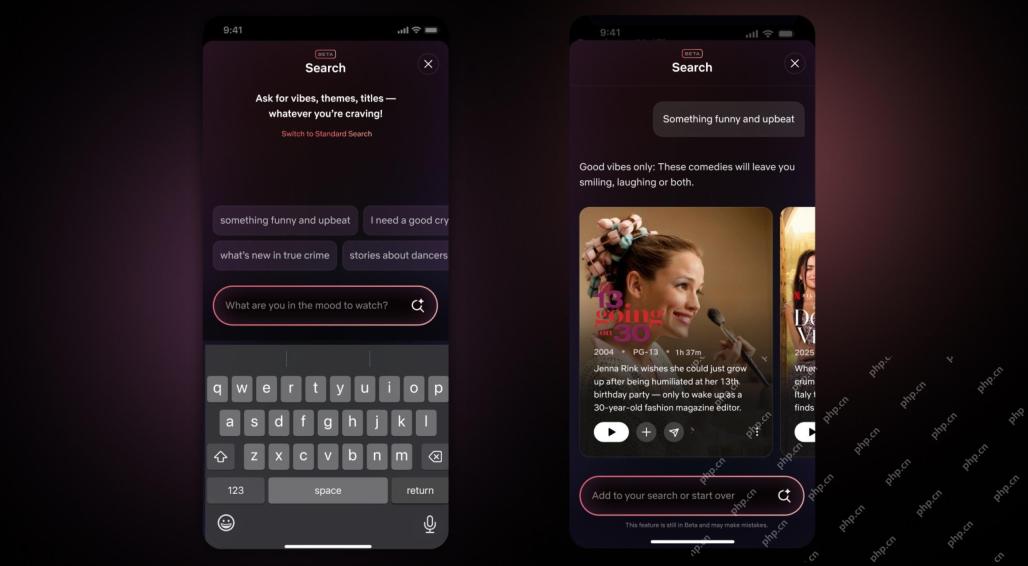

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 English version

Recommended: Win version, supports code prompts!

Zend Studio 13.0.1

Powerful PHP integrated development environment