
Harness the power of the universe to crunch data! "Physical networks" far outperform deep neural networks

- Forwarded by PHPz

- 2023-04-09 09:01:11

This article is reproduced from Lei Feng.com. If you need to reprint, please go to the official website of Lei Feng.com to apply for authorization.

In a soundproof crate lies one of the world's worst neural networks. After seeing an image of the number 6, the neural network pauses for a moment and then displays the number it recognizes: 0.

Peter McMahon, a physicist and engineer at Cornell University who led the development of the device, said with a sheepish smile that the handwritten digit looked sloppy. Logan Wright, a postdoc visiting McMahon's lab from NTT Research, said the device usually gives the right answer, though he acknowledged that mistakes are common. Despite its mediocre performance, this neural network is groundbreaking research. The researchers opened the crate, revealing not a computer chip but a microphone angled toward a titanium plate attached to a speaker.

Unlike neural networks that operate in the digital world of 0s and 1s, this device runs on sound. When it is given an image of a digit, the image's pixels are converted into audio, and a speaker vibrates the titanium plate, filling the lab with a faint chirping. In other words, it is the metal's reverberation that does the "reading," not software running on a silicon chip.

The device's success surprised even its designers. "Whatever the role of the vibrating metal is, it shouldn't have anything to do with classifying handwritten digits," McMahon said. In January of this year, the Cornell team published a paper in Nature titled "Deep physical neural networks trained with backpropagation."

The paper's description of the device's rudimentary reading ability gives McMahon and others hope that, with many improvements, such devices could bring revolutionary changes to computing.

Paper link: https://www.nature.com/articles/s41586-021-04223-6

In conventional machine learning, computer scientists have found that the bigger the neural network, the better. The reasons are explained in the article "Computer Scientists Prove Why Bigger Neural Networks Do Better," linked below, which proves that if you want a network to reliably memorize its training data, overparameterization is not merely effective but required.

Article address: https://www.quantamagazine.org/computer-scientists-prove-why-bigger-neural-networks-do-better-20220210/

Filling a neural network with more artificial neurons (nodes that store values) can improve its ability to tell dachshunds from Dalmatians, and to succeed at countless other pattern-recognition tasks.

Truly huge neural networks can write essays (such as OpenAI's GPT-3), draw illustrations (such as OpenAI's DALL·E and DALL·E 2 and Google's Imagen), and carry out even harder tasks that are both thought-provoking and unsettling. More computing power makes still greater feats possible, and that prospect drives efforts to develop more powerful and efficient ways of computing. McMahon and a group of like-minded physicists are pursuing an unconventional approach: let the universe crunch the data for us.

McMahon said: "Many physical systems are naturally capable of doing certain calculations more efficiently or faster than computers." He pointed to wind tunnels as an example: when engineers design an airplane, they might digitize the blueprints and then spend hours on a supercomputer simulating the airflow around the wings. Alternatively, they could put the aircraft in a wind tunnel and see whether it flies. From a computational perspective, the wind tunnel instantly "calculates" how the wings interact with the air.

Caption: Cornell University team members Peter McMahon and Tatsuhiro Onodera program various physical systems to perform learning tasks.

Photo source: Dave Burbank

But a wind tunnel is a single-purpose machine: it simulates aerodynamics and nothing else.

Researchers like McMahon are working toward a device that can learn to do anything: a system that adjusts its behavior through trial and error to acquire any new ability, such as classifying handwritten digits or distinguishing one vowel from another.

Recent research shows that physical systems such as light waves, networks of superconductors and branching streams of electrons can all learn. "We're not just reinventing the hardware, we're reinventing the entire computing paradigm," says Benjamin Scellier, a mathematician at ETH Zurich in Switzerland who helped design a new physical learning algorithm.

1 Learning and Thinking

Learning is a highly unusual process. Ten years ago, the brain was essentially the only system that could do it well. The brain's structure partly inspired computer scientists to design deep neural networks, currently the most popular artificial learning model. A deep neural network is a computer program that learns through practice.

A deep neural network can be thought of as a grid: layers of nodes that store values, called neurons, with each neuron connected by lines to the neurons in adjacent layers. These lines are called "synapses." Initially, the synapses carry just random numbers called "weights." Suppose you want the network to read a handwritten 4. You can have the first layer of neurons represent the raw image of the 4 by storing the shade of each pixel as a value in the corresponding neuron.

The network then "thinks," moving layer by layer: each neuron in the next layer is filled with the values of the previous layer multiplied by the weights of the synapses that connect them. The neuron with the largest value in the final layer is the network's answer; if it is the second neuron, for example, the network guesses that it saw a 2. To teach the network to make smarter guesses, the learning algorithm works in reverse. After each attempt, it calculates the difference between the guess and the correct answer (which, in our example, would be represented by a high value in the fourth neuron of the final layer and low values elsewhere).
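The "thinking" pass described above can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions, not any particular library's implementation: it follows the article's simplified picture (values multiplied by weights, layer by layer, with no biases or activation functions), and the layer sizes, weights and pixel values are made up.

```python
def forward(pixels, layers):
    """Propagate neuron values through each weight matrix in turn."""
    values = pixels
    for weights in layers:
        # Each next-layer neuron sums (previous value * synaptic weight).
        values = [sum(v * w for v, w in zip(values, row)) for row in weights]
    return values


def guess(pixels, layers):
    """The network's answer is the index of the largest final-layer neuron."""
    out = forward(pixels, layers)
    return max(range(len(out)), key=lambda i: out[i])


# Hypothetical weights: each row holds one neuron's incoming synapses.
hidden = [[0.2, -0.1, 0.4, 0.0],
          [0.5, 0.3, -0.2, 0.1],
          [-0.3, 0.8, 0.1, 0.2]]
output = [[0.1, 0.4, -0.5],
          [0.6, -0.1, 0.3],
          [-0.2, 0.2, 0.9]]

pixels = [1.0, 0.0, 0.5, 1.0]  # shade of each pixel, 0 = white, 1 = black
print(forward(pixels, [hidden, output]))
print(guess(pixels, [hidden, output]))
```

Practical networks also insert a nonlinear activation between layers; without one, a stack of layers collapses into a single matrix multiplication.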

The algorithm then works its way back through the network, layer by layer, calculating how to adjust the weights so that each final neuron's value rises or falls as needed. This process is called backpropagation and is the core of deep learning. Through many rounds of guessing and adjusting, backpropagation guides the weights toward a set of numbers that, through a cascade of multiplications triggered by an image, produces the correct answer.
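A minimal backpropagation sketch in plain Python, assuming a tiny network with one tanh hidden layer, a linear output layer and a squared-error loss (choices made for illustration; the article does not specify them). `backprop` computes the output error first and then sends it back one layer through the weights, and `train_step` nudges every weight a small step against its gradient.

```python
import math
import random


def forward(x, w1, w2):
    """Hidden activations (tanh) and linear outputs for one input vector."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    y = [sum(w * hj for w, hj in zip(row, h)) for row in w2]
    return h, y


def loss(x, target, w1, w2):
    """Half the squared distance between the guess and the correct answer."""
    _, y = forward(x, w1, w2)
    return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))


def backprop(x, target, w1, w2):
    """Gradients of the loss, computed from the output layer backwards."""
    h, y = forward(x, w1, w2)
    dy = [yk - tk for yk, tk in zip(y, target)]      # output error
    g2 = [[d * hj for hj in h] for d in dy]          # dL/dw2
    # Pass the error back through w2, then through tanh' = 1 - tanh^2.
    dh = [sum(dy[k] * w2[k][j] for k in range(len(dy))) * (1 - h[j] ** 2)
          for j in range(len(h))]
    g1 = [[d * xi for xi in x] for d in dh]          # dL/dw1
    return g1, g2


def train_step(x, target, w1, w2, lr=0.1):
    """Move every weight a small step against its gradient (in place)."""
    g1, g2 = backprop(x, target, w1, w2)
    for weights, grads in ((w1, g1), (w2, g2)):
        for row, grow in zip(weights, grads):
            for j in range(len(row)):
                row[j] -= lr * grow[j]


random.seed(0)
w1 = [[random.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(3)]  # 4 -> 3
w2 = [[random.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(3)]  # 3 -> 3
x = [1.0, 0.0, 0.5, 1.0]
target = [0.0, 0.0, 1.0]  # correct answer: high value in the last output neuron
before = loss(x, target, w1, w2)
for _ in range(200):
    train_step(x, target, w1, w2)
after = loss(x, target, w1, w2)
print(f"loss before: {before:.4f}, after: {after:.6f}")
```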

Source: Merrill Sherman, Quanta Magazine

But compared with the way brains think, digital learning in artificial neural networks looks very inefficient. On fewer than 2,000 calories a day, a human child learns to talk, read, play games and much more within a few years. On the same limited energy budget, the GPT-3 neural network, which can hold a fluent conversation, might need a thousand years to learn to chat.
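The energy comparison is easier to picture in watts. A quick conversion, assuming the article's "calories" are dietary kilocalories (4,184 joules each) and that the budget is spread evenly over 24 hours:

```python
KCAL_TO_JOULES = 4184            # one dietary Calorie (kilocalorie)
SECONDS_PER_DAY = 24 * 60 * 60


def average_power_watts(kcal_per_day):
    """Average power (joules per second) of a daily energy budget."""
    return kcal_per_day * KCAL_TO_JOULES / SECONDS_PER_DAY


print(f"{average_power_watts(2000):.1f} W")
```

That is just under 100 watts for the child's whole body, not only the brain.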

From a physicist's perspective, a large digital neural network is simply trying to do too much math. Today's largest neural networks must store and manipulate more than 500 billion numbers. This staggering figure comes from Google's post "Pathways Language Model (PaLM): Scaling to 540 Billion Parameters for Breakthrough Performance":

Link: https://ai.googleblog.com/2022/04/pathways-language-model-palm-scaling-to.html

Meanwhile, the universe constantly pulls off tasks far beyond the meager limits of digital computers. A room may hold trillions of air molecules bouncing around.

That is far more moving objects than a computer could track in a full collision simulation, yet the air itself effortlessly determines its own behavior from moment to moment. The challenge now is to build physical systems that can naturally carry out both processes artificial intelligence requires: the "thinking" involved in classifying an image, and the "learning" needed to classify such images correctly.

A system that masters both tasks would be truly harnessing the mathematical power of the universe, rather than just doing math. "We never calculate anything like 3.532 times 1.567," Scellier said. "The system computes, but implicitly, by following the laws of physics."

2 Thinking

McMahon and his colleagues have made progress on the "thinking" half of the puzzle. In the months before the COVID-19 pandemic, McMahon was setting up his lab at Cornell and mulling over a curious finding. Over the years, the best-performing image-recognition neural networks had grown ever deeper; that is, networks with more layers were better at taking in a batch of pixels and assigning a label, such as "poodle."

This trend inspired mathematicians to study the transformation that neural networks carry out (from pixels to "poodle"). In 2017, several groups, including the authors of the paper "Reversible Architectures for Arbitrarily Deep Residual Neural Networks," proposed that a neural network's behavior is an approximate version of a smooth mathematical function.

Paper address: https://arxiv.org/abs/1709.03698

In mathematics, a function converts an input (often an x value) into an output (the y value, or height of the curve, at that position). In certain types of neural networks, more layers work better because the function becomes less jagged and closer to some ideal curve. The research got McMahon thinking.
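One common way to make the "smooth function" idea concrete, and the view taken in the residual-network paper cited above, is to treat each residual layer as one small step x ← x + step·f(x) of a continuous flow. The sketch below uses a made-up flow, dx/dt = -x, whose exact value after one unit of time is e^-1; as the number of layers grows, the stack of steps lands closer to that smooth curve.

```python
import math


def residual_net(x0, num_layers, f, total_time=1.0):
    """Apply num_layers residual updates x <- x + step * f(x)."""
    step = total_time / num_layers  # deeper network = smaller step per layer
    x = x0
    for _ in range(num_layers):
        x = x + step * f(x)
    return x


def decay(x):
    """Hypothetical layer function: the flow dx/dt = -x."""
    return -x


exact = math.exp(-1.0)  # the smooth solution at t = 1, starting from x = 1
for n in (2, 10, 100, 1000):
    approx = residual_net(1.0, n, decay)
    print(f"{n:5d} layers: output {approx:.6f}, error {abs(approx - exact):.2e}")
```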

Perhaps a smoothly evolving physical system could sidestep the blockiness inherent in the digital approach. The trick was to find a way to tame a complex system, adjusting its behavior through training. McMahon and his collaborators chose a titanium plate for such a system because its many modes of vibration mix incoming sound in complicated ways.

To make the plate work like a neural network, they fed in one sound encoding the input image (such as a handwritten 6) and another sound representing the synaptic weights. The peaks and troughs of the sounds must hit the titanium plate at the right times for the device to combine them and produce an answer: for example, a new sound that is loudest in the sixth millisecond represents a classification of "6."
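The readout rule, in which the loudest moment of the output sound names the digit, can be mimicked with a toy stand-in. Everything physical here is a hypothetical placeholder: the "plate" is just a squaring step (so the input and weight sounds interact nonlinearly) followed by a short echo filter, and 100 samples are split into ten time windows, one per digit. It shows how the weight sound can steer which window ends up loudest, not how the Cornell team's plate actually behaves.

```python
def plate_response(input_sound, weight_sound, impulse=(1.0, 0.5, 0.25)):
    """Toy stand-in for the plate: nonlinear mixing followed by ringing."""
    # Nonlinear mixing: the two sounds interact (here, by squaring their sum).
    mixed = [(a + b) ** 2 for a, b in zip(input_sound, weight_sound)]
    out = []
    for t in range(len(mixed)):
        # Each output sample is the current mixed sample plus fading echoes.
        out.append(sum(c * mixed[t - k] for k, c in enumerate(impulse) if t - k >= 0))
    return out


def classify(output, num_classes=10):
    """Split the output into equal time windows; the loudest window is the answer."""
    size = len(output) // num_classes
    energy = [sum(v * v for v in output[i * size:(i + 1) * size])
              for i in range(num_classes)]
    return max(range(num_classes), key=lambda i: energy[i])


silence = [0.0] * 100
weights = [1.0 if 30 <= t < 40 else 0.0 for t in range(100)]
print(classify(plate_response(silence, weights)))
```

In a trained system, the weight sound would be tuned so that each digit's input sound, mixed with it, pushes energy into the matching window.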

Caption: The Cornell team trained three different physical systems to "read" handwritten digits: from left to right, a vibrating titanium plate, a crystal and an electronic circuit. Source: the left and middle photos by Rob Kurcoba of Cornell University; the right photo by Charlie Wood of Quanta Magazine.

The team also implemented their scheme in an optical system, in which the input image and the weights are encoded in two light beams mixed together by a crystal, and in an electronic circuit that transforms its inputs in a similar way.

In principle, any system with sufficiently Byzantine behavior could do the job, but the researchers believe optical systems hold special promise. Not only do crystals mix light extremely quickly, but light also carries rich data about the world. McMahon imagines that miniature versions of his optical neural networks could one day serve as the eyes of self-driving cars, recognizing stop signs and pedestrians before feeding that information to the car's computer chips, much as our retinas perform some basic visual processing on incoming light.

The Achilles' heel of these systems, however, is that training them requires a return to the digital world. Backpropagation involves running a neural network in reverse, but plates and crystals cannot easily unmix sound and light. So the team built a digital model of each physical system. By running these models in reverse on a laptop, they could use the backpropagation algorithm to calculate how to adjust the weights to get accurate answers.
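The hybrid training loop can be sketched with a one-weight toy: the forward pass runs on the "physical" system, while the gradient comes from an imperfect digital model (the Nature paper calls its version of this idea physics-aware training). Both the "hardware", a tanh response with a 0.9 scale and 0.05 offset that the model does not know about, and its digital twin are hypothetical functions chosen for illustration.

```python
import math


def physical_system(x, w):
    """Stand-in for the real hardware (hypothetical). The trainer never sees
    this formula; it can only run the device and measure the output."""
    return 0.9 * math.tanh(w * x) + 0.05


def model_grad(x, w):
    """Derivative d/dw of the digital model tanh(w * x). The model ignores the
    hardware's 0.9 scale and 0.05 offset, so it is deliberately imperfect."""
    return x * (1 - math.tanh(w * x) ** 2)


def train(x, target, w=0.0, lr=0.5, steps=100):
    for _ in range(steps):
        y = physical_system(x, w)   # forward pass: run the "device"
        error = y - target          # compare with the correct answer
        # Backward pass: the model's derivative stands in for the device's.
        w -= lr * 2 * error * model_grad(x, w)
    return w


w = train(x=1.0, target=0.5)
print(w, physical_system(1.0, w))
```

Because the error is measured on the device itself, the model's missing scale and offset do not stop the weight from settling at the value the hardware actually needs (here atanh(0.5), about 0.549).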

With this training, the titanium plate learned to classify handwritten digits with 87% accuracy, and the circuit and laser systems pictured above reached 93% and 97%, respectively. The results show that "not just standard neural networks can be trained with backpropagation," says physicist Julie Grollier of France's National Center for Scientific Research (CNRS). "It's beautiful."

The team's vibrating titanium plate has not yet brought computing anywhere near the brain's astonishing efficiency; the device is not even as fast as a digital neural network. But McMahon finds it striking because it proves that thinking can be done by more than just brains or computer chips. "Any physical system can be a neural network," he said.

3 Learning

The other half of the puzzle is getting a system to learn entirely on its own. Florian Marquardt, a physicist at the Max Planck Institute for the Science of Light in Germany, thinks one way is to build a machine that runs backwards. Last year, he and a collaborator proposed, in the paper "Self-learning Machines based on Hamiltonian Echo Backpropagation," a physical analogue of backpropagation that could run on such a system.

Paper address: https://arxiv.org/abs/2103.04992

To show that this is feasible, they digitally simulated a laser setup similar to McMahon's device, with the tunable weights encoded in one light wave that mixes with a second input wave (encoding, say, an image). They nudge the output closer to the correct answer and use optical components to unmix the waves, reversing the process.
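Marquardt's proposal depends on physical dynamics that can be run backward. The sketch below illustrates only that reversibility, not the Hamiltonian echo backpropagation algorithm itself: a frictionless spring, integrated with the time-reversible leapfrog (velocity Verlet) method, is run forward, its momentum is flipped, and running the same dynamics again brings it back to where it started. The step size, step count and spring constant are arbitrary choices.

```python
def leapfrog(q, p, steps, dt=0.01, k=1.0):
    """Velocity-Verlet integration of a unit-mass spring with force -k*q."""
    for _ in range(steps):
        p -= 0.5 * dt * k * q   # half kick
        q += dt * p             # drift
        p -= 0.5 * dt * k * q   # half kick
    return q, p


q0, p0 = 1.0, 0.0
q1, p1 = leapfrog(q0, p0, steps=3000)    # run forward in time
q2, p2 = leapfrog(q1, -p1, steps=3000)   # flip the momentum and run again ("echo")
print(q1, p1)    # somewhere else on the orbit
print(q2, -p2)   # back at (approximately) the starting state
```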

"The magic is," Marquardt said, "when you try the device again with the same input, the output now tends to be closer to where you want it to be." They are now working with experimentalists to build such a system. But focusing on systems that run in reverse limits the options, so other researchers have left backpropagation behind entirely.

They are encouraged rather than deterred by the knowledge that the brain does not learn through standard backpropagation. "The brain doesn't backpropagate," Scellier said. When neuron A communicates with neuron B, "the propagation is one-way."

Caption: CNRS physicist Julie Grollier has implemented a physics learning algorithm that is seen as a promising alternative to backpropagation.

Image source: Christophe Caudroy

In 2017, Scellier and Yoshua Bengio, a computer scientist at the University of Montreal, developed a one-way learning method called equilibrium propagation.

Here is one way to picture how it works: imagine a network of arrows that act like neurons, each pointing in a direction that represents 0 or 1, connected in a grid by springs that act as synaptic weights. The looser a spring, the less the arrows it links tend to line up. First, rotate the leftmost row of arrows to reflect the pixels of a handwritten digit. Then, holding that row fixed, let the disturbance ripple out through the springs, flipping other arrows.

When the flipping stops, the rightmost arrows give the answer. Crucially, this system does not have to be trained by un-flipping the arrows. Instead, you connect another set of arrows showing the correct answer along the bottom of the network; these flip arrows in the upper set, and the whole grid settles into a new equilibrium.

Finally, you compare the arrows' new orientations with their old ones and tighten or loosen each spring accordingly. Over many trials, the springs acquire smarter tensions, a process Scellier and Bengio showed is equivalent to backpropagation. "People thought there could be no connection between physical neural networks and backpropagation," Grollier said. "That's changed recently, which is very exciting."
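The three steps (free relaxation, nudged relaxation, local comparison) can be sketched in plain Python. To keep the result checkable, this sketch swaps Scellier and Bengio's nonlinear neurons and the flipping arrows for the simplest possible "springs": a linear two-layer network whose energy is a sum of squared stretches, E = 1/2|h - W1 x|^2 + 1/2|o - W2 h|^2, plus a nudge term (beta/2)|o - y|^2 that pulls the outputs toward the correct answer. Each weight's update uses only the two nodes it connects, and for small beta it should match the gradient backpropagation computes. Network sizes, beta and the relaxation schedule are illustrative assumptions.

```python
import random


def matvec(W, v):
    """Matrix-vector product for lists of lists."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in W]


def relax(x, y, W1, W2, beta, h, o, lr=0.1, steps=2000):
    """Let the network settle by gradient descent on the total energy
    E = 1/2|h - W1 x|^2 + 1/2|o - W2 h|^2 + beta/2 |o - y|^2 (input x clamped)."""
    for _ in range(steps):
        r1 = [hi - ai for hi, ai in zip(h, matvec(W1, x))]  # layer-1 spring stretch
        r2 = [oi - bi for oi, bi in zip(o, matvec(W2, h))]  # layer-2 spring stretch
        back = [sum(W2[k][j] * r2[k] for k in range(len(o))) for j in range(len(h))]
        h = [hi - lr * (a - b) for hi, a, b in zip(h, r1, back)]               # dE/dh
        o = [oi - lr * (r + beta * (oi - yi)) for oi, r, yi in zip(o, r2, y)]  # dE/do
    return h, o


def energy_grads(x, h, o, W1, W2):
    """dE/dW for every weight; each entry uses only the two nodes it links."""
    r1 = [hi - ai for hi, ai in zip(h, matvec(W1, x))]
    r2 = [oi - bi for oi, bi in zip(o, matvec(W2, h))]
    return [[-r * xj for xj in x] for r in r1], [[-r * hj for hj in h] for r in r2]


def scaled_difference(A, B, beta):
    return [[(a - b) / beta for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def ep_gradients(x, y, W1, W2, beta=1e-3):
    """Equilibrium-propagation estimate of dLoss/dW from two relaxations."""
    h0, o0 = relax(x, y, W1, W2, 0.0, [0.0] * len(W1), [0.0] * len(W2))  # free
    hb, ob = relax(x, y, W1, W2, beta, h0, o0)                            # nudged
    f1, f2 = energy_grads(x, h0, o0, W1, W2)
    n1, n2 = energy_grads(x, hb, ob, W1, W2)
    return scaled_difference(n1, f1, beta), scaled_difference(n2, f2, beta)


def backprop_gradients(x, y, W1, W2):
    """Reference: gradients of L = 1/2|W2 W1 x - y|^2 via the chain rule."""
    h = matvec(W1, x)
    o = matvec(W2, h)
    e = [oi - yi for oi, yi in zip(o, y)]
    d = [sum(W2[k][j] * e[k] for k in range(len(e))) for j in range(len(h))]
    return [[dj * xi for xi in x] for dj in d], [[ek * hj for hj in h] for ek in e]


random.seed(0)
W1 = [[random.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(2)]
W2 = [[random.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(2)]
x, y = [1.0, -0.5, 0.25], [0.3, -0.2]
ep1, ep2 = ep_gradients(x, y, W1, W2)
bp1, bp2 = backprop_gradients(x, y, W1, W2)
print(max(abs(a - b) for ra, rb in zip(ep1 + ep2, bp1 + bp2) for a, b in zip(ra, rb)))
```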

The initial work on equilibrium propagation was theoretical. But in a forthcoming paper, Grollier and CNRS physicist Jérémie Laydevant describe running the algorithm on a quantum annealer built by D-Wave. The device has a network of thousands of interacting superconductors that act like arrows linked by springs and naturally calculate how the "springs" should be updated. However, the system cannot update these synaptic weights automatically.

4 Closing the Loop

At least one team has assembled the pieces of an electronic circuit in which physics does all the heavy lifting: thinking, learning and updating the weights. "We've been able to close the loop for a small system," said Sam Dillavou, a physicist at the University of Pennsylvania.

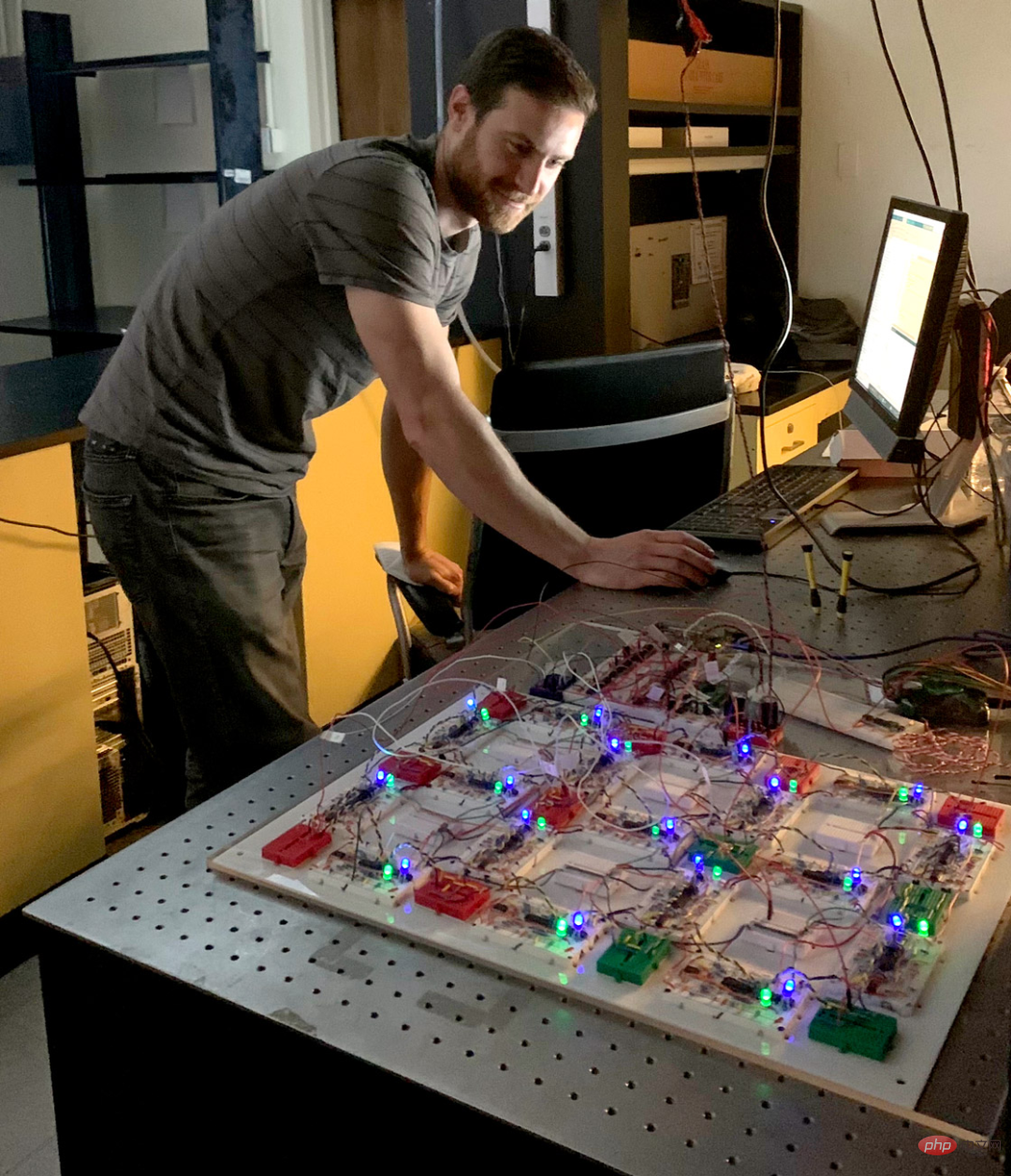

Caption: University of Pennsylvania physicist Sam Dillavou tinkers with a circuit that can modify itself as it learns.

Dillavou and his collaborators aim to imitate the brain, a genuinely intelligent system, with a relatively uniform system in which no single structure gives the orders. "Each neuron is doing its own thing," he said. To this end, they built a self-learning circuit in which variable resistors serve as the synaptic weights and the neurons are the voltages measured between the resistors.

To classify a given input, the circuit converts the data into voltages applied to several nodes. Electric current flows through the circuit, settling into the distribution that dissipates the least energy and changing the voltages as it settles. The answer is the voltage at a designated output node. The innovation lies in the challenging learning step, for which they devised a scheme similar to equilibrium propagation, called coupled learning.
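Why does the output voltage "answer" anything? With the input voltages held fixed, the currents settle into the pattern that minimizes total dissipated power, which is the same as requiring Kirchhoff's current law (no net current) at every unclamped node. The sketch below finds that state numerically by repeatedly setting each free node to the conductance-weighted average of its neighbors; the five-node network, its conductances (in siemens) and the 1-volt input are hypothetical.

```python
def solve_voltages(n, edges, fixed, sweeps=500):
    """Node voltages of a resistor network.

    edges: list of (i, j, conductance); fixed: {node: voltage} for clamped nodes.
    Gauss-Seidel sweeps set each free node to the conductance-weighted average
    of its neighbours, which is where the net current into that node is zero.
    """
    neighbours = [[] for _ in range(n)]
    for i, j, g in edges:
        neighbours[i].append((j, g))
        neighbours[j].append((i, g))
    V = [fixed.get(i, 0.0) for i in range(n)]
    for _ in range(sweeps):
        for i in range(n):
            if i not in fixed and neighbours[i]:
                total_g = sum(g for _, g in neighbours[i])
                V[i] = sum(g * V[j] for j, g in neighbours[i]) / total_g
    return V


def power(V, edges):
    """Total dissipated power: sum of conductance * (voltage drop)^2."""
    return sum(g * (V[i] - V[j]) ** 2 for i, j, g in edges)


# Node 0: 1 V input, node 1: ground, nodes 2 and 3: hidden, node 4: output.
edges = [(0, 2, 1.0), (0, 3, 0.5), (2, 3, 2.0), (2, 4, 1.0),
         (3, 4, 1.5), (4, 1, 1.0), (3, 1, 0.5)]
V = solve_voltages(5, edges, {0: 1.0, 1: 0.0})
print(f"output voltage: {V[4]:.4f} V, power: {power(V, edges):.4f} W")
```

Nudging any free node away from its settled voltage raises the dissipated power, which is the sense in which the circuit "finds" its answer.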

As one circuit takes in the data and "guesses" an outcome, an identical twin circuit starts from the correct answer and incorporates it into its behavior. Finally, electronics connecting each pair of corresponding resistors automatically compare their values and adjust them toward a "smarter" configuration.
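The coupled-learning loop can be sketched on a small, hypothetical resistor network. Two assumptions should be flagged: the update rule, a change in each conductance proportional to (drop_free^2 - drop_clamped^2)/eta, is a simplified form of the coupled-learning rule from the physics-learning literature, not code from Dillavou's group; and the network, target voltage, nudge size `eta` and learning rate `alpha` are made up. The "clamped" twin holds the output slightly closer to the correct answer, and each resistor compares the voltage drop across itself in the two states, so every update uses only local information.

```python
def solve_voltages(n, edges, fixed, sweeps=200):
    """Gauss-Seidel solve: each free node becomes the conductance-weighted
    average of its neighbours (zero net current into the node)."""
    neighbours = [[] for _ in range(n)]
    for i, j, g in edges:
        neighbours[i].append((j, g))
        neighbours[j].append((i, g))
    V = [fixed.get(i, 0.0) for i in range(n)]
    for _ in range(sweeps):
        for i in range(n):
            if i not in fixed and neighbours[i]:
                V[i] = (sum(g * V[j] for j, g in neighbours[i])
                        / sum(g for _, g in neighbours[i]))
    return V


def coupled_learning_step(n, edges, inputs, out, target, eta=0.1, alpha=0.2):
    """One round of (simplified) coupled learning; returns updated edges."""
    free = solve_voltages(n, edges, inputs)              # the circuit's "guess"
    nudged = free[out] + eta * (target - free[out])      # a bit closer to the answer
    clamped = solve_voltages(n, edges, {**inputs, out: nudged})
    updated = []
    for i, j, g in edges:
        drop_free = free[i] - free[j]
        drop_clamped = clamped[i] - clamped[j]
        # Local rule: each resistor sees only the voltage drops across itself.
        g += alpha / eta * (drop_free ** 2 - drop_clamped ** 2)
        updated.append((i, j, max(g, 1e-3)))             # keep conductances positive
    return updated


edges = [(0, 2, 1.0), (0, 3, 0.5), (2, 3, 2.0), (2, 4, 1.0),
         (3, 4, 1.5), (4, 1, 1.0), (3, 1, 0.5)]
inputs = {0: 1.0, 1: 0.0}   # 1 V input at node 0, node 1 grounded
target = 0.6                # desired voltage at output node 4
initial = solve_voltages(5, edges, inputs)[4]
for _ in range(300):
    edges = coupled_learning_step(5, edges, inputs, out=4, target=target)
final = solve_voltages(5, edges, inputs)[4]
print(f"output before: {initial:.3f} V, after: {final:.3f} V, target: {target} V")
```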

The group described its basic circuit last summer in a preprint titled "Demonstration of Decentralized, Physics-Driven Learning" (linked below), showing that the circuit can learn to distinguish three types of flowers with 95% accuracy. They are now working on a faster, more powerful device.

Paper address: https://arxiv.org/abs/2108.00275

Even that upgrade will not come close to beating state-of-the-art silicon chips. But the physicists building these systems suspect that digital neural networks, as powerful as they seem today, will eventually look slow and inadequate next to analog networks.

Digital neural networks can only be scaled up so far before they get bogged down in excessive computation, whereas larger physical networks need only be themselves. "This is a very large, rapidly growing, ever-changing field, and I firmly believe that some very powerful computers will be built using these principles," Dillavou said.
