ViP3D: End-to-end visual trajectory prediction via 3D agent queries

The arXiv paper "ViP3D: End-to-end Visual Trajectory Prediction via 3D Agent Queries", uploaded on August 2, 2022, is joint work by Tsinghua University, the Shanghai Qi Zhi Institute, CMU, Fudan University, Li Auto, MIT, and others.

Existing autonomous driving pipelines separate the perception module from the prediction module. The two modules communicate through hand-picked interface features such as agent boxes and trajectories. Because of this separation, the prediction module receives only partial information from the perception module. Worse, errors from the perception module can propagate and accumulate, degrading the prediction results.

This work proposes ViP3D, a visual trajectory prediction pipeline that exploits the rich information in raw videos to predict the future trajectories of the agents in a scene. ViP3D uses sparse agent queries throughout the pipeline, which makes it fully differentiable and interpretable. The paper also proposes a new evaluation metric for the end-to-end visual trajectory prediction task, End-to-end Prediction Accuracy (EPA), which jointly accounts for perception and prediction accuracy and scores the predicted trajectories against the ground-truth trajectories.

The figure compares the traditional multi-step cascade pipeline with ViP3D. The traditional pipeline involves multiple non-differentiable modules, such as detection, tracking, and prediction. ViP3D instead takes multi-view video as input and generates predicted trajectories end to end, making effective use of visual information such as vehicle turn signals.

ViP3D aims to solve trajectory prediction from raw videos in an end-to-end manner. Specifically, given multi-view videos and a high-definition (HD) map, ViP3D predicts the future trajectories of all agents in the scene.

The overall pipeline of ViP3D is shown in the figure. First, a query-based tracker processes multi-view videos from the surrounding cameras to obtain queries of the tracked agents, which carry visual features. These visual features capture the agents' motion dynamics and visual characteristics, as well as the relationships between agents. The trajectory predictor then takes the tracked agent queries as input, associates them with HD map features, and finally outputs the predicted trajectories.

The query-based tracker extracts visual features from the raw videos of the surrounding cameras. Specifically, for each frame, image features are extracted following DETR3D. For temporal feature aggregation, the query-based tracker is designed following MOTR ("MOTR: End-to-end multiple-object tracking with transformer", arXiv 2105.03247, 2021) and includes two key steps: query feature update and query supervision. The agent queries are updated over time to model the agents' motion dynamics.
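To make the query lifecycle concrete, here is a toy sketch of how a MOTR-style tracker might carry agent queries across frames: each tracked agent owns a persistent query that keeps its identity, its feature is refreshed from the current frame's image tokens, confident detect queries become new tracks, and a query is retired after several consecutive low-confidence frames. Everything below is a hypothetical stand-in, not ViP3D's or MOTR's code: the 2-d features, the single attention step used as the "decoder", the sigmoid objectness score, freezing the feature of an unseen agent, and the thresholds `keep` and `max_misses` are all assumptions. Query supervision (the training losses) is not modelled.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def cross_attend(query: Vec, tokens: List[Vec]) -> Vec:
    """Single-head scaled dot-product attention of one query over image tokens (keys = values)."""
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, t) * scale for t in tokens]
    peak = max(logits)
    weights = [math.exp(z - peak) for z in logits]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * t[i] for w, t in zip(weights, tokens)) / total for i in range(len(query))]


def objectness(query: Vec, tokens: List[Vec]) -> float:
    """Hypothetical confidence: sigmoid of the best query-token similarity."""
    return sigmoid(max(dot(query, t) for t in tokens))


def refresh(query: Vec, tokens: List[Vec]) -> Vec:
    """Query feature update (toy): average the query with what it attends to in this frame."""
    attended = cross_attend(query, tokens)
    return [(q + a) / 2.0 for q, a in zip(query, attended)]


@dataclass
class TrackQuery:
    track_id: int    # identity stays attached to the query across frames
    feature: Vec
    misses: int = 0  # consecutive frames with confidence below `keep`


def track_step(tracks: List[TrackQuery], detect_queries: List[Vec], tokens: List[Vec],
               next_id: int, keep: float = 0.5,
               max_misses: int = 2) -> Tuple[List[TrackQuery], int]:
    """Process one frame: update existing track queries, retire stale ones, add newborns."""
    survivors: List[TrackQuery] = []
    for tr in tracks:
        if objectness(tr.feature, tokens) >= keep:
            tr.feature = refresh(tr.feature, tokens)
            tr.misses = 0
        else:
            tr.misses += 1  # agent not seen: keep the old feature unchanged
        if tr.misses <= max_misses:
            survivors.append(tr)
    for q in detect_queries:
        if objectness(q, tokens) >= keep:  # a confident detect query becomes a new track
            survivors.append(TrackQuery(next_id, refresh(q, tokens)))
            next_id += 1
    return survivors, next_id


if __name__ == "__main__":
    tracks, next_id = track_step([], [[1.0, 0.0]], [[4.0, 0.0]], next_id=0)
    print([(t.track_id, t.feature) for t in tracks])  # [(0, [2.5, 0.0])]
    for _ in range(3):  # the agent leaves the view for three frames
        tracks, next_id = track_step(tracks, [], [[-4.0, 0.0]], next_id)
    print(tracks)  # []
```

In a real tracker the update would be a learned transformer decoder over multi-camera image features; this toy also does not deduplicate detect queries against existing tracks.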

Most existing trajectory prediction methods can be divided into three parts: agent encoding, map encoding, and trajectory decoding. Query-based tracking yields the queries of the tracked agents, which can be regarded as the agent features produced by agent encoding. The remaining tasks are therefore map encoding and trajectory decoding.
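The split above can be sketched as follows: the tracked agent query stands in for agent encoding, so the predictor only encodes the map and decodes trajectories. This is a hypothetical, shape-level illustration rather than ViP3D's predictor. Lanes are encoded by max-pooling per-segment vectors (loosely in the spirit of vectorized map encoders), the agent query attends to lane features with a single attention head, and an untrained linear head with random weights emits K modes of T future (x, y) points. The 4-d feature size and every function name here are assumptions.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]
Point = Tuple[float, float]
FEAT_DIM = 4  # assumed feature size shared by agent queries and lane features


def encode_lane(polyline: List[Point]) -> Vec:
    """Map encoding (toy): one [dx, dy, mid_x, mid_y] vector per segment, max-pooled per channel."""
    segments = [[x1 - x0, y1 - y0, (x0 + x1) / 2, (y0 + y1) / 2]
                for (x0, y0), (x1, y1) in zip(polyline, polyline[1:])]
    return [max(seg[i] for seg in segments) for i in range(FEAT_DIM)]


def attend(query: Vec, keys: List[Vec]) -> Vec:
    """Agent-map interaction: scaled dot-product attention of the agent query over lane features."""
    scale = 1.0 / math.sqrt(len(query))
    logits = [sum(q * k for q, k in zip(query, key)) * scale for key in keys]
    peak = max(logits)
    weights = [math.exp(z - peak) for z in logits]
    total = sum(weights)
    return [sum(w * key[i] for w, key in zip(weights, keys)) / total for i in range(len(query))]


def decode(feature: Vec, head: List[Vec], k: int, horizon: int) -> List[List[Point]]:
    """Trajectory decoding: a linear head maps the fused feature to k modes x horizon steps x (x, y)."""
    flat = [sum(w * f for w, f in zip(row, feature)) for row in head]  # needs k * horizon * 2 rows
    return [[(flat[2 * (m * horizon + t)], flat[2 * (m * horizon + t) + 1]) for t in range(horizon)]
            for m in range(k)]


def predict(agent_query: Vec, lanes: List[List[Point]], head: List[Vec],
            k: int, horizon: int) -> List[List[Point]]:
    lane_feats = [encode_lane(lane) for lane in lanes]
    context = attend(agent_query, lane_feats)
    fused = [a + c for a, c in zip(agent_query, context)]  # residual fusion of query and map context
    return decode(fused, head, k, horizon)


if __name__ == "__main__":
    K, T = 3, 6
    rng = random.Random(0)
    head = [[rng.gauss(0.0, 0.1) for _ in range(FEAT_DIM)] for _ in range(K * T * 2)]  # untrained
    query = [0.2, -0.1, 0.5, 0.3]  # in ViP3D this would come from the tracker
    lanes = [[(0.0, 0.0), (5.0, 0.0), (10.0, 0.5)], [(0.0, 3.0), (4.0, 6.0)]]
    trajs = predict(query, lanes, head, K, T)
    print(len(trajs), len(trajs[0]))  # 3 6
```

Because the head is untrained, the coordinates are meaningless; the sketch only shows how tensors flow from agent query and map to K candidate trajectories.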

Represent the predicted and ground-truth agents as unordered sets Ŝ and S, respectively, where each agent is represented by its coordinates at the current time step and K possible future trajectories. For each agent type c, the prediction accuracy is computed between Ŝ_c and S_c. The cost between a predicted agent and a ground-truth agent is defined as:

The EPA between Ŝ_c and S_c is defined as:
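Since the cost and EPA formulas are not reproduced in this article, the following is only an illustrative sketch of the general shape of a matching-based end-to-end metric for one agent type: match predicted agents to ground-truth agents one-to-one by current-position distance, count a matched agent as a hit when its best-of-K final displacement error is small, and penalize predicted agents left unmatched. The 2 m gate and hit thresholds, the penalty weight `alpha = 0.5`, the scoring formula `(hits - alpha * false_positives) / num_gt`, and the exhaustive matcher (a stand-in for, e.g., the Hungarian algorithm) are assumptions, not the paper's exact definition.

```python
import itertools
import math
from typing import List, Tuple

Point = Tuple[float, float]
Traj = List[Point]


def dist(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def match_agents(pred_pos: List[Point], gt_pos: List[Point], gate: float) -> List[Tuple[int, int]]:
    """Minimum-cost one-to-one matching on current positions, by exhaustive search.

    Pairs farther apart than `gate` get a prohibitive cost and are dropped afterwards,
    so the result maximises the number of gated matches, then minimises total distance.
    """
    big = 1e9

    def cost(i: int, j: int) -> float:
        d = dist(pred_pos[i], gt_pos[j])
        return d if d <= gate else big

    if len(pred_pos) <= len(gt_pos):
        options = ([(i, j) for i, j in enumerate(p)]
                   for p in itertools.permutations(range(len(gt_pos)), len(pred_pos)))
    else:
        options = ([(i, j) for j, i in enumerate(p)]
                   for p in itertools.permutations(range(len(pred_pos)), len(gt_pos)))
    best = min(options, key=lambda pairs: sum(cost(i, j) for i, j in pairs))
    return [(i, j) for i, j in best if cost(i, j) < big]


def min_fde(modes: List[Traj], gt: Traj) -> float:
    """Best-of-K final displacement error."""
    return min(dist(mode[-1], gt[-1]) for mode in modes)


def epa_like(pred_pos, pred_modes, gt_pos, gt_trajs,
             gate: float = 2.0, hit: float = 2.0, alpha: float = 0.5) -> float:
    """Illustrative score for one agent type: (hits - alpha * false positives) / #ground truth."""
    if not gt_pos:
        raise ValueError("needs at least one ground-truth agent")
    pairs = match_agents(pred_pos, gt_pos, gate)
    hits = sum(1 for i, j in pairs if min_fde(pred_modes[i], gt_trajs[j]) <= hit)
    false_pos = len(pred_pos) - len(pairs)  # predicted agents with no ground-truth partner
    return (hits - alpha * false_pos) / len(gt_pos)


if __name__ == "__main__":
    gt_pos = [(0.0, 0.0), (10.0, 0.0)]
    gt_trajs = [[(1.0, 0.0), (2.0, 0.0)], [(10.0, 1.0), (10.0, 2.0)]]
    pred_pos = [(0.5, 0.0), (10.0, 0.5), (30.0, 30.0)]  # the third one is a spurious detection
    pred_modes = [
        [[(1.0, 0.0), (2.5, 0.0)], [(0.0, 1.0), (0.0, 5.0)]],  # best mode ends 0.5 m off: hit
        [[(10.0, 3.0), (10.0, 9.0)]],                          # ends 7 m off: matched, no hit
        [[(30.0, 30.0), (30.0, 31.0)]],
    ]
    print(epa_like(pred_pos, pred_modes, gt_pos, gt_trajs))  # 0.25
```

In the example, one matched agent is a hit, one matched agent misses, and one unmatched prediction costs `alpha`, giving (1 - 0.5) / 2 = 0.25. A metric of this shape rewards accurate trajectories only for agents that were also perceived correctly, which is the coupling of perception and prediction that EPA is described as capturing.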

The experimental results are as follows:

The above is the detailed content of ViP3D: End-to-end visual trajectory prediction through 3D agent query. For more information, please follow other related articles on the PHP Chinese website!

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

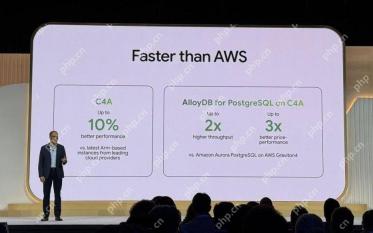

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Dreamweaver CS6

Visual web development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 Linux new version

SublimeText3 Linux latest version

SublimeText3 Mac version

God-level code editing software (SublimeText3)