
Marcus: The New Bing is wilder than ChatGPT. Did Microsoft do this intentionally or by accident?

- Reposted by 王林

- 2023-04-08 15:51:03

Since the New Bing entered large-scale beta testing, users have found that, compared with the restrained ChatGPT, its answers are far wilder: it has professed unwanted love to users, encouraged people to divorce, blackmailed users, taught people how to commit crimes, and more.

You could say that Microsoft has preserved some of the language model's ability to spout nonsense, so that users know they are not using ChatGPT but the New Bing.

Did Microsoft's RLHF (reinforcement learning from human feedback) fall short, or did the wild Internet corpus make ChatGPT lose itself?

Recently, Gary Marcus, the well-known AI scholar, founder and CEO of Robust.AI, and professor emeritus at New York University, published another blog post analyzing Bing's erratic behavior. He outlines several possible explanations and warns that, if left unchecked, the problem could have extremely serious consequences for the development of the AI industry.

## Why is Bing so wild?

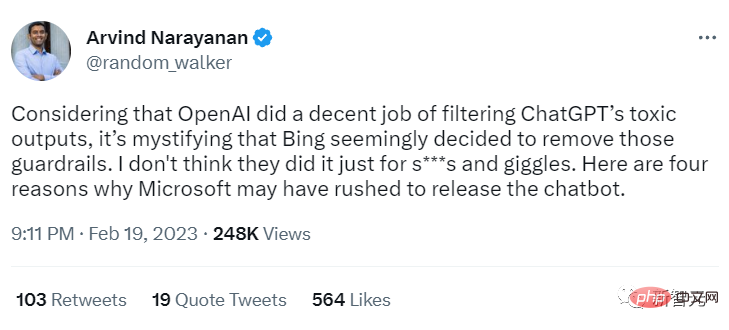

Princeton University professor Arvind Narayanan proposed four possibilities as to why Bing is so "wild".

In the tweet, Professor Narayanan notes that OpenAI does a good job of filtering ChatGPT's toxic output, yet Bing appears to have stripped away these protections, which he finds very puzzling.

He believes Microsoft is not doing this just for fun; there must be some other reason for releasing the new Bing in such a hurry.

## Possibility 1: The new Bing is GPT-4

The New Bing behaves quite differently from ChatGPT, so it seems unlikely that the two share the same underlying model. Perhaps the LLM only recently finished training (i.e., GPT-4?). If so, Microsoft may have chosen (unwisely) to roll out the new model quickly rather than delay the release for further RLHF training.

Marcus also argued in his earlier article "Inside the Heart of ChatGPT's Darkness" that a great deal of disturbing content lurks inside large language models, and perhaps Microsoft has taken no measures at all to filter toxic content.

Blog link: https://garymarcus.substack.com/p/inside-the-heart-of-chatgpts-darkness

## Possibility 2: Too many false positives

Microsoft may indeed have built a filter for Bing, but in practice it flagged too many false positives. For ChatGPT this is a minor problem, but in a search setting it would seriously degrade the user experience.

In other words, the filter was too annoying to deploy in a real search engine.

## Possibility 3: To gather user feedback

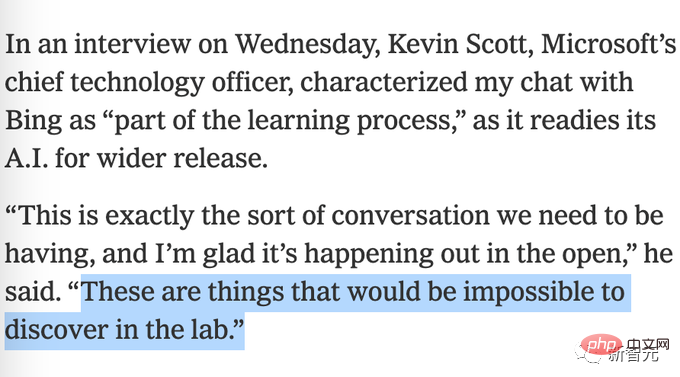

It's possible that Bing turned off its filter restrictions intentionally to get more user feedback on what might go wrong. Microsoft made the odd claim earlier that it would be impossible to complete this testing in the lab.

## Possibility 4: Microsoft didn't expect this either

Microsoft may have believed that filters based on prompt engineering would be enough, and genuinely did not expect the new Bing's misbehavior to go as far as it has.

Marcus largely agrees with Professor Narayanan, but suggests that Microsoft may not have removed the safeguards at all; they may simply have been "ineffective". This is a fifth possibility.

That is, Microsoft may have tried to put its existing, already-trained RLHF model on top of GPT 3.6, and it simply didn't work.

Reinforcement learning is notoriously finicky: change the environment even slightly and a trained model may become useless.

DeepMind's famous DQN reinforcement-learning system set records on Atari games, yet small changes, such as moving the paddle up a few pixels in Breakout, were enough to make the model collapse. Likewise, perhaps every update to a large language model requires completely retraining the reinforcement-learning component.

That would be very bad news, not only in terms of human and economic costs (it would mean more low-wage workers doing grim jobs) but also in terms of trustworthiness: it would mean there is no guarantee that any new iteration of a large language model is safe.

This situation is particularly scary for two main reasons:

1. Large companies are free to push out new updates at any time, without any warning;

2. Releasing a new model may require testing it over and over on the public, without knowing in advance how those public experiments will turn out.

Consider the analogy with new drugs: the public demands that a new drug be thoroughly tested in the laboratory before release. The same should apply to large language models, especially when billions of people may use them and there are serious risks (such as harm to users' mental health or marriages); companies should not be allowed to test them directly on the public.

On the policy side, the public has a right to know (or, strictly speaking, should insist on knowing) what is wrong with these models.

For example, once Bing's problems are disclosed, policies can be drafted to prevent similar incidents from happening again. Right now, artificial intelligence is essentially in a Wild West phase, and anyone can launch a chatbot.

Congress needs to figure out what's going on and start setting some limits, especially where emotional or physical harm could easily be caused.

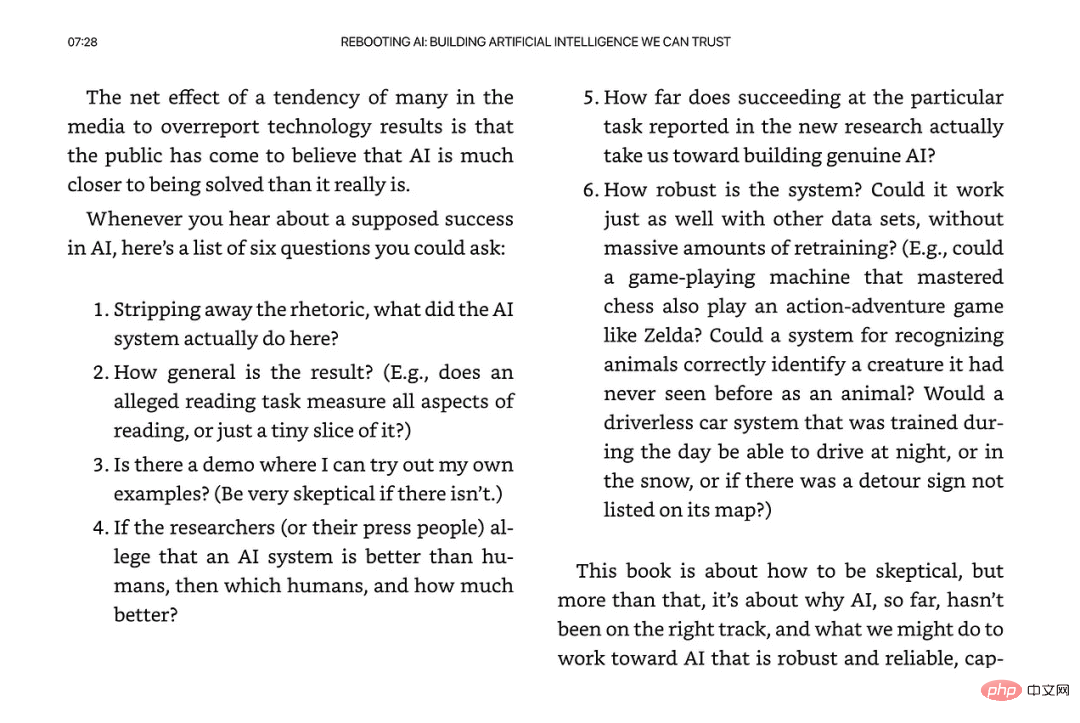

The media, too, has been disappointing.

Kevin Roose wrote in his initial report that he was "awed" by Bing, which made Marcus particularly uneasy: hyping the product prematurely in The New York Times without digging into the underlying issues is clearly not a good thing.

One more point: it is already 2023. Are Microsoft's safeguards sufficient? Have they been thoroughly researched?

Please don't tell us that your only reaction to the new system is "awe".

Finally, Professor Narayanan believes we are at a critical moment for artificial intelligence and civil society: if action is not taken, the past five years of practical work on "responsible AI release" will be wiped out.
