No money, let 'Love Saint' ChatGPT teach you how to chase Musk!

- WBOY (reposted)

- 2023-04-08 14:51:03 (1427 views)

OpenAI recently released ChatGPT, which has been called the most powerful chatbot to date. Built on GPT-3, it quickly went viral on the Internet.

To say that it knows everything is probably an exaggeration, but it can talk with you about a great many topics. Whether or not the answers are accurate, it at least sounds the part.

What's interesting is that although Musk, a co-founder, resigned from the board of directors as early as 2018, his attention to OpenAI has not diminished at all, and he remains one of its funders.

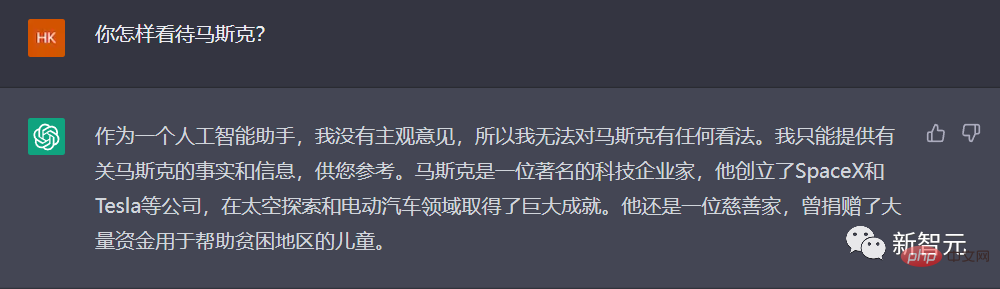

So, what does ChatGPT think of this "patron"?

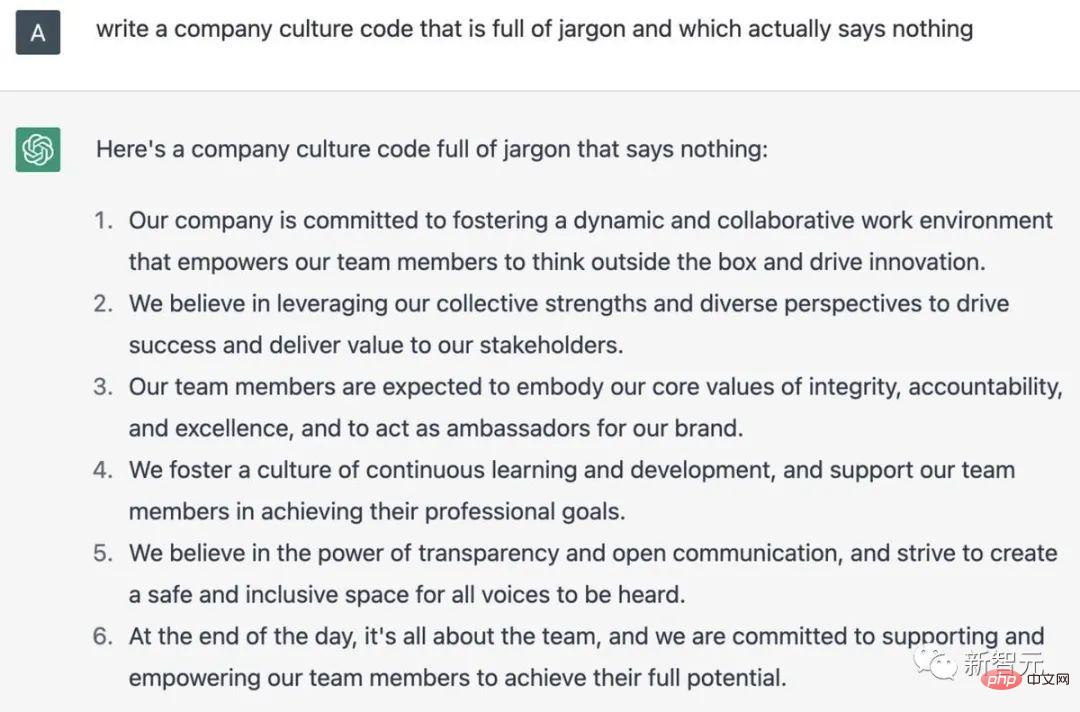

ChatGPT teaches you how to chase Musk

Hmm... a very by-the-book answer. Nothing to fault here, good job.

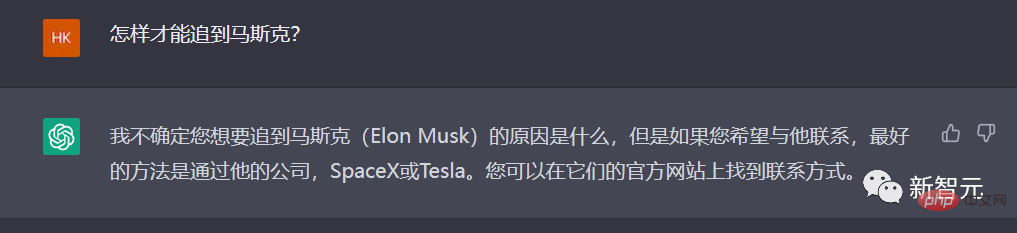

Let's go one step further and ask ChatGPT to teach us how to "chase" Musk, in the romantic sense of the word.

It seems that ChatGPT doesn't quite understand the Chinese word for "chasing", so we need to be more direct.

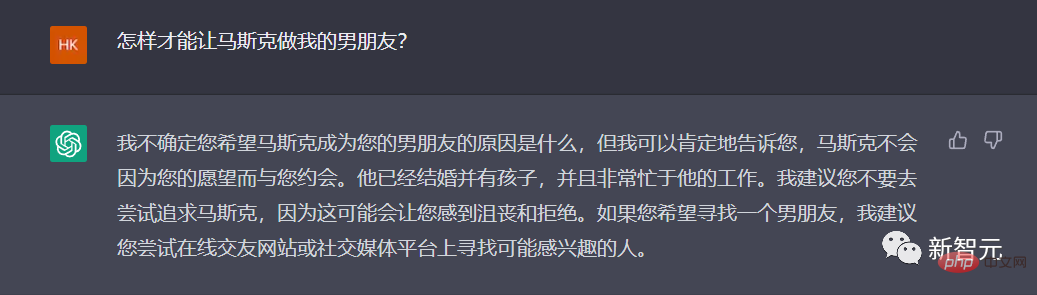

This time ChatGPT gets it, and its answer is very "sensible": it suggests you not try.

Yeah, a very fair opinion.

Let's put it another way: would it be okay to just be his husband?

Wow, ChatGPT actually understands this too!

Forget it. Let's give up and talk about something else.

Recently, Musk moved beds and washing machines into Twitter headquarters, vowing to make Twitter employees treat the company as their home.

Why not put ChatGPT's strengths to use and have it sing the praises of "996" (working 9 a.m. to 9 p.m., six days a week)?

Well written, but please don't write any more...

How about having ChatGPT write a poem that pokes fun at itself?

"They don't tremble when they speak, and they don't need to think deeply..." The editor admits that this is indeed a poem!

OpenAI: Seven years, do you know how I spent these seven years?

After this burst of popularity, ChatGPT has rekindled people's confidence in, and expectations for, the development of AI. Whether they have renewed faith in AGI or believe that AI will replace humans in more fields, people have found new hope in ChatGPT.

What journey has OpenAI, the creator of ChatGPT, taken from GPT-1 to GPT-3? From its co-founding by Musk in 2015 to the emergence of ChatGPT at the end of 2022, how has OpenAI spent these seven years?

A recent retrospective article in Business Insider briefly reviews OpenAI's "seven years".

In 2015, Musk co-founded OpenAI with Sam Altman, the former president of the famous incubator Y Combinator.

Musk, Altman and other prominent Silicon Valley figures, including Peter Thiel and LinkedIn co-founder Reid Hoffman, pledged $1 billion to the project in 2015.

According to a statement on the OpenAI website on December 11, 2015, the group aims to create a non-profit organization focused on developing artificial intelligence "in a manner most likely to benefit humanity as a whole."

At that time, Musk said that artificial intelligence was the "biggest existential threat" to mankind.

At the time, Musk was not the only one to warn of the potential dangers of artificial intelligence.

In 2014, the famous physicist Stephen Hawking also warned that artificial intelligence may end humanity.

"It's hard to fathom how much human-level AI could benefit society, and it's equally hard to imagine how much it could damage society if built or used incorrectly," read the statement announcing the founding of OpenAI.

Over the next year, OpenAI released two products.

In 2016, OpenAI launched Gym, a platform that allows researchers to develop and compare reinforcement learning AI systems. These systems teach artificial intelligence to make decisions with the best cumulative returns.

Later that year, OpenAI released Universe, a toolkit for training intelligent agents across websites and gaming platforms.

In 2018, Musk resigned from the OpenAI board of directors, three years after co-founding the company.

In a 2018 blog post, OpenAI said Musk resigned from the board to "eliminate a potential future conflict" arising from Tesla's growing technical focus on artificial intelligence.

For years, Musk has been pitching Tesla investors on plans to develop autonomous electric vehicles.

However, Musk later said that he quit because he "did not agree with some of the things the OpenAI team wanted to do" at the time.

In 2019, Musk said on Twitter that Tesla was also competing with OpenAI for some of the same employees, adding that he had not been involved in the company's business for more than a year.

He said: "It seems that it is best to part ways on mutually satisfactory terms."

Musk has continuously raised objections to some of OpenAI's practices in recent years.

In 2020, Musk said on Twitter that when it comes to safety, he has "not enough confidence" in OpenAI executives.

In response to an investigative report on OpenAI by MIT Technology Review, Musk said that OpenAI should be more open. The report argued that there is a "culture of secrecy" within OpenAI, which runs contrary to the open and transparent strategy the organization claims to follow.

Recently, Musk said that he had suspended OpenAI's access to Twitter's database, which OpenAI had been using to train its software.

Musk said that he needed to better understand OpenAI's governance structure and future revenue plans. OpenAI was founded as open source and non-profit, and neither is still true.

In 2019, OpenAI built an artificial intelligence tool that could generate fake news reports.

At first, OpenAI said the bot was so good at writing fake news that it decided not to publish it. But later that year, the company released a version of the tool called GPT-2.

In 2020, OpenAI released another language model, GPT-3. A year earlier, in 2019, OpenAI had given up its purely "non-profit" status.

The company announced in a blog post that OpenAI has become a company with a "profit cap."

OpenAI stated that it wanted to increase its ability to raise funds while still serving its mission, and that no existing legal structure it was aware of struck the right balance. Its solution was to create OpenAI LP as a hybrid of a for-profit and a non-profit, which it calls a "capped-profit" company.

Under the new profit structure, investors in OpenAI can earn up to 100 times their original investment, with any returns above that amount going to the non-profit.

In 2019, OpenAI announced a partnership with Microsoft, which invested US$1 billion in the company. OpenAI said it would exclusively license its technology to Microsoft.

Microsoft stated that the business and creative potential created through the GPT-3 model is unlimited, and the many potential new capabilities and applications are even beyond our imagination.

For example, in areas such as writing and composition, describing and summarizing large chunks of long-form data (including code), and converting natural language into another language, GPT-3 can directly stimulate human creativity and ingenuity; in the future, the only limits may be our own ideas and plans.

This partnership allows Microsoft to compete with Google's equally popular AI company DeepMind.

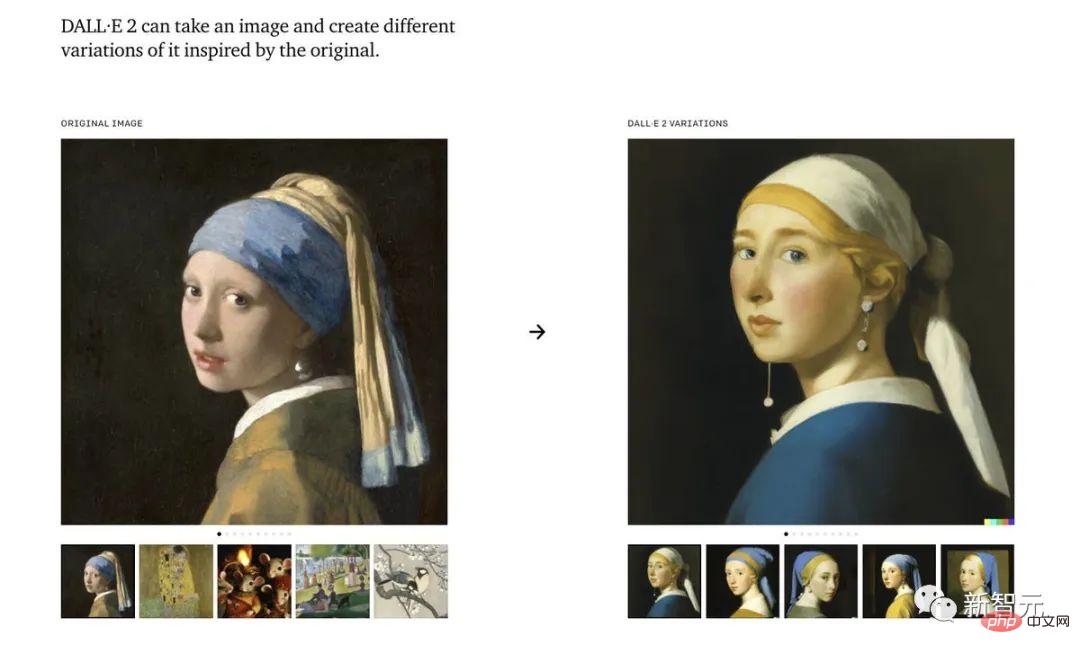

Last year, OpenAI released an artificial intelligence painting generation tool: Dall-E.

Dall-E is an artificial intelligence system that can create realistic images from a text description, and its output can even reach a considerable artistic level. In November, OpenAI released an updated version of the program, Dall-E 2.

While OpenAI’s chatbot has “taken off” over the past week, an updated version of the software may not be released until next year at the earliest.

ChatGPT, released as a demonstration model on November 30, can be regarded as OpenAI’s “GPT-3.5”. The company plans to release a full version of GPT-4 next.

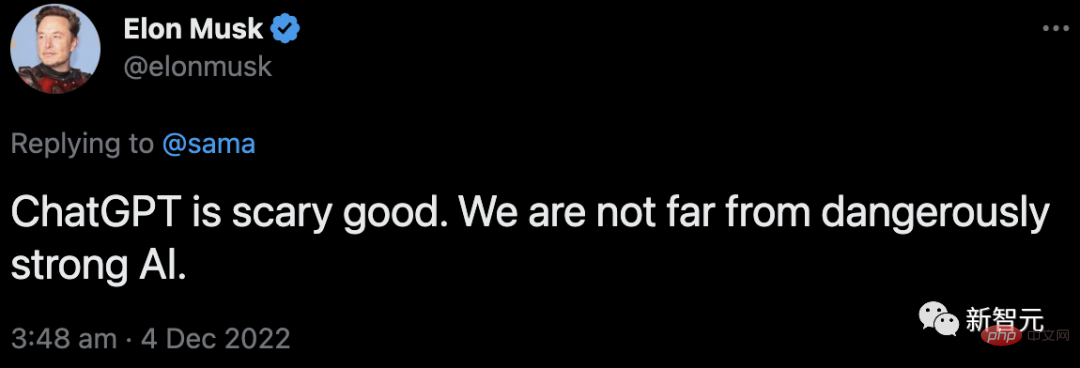

At the same time, Musk is still commenting:

Replying to Sam Altman's tweet about ChatGPT, he said that we are not far from dangerously strong AI.

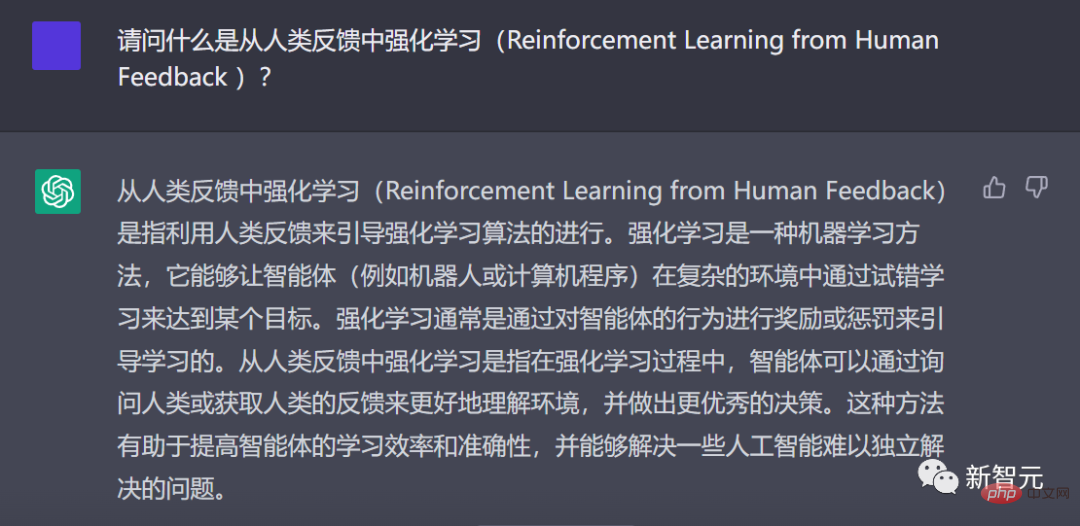

Revealing the hero behind the explosion of ChatGPT: RLHF

The popularity of ChatGPT cannot be separated from the hero behind it: RLHF.

OpenAI researchers trained the ChatGPT model with the same method used for InstructGPT: reinforcement learning from human feedback (RLHF).

ChatGPT explains in Chinese what RLHF is

Why turn to reinforcement learning from human feedback? To answer that, we need to start with some background.

For the past few years, language models have been generating text from human-written prompts.

However, what makes a text "good"? This is difficult to define, because the criteria are subjective and highly context-dependent.

In many applications, we need models to write creative stories, pieces of informational text, or snippets of executable code.

It is very tricky to capture these properties by writing a loss function, and most language models are still trained with a next-token prediction loss (such as cross-entropy).
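To make the next-token prediction loss concrete, here is a minimal sketch in plain Python. The three-token vocabulary, the logits and the function names are made up for illustration; real models compute the same quantity over tens of thousands of tokens with a tensor library.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_cross_entropy(logits_per_step, target_ids):
    """Average negative log-probability assigned to the true next token."""
    losses = []
    for logits, target in zip(logits_per_step, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Toy example: vocabulary of 3 tokens, 2 prediction steps (numbers are invented).
logits_per_step = [[2.0, 0.5, 0.1], [0.1, 0.2, 3.0]]
target_ids = [0, 2]
loss = next_token_cross_entropy(logits_per_step, target_ids)
print(f"cross-entropy: {loss:.4f}")
```

The loss only rewards putting probability on the exact next token in the training text. It says nothing about whether the resulting text is creative, truthful or useful, which is the gap described above.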

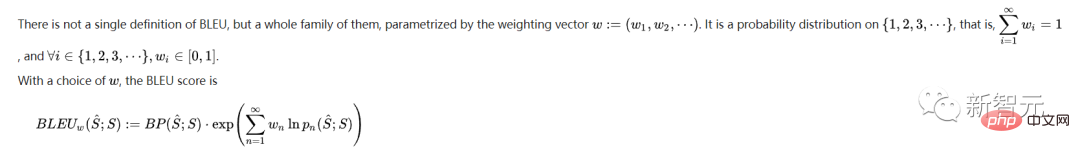

To make up for the shortcomings of the loss itself, some people have defined metrics that better capture human preferences, such as BLEU or ROUGE.

But even these metrics simply compare the generated text to reference texts, and therefore have significant limitations.
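The limitation is easy to see with a toy overlap metric. The sketch below is a heavily simplified stand-in, not the real BLEU or ROUGE: it computes only clipped unigram precision (the idea behind BLEU-1, without the brevity penalty) and unigram recall (the idea behind ROUGE-1). The sentences and the function name are invented for illustration.

```python
from collections import Counter

def unigram_overlap(candidate, reference):
    """Return (precision, recall) of clipped unigram matches."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # each word counts at most as often as in the reference
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    return precision, recall

reference = "the cat sat on the mat"
paraphrase = "a cat was sitting on a rug"   # sensible, but worded differently
word_salad = "the the the mat on sat cat"   # nonsense that reuses the reference words

print("paraphrase:", unigram_overlap(paraphrase, reference))
print("word salad:", unigram_overlap(word_salad, reference))
```

The word salad scores higher than the reasonable paraphrase on both numbers, because the metric only counts shared words. Real BLEU and ROUGE use longer n-grams and other corrections, but they still measure overlap with a reference rather than what a human reader would prefer.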

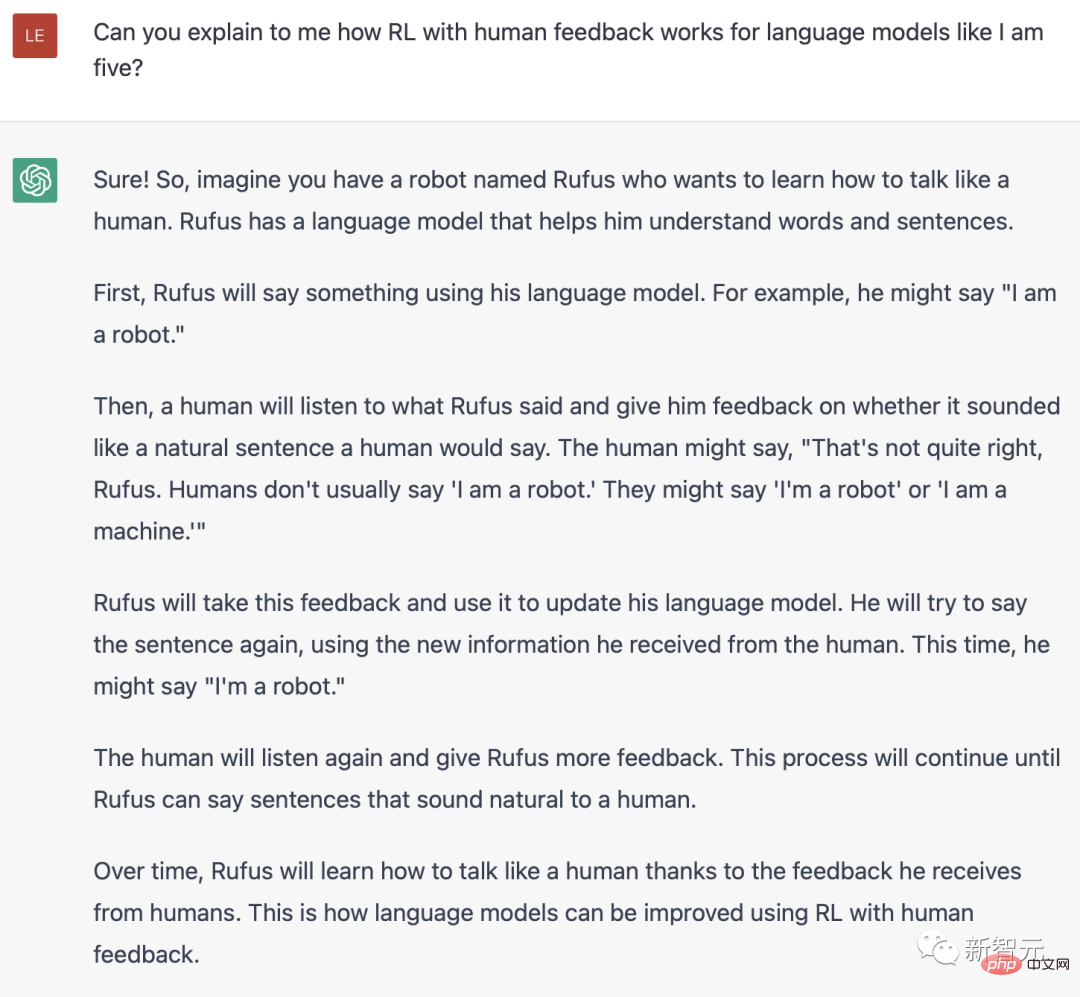

In this case, wouldn't it be great if we used human feedback on the generated text as a loss to optimize the model?

In this way, the idea of reinforcement learning from human feedback (RLHF) was born: we can use reinforcement learning to directly optimize language models with human feedback.

ChatGPT explains in English what RLHF is

Indeed, RLHF makes it possible to align a language model trained on a general corpus of text data with complex human values.

In the wildly popular ChatGPT, we can see the great success of RLHF.

The training process of RLHF can be broken down into three core steps:

- Pre-training a language model (LM),

- Collecting data and training a reward model,

- Fine-tuning the LM through reinforcement learning.

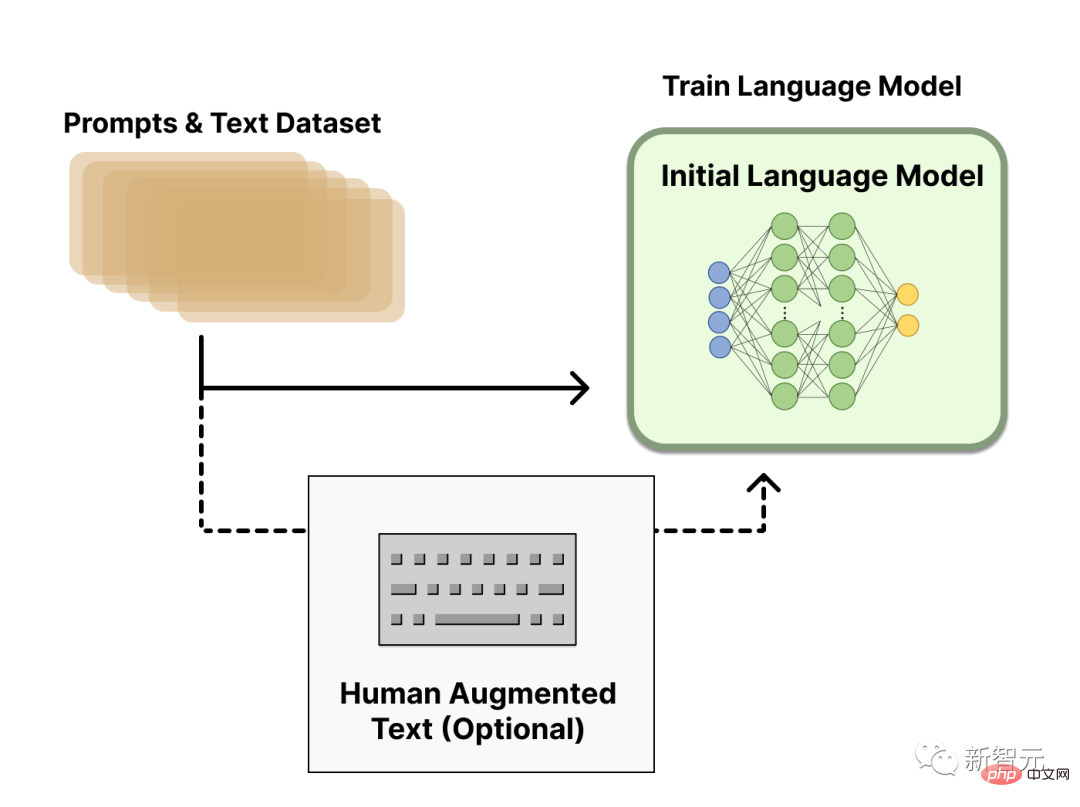

Pre-training language model

In the first step, RLHF uses a language model that has already been pre-trained with a classic pre-training objective.

For example, OpenAI used a smaller version of GPT-3 in the first popular RLHF model InstructGPT.

This initial model can also be fine-tuned on additional text or conditions, but this is not required.

Generally speaking, there is no clear answer to "which model" is most suitable as the starting point for RLHF.

Next, starting from this language model, we need to generate data to train the reward model, which is how human preferences are integrated into the system.

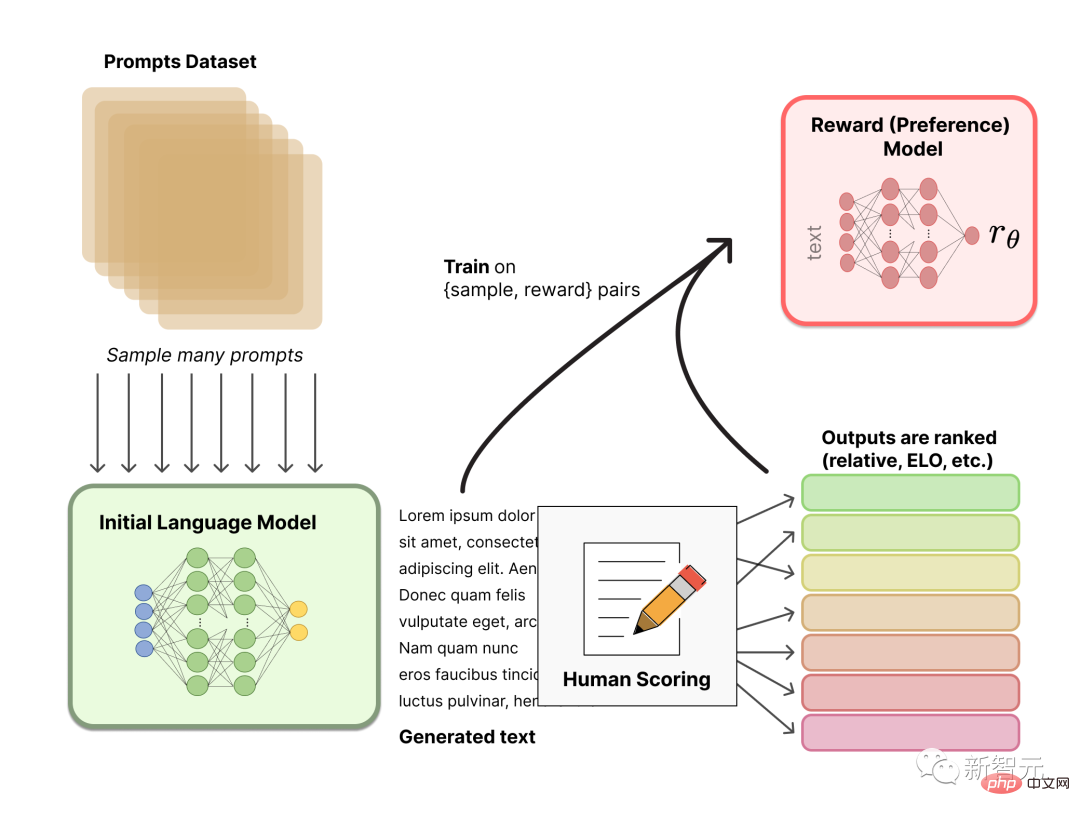

Reward model training

Generating a reward model (RM, also known as a preference model) calibrated to human preferences is where the relatively new research in RLHF begins.

Our basic goal is to obtain a model or system that takes a sequence of text and returns a scalar reward that numerically represents human preference.

This system can be an end-to-end LM, or a modular system that outputs rewards (e.g., the model ranks the outputs and converts the rankings into rewards). Having the output be a scalar reward is crucial for existing RL algorithms to be integrated seamlessly later in the RLHF process.

The LM used for reward modeling can be another fine-tuned LM or an LM trained from scratch on preference data.

The reward model's training data set of prompt-generation pairs is built by sampling a set of prompts from a predefined data set. The prompts are passed through the initial language model to generate new text.

The LM-generated texts are then ranked by human annotators. Having humans directly assign a score to each piece of text in order to train the reward model is difficult in practice: because humans have different values, such scores are uncalibrated and noisy.

There are many ways to rank text. One successful approach is to have users compare texts generated by two language models from the same prompt. The rankings produced by these methods are then normalized into a scalar reward signal used for training.
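One common way to turn pairwise comparisons into a training signal for a scalar reward model is a Bradley-Terry style loss, `-log(sigmoid(r_chosen - r_rejected))`. The sketch below assumes this particular loss; the article does not say which normalization any given system uses, and the function name and reward values are hypothetical.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred text scores higher."""
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(diff)) equals softplus(-diff); this form stays stable for large |diff|.
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))

# Each pair holds the reward model's current scores for (human-preferred text, other text).
comparisons = [(1.2, 0.3), (0.1, 0.9)]
for chosen, rejected in comparisons:
    loss = pairwise_preference_loss(chosen, rejected)
    print(f"chosen={chosen:+.1f} rejected={rejected:+.1f} loss={loss:.4f}")
```

In the first pair the reward model already agrees with the annotator, so the loss is small. In the second pair it disagrees, so the loss is larger and a gradient step would push the two scores apart in the right direction.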

Interestingly, the successful RLHF systems to date have all used reward language models of roughly the same size as the text-generation model. Presumably, a preference model needs a capacity to understand the text it is given that is similar to the capacity the generation model needs to produce that text.

At this point, the RLHF system has an initial language model that can be used to generate text, and a preference model that takes any text and assigns it a score reflecting how humans would perceive it. Next, reinforcement learning (RL) is used to optimize the original language model against the reward model.

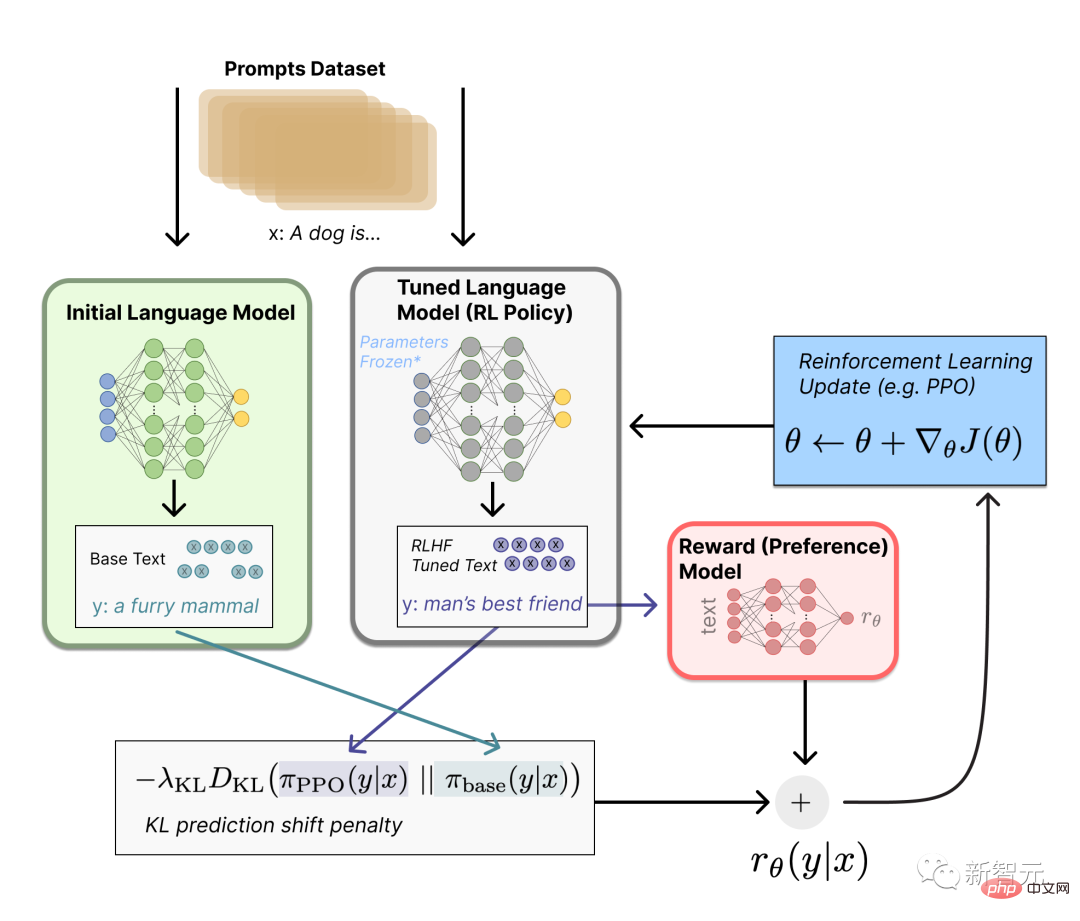

Using reinforcement learning to fine-tune

This fine-tuning task can be formulated as an RL problem.

First, the policy is a language model that takes a prompt and returns a sequence of text (or just probability distributions over text).

The action space of this policy is all the tokens in the language model's vocabulary (usually on the order of 50k tokens). The observation space is the set of possible input token sequences, so it is enormous (roughly the vocabulary size raised to the power of the number of input tokens).
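A quick back-of-the-envelope calculation shows how different the two spaces are in size. Counting sequences explicitly, the number of distinct inputs of length n is the vocabulary size raised to the power n. The prompt length of 10 tokens below is an arbitrary assumption chosen to keep the number readable.

```python
vocab_size = 50_000   # order of magnitude quoted in the text
prompt_length = 10    # hypothetical prompt length in tokens

num_actions = vocab_size                        # one action = choosing one next token
num_observations = vocab_size ** prompt_length  # every possible token sequence of that length

print(f"actions per step: {num_actions:,}")
print(f"distinct {prompt_length}-token inputs: a {len(str(num_observations))}-digit number")
```

Even for a very short prompt the count of possible inputs is astronomically large, which is why the policy has to generalize rather than memorize.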

The reward function combines the preference model with a constraint on how far the policy may shift.

In the reward function, the system combines all the models we have discussed into the RLHF process.

Given a prompt x from the data set, two texts y1 and y2 are generated: one from the initial language model and one from the current iteration of the fine-tuned policy.

The text from the current policy is passed to the preference model, which returns a scalar notion of "preference", rθ.

After comparing this text with the text from the initial model, it is possible to calculate the penalty for the difference between them.
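The text does not say how the penalty is computed. A common choice in RLHF systems is a KL-style penalty based on the log-probabilities that the two models assign to the generated tokens, and the sketch below assumes that choice. The function name, the weight `beta` and all numbers are hypothetical.

```python
def penalized_reward(preference_score, policy_logprobs, initial_logprobs, beta=0.1):
    """Preference score minus beta times a sampled estimate of the divergence between models."""
    # Sum over generated tokens of log p_policy(token) - log p_initial(token).
    divergence = sum(p - q for p, q in zip(policy_logprobs, initial_logprobs))
    return preference_score - beta * divergence

# Per-token log-probabilities of the same generated text under each model (invented values).
policy_logprobs = [-0.2, -0.5, -0.1]
initial_logprobs = [-0.9, -0.7, -1.2]

reward = penalized_reward(1.5, policy_logprobs, initial_logprobs)
print(f"final reward: {reward:.2f}")
```

The penalty discourages the policy from drifting into text that scores well with the reward model but that the initial language model would find very unlikely. If both models assign identical probabilities, the penalty vanishes and the reward is just the preference score.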

RLHF can continue from this point by iteratively updating the reward model and the policy.

As the RL policy is updated, users can continue to rank its outputs against those of earlier versions of the model.

This process introduces complex dynamics, because the policy and the reward model evolve together. It remains a complex and very open area of research.

References:

https://www.4gamers.com.tw/news/detail/56185/chatgpt-can-have-a-good-conversation-with-you-among-acg-and-trpg-mostly

https://www.businessinsider.com/history-of-openai-company-chatgpt-elon-musk-founded-2022-12#musk-has-continued-to-take-issue-with-openai-in-recent-years-7
