AIGC is developing too fast! Meta releases the first text-based 4D video synthesizer: Will 3D game modelers also be laid off?

- 王林 (reposted)

- 2023-04-08 11:21:15

Generative AI models have made tremendous progress recently. In the image domain, users can generate images from natural language prompts (e.g., DALL-E 2, Stable Diffusion); generation can also be extended along the time dimension to produce continuous videos (e.g., Phenaki), or along the spatial dimension to directly produce 3D models (e.g., DreamFusion).

But so far, these tasks have been studied in isolation, with little technical overlap between them.

Recently, Meta AI researchers combined the strengths of video and 3D generation models and proposed MAV3D (Make-A-Video3D), a new text-to-4D (3D plus time) generation system. It takes a natural language description as input and outputs a dynamic 3D scene representation that can be rendered from any viewpoint.

Paper link: https://arxiv.org/abs/2301.11280

Project link: https://make-a-video3d.github.io/

MAV3D is also the first model that can generate a three-dimensional dynamic scene from a given text description.

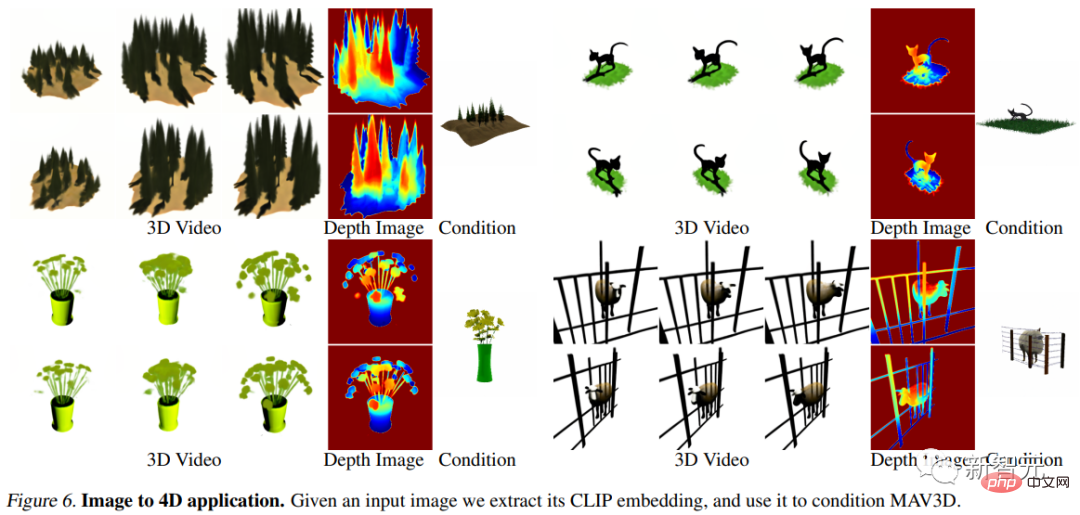

The method proposed in the paper uses a 4D dynamic neural radiance field (NeRF), which is optimized for scene appearance, density, and motion consistency by querying a text-to-video (T2V) diffusion-based model. The dynamic video output generated from the provided text can be viewed from any camera position and angle and composited into any 3D environment. The method can be used to generate 3D assets for video games, visual effects, or augmented and virtual reality.

No ready-made collection of 4D models is available for training.

Corgi playing ball

Training MAV3D does not require any 3D or 4D data; the T2V model is trained only on text-image pairs and unlabeled videos.

In the experiments, the researchers ran comprehensive quantitative and qualitative evaluations to demonstrate the effectiveness of the method, which significantly improves over the internal baselines they established.

Text to 4D dynamic scene

Because of the lack of training data, the researchers considered several ways to approach the task.

One approach would be to take a pre-trained 2D video generator and extract a 4D reconstruction from the generated video. However, reconstructing the shape of deformable objects from video, a problem known as Non-Rigid Structure from Motion (NRSfM), is still very challenging.

The task becomes simpler if multiple simultaneous viewpoints of an object are available. Although multi-camera setups are rare in real-world data, the researchers believe that existing video generators implicitly model the scene from arbitrary viewpoints.

In other words, the video generator can be used as a "statistical" multi-camera setup to reconstruct the geometry and photometry of deformable objects.

The MAV3D algorithm achieves this by optimizing a dynamic neural radiance field (NeRF) while decoding the input text into video, sampling random viewpoints around the object.
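To make "sampling random viewpoints around the object" concrete, here is a minimal sketch in Python. It is not taken from the paper: the sphere radius, the elevation range, and the helper names `sample_camera_positions` and `look_at_axes` are all illustrative assumptions. It only shows one common way to place cameras on a sphere so that each one looks at the object at the origin.

```python
import numpy as np

ORIGIN = np.zeros(3)
WORLD_UP = np.array([0.0, 0.0, 1.0])

def sample_camera_positions(n, radius=2.0, min_elev_deg=0.0, max_elev_deg=60.0, seed=0):
    """Sample n camera positions on a sphere around the origin (assumed setup)."""
    rng = np.random.default_rng(seed)
    azim = rng.uniform(0.0, 2.0 * np.pi, size=n)
    elev = np.deg2rad(rng.uniform(min_elev_deg, max_elev_deg, size=n))
    x = radius * np.cos(elev) * np.cos(azim)
    y = radius * np.cos(elev) * np.sin(azim)
    z = radius * np.sin(elev)
    return np.stack([x, y, z], axis=1)

def look_at_axes(cam_pos, target=ORIGIN, up=WORLD_UP):
    """Return a 3x3 matrix whose rows are the camera's right, up and forward axes."""
    forward = target - cam_pos
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, up)
    right = right / np.linalg.norm(right)
    true_up = np.cross(right, forward)
    return np.stack([right, true_up, forward], axis=0)

positions = sample_camera_positions(8)
axes = [look_at_axes(p) for p in positions]
```

Capping the elevation below 90 degrees keeps the viewing direction from lining up with the world up vector, which would make the cross product degenerate.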

Directly using the video generator to optimize a dynamic NeRF does not give satisfactory results. Several problems have to be overcome:

1. An effective, end-to-end learnable dynamic 3D scene representation is needed;

2. A source of supervision is needed, because no large-scale dataset of (text, 4D) pairs is currently available for learning;

3. The output resolution needs to be scaled up in both the spatial and temporal dimensions, because 4D output requires a large amount of memory and computing power.

MAV3D model

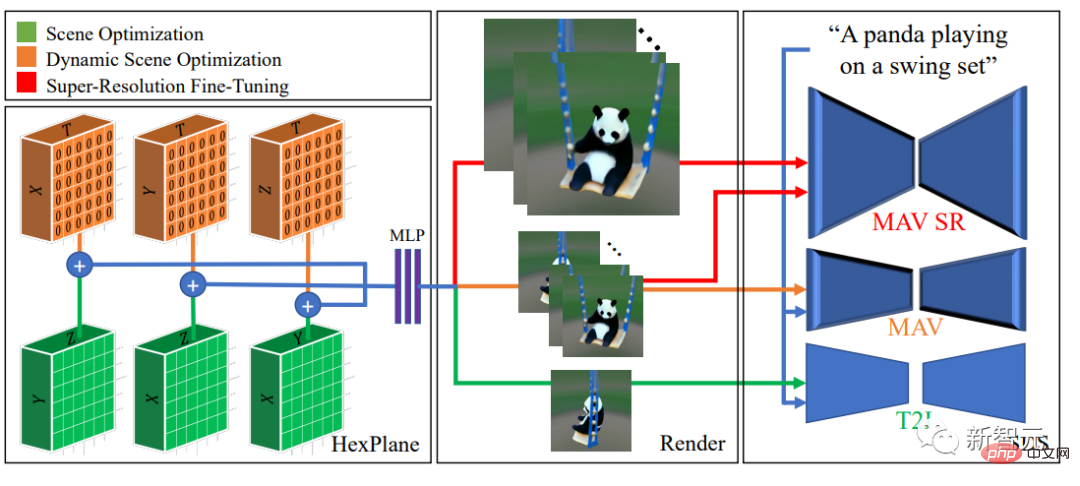

The MAV3D model builds on recent work on neural radiance fields (NeRFs), combining results from efficient (static) NeRFs and dynamic NeRFs, and represents the 4D scene as a set of six multi-resolution feature planes.
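The idea of a six-plane scene representation can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: it assumes the six planes correspond to the six possible pairs of the axes x, y, z, t, uses a single resolution instead of several, and combines the per-plane features by concatenation. The function names and sizes are hypothetical.

```python
import numpy as np

# Assumed pairing: xy, xz, yz, xt, yt, zt (indices into a point (x, y, z, t)).
PLANE_AXES = [(0, 1), (0, 2), (1, 2), (0, 3), (1, 3), (2, 3)]

def make_planes(resolution=16, channels=4, seed=0):
    """Create six random feature planes; in training these would be learned."""
    rng = np.random.default_rng(seed)
    return [rng.normal(size=(resolution, resolution, channels)) for _ in PLANE_AXES]

def bilinear(plane, u, v):
    """Bilinearly interpolate a (H, W, C) plane at coordinates u, v in [0, 1]."""
    h, w, _ = plane.shape
    fu, fv = u * (h - 1), v * (w - 1)
    i0, j0 = int(np.floor(fu)), int(np.floor(fv))
    i1, j1 = min(i0 + 1, h - 1), min(j0 + 1, w - 1)
    a, b = fu - i0, fv - j0
    return ((1 - a) * (1 - b) * plane[i0, j0] + (1 - a) * b * plane[i0, j1]
            + a * (1 - b) * plane[i1, j0] + a * b * plane[i1, j1])

def query_features(planes, point_xyzt):
    """Project a point in [0, 1]^4 onto each plane and concatenate the features."""
    feats = [bilinear(p, point_xyzt[a], point_xyzt[b])
             for p, (a, b) in zip(planes, PLANE_AXES)]
    return np.concatenate(feats)

planes = make_planes()
feature = query_features(planes, np.array([0.2, 0.5, 0.7, 0.1]))
corner = query_features(planes, np.zeros(4))
```

In a full model, a small network would map the gathered feature vector to density and color. The point of the factorization is memory: six 2D grids grow quadratically with resolution, whereas a dense 4D grid grows with the fourth power.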

To supervise this representation without corresponding (text, 4D) data, the researchers proposed a multi-stage training pipeline for dynamic scene rendering and demonstrated the importance of each component in achieving high-quality results.

One key observation is that directly optimizing the dynamic scene with Score Distillation Sampling (SDS) using the text-to-video (T2V) model leads to visual artifacts and suboptimal convergence.

So the researchers chose to first fit a static 3D scene to the text prompt using a text-to-image (T2I) model, and then augment the 3D scene model with dynamics.
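For readers unfamiliar with Score Distillation Sampling, the toy sketch below shows the shape of the update: noise is added to a rendered image, a diffusion model predicts that noise, and the difference between predicted and injected noise is used as the gradient for the scene parameters. Everything here is a simplification for illustration. The "renderer" is the identity, `toy_noise_predictor` is a hypothetical stand-in that simply believes the clean image equals `target`, and the weighting `1 - alpha_bar` is one assumed choice. None of it reproduces MAV3D's actual models or losses.

```python
import numpy as np

def toy_noise_predictor(noisy, alpha_bar, target):
    """Hypothetical stand-in for a diffusion model that assumes the clean image is `target`."""
    return (noisy - np.sqrt(alpha_bar) * target) / np.sqrt(1.0 - alpha_bar)

def sds_gradient(rendered, target, rng):
    """One SDS-style gradient estimate with respect to the rendered image."""
    alpha_bar = rng.uniform(0.1, 0.9)        # random noise level
    eps = rng.normal(size=rendered.shape)    # injected Gaussian noise
    noisy = np.sqrt(alpha_bar) * rendered + np.sqrt(1.0 - alpha_bar) * eps
    eps_hat = toy_noise_predictor(noisy, alpha_bar, target)
    weight = 1.0 - alpha_bar                 # assumed weighting choice
    return weight * (eps_hat - eps)

rng = np.random.default_rng(0)
target = np.full((4, 4), 0.5)
scene = np.zeros((4, 4))   # identity renderer: the scene parameters are the image
initial_error = np.abs(scene - target).mean()
for _ in range(200):
    scene -= 0.1 * sds_gradient(scene, target, rng)
final_error = np.abs(scene - target).mean()
```

With a real renderer, the same gradient would be backpropagated through the rendering step into the scene representation, while the diffusion model itself stays frozen.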

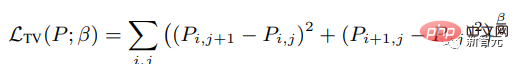

In addition, a new temporal-aware SDS loss and motion regularization terms are introduced, which are shown experimentally to be critical for realistic and challenging motion. The model is then extended to higher-resolution output through an additional temporal-aware super-resolution fine-tuning stage.

Finally, SDS with the super-resolution module of the T2V model is used to obtain high-resolution gradient information for supervising the 3D scene model, which increases its visual fidelity and makes it possible to sample higher-resolution output at inference time.

Experimental part

Evaluation metrics

The generated videos are evaluated with CLIP R-Precision, which measures the consistency between the text and the generated scene and reflects how accurately the input prompt can be retrieved from a rendered frame. The researchers used the ViT-B/32 variant of CLIP and extracted frames at different views and time steps.

In addition, four qualitative metrics are obtained by asking human annotators for their preference between two generated videos: (i) video quality; (ii) fidelity to the text prompt; (iii) amount of motion; and (iv) realism of motion.
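As a rough illustration of how a retrieval-based metric like R-Precision works, the sketch below ranks candidate prompts for each rendered frame by cosine similarity and counts how often the true prompt comes out on top. The embeddings are small synthetic vectors chosen by hand. In the actual evaluation they would come from CLIP's image and text encoders, which are not called here, and the function name `r_precision` is an assumption for this example.

```python
import numpy as np

def r_precision(frame_embeddings, prompt_embeddings, true_prompt_index, r=1):
    """Fraction of frames whose true prompt ranks in the top r by cosine similarity."""
    f = frame_embeddings / np.linalg.norm(frame_embeddings, axis=1, keepdims=True)
    p = prompt_embeddings / np.linalg.norm(prompt_embeddings, axis=1, keepdims=True)
    sims = f @ p.T                                   # (num_frames, num_prompts)
    top_r = np.argsort(-sims, axis=1)[:, :r]         # best-matching prompt indices
    hits = (top_r == np.asarray(true_prompt_index)[:, None]).any(axis=1)
    return hits.mean()

# Synthetic stand-ins for CLIP embeddings: 3 prompts, 4 rendered frames.
prompts = np.eye(3)
frames = np.array([
    [0.9, 0.1, 0.0],   # closest to prompt 0
    [0.1, 0.8, 0.1],   # closest to prompt 1
    [0.7, 0.2, 0.1],   # closest to prompt 0, but labeled as prompt 2
    [0.0, 0.1, 0.9],   # closest to prompt 2
])
labels = [0, 1, 2, 2]
score = r_precision(frames, prompts, labels)   # 3 of 4 frames retrieve their prompt
```

Averaging over frames taken at different views and time steps, as the article describes, rewards scenes that match the text from every angle and at every moment rather than from a single favorable frame.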

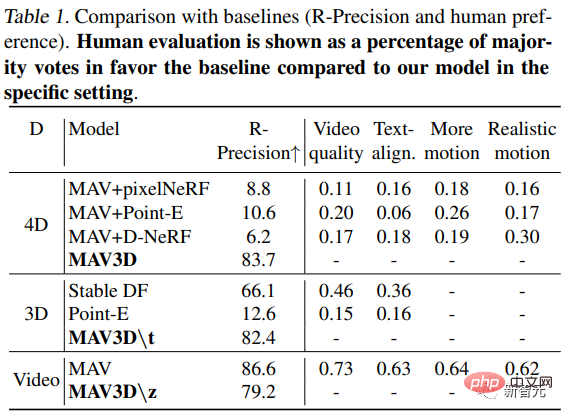

Text-to-4D comparison

Since no previous method converts text to 4D, the researchers built three baselines on top of a T2V generation method for comparison. The sequence of 2D frames is converted into a sequence of 3D scene representations using three different methods.

The first sequence is obtained with a one-shot neural scene renderer (Point-E); the second is produced by applying pixelNeRF independently to each frame; the third applies D-NeRF together with camera poses extracted using COLMAP.

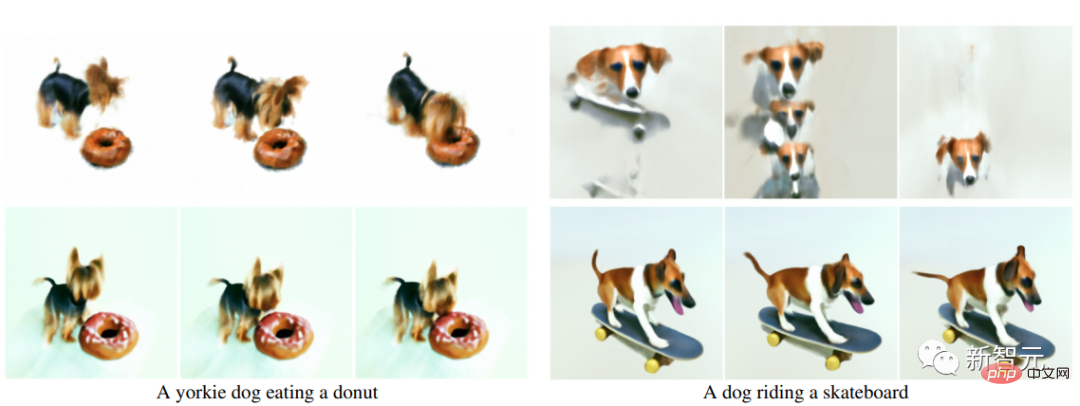

The method outperforms the baselines on the objective R-Precision metric and is rated higher by human annotators on all metrics.

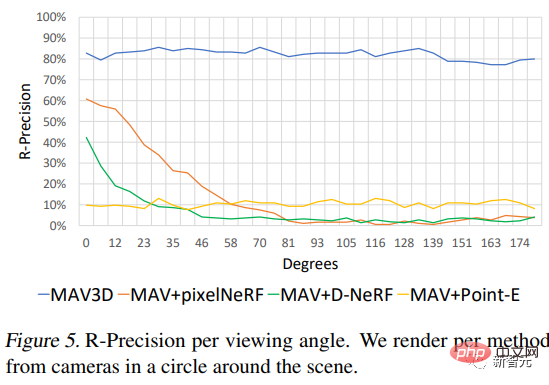

In addition, the researchers explored the performance of the method under different camera viewing angles.

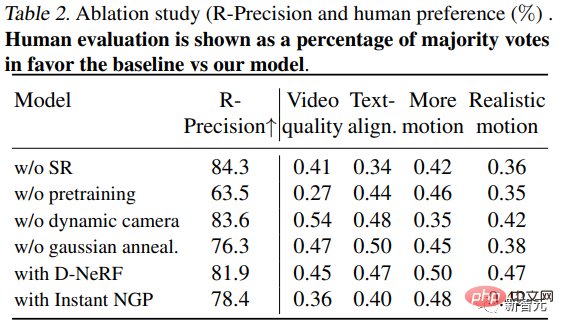

Ablation experiment

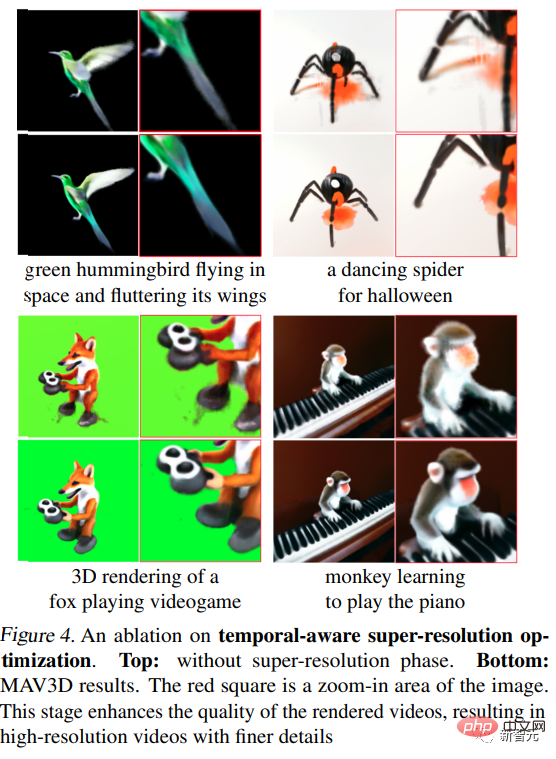

1. Without scene super-resolution (SR) fine-tuning: compared with a model trained without SR fine-tuning for the same number of steps as MAV3D (stage 3), human annotators prefer the model trained with SR in terms of quality, text alignment, and motion.

In addition, super-resolution fine-tuning improves the quality of the rendered videos, producing high-resolution videos with finer details and less noise.

2. No pre-training: when the dynamic scene is optimized directly (without static scene pre-training) for the same number of steps as MAV3D, the result is much lower scene quality or poor convergence. In 73% and 65% of cases, the model with static pre-training is preferred in terms of video quality and realistic motion, respectively.
