Evaluating language models remains a significant challenge. How can we accurately assess a model's comprehension, text coherence, and response accuracy? Among numerous evaluation metrics, perplexity stands out as a fundamental and widely used tool in Natural Language Processing (NLP) and Language Model (LM) assessment.

Perplexity has been in use from early statistical language modeling through the current era of large language models (LLMs). This article covers its definition, mechanics, mathematical basis, implementation, strengths, weaknesses, comparisons with other metrics, and ways to mitigate its limitations. By the end, you should understand perplexity and be able to implement it for language model evaluation.

Table of Contents:

- What is Perplexity?

- How Perplexity Works

- Calculating Perplexity

- Alternative Perplexity Representations:

  - Entropy-Based Perplexity

  - Perplexity as a Multiplicative Inverse

- Python Implementation from Scratch

- Example and Results

- Perplexity in NLTK

- Advantages of Perplexity

- Limitations of Perplexity

- Addressing Limitations with LLM-as-Judge

- Practical Applications

- Comparison to Other LLM Evaluation Metrics

- Conclusion

What is Perplexity?

Perplexity quantifies how effectively a probability model predicts a sample. In language models, it reflects the model's "surprise" or "confusion" when encountering a text sequence. Lower perplexity indicates superior predictive ability.

Intuitively:

- Low perplexity: The model confidently and accurately predicts subsequent words.

- High perplexity: The model is uncertain and struggles with predictions.

Perplexity answers: "On average, how many words could plausibly follow each word, according to the model?" A model that always assigns probability 1 to the word that actually occurs achieves a perplexity of 1, the minimum possible value. Real models, however, distribute probability across many candidate words, resulting in higher perplexity.

Quick Check: If a model assigns equal probability to 10 potential next words, its perplexity is 10.
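The quick check can be verified numerically. The sketch below is a minimal illustration rather than the article's own implementation; the function name `perplexity_from_probs` is hypothetical. It takes the probability the model assigned to each word that actually occurred and returns the exponential of the average negative log-probability.

```python
import math

def perplexity_from_probs(probs):
    """Perplexity from the probabilities a model assigned to each observed word."""
    if not probs:
        raise ValueError("need at least one probability")
    # Average negative log-probability (natural log), then exponentiate.
    avg_nll = -sum(math.log(p) for p in probs) / len(probs)
    return math.exp(avg_nll)

# A model that spreads probability uniformly over 10 candidates assigns
# 0.1 to whichever word actually occurs, at every position.
uniform_ppl = perplexity_from_probs([0.1] * 5)
print(round(uniform_ppl, 6))  # 10.0

# A model that is always certain and always right assigns 1.0 every time.
perfect_ppl = perplexity_from_probs([1.0] * 5)
print(perfect_ppl)  # 1.0
```

The sequence length does not matter here: because every position receives the same probability, the average is the same for 5 words or 5,000.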

How Perplexity Works

Perplexity assesses a language model's performance on a test set:

- Training: A language model is trained on a text corpus.

- Evaluation: The model is evaluated on unseen test data.

- Probability Calculation: The model assigns a probability to each word in the test sequence, considering preceding words. These probabilities are combined into a single perplexity score.
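The steps above can be sketched end to end with a toy model. Everything in this example is an illustrative assumption rather than the article's method: it uses a bigram model with add-one (Laplace) smoothing, a tiny made-up corpus, and the hypothetical helper names `train_bigram` and `bigram_perplexity`.

```python
import math
from collections import Counter

def train_bigram(sentences):
    """Training step: count unigrams and bigrams over whitespace-tokenized text."""
    unigrams, bigrams = Counter(), Counter()
    for sent in sentences:
        tokens = ["<s>"] + sent.split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def bigram_perplexity(sentence, unigrams, bigrams):
    """Evaluation step: score one sentence and return its perplexity."""
    vocab_size = len(unigrams)
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    log_prob = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothing keeps unseen bigrams from getting probability 0.
        p = (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
        log_prob += math.log(p)
    n = len(tokens) - 1  # number of predicted tokens, including </s>
    return math.exp(-log_prob / n)

train = ["the cat sat on the mat", "the dog sat on the rug"]
unigrams, bigrams = train_bigram(train)

# A familiar word order versus a scrambled one the model never saw.
seen = bigram_perplexity("the cat sat on the mat", unigrams, bigrams)
unseen = bigram_perplexity("mat the on sat cat the", unigrams, bigrams)
print(seen < unseen)  # True
```

The first sentence is taken from the training data only to make the contrast visible; a real evaluation would score held-out text, as the Evaluation step describes.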

Calculating Perplexity

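Mathematically, perplexity is the exponential of the average negative log-likelihood:

$$\mathrm{PPL}(W) = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log P(w_i \mid w_1, w_2, \ldots, w_{i-1})\right)$$

Where:

- W is the test sequence (w_1, w_2, …, w_N)

- N is the number of words

- P(w_i | w_1, w_2, …, w_{i-1}) is the conditional probability of w_i given the previous words.

Using the chain rule, the product of these conditional probabilities equals the joint probability of the sequence, so perplexity can equivalently be written as:

$$\mathrm{PPL}(W) = P(w_1, w_2, \ldots, w_N)^{-\frac{1}{N}}$$

Where P(w_1, w_2, …, w_N) is the joint probability of the sequence.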
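As a sanity check, perplexity computed as the exponential of the average negative log-likelihood should match the joint probability raised to the power -1/N. The short sketch below compares the two; the conditional probabilities are invented for illustration and do not come from any real model.

```python
import math

# Hypothetical conditional probabilities P(w_i | w_1, ..., w_{i-1}) for a
# four-word sequence; the values are made up for illustration.
cond_probs = [0.2, 0.5, 0.1, 0.4]
n = len(cond_probs)

# Form 1: exponential of the average negative log-likelihood.
ppl_exp = math.exp(-sum(math.log(p) for p in cond_probs) / n)

# Form 2: joint probability (product of the conditionals, by the chain rule)
# raised to the power -1/N.
joint = math.prod(cond_probs)
ppl_inverse = joint ** (-1 / n)

print(math.isclose(ppl_exp, ppl_inverse))  # True
```

In practice the log form is usually preferred, since multiplying many small probabilities directly can underflow to zero on long sequences.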


The above is the detailed content of Perplexity Metric for LLM Evaluation - Analytics Vidhya.

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

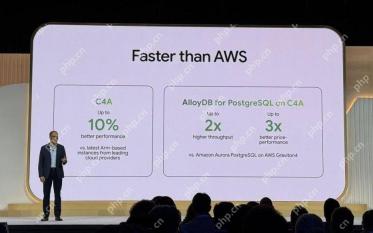

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Atom editor mac version download

The most popular open source editor

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Zend Studio 13.0.1

Powerful PHP integrated development environment