Introduction

The rise of Retrieval-Augmented Generation (RAG) and Knowledge Graphs has revolutionized how we interact with complex data sets by providing a structured, interconnected representation of information. Knowledge Graphs, such as those used in Neo4j, facilitate the querying and visualization of relationships within data. However, translating natural language into structured query languages like Cypher remains a challenging task. This guide aims to bridge this gap by detailing the fine-tuning of the Phi-3 Medium model to generate Cypher queries from natural language inputs. By leveraging the compact yet powerful capabilities of the Phi-3 Medium model, even small-scale developers can efficiently convert text to Cypher queries, enhancing the accessibility and usability of Knowledge Graphs.

Learning Objectives

- Understand the importance of Cypher Query generation from natural language for developer efficiency.

- Learn about Microsoft’s Phi 3 Medium and its role in transforming English queries into code.

- Explore Unsloth’s efficiency improvements and memory management for Large Language Models.

- Set up the environment for fine-tuning Phi 3 Medium with Unsloth efficiently.

- Prepare datasets compatible with Phi 3 Medium and Unsloth for effective fine-tuning.

- Master fine-tuning Phi 3 Medium with specific training arguments using SFTTrainer.

This article was published as a part of the Data Science Blogathon.

Table of contents

- What is Phi 3 Medium?

- Who is Unsloth?

- Environment Creation

- Downloading Model and Creating LoRA Adaptors

- Preparing the Dataset for Fine-tuning

- Fine-tuning Phi 3 Medium for Text2Cypher Query

- Generating Cypher Query with Phi 3 Medium

- Frequently Asked Questions

What is Phi 3 Medium?

The Phi family of Large Language Models was introduced by Microsoft to demonstrate that even small language models can perform well and may be on par with bigger models. Microsoft has trained this family of small models on different types of datasets, making them good at a range of tasks including entity extraction, summarization, chatbots, roleplay, and more.

Microsoft released these models with the idea that their small size lets even small developers work with them and train them on their own datasets, opening up many different applications. Recently, Microsoft announced the third generation of the Phi family, called the Phi 3 series of Large Language Models.

In the Phi 3 series, the context length was brought up from 4k tokens to 128k tokens, allowing more context to fit in. The Phi 3 family comes in different sizes, starting with the smallest, the 3.8 billion parameter Phi 3 Mini, followed by the 7 billion parameter Phi 3 Small, and finally the 14 billion parameter Phi 3 Medium, the one we will train in this guide. All of these models have a long-context version that extends the context length to 128k tokens.

Who is Unsloth?

Developed by Daniel and Michael Han, Unsloth has emerged as one of the best optimized frameworks for fine-tuning large language models (LLMs). Known for its speed and memory efficiency, Unsloth is reported to increase training speeds by up to 30 times while reducing memory usage by 60%. These capabilities make it a good fit for developers who want to fine-tune LLMs quickly without sacrificing accuracy.

Unsloth supports a range of hardware configurations, from NVIDIA GPUs like the Tesla T4 and H100 to AMD and Intel GPUs. It also employs techniques like intelligent weight upcasting, which minimizes the need to upcast weights during QLoRA, thereby optimizing memory use.

As an open-source tool under the Apache 2.0 license, Unsloth integrates seamlessly into the fine-tuning of prominent LLMs like Mistral 7B, Llama, and Gemma, achieving up to a 5x increase in fine-tuning speed while reducing memory usage by 60%. It is also compatible with optimizations such as Flash-Attention 2, which speeds up both inference and fine-tuning.

Environment Creation

We will first create our environment. For this we will download Unsloth for Google Colab.

!pip install "unsloth[colab] @ git+https://github.com/unslothai/unsloth.git"

Then we will create some default Unsloth values for training. These are:

from unsloth import FastLanguageModel
import torch

sequence_length_maximum = 2048
weights_data_type = None
quantize_to_4bit = True

We start by importing the FastLanguageModel class from the Unsloth library. Then we define some variables to be worked with throughout the guide:

- sequence_length_maximum: The maximum sequence length that the model will handle. We give it a value of 2048.

- weights_data_type: Here we tell what data type the model weights should be. We gave it None, which will auto-select the data type.

- quantize_to_4bit: Here, we give it a value of True. This then tells the model to load in 4 bits, so that it can easily fit in the Colab GPU.
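To see why 4-bit loading matters here, a rough back-of-the-envelope estimate of the weight memory helps. The sketch below is an approximation under stated assumptions: it counts only the model weights (no activations, optimizer state, or quantization metadata), and the helper name weight_memory_gb is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9

PHI3_MEDIUM_PARAMS = 14e9  # 14 billion parameters, as stated earlier in this guide

fp16_gb = weight_memory_gb(PHI3_MEDIUM_PARAMS, 16)
int4_gb = weight_memory_gb(PHI3_MEDIUM_PARAMS, 4)

print(f"16-bit weights: ~{fp16_gb:.0f} GB")
print(f" 4-bit weights: ~{int4_gb:.0f} GB")
```

Under these assumptions, the 16-bit weights alone come to about 28 GB, while the 4-bit weights come to about 7 GB, which is why the quantized model is a realistic fit for a single Colab GPU.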

Downloading Model and Creating LoRA Adaptors

Here, we will download the Phi 3 Medium model. We will do this with Unsloth’s FastLanguageModel class.

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/Phi-3-medium-4k-instruct",
    max_seq_length = sequence_length_maximum,
    dtype = weights_data_type,
    load_in_4bit = quantize_to_4bit,
    token = "YOUR_HF_TOKEN"
)

When we run the code, both the Phi 3 Medium model and its tokenizer are fetched from the HuggingFace repository and downloaded to the Colab environment.

Fine-tuning all 14 billion parameters of Phi 3 Medium is not feasible on a Colab GPU, so we train only a small number of additional weights. For this, we work with LoRA (Low-Rank Adaptation), which keeps the original weights frozen and trains small low-rank adapter matrices attached to selected layers. We need to create a LoRA config and get the Parameter Efficient Fine-Tuned model (PEFT model) from it. The code for this will be:

model = FastLanguageModel.get_peft_model(
    model,
    r = 16,
    target_modules = ["q_proj", "k_proj", "down_proj", "v_proj", "o_proj", "up_proj", "gate_proj"],
    lora_alpha = 16,
    bias = "none",
    lora_dropout = 0,
    random_state = 3407,
    use_gradient_checkpointing = True,  # a boolean, not the string "True"
)

- Here “r” is the rank of the LoRA matrices. A higher rank means more trainable parameters, and a lower rank means fewer. We set this to a value of 16.

- lora_alpha is the scaling factor applied to the LoRA update. It is commonly set to the same value as the rank.

- Dropout randomly switches off some of the weights in the LoRA matrices during training. We have kept it at 0, which speeds up training and has little impact on performance.

- We can have a bias parameter for the weights in the LoRA matrices, but setting bias to "none" further improves memory efficiency and decreases the training time.

After running this code, the LoRA Adapters for the Phi 3 Medium will be created. Now we can work with this peft model and finetune it with a dataset of our choice.
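The effect of the rank on the number of trainable parameters can be checked with simple arithmetic. The following is a minimal sketch, assuming the standard LoRA formulation in which each adapted weight matrix gets two small matrices and the update is scaled by lora_alpha / r. The layer dimensions are illustrative only and are not the real Phi 3 Medium layer sizes; lora_param_count is a hypothetical helper.

```python
def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds to one (d_out x d_in) weight matrix.

    LoRA learns two small matrices, A (r x d_in) and B (d_out x r),
    while the original weight matrix stays frozen.
    """
    return r * d_in + d_out * r

# Illustrative dimensions only; these are NOT the real Phi 3 Medium layer sizes.
d_in, d_out = 4096, 4096
r, lora_alpha = 16, 16  # the values used in this guide

full = d_in * d_out
added = lora_param_count(d_in, d_out, r)
scaling = lora_alpha / r  # factor applied to the low-rank update B @ A

print(f"frozen weights:  {full:,}")
print(f"LoRA parameters: {added:,} ({100 * added / full:.2f}% of the layer)")
print(f"update scaling:  {scaling}")
```

For this illustrative layer, the adapter adds well under 1% of the layer's parameters, which is what makes training on a single GPU practical.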

Preparing the Dataset for Fine-tuning

Here, we will train the Phi 3 Medium Large Language Model on a dataset that teaches it to generate the Cypher queries needed for querying Knowledge Graph databases like Neo4j. For this, we will download a dataset from a GitHub repository. The command for this will be:

!wget https://raw.githubusercontent.com/neo4j-labs/text2cypher/main/datasets/synthetic_gpt4turbo_demodbs/text2cypher_gpt4turbo.csv

The above command will download a CSV file. This CSV file contains the dataset that we will use to train the Phi 3 Medium LLM. Before that, we need to do some preprocessing, because we take only a subset of the dataset. The code for this will be:

import pandas as pd

df = pd.read_csv('/content/text2cypher_gpt4turbo.csv')
df = df[(df['database'] == 'recommendations') &
        (df['syntax_error'] == False) & (df['timeout'] == False)]
df = df[['question','cypher']]
df.rename(columns={'question': 'input','cypher':'output'}, inplace=True)
df.reset_index(drop=True, inplace=True)

Here, we filter the data. We keep only the rows that come from the recommendations database, that have no syntax error, and where the query did not time out. This is necessary because we want Phi 3 to give us syntax-error-free Cypher Queries when asked.

The dataset contains many columns, but only the question and the cypher column are the ones we need. And we even renamed these columns to input and output, where the question column is the input and the cypher column is the output that needs to be generated by the Large Language Model.
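The filtering and renaming steps can be tried on a tiny in-memory table before running them on the full CSV. The sketch below uses made-up rows that only mimic the column names described above; the questions and queries are hypothetical and are not taken from the dataset.

```python
import pandas as pd

# Hypothetical rows that mimic the columns used in this guide.
sample = pd.DataFrame({
    "question": ["Top 5 movies by revenue?", "List all users", "Count genres"],
    "cypher": [
        "MATCH (m:Movie) RETURN m.title ORDER BY m.revenue DESC LIMIT 5",
        "MATCH (u:User RETURN u",  # deliberately malformed
        "MATCH (g:Genre) RETURN count(g)",
    ],
    "database": ["recommendations", "recommendations", "movies"],
    "syntax_error": [False, True, False],
    "timeout": [False, False, False],
})

# Keep rows from the target database that neither failed to parse nor timed out.
mask = (
    (sample["database"] == "recommendations")
    & ~sample["syntax_error"]
    & ~sample["timeout"]
)
subset = (
    sample.loc[mask, ["question", "cypher"]]
    .rename(columns={"question": "input", "cypher": "output"})
    .reset_index(drop=True)
)
print(subset)
```

Only the first row survives: the second is dropped for its syntax error and the third because it belongs to a different database.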

After this step, the DataFrame contains only two columns, input and output. The database we are working with for the training data has a schema.

Schema for this Database

graph_schema = """
Node properties:
- **Movie**
  - `url`: STRING Example: "https://themoviedb.org/movie/862"
  - `runtime`: INTEGER Min:1, Max: 915
  - `revenue`: INTEGER Min: 1, Max: 2787965087
  - `budget`: INTEGER Min: 1, Max: 380000000
  - `imdbRating`: FLOAT Min: 1.6, Max: 9.6
  - `released`: STRING Example: "1995-11-22"
  - `countries`: LIST Min Size: 1, Max Size: 16
  - `languages`: LIST Min Size: 1, Max Size: 19
  - `imdbVotes`: INTEGER Min: 13, Max: 1626900
  - `imdbId`: STRING Example: "0114709"
  - `year`: INTEGER Min: 1902, Max: 2016
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/uXDf"
  - `movieId`: STRING Example: "1"
  - `tmdbId`: STRING Example: "862"
  - `title`: STRING Example: "Toy Story"
- **Genre**
  - `name`: STRING Example: "Adventure"
- **User**
  - `userId`: STRING Example: "1"
  - `name`: STRING Example: "Omar Huffman"
- **Actor**
  - `url`: STRING Example: "https://themoviedb.org/person/1271225"
  - `bornIn`: STRING Example: "France"
  - `bio`: STRING Example: "From Wikipedia, the free encyclopedia Lillian Di"
  - `died`: DATE Example: "1954-01-01"
  - `born`: DATE Example: "1877-02-04"
  - `imdbId`: STRING Example: "2083046"
  - `name`: STRING Example: "François Lallement"
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/6DCW"
  - `tmdbId`: STRING Example: "1271225"
- **Director**
  - `url`: STRING Example: "https://themoviedb.org/person/88953"
  - `bornIn`: STRING Example: "Burchard, Nebraska, USA"
  - `bio`: STRING Example: "Harold Lloyd has been called the cinema’s “first m"
  - `died`: DATE Min: 1930-08-26, Max: 2976-09-29
  - `born`: DATE Min: 1861-12-08, Max: 2018-05-01
  - `imdbId`: STRING Example: "0516001"
  - `name`: STRING Example: "Harold Lloyd"
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/er4Z"
  - `tmdbId`: STRING Example: "88953"
- **Person**
  - `url`: STRING Example: "https://themoviedb.org/person/1271225"
  - `bornIn`: STRING Example: "France"
  - `bio`: STRING Example: "From Wikipedia, the free encyclopedia Lillian Di"
  - `died`: DATE Example: "1954-01-01"
  - `born`: DATE Example: "1877-02-04"
  - `imdbId`: STRING Example: "2083046"
  - `name`: STRING Example: "François Lallement"
  - `poster`: STRING Example: "https://image.tmdb.org/t/p/w440_and_h660_face/6DCW"
  - `tmdbId`: STRING Example: "1271225"
Relationship properties:
- **RATED**
  - `rating: FLOAT` Example: "2.0"
  - `timestamp: INTEGER` Example: "1260759108"
- **ACTED_IN**
  - `role: STRING` Example: "Officer of the Marines (uncredited)"
- **DIRECTED**
  - `role: STRING`
The relationships:
(:Movie)-[:IN_GENRE]->(:Genre)
(:User)-[:RATED]->(:Movie)
(:Actor)-[:ACTED_IN]->(:Movie)
(:Actor)-[:DIRECTED]->(:Movie)
(:Director)-[:DIRECTED]->(:Movie)
(:Director)-[:ACTED_IN]->(:Movie)
(:Person)-[:ACTED_IN]->(:Movie)
(:Person)-[:DIRECTED]->(:Movie)
"""

The schema contains all the Node properties and the Relationships between the nodes that are present in the recommendations graph database. Now, we will convert the examples to an instruction format, so that the model outputs a Cypher query only when it has been instructed to do so. The function for this will be:

prompt = """Given are the instruction below, having an input \
that provides further context.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

token_eos = tokenizer.eos_token

def format_prompt(columns):
    # One instruction, shared by every example, that carries the graph schema
    instructions = (
        "Use the below text to generate a cypher query. "
        f"The schema is given below:\n{graph_schema}"
    )
    inps = columns["input"]
    outs = columns["output"]
    text_list = []
    for inp, out in zip(inps, outs):
        # Append the EOS token so the model learns where the answer ends
        text = prompt.format(instructions, inp, out) + token_eos
        text_list.append(text)
    return { "text" : text_list, }

- Here we first define our Prompt Template. In this template, we start with the instruction, followed by the input, and finally the output.

- Then we create a function called format_prompt(). This takes in the data and extracts the input and output columns from it.

- Then we iterate through each row in the input and output columns and fit them into the Prompt Template.

- Along with that, we append the end-of-sequence token, stored in token_eos, to each Prompt, which tells the model where generation needs to stop.

- We finally return the list containing all these Prompts in a dictionary format.
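The steps above can be tried without loading a model. The sketch below is a stand-alone illustration: EOS and SCHEMA are placeholders for tokenizer.eos_token and the full graph_schema string, the template is shortened, and format_batch is a hypothetical name that mirrors format_prompt().

```python
# Stand-ins so the sketch runs without a model: in the guide these come from
# tokenizer.eos_token and the full graph_schema string.
EOS = "</s>"                            # placeholder end-of-sequence token
SCHEMA = "(:User)-[:RATED]->(:Movie)"   # shortened, illustrative schema

TEMPLATE = """### Instruction:
{}

### Input:
{}

### Response:
{}"""

def format_batch(batch: dict) -> dict:
    """Turn a batch (a dict of column lists) into a dict with a 'text' column."""
    instruction = (
        "Use the below text to generate a cypher query. "
        f"The schema is given below:\n{SCHEMA}"
    )
    texts = [
        TEMPLATE.format(instruction, question, cypher) + EOS
        for question, cypher in zip(batch["input"], batch["output"])
    ]
    return {"text": texts}

batch = {
    "input": ["How many users are there?"],
    "output": ["MATCH (u:User) RETURN count(u)"],
}
result = format_batch(batch)
print(result["text"][0])
```

Printing one formatted example like this is a quick way to confirm that the instruction, question, answer, and end-of-sequence token land where the template expects them.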

This function above will be passed to our dataset to create the final column. The code for this will be:

from datasets import Dataset

dataset = Dataset.from_pandas(df)
dataset = dataset.map(format_prompt, batched = True)

- Here, we start by importing the Dataset class from the datasets library.

- Then we convert our data from a DataFrame to the Dataset type by calling the .from_pandas() method and passing it the DataFrame.

- Now, we will map the function that we have created to create our final dataset for training.

Running the code will create a new column called “text”, which will contain the prompts that we have defined in the format_prompt() function. The dataset has a total of 700 rows and three columns: text, input, and output. With this, we have our data ready for fine-tuning.

Fine-tuning Phi 3 Medium for Text2Cypher Query

We are now ready to fine-tune the Phi 3 Medium on the Cypher Query dataset. In this section, we start by creating our Trainer and the corresponding Training Arguments that we need to train our model on this dataset that we have prepared. The code for this will be:

from trl import SFTTrainer
from transformers import TrainingArguments

trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = sequence_length_maximum,
    dataset_num_proc = 2,
    packing = False,
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 5,
        num_train_epochs=1,
        learning_rate = 2e-4,
        fp16 = True,
        logging_steps = 1,
        optim = "adamw_8bit",
        weight_decay = 0.02,
        lr_scheduler_type = "linear",
        output_dir = "outputs",
    ),
)

- We start by importing the SFTTrainer from the trl library, which we will use to perform Supervised Fine-Tuning.

- We also import the TrainingArguments class from the transformers library to set the training config for the model.

- Then we create an instance of SFTTrainer with various parameters and store it in the trainer variable.

- model = model: Specifies the pre-trained model to be fine-tuned.

- tokenizer = tokenizer: Specifies the tokenizer associated with the model.

- train_dataset = dataset: Sets the dataset that we have prepared for training the model.

- dataset_text_field = “text”: Indicates the field in the dataset that contains the text data.

- max_seq_length = sequence_length_maximum: Here, we provide the maximum sequence length for the model.

- dataset_num_proc = 2: Number of processes to use for preprocessing the dataset.

- packing = False: Disables packing of sequences. When enabled, packing can speed up training on short sequences.
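To make the packing option more concrete, here is a small sketch of the idea behind it: several short tokenized examples are concatenated into one fixed-size block so that fewer slots are wasted on padding. This is a simplified greedy illustration and not the implementation used by trl. The token IDs are made up, pack_sequences is a hypothetical helper, and the comparison assumes each unpacked example is padded to the full block size.

```python
def pack_sequences(sequences, block_size):
    """Greedily concatenate token-id lists into blocks of at most block_size tokens."""
    blocks, current = [], []
    for seq in sequences:
        if len(seq) > block_size:
            raise ValueError("sequence longer than block_size")
        if len(current) + len(seq) > block_size:
            # The current block is full enough; start a new one.
            blocks.append(current)
            current = []
        current = current + seq
    if current:
        blocks.append(current)
    return blocks

# Toy token-id sequences (made-up values) and a tiny block size for readability.
examples = [[1, 2, 3], [4, 5], [6, 7, 8, 9], [10]]
unpacked_slots = len(examples) * 8   # one padded row of 8 slots per example
packed = pack_sequences(examples, block_size=8)
packed_slots = len(packed) * 8

print(packed)
print(unpacked_slots, "token slots without packing vs", packed_slots, "with packing")
```

In this guide packing stays disabled, so each example is trained on as its own sequence.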

While training a Large Language Model or any Deep Learning model, we must set many hyperparameters, and their values affect how well the final model performs. The ones used here are described below.

Different Parameters

- We send two examples to the GPU at a time, so we select a per-device batch size of 2.

- Gradients are accumulated over 4 batches before the weights are updated, so we set the gradient accumulation steps to 4.

- We have set the warmup steps to 5, so the learning rate ramps up gradually and reaches its full value of 2e-4 only after five steps.

- We want to run the training over the whole dataset once, so we set the number of epochs to 1.

- We want to see the metrics after every step, so we set the logging steps to 1, which logs the training loss at each step.

- The optimizer uses the gradients to update the weights so that the training loss decreases. Here we go with the 8-bit AdamW optimizer (adamw_8bit).

- Weight decay is needed so the weights do not grow to extreme values. We gave it a decay value of 0.02.

- The learning rate scheduler changes the learning rate while the training is happening. Here we want it to change linearly, so we gave it the option called “linear”.
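Two of these settings are easier to follow with numbers. The sketch below computes the effective batch size (assuming a single GPU) and the learning rate under linear warmup followed by linear decay, which is how a "linear" schedule with warmup is commonly defined. TOTAL_STEPS is a hypothetical value chosen only for illustration, and linear_schedule_lr is a hypothetical helper rather than a library function.

```python
def linear_schedule_lr(step, base_lr, warmup_steps, total_steps):
    """Learning rate at an optimizer step: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

per_device_batch = 2
grad_accum = 4
# Examples contributing to each weight update, assuming a single GPU.
effective_batch = per_device_batch * grad_accum

TOTAL_STEPS = 100  # hypothetical value, chosen only for this illustration
for step in (0, 1, 5, 50, 100):
    lr = linear_schedule_lr(step, base_lr=2e-4, warmup_steps=5, total_steps=TOTAL_STEPS)
    print(f"step {step:>3}: lr = {lr:.2e}")
print("effective batch size:", effective_batch)
```

The learning rate starts at zero, reaches 2e-4 at step 5, and then falls linearly back to zero by the final step.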

We are now done defining our Trainer and the TrainingArguments for training our quantized Phi 3 Medium 14 billion parameter Large Language Model. Running trainer.train() will start the training.

trainer_stats = trainer.train()

Running the above will start the training process. In Google Colab, working with the free T4 GPU, it takes around 1 hour and 40 minutes to go through 1 epoch on the training data. It took around 95 steps to complete one epoch.

Generating Cypher Query with Phi 3 Medium

We have now finished training the model. Now we will test the model to check how well it generates cypher queries given a text.

FastLanguageModel.for_inference(model)

inputs = tokenizer(
    [
        prompt.format(
            f"Convert text to cypher query based on this schema: \n{graph_schema}",
            "What are the top 5 movies with a runtime greater than 120 minutes",
            "",  # empty response slot for the model to fill in
        )
    ], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128)
print(tokenizer.decode(outputs[0], skip_special_tokens = True))

- We start by preparing the trained model for inference by passing it to the for_inference() method of the FastLanguageModel class.

- Then we call the tokenizer and give it the input Prompt. We work with the same Prompt Template that we have defined and give it the question “What are the top 5 movies with a runtime greater than 120 minutes”, leaving the response slot empty.

- These inputs are then given to the model to generate the output tokens. We set the max new tokens to 128 and store the generated result in the outputs variable.

- Finally, we decode the output tokens and print them.
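Because model.generate() returns the prompt tokens followed by the newly generated ones, the decoded string contains the whole prompt as well as the answer. A small helper can isolate the query. The sketch below assumes the "### Response:" marker from the Prompt Template in this guide; extract_cypher is a hypothetical helper and the decoded text is a made-up example.

```python
RESPONSE_MARKER = "### Response:"

def extract_cypher(decoded: str) -> str:
    """Return the text after the last response marker, stripped of whitespace.

    If the marker is missing, the whole input is returned (stripped).
    """
    _, _, tail = decoded.rpartition(RESPONSE_MARKER)
    return tail.strip()

# Hypothetical decoded output, shaped like the prompt template in this guide.
decoded_example = (
    "### Instruction:\nConvert text to cypher query...\n\n"
    "### Input:\nHow many users are there?\n\n"
    "### Response:\nMATCH (u:User) RETURN count(u)"
)
print(extract_cypher(decoded_example))
```

In the guide's code, the same helper could be applied to the string returned by tokenizer.decode(outputs[0], skip_special_tokens = True).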

Running this code prints the prompt followed by the generated query. We see that the Cypher Query generated by the model matches the ground-truth Cypher Query. Let us test with some more examples to see the performance of the fine-tuned Phi 3 Medium for Cypher Query generation.

inputs = tokenizer(
    [
        prompt.format(
            f"Convert text to cypher query based on this schema: \n{graph_schema}",
            "Which 3 directors have the longest bios in the database?",
            "",  # empty response slot for the model to fill in
        )
    ], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128)
print(tokenizer.decode(outputs[0], skip_special_tokens = True))

inputs = tokenizer(
    [
        prompt.format(
            f"Convert text to cypher query based on this schema: \n{graph_schema}",
            "List the genres that have movies with an imdbRating less than 4.0.",
            "",  # empty response slot for the model to fill in
        )
    ], return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128)
print(tokenizer.decode(outputs[0], skip_special_tokens = True))

In both examples above, the fine-tuned Phi 3 Medium model generated a correct Cypher Query for the provided question. In the first example, Phi 3 Medium did provide the right answer but took a slightly different approach. With this, we can say that fine-tuning Phi 3 Medium on the Cypher dataset has made it slightly more accurate at generating Cypher Queries from text.
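When checking more than a handful of examples by eye, a simple textual comparison against the ground truth can serve as a first filter. The sketch below is a deliberately crude check, assuming that whitespace and a trailing semicolon are the only differences worth ignoring. The queries are made up and both helper names are hypothetical.

```python
import re

def normalize_cypher(query: str) -> str:
    """Collapse whitespace and drop a trailing semicolon; case is left as-is."""
    collapsed = re.sub(r"\s+", " ", query).strip()
    return collapsed.rstrip(";").strip()

def same_query_text(generated: str, reference: str) -> bool:
    """True when two queries are textually identical after normalization."""
    return normalize_cypher(generated) == normalize_cypher(reference)

# Made-up example queries, not taken from the dataset.
generated = "MATCH (m:Movie)\nWHERE m.runtime > 120\nRETURN m.title LIMIT 5;"
reference = "MATCH (m:Movie) WHERE m.runtime > 120 RETURN m.title LIMIT 5"
print(same_query_text(generated, reference))
```

A textual match is stricter than correctness: a query that takes a different approach but returns the same rows would fail this check, so comparing the results of running both queries against the database is the more reliable test.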

Conclusion

This guide has detailed the fine-tuning process of the Phi 3 Medium model for generating Cypher queries from natural language inputs, aimed at enhancing accessibility to Knowledge Graphs like Neo4j. Through leveraging tools like Unsloth for efficient model training and deploying techniques such as LoRA adapters to optimize parameter usage, developers can effectively translate complex data queries into structured Cypher commands.

Key Takeaways

- The Phi 3 family of models developed by Microsoft allows small developers to train these models on their own datasets for different scenarios.

- Unsloth, a Python library, is a great tool for fine-tuning language models, improving both training speed and memory efficiency.

- Creating the environment involves installing the necessary libraries and configuring parameters like the sequence length and data type.

- LoRA is a method that lets us train only a small set of parameters instead of the whole Large Language Model, allowing us to train on consumer hardware.

- Text to Cypher query generation lets developers give Large Language Models access to Graph Databases so they can provide more accurate responses.

Frequently Asked Questions

Q1. What are the benefits of using Phi-3 Medium for this task?

A. Phi-3 Medium is a compact and powerful LLM, making it suitable for developers with limited resources. Fine-tuning allows it to specialize in Cypher query generation, improving accuracy and efficiency.

Q2. What is Unsloth and how does it help?

A. Unsloth is a framework specifically designed to optimize the fine-tuning process for large language models. It offers significant speed and memory usage improvements compared to traditional methods.

Q3. What fine-tuning dataset is required?

A. The guide uses a dataset containing pairs of natural language questions and their corresponding Cypher queries. This dataset helps the model learn the relationship between text and the structured query language.

Q4. How does the fine-tuning process work?

A. The guide outlines steps for setting up the training environment, downloading the pre-trained model, and preparing the dataset. It then details how to fine-tune the model using Unsloth and a specific training configuration.

Q5. How do I generate Cypher queries with the fine-tuned model?

A. Once trained, the model can be used to generate Cypher Query from Text. The guide provides an example of how to structure the input and decode the generated query.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

The above is the detailed content of Finetuning Phi-Medium to Generate Cypher Query from Text. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

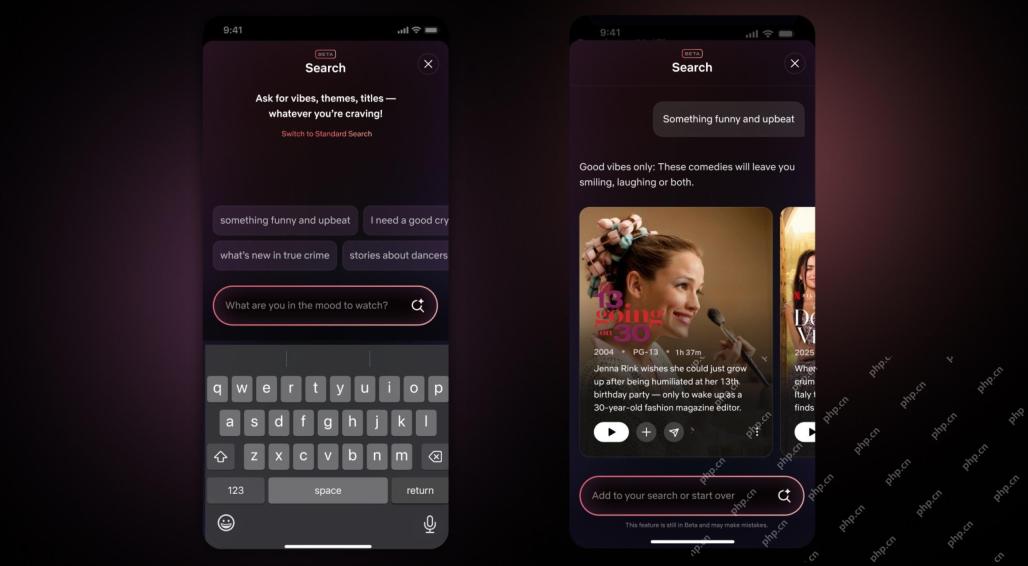

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Linux new version

SublimeText3 Linux latest version

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.