Google DeepMind's Gemma: A Deep Dive into Open-Source LLMs

The AI landscape is buzzing with activity, particularly concerning open-source Large Language Models (LLMs). Tech giants like Google, Meta, and Twitter are increasingly embracing open-source development. Google DeepMind recently unveiled Gemma, a family of lightweight, open-source LLMs built using the same underlying research and technology as Google's Gemini models. This article explores Gemma models, their accessibility via cloud GPUs and TPUs, and provides a step-by-step guide to fine-tuning the Gemma 7b-it model on a role-playing dataset.

Understanding Google's Gemma

Gemma (meaning "precious stone" in Latin) is a family of decoder-only, text-to-text open models developed primarily by Google DeepMind. Inspired by the Gemini models, Gemma is designed for lightweight operation and broad framework compatibility. Google has released model weights for two Gemma sizes: 2B and 7B, each available in pre-trained and instruction-tuned variants (e.g., Gemma 2B-it and Gemma 7B-it). Gemma's performance rivals other open models, notably outperforming Meta's Llama-2 across various LLM benchmarks.

Gemma's versatility extends to its support for multiple frameworks (Keras 3.0, PyTorch, JAX, Hugging Face Transformers) and diverse hardware (laptops, desktops, IoT devices, mobile, and cloud). Inference and supervised fine-tuning (SFT) are possible on free Cloud TPUs using popular machine learning frameworks. Furthermore, Google provides a Responsible Generative AI Toolkit alongside Gemma, offering developers guidance and tools for creating safer AI applications. Beginners in AI and LLMs are encouraged to explore the AI Fundamentals skill track for foundational knowledge.

Accessing Google's Gemma Model

Accessing Gemma is straightforward. Free access is available via HuggingChat and Poe. You can also run it locally by downloading the model weights from Hugging Face and loading them in GPT4All or LM Studio. This guide focuses on using Kaggle's free GPUs and TPUs for inference.

Running Gemma Inference on TPUs

To run Gemma inference on TPUs using Keras, follow these steps:

- Navigate to Keras/Gemma, select the "gemma_instruct_2b_en" model variant, and click "New Notebook."

- In the right panel, select "TPU VM v3-8" as the accelerator.

- Install necessary Python libraries (the keras requirement is quoted so the shell does not treat ">" as a redirect):

!pip install -q tensorflow-cpu
!pip install -q -U keras-nlp tensorflow-hub
!pip install -q -U "keras>=3"
!pip install -q -U tensorflow-text

- Verify TPU availability using jax.devices().

- Set jax as the Keras backend: os.environ["KERAS_BACKEND"] = "jax"

- Load the model using keras_nlp and generate text using the generate function.
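
The TPU steps above can be sketched roughly as follows. This is a minimal sketch, not the notebook's exact code: the helper names format_instruct_prompt and generate_on_tpu are made up for illustration, and the heavy imports are deferred into the function so the snippet loads even where JAX and KerasNLP are not installed. The turn markers follow the prompt format documented for instruction-tuned Gemma.

```python
import os

# Keras reads the backend once, at import time, so set it before importing keras_nlp.
os.environ["KERAS_BACKEND"] = "jax"


def format_instruct_prompt(user_message: str) -> str:
    """Wrap a message in the turn markers used by instruction-tuned Gemma."""
    return (
        f"<start_of_turn>user\n{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


def generate_on_tpu(user_message: str, max_length: int = 128) -> str:
    """Hypothetical helper: load the 2B instruct preset and generate a reply."""
    import jax
    import keras_nlp

    print(jax.devices())  # on a TPU VM v3-8 this should list TPU devices
    model = keras_nlp.models.GemmaCausalLM.from_preset("gemma_instruct_2b_en")
    prompt = format_instruct_prompt(user_message)
    return model.generate(prompt, max_length=max_length)
```

Calling generate_on_tpu("What is a TPU?") in the Kaggle notebook would download the preset on first use, so it needs the Gemma model attached to the notebook or valid Kaggle credentials.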

Running Gemma Inference on GPUs

For GPU inference using Transformers, follow these steps:

- Navigate to google/gemma, select "transformers," choose the "7b-it" variant, and create a new notebook.

- Select GPU T4 x2 as the accelerator.

- Install required packages:

%%capture
%pip install -U bitsandbytes
%pip install -U transformers
%pip install -U accelerate

- Load the model using 4-bit quantization with BitsAndBytes for VRAM management.

- Load the tokenizer.

- Create a prompt, tokenize it, pass it to the model, decode the output, and display the result.
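
A rough sketch of the last three steps is shown below. It is written under assumptions: the model is referenced by the Hub id google/gemma-7b-it (inside a Kaggle notebook you would more likely point at the attached model directory), the helper names load_gemma_4bit and answer are illustrative, and float16 is chosen as the compute dtype on the assumption that the T4 has no fast bfloat16 support.

```python
def load_gemma_4bit(model_id: str = "google/gemma-7b-it"):
    """Load the model in 4-bit NF4 precision so it fits in limited VRAM."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.float16,  # assumption: no bfloat16 on the T4
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=quant_config,
        device_map="auto",  # spread layers across the available GPUs
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    return model, tokenizer


def answer(model, tokenizer, prompt: str, max_new_tokens: int = 100) -> str:
    """Tokenize the prompt, generate, and decode the first returned sequence."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Typical use would be model, tokenizer = load_gemma_4bit() followed by print(answer(model, tokenizer, "What is the best thing about Kaggle?")). The decoded text includes the prompt, because a causal LM returns the input tokens followed by the continuation.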

Fine-Tuning Google's Gemma: A Step-by-Step Guide

This section details fine-tuning Gemma 7b-it on the hieunguyenminh/roleplay dataset using a Kaggle P100 GPU.

Setting Up

- Install necessary packages:

%%capture
%pip install -U bitsandbytes
%pip install -U transformers
%pip install -U peft
%pip install -U accelerate
%pip install -U trl
%pip install -U datasets

- Import required libraries.

- Define variables for the base model, dataset, and fine-tuned model name.

- Log in to Hugging Face CLI using your API key.

- Initialize Weights & Biases (W&B) workspace.
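
The variable definitions and logins above might look like the sketch below. Several details are assumptions rather than part of the original guide: the tokens are read from the environment variables HF_TOKEN and WANDB_API_KEY (on Kaggle you would usually read them from notebook secrets instead), and the fine-tuned model name and W&B project name are placeholders.

```python
import os

base_model = "google/gemma-7b-it"          # assumption: Hub id of the base model
dataset_name = "hieunguyenminh/roleplay"   # dataset named in this guide
new_model = "gemma-7b-it-roleplay"         # hypothetical name for the fine-tuned model


def authenticate(project: str = "gemma-roleplay-finetune"):
    """Log in to Hugging Face and start a W&B run (project name is a placeholder)."""
    from huggingface_hub import login
    import wandb

    login(token=os.environ["HF_TOKEN"])
    wandb.login(key=os.environ["WANDB_API_KEY"])
    return wandb.init(project=project, job_type="training")
```

Keeping the tokens out of the notebook source avoids leaking them when the notebook is shared.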

Loading the Dataset

Load the first 1000 rows of the role-playing dataset.
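
As a small illustration, the datasets library accepts a slice in the split string, so the first 1000 rows can be requested directly. The helper names are hypothetical, and the sketch assumes the dataset exposes a "train" split.

```python
def train_split(n_rows: int = 1000) -> str:
    """Build a split expression selecting the first n_rows of the train split."""
    return f"train[:{n_rows}]"


def load_roleplay_subset(name: str = "hieunguyenminh/roleplay", n_rows: int = 1000):
    """Load only the first n_rows rows of the dataset."""
    from datasets import load_dataset

    return load_dataset(name, split=train_split(n_rows))
```

Working on a small subset keeps a first training run short enough to fit in a single notebook session.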

Loading the Model and Tokenizer

Load the Gemma 7b-it model using 4-bit precision with BitsAndBytes. Load the tokenizer and configure the pad token.
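
One possible shape for this step is sketched below. The function names are illustrative, the Hub id is an assumption, and falling back to the end-of-sequence token for padding is a common convention rather than something the guide specifies. The compute dtype is float16 on the assumption that the P100 lacks fast bfloat16 support.

```python
def configure_tokenizer(tokenizer):
    """Ensure a pad token exists and pad on the right, as is usual for training."""
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    tokenizer.padding_side = "right"
    return tokenizer


def load_for_training(model_id: str = "google/gemma-7b-it"):
    """Load the 4-bit model and a configured tokenizer for fine-tuning."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.float16,
        bnb_4bit_use_double_quant=True,  # quantize the quantization constants too
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_id, quantization_config=quant_config, device_map="auto"
    )
    model.config.use_cache = False  # the KV cache is not useful during training
    tokenizer = configure_tokenizer(AutoTokenizer.from_pretrained(model_id))
    return model, tokenizer
```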

Adding the Adapter Layer

Add a LoRA adapter layer to efficiently fine-tune the model.
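
A minimal sketch with the peft library follows. The rank, alpha, and dropout values are illustrative choices, not the settings used in the original tutorial; the target module names are the projection layers in the Hugging Face Gemma implementation.

```python
# Illustrative LoRA hyperparameters (assumed values, tune for your own run).
LORA_SETTINGS = {
    "r": 64,
    "lora_alpha": 32,
    "lora_dropout": 0.05,
    "bias": "none",
    "task_type": "CAUSAL_LM",
    "target_modules": [
        "q_proj", "k_proj", "v_proj", "o_proj",
        "gate_proj", "up_proj", "down_proj",
    ],
}


def add_lora_adapter(model):
    """Prepare a quantized model for training and attach a LoRA adapter."""
    from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training

    model = prepare_model_for_kbit_training(model)
    return get_peft_model(model, LoraConfig(**LORA_SETTINGS))
```

Only the small adapter matrices are trained while the 4-bit base weights stay frozen, which is what makes fine-tuning a 7B model feasible on a single GPU.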

Training the Model

Define training arguments (hyperparameters) and create an SFTTrainer. Train the model using .train().
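
The sketch below shows one way this could be wired up. Treat it with caution: argument names for SFTTrainer have changed between trl releases, and this version assumes a recent release in which SFTConfig carries dataset_text_field and the tokenizer is passed as processing_class (older releases used tokenizer instead). The hyperparameter values are illustrative, the dataset is assumed to have a "text" column, and model is assumed to already carry the LoRA adapter.

```python
def training_settings(output_dir: str = "gemma-7b-it-roleplay") -> dict:
    """Illustrative hyperparameters for a short single-GPU run (assumed values)."""
    return {
        "output_dir": output_dir,
        "per_device_train_batch_size": 1,
        "gradient_accumulation_steps": 4,
        "num_train_epochs": 1,
        "learning_rate": 2e-4,
        "logging_steps": 10,
        "optim": "paged_adamw_32bit",
        "fp16": True,
        "report_to": "wandb",
        "dataset_text_field": "text",  # assumption: rows store the sample in "text"
    }


def effective_batch_size(settings: dict) -> int:
    """Samples contributing to each optimizer step on one device."""
    return (
        settings["per_device_train_batch_size"]
        * settings["gradient_accumulation_steps"]
    )


def train(model, tokenizer, dataset):
    """Create an SFTTrainer from the settings above and run training."""
    from trl import SFTConfig, SFTTrainer

    trainer = SFTTrainer(
        model=model,
        args=SFTConfig(**training_settings()),
        train_dataset=dataset,
        processing_class=tokenizer,
    )
    trainer.train()
    return trainer
```

Gradient accumulation is used here to reach a larger effective batch size without exceeding the GPU's memory.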

Saving the Model

Save the fine-tuned model locally and push it to the Hugging Face Hub.
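
As a rough sketch, saving and pushing come down to two calls on the trained model. The helper name and the push flag are illustrative, and pushing assumes you are already logged in to Hugging Face with a token that has write access.

```python
def save_and_push(trainer, name: str, push: bool = True) -> None:
    """Save the adapter weights locally and optionally upload them to the Hub."""
    trainer.model.save_pretrained(name)
    if push:
        trainer.model.push_to_hub(name)
```

With a LoRA setup, only the adapter weights are written, so the saved artifact is far smaller than the base model.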

Model Inference

Generate responses using the fine-tuned model.

Gemma 7B Inference with Role Play Adapter

This section demonstrates how to load the base model and the trained adapter, merge them, and generate responses.
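
A minimal sketch of the load-and-merge step is given below, assuming the adapter was saved with peft. The helper name is hypothetical, and the base model is loaded in float16 rather than 4-bit here because merging adapter weights into a quantized model is less straightforward.

```python
def load_merged_model(base_id: str, adapter_id: str):
    """Load the base model, apply the trained adapter, and merge the weights."""
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    base = AutoModelForCausalLM.from_pretrained(
        base_id,
        torch_dtype=torch.float16,
        low_cpu_mem_usage=True,
        device_map="auto",
    )
    model = PeftModel.from_pretrained(base, adapter_id)
    model = model.merge_and_unload()  # fold the LoRA weights into the base layers
    tokenizer = AutoTokenizer.from_pretrained(base_id)
    return model, tokenizer
```

Here adapter_id would be the local directory or Hub repository where the adapter was saved. After merging, the result behaves like an ordinary Transformers model, so it can be used with generate without peft at inference time.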

Final Thoughts

Google's release of Gemma signifies a shift towards open-source collaboration in AI. This tutorial provided a comprehensive guide to using and fine-tuning Gemma models, highlighting the power of open-source development and cloud computing resources. The next step is to build your own LLM-based application using frameworks like LangChain.

The above is the detailed content of Fine Tuning Google Gemma: Enhancing LLMs with Customized Instructions. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

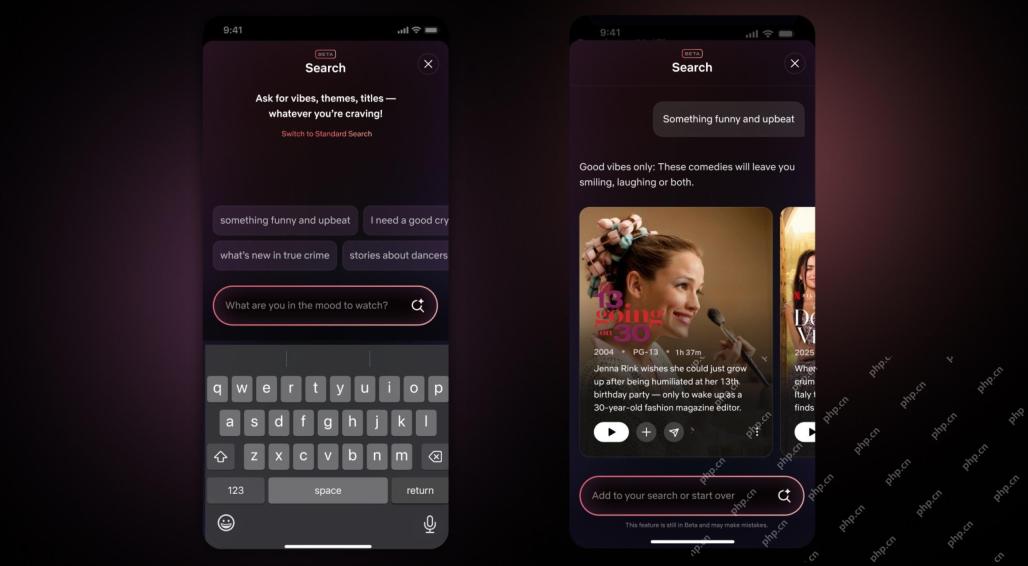

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Zend Studio 13.0.1

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft