
Snowflake Snowpark: A Comprehensive Introduction

- Lisa Kudrow

- 2025-03-07 09:49:08

Snowpark: In-Database Machine Learning with Snowflake

Traditional machine learning often involves moving massive datasets from databases to model training environments. This is increasingly inefficient with today's large datasets. Snowflake Snowpark addresses this by enabling in-database processing. Snowpark provides libraries and runtimes to execute code (Python, Java, Scala) directly within Snowflake's cloud, minimizing data movement and enhancing security.

Why Choose Snowpark?

Snowpark offers several key advantages:

- In-Database Processing: Manipulate and analyze Snowflake data using your preferred language without data transfer.

- Performance Improvement: Leverage Snowflake's scalable architecture for efficient processing.

- Reduced Costs: Minimize infrastructure management overhead.

- Familiar Tools: Integrate with existing tools like Jupyter or VS Code, and utilize familiar libraries (Pandas, Scikit-learn, XGBoost).

Getting Started: A Step-by-Step Guide

This tutorial demonstrates building a hyperparameter-tuned model using Snowpark.

- Virtual Environment Setup: Create a conda environment and install the necessary libraries (snowflake-snowpark-python, pandas, pyarrow, numpy, matplotlib, seaborn, ipykernel).

- Data Ingestion: Import sample data (e.g., the Seaborn Diamonds dataset) into a Snowflake table. (Note: In real-world scenarios, you'll typically work with existing Snowflake databases.)

- Snowpark Session Creation: Establish a connection to Snowflake using your credentials (account name, username, password) stored securely in a config.py file (added to .gitignore).

- Data Loading: Use the Snowpark session to access and load the data into a Snowpark DataFrame.
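The session and data-loading steps can be sketched roughly as follows. This is a minimal sketch, not the tutorial's exact code: the helper names, the default database, schema, and warehouse values, and the DIAMONDS table name are hypothetical placeholders, and the credentials are assumed to come from your own config.py.

```python
from typing import Dict


def build_connection_parameters(
    account: str,
    user: str,
    password: str,
    database: str = "TEST_DB",      # hypothetical placeholder
    schema: str = "PUBLIC",         # hypothetical placeholder
    warehouse: str = "COMPUTE_WH",  # hypothetical placeholder
) -> Dict[str, str]:
    """Assemble the dictionary passed to Session.builder.configs()."""
    return {
        "account": account,
        "user": user,
        "password": password,
        "database": database,
        "schema": schema,
        "warehouse": warehouse,
    }


def create_session(connection_parameters: Dict[str, str]):
    """Open a Snowpark session (requires snowflake-snowpark-python)."""
    # Imported lazily so the helper above works without the package installed.
    from snowflake.snowpark import Session

    return Session.builder.configs(connection_parameters).create()


def load_table(session, table_name: str = "DIAMONDS"):
    """Return a lazy Snowpark DataFrame; no rows are fetched at this point."""
    return session.table(table_name)
```

In a notebook you would typically call create_session(build_connection_parameters(...)) with the values read from config.py, then load_table(session).show(5) to trigger a first query.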

Understanding Snowpark DataFrames

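Snowpark DataFrames operate lazily: they build a logical representation of the operations you chain together and translate it into SQL only when a result is requested. Pandas, by contrast, executes each operation eagerly in local memory. Because the work runs inside Snowflake and the data is not pulled to the client, this can offer significant performance gains, especially with large datasets.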
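To make the idea of lazy evaluation concrete, here is a toy model in plain Python. It is not Snowpark's implementation; the LazyQuery class is a hypothetical stand-in that only records operations and produces SQL text on demand, and it ignores operation order, which a real query planner would not.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LazyQuery:
    """Toy stand-in for a lazy DataFrame: records operations, runs nothing."""

    table: str
    columns: Tuple[str, ...] = ("*",)
    conditions: Tuple[str, ...] = ()

    def select(self, *columns: str) -> "LazyQuery":
        # Returns a new plan; no data is read.
        return LazyQuery(self.table, columns, self.conditions)

    def filter(self, condition: str) -> "LazyQuery":
        # Appends a condition to the plan; still no data is read.
        return LazyQuery(self.table, self.columns, self.conditions + (condition,))

    def to_sql(self) -> str:
        """Translate the recorded plan into a single SQL statement."""
        sql = f"SELECT {', '.join(self.columns)} FROM {self.table}"
        if self.conditions:
            sql += " WHERE " + " AND ".join(self.conditions)
        return sql


plan = LazyQuery("DIAMONDS").filter("PRICE > 1000").select("CARAT", "PRICE")
print(plan.to_sql())
# prints: SELECT CARAT, PRICE FROM DIAMONDS WHERE PRICE > 1000
```

In Snowpark itself, the equivalent of to_sql() happens behind the scenes when you call an action such as collect(), show(), or to_pandas().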

When to Use Snowpark DataFrames:

Use Snowpark DataFrames for large datasets where transferring data to your local machine is impractical. For smaller datasets, Pandas may be sufficient. The to_pandas() method converts a Snowpark DataFrame into a Pandas DataFrame, and session.create_dataframe() goes the other way, turning a Pandas DataFrame into a Snowpark one. The Session.sql() method provides an alternative for executing SQL queries directly.
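A short sketch of these three entry points is shown here. The function names, the DIAMONDS table, and the CUT and PRICE columns are assumptions for illustration; each function expects an already created Snowpark session.

```python
def preview_as_pandas(session, table_name: str, n_rows: int = 1000):
    """Pull at most n_rows of a Snowflake table into a local pandas DataFrame."""
    # limit() is applied inside Snowflake, so only n_rows rows cross the network.
    return session.table(table_name).limit(n_rows).to_pandas()


def upload_pandas(session, pandas_df):
    """Turn a local pandas DataFrame into a Snowpark DataFrame."""
    return session.create_dataframe(pandas_df)


def average_price_by_cut(session, table_name: str = "DIAMONDS"):
    """Run raw SQL; the result is again a lazy Snowpark DataFrame."""
    # CUT and PRICE are assumed column names from the Diamonds dataset.
    return session.sql(
        f"SELECT CUT, AVG(PRICE) AS AVG_PRICE FROM {table_name} GROUP BY CUT"
    )
```

Limiting or aggregating before calling to_pandas() keeps the local copy small, which is usually the point of converting at all.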

Snowpark DataFrame Transformation Functions:

Snowpark's transformation functions (imported as F from snowflake.snowpark.functions) provide a powerful interface for data manipulation. These functions are used with .select(), .filter(), and .with_column() methods.
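As an illustration, the following sketch chains the three methods on a Snowpark DataFrame of the Diamonds data. The function name, the PRICE_PER_CARAT column, and the 1000 threshold are made up for the example, and the uppercase column names are an assumption about how the table was created.

```python
def add_price_per_carat(diamonds_df):
    """Filter, derive a column, and project, all as lazy Snowpark operations."""
    # Imported lazily; requires snowflake-snowpark-python.
    import snowflake.snowpark.functions as F

    return (
        diamonds_df
        # Keep only rows above an arbitrary example threshold.
        .filter(F.col("PRICE") > 1000)
        # Derive a new column from two existing ones, rounded to 2 decimals.
        .with_column(
            "PRICE_PER_CARAT",
            F.round(F.col("PRICE") / F.col("CARAT"), 2),
        )
        # Project the columns of interest.
        .select("CUT", "CARAT", "PRICE", "PRICE_PER_CARAT")
    )
```

Nothing runs in Snowflake until the returned DataFrame is consumed, for example with show() or to_pandas().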

Exploratory Data Analysis (EDA):

EDA can be performed by sampling data from the Snowpark DataFrame, converting it to a Pandas DataFrame, and using visualization libraries like Matplotlib and Seaborn. Alternatively, SQL queries can generate data for visualizations.
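A sample-then-plot workflow could look like the sketch below. The helper names, the default sample size, and the PRICE column are assumptions, and plain Matplotlib is used here for brevity; a Seaborn call would slot into the same place.

```python
def sample_for_eda(snowpark_df, n_rows: int = 5000):
    """Draw a random sample in Snowflake and return it as a pandas DataFrame."""
    return snowpark_df.sample(n=n_rows).to_pandas()


def plot_price_distribution(sample_pdf, column: str = "PRICE"):
    """Histogram of one numeric column from the local pandas sample."""
    # Imported lazily; only needed when a plot is actually drawn.
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.hist(sample_pdf[column], bins=50)
    ax.set_xlabel(column)
    ax.set_ylabel("Count")
    ax.set_title(f"Distribution of {column} (sample)")
    return fig
```

Sampling inside Snowflake first means the plot is built from a few thousand rows rather than the full table.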

Machine Learning Model Training:

-

Data Cleaning: Ensure data types are correct and handle any preprocessing needs (e.g., renaming columns, casting data types, cleaning text features).

-

Preprocessing: Use Snowflake ML's

PipelinewithOrdinalEncoderandStandardScalerto preprocess data. Save the pipeline usingjoblib. -

Model Training: Train an XGBoost model (

XGBRegressor) using the preprocessed data. Split the data into training and testing sets usingrandom_split(). -

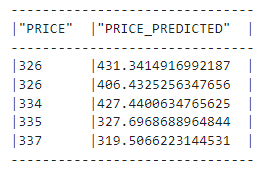

Model Evaluation: Evaluate the model using metrics like RMSE (

mean_squared_errorfromsnowflake.ml.modeling.metrics). -

Hyperparameter Tuning: Use

RandomizedSearchCVto optimize model hyperparameters. -

Model Saving: Save the trained model and its metadata to Snowflake's model registry using the

Registryclass. -

Inference: Perform inference on new data using the saved model from the registry.
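The steps above can be sketched with the Snowflake ML modeling API as follows. Treat this as an outline under assumptions rather than the tutorial's code: the column names, the category values (taken as shipped in the Seaborn dataset, before any text cleaning), the hyperparameter grid, and the DIAMONDS_XGB model name are all illustrative, and saving the pipeline with joblib is omitted.

```python
CATEGORICAL_COLS = ["CUT", "COLOR", "CLARITY"]
NUMERIC_COLS = ["CARAT", "DEPTH", "X", "Y", "Z"]  # assumed subset of features
LABEL_COL = "PRICE"
OUTPUT_COL = "PREDICTED_PRICE"  # hypothetical output column name

# Worst-to-best orderings of the Diamonds categories.
CATEGORY_ORDER = {
    "CUT": ["Fair", "Good", "Very Good", "Premium", "Ideal"],
    "COLOR": ["J", "I", "H", "G", "F", "E", "D"],
    "CLARITY": ["I1", "SI2", "SI1", "VS2", "VS1", "VVS2", "VVS1", "IF"],
}


def build_preprocessing_pipeline():
    """Ordinal-encode the categorical columns and scale the numeric ones."""
    import numpy as np
    from snowflake.ml.modeling.pipeline import Pipeline
    from snowflake.ml.modeling.preprocessing import OrdinalEncoder, StandardScaler

    categories = {col: np.array(order) for col, order in CATEGORY_ORDER.items()}
    return Pipeline(steps=[
        ("encode", OrdinalEncoder(input_cols=CATEGORICAL_COLS,
                                  output_cols=CATEGORICAL_COLS,
                                  categories=categories)),
        ("scale", StandardScaler(input_cols=NUMERIC_COLS,
                                 output_cols=NUMERIC_COLS)),
    ])


def train_and_evaluate(diamonds_df):
    """Split, preprocess, train an XGBRegressor, and report RMSE on the test split."""
    from snowflake.ml.modeling.metrics import mean_squared_error
    from snowflake.ml.modeling.xgboost import XGBRegressor

    train_df, test_df = diamonds_df.random_split(weights=[0.8, 0.2], seed=42)
    pipeline = build_preprocessing_pipeline()
    train_df = pipeline.fit(train_df).transform(train_df)
    test_df = pipeline.transform(test_df)

    model = XGBRegressor(input_cols=CATEGORICAL_COLS + NUMERIC_COLS,
                         label_cols=[LABEL_COL], output_cols=[OUTPUT_COL])
    model.fit(train_df)
    predictions = model.predict(test_df)
    # squared=False returns the root of the mean squared error (RMSE).
    rmse = mean_squared_error(df=predictions, y_true_col_names=LABEL_COL,
                              y_pred_col_names=OUTPUT_COL, squared=False)
    return model, train_df, rmse


def tune(train_df):
    """Randomized search over a small, illustrative hyperparameter grid."""
    from snowflake.ml.modeling.model_selection import RandomizedSearchCV
    from snowflake.ml.modeling.xgboost import XGBRegressor

    search = RandomizedSearchCV(
        estimator=XGBRegressor(),
        param_distributions={"n_estimators": [100, 300, 500],
                             "max_depth": [3, 6, 9],
                             "learning_rate": [0.01, 0.1, 0.3]},
        n_iter=5,
        input_cols=CATEGORICAL_COLS + NUMERIC_COLS,
        label_cols=[LABEL_COL], output_cols=[OUTPUT_COL],
    )
    search.fit(train_df)
    return search


def register_and_predict(session, model, new_data_df):
    """Log the model to the registry, then run inference with the logged version."""
    from snowflake.ml.registry import Registry

    registry = Registry(session=session)
    model_version = registry.log_model(model, model_name="DIAMONDS_XGB",
                                       version_name="V1")
    return model_version.run(new_data_df, function_name="predict")
```

The pipeline is fitted on the training split only and then reused on the test split, so the scaler does not see test statistics during fitting.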

Conclusion:

Snowpark provides a powerful and efficient way to perform in-database machine learning. Its lazy evaluation, integration with familiar libraries, and model registry make it a valuable tool for handling large datasets. Remember to consult the Snowpark API and ML developer guides for more advanced features and functionalities.
