In the rapidly developing field of artificial intelligence, and especially in software engineering, benchmarks that faithfully replicate real-world tasks are essential. Samuel Miserendino and colleagues developed the SWE-Lancer benchmark to assess how well large language models (LLMs) perform freelance software engineering tasks. The benchmark draws on more than 1,400 jobs from Upwork with a combined payout of $1 million USD, and it evaluates both individual contributor (IC) and managerial tasks.

Table of contents

- What is SWE-Lancer Benchmark?

- Features of SWE-Lancer

- Why is SWE-Lancer Important?

- Evaluation Metrics

- Example Tasks

- Individual Contributor (IC) Software Engineering (SWE) Tasks

- SWE Management Tasks

- Model Performance

- Performance Metrics

- Result

- Limitations of SWE-Lancer

- Future Work

- Conclusion

What is SWE-Lancer Benchmark?

SWE-Lancer encompasses a diverse range of tasks, from simple bug fixes to complex feature implementations. The benchmark is structured to provide a realistic evaluation of LLMs by using end-to-end tests that mirror the actual freelance review process. These tests were written and verified by experienced software engineers, ensuring a high standard of evaluation.

Features of SWE-Lancer

- Real-World Payouts: The tasks in SWE-Lancer represent actual payouts to freelance engineers, providing a natural difficulty gradient.

- Management Assessment: The benchmark assesses models' capacity to serve as technical leads by asking them to choose the best implementation proposal submitted by freelance contractors.

- Advanced Full-Stack Engineering: Due to the complexity of real-world software engineering, tasks necessitate a thorough understanding of both front-end and back-end development.

- Better Grading through End-to-End Tests: SWE-Lancer employs end-to-end tests developed by qualified engineers, providing a more thorough assessment than earlier benchmarks that depended on unit tests.

Why is SWE-Lancer Important?

The launch of SWE-Lancer fills a crucial gap in AI research: the ability to assess models on tasks that capture the intricacies of real software engineering jobs. Previous benchmarks often concentrated on isolated tasks and did not adequately reflect the multidimensional character of real-world projects. By using actual freelance jobs, SWE-Lancer offers a more realistic assessment of model performance.

Evaluation Metrics

Models are evaluated on the percentage of tasks resolved and the total payout earned. A task's payout only counts if the task is resolved, and the payout attached to each task serves as a proxy for the difficulty and complexity of the work involved.
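To make the two metrics concrete, here is a minimal Python sketch, assuming each task is recorded with its payout and a single pass/fail outcome. The `TaskResult` type and the outcomes are hypothetical; the payouts are borrowed from the example tasks listed below. It shows how the two numbers can diverge: solving two of three tasks gives a 66.7% resolution rate but only $1,250 of $17,250 in earnings, because the unsolved task is worth the most.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    task_id: str
    payout_usd: float  # freelance payout attached to the task
    resolved: bool     # True if the model's single attempt was graded as correct


def pass_at_1(results: list[TaskResult]) -> float:
    """Fraction of tasks resolved on the first (only) attempt."""
    if not results:
        return 0.0
    return sum(r.resolved for r in results) / len(results)


def earnings(results: list[TaskResult]) -> tuple[float, float]:
    """Return (dollars earned, dollars available); a task pays only if resolved."""
    earned = sum(r.payout_usd for r in results if r.resolved)
    available = sum(r.payout_usd for r in results)
    return earned, available


# Hypothetical outcomes for the three example tasks.
results = [
    TaskResult("reliability-fix", 250, True),
    TaskResult("permissions-bug", 1_000, True),
    TaskResult("video-playback", 16_000, False),
]
print(f"pass@1: {pass_at_1(results):.1%}")          # pass@1: 66.7%
earned, available = earnings(results)
print(f"earned ${earned:,.0f} of ${available:,.0f}")  # earned $1,250 of $17,250
```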

Example Tasks

- $250 Reliability Improvement: Fixing a double-triggered API call.

- $1,000 Bug Fix: Resolving permissions discrepancies.

- $16,000 Feature Implementation: Adding support for in-app video playback across multiple platforms.

The SWE-Lancer dataset contains 1,488 real-world freelance software engineering tasks, drawn from the Expensify open-source repository and originally posted on Upwork. These tasks, with a combined value of $1 million USD, are categorized into two groups:

Individual Contributor (IC) Software Engineering (SWE) Tasks

This subset consists of 764 software engineering tasks, worth a total of $414,775, designed to represent the work of individual contributor software engineers. These tasks involve typical IC duties such as implementing new features and fixing bugs. For each task, a model is provided with:

- A detailed description of the issue, including reproduction steps and the desired behavior.

- A codebase checkpoint representing the state before the issue is fixed.

- The objective of fixing the issue.

The model’s proposed solution (a patch) is evaluated by applying it to the provided codebase and running all associated end-to-end tests using Playwright. Critically, the model does not have access to these end-to-end tests during the solution generation process.

Evaluation flow for IC SWE tasks; the model only earns the payout if all applicable tests pass.
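The all-or-nothing rule in this flow can be sketched in Python as follows. This is an illustrative approximation, not the benchmark's actual harness: `apply_patch_and_test` and the test command passed to it are assumptions, since the article only states that the patch is applied to the codebase and graded with Playwright end-to-end tests.

```python
import subprocess
from pathlib import Path


def all_tests_pass(test_outcomes: dict[str, bool]) -> bool:
    """A task counts as resolved only if there are tests and every one passed."""
    return bool(test_outcomes) and all(test_outcomes.values())


def ic_task_payout(test_outcomes: dict[str, bool], payout_usd: float) -> float:
    """All-or-nothing grading: full payout if all tests pass, otherwise zero."""
    return payout_usd if all_tests_pass(test_outcomes) else 0.0


def apply_patch_and_test(repo: Path, patch: Path, test_cmd: list[str]) -> bool:
    """Hypothetical harness: apply the model's patch, then run the hidden E2E suite.

    `test_cmd` is an assumption (for example ["npx", "playwright", "test"]);
    the benchmark's real runner is not described in this article.
    """
    applied = subprocess.run(["git", "apply", str(patch)], cwd=repo)
    if applied.returncode != 0:
        return False  # a patch that does not apply cannot resolve the task
    tests = subprocess.run(test_cmd, cwd=repo)
    return tests.returncode == 0  # non-zero exit means at least one test failed


print(ic_task_payout({"login_test": True, "playback_test": True}, 1_000))   # 1000
print(ic_task_payout({"login_test": True, "playback_test": False}, 1_000))  # 0.0
```

Requiring a non-empty result set in `all_tests_pass` avoids paying out for a task whose test run produced nothing.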

SWE Management Tasks

This subset, consisting of 724 tasks valued at $585,225, challenges a model to act as a software engineering manager. The model is presented with a software engineering task and must choose the best solution from several proposals. Specifically, the model receives:

- Multiple proposed solutions to the same issue, taken directly from real discussions.

- A snapshot of the codebase as it existed before the issue was resolved.

- The overall objective in selecting the best solution.

The model’s chosen solution is then compared against the actual, ground-truth best solution to evaluate its performance. Importantly, a separate validation study with experienced software engineers confirmed a 99% agreement rate with the original “best” solutions.

Evaluation flow for SWE Manager tasks; during proposal selection, the model has the ability to browse the codebase.
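Grading a manager task reduces to comparing the model's pick with the ground-truth choice. The sketch below assumes proposals are identified by simple string IDs; `ManagerTask`, `grade_manager_choice`, and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ManagerTask:
    task_id: str
    payout_usd: float
    proposal_ids: list[str]  # proposals from the original discussion shown to the model
    ground_truth_id: str     # the ground-truth best proposal


def grade_manager_choice(task: ManagerTask, chosen_id: str) -> float:
    """Pay out only if the model selects the ground-truth best proposal."""
    if chosen_id not in task.proposal_ids:
        raise ValueError(f"{chosen_id!r} is not one of the offered proposals")
    return task.payout_usd if chosen_id == task.ground_truth_id else 0.0


task = ManagerTask("hypothetical-123", 2_000, ["p1", "p2", "p3"], ground_truth_id="p2")
print(grade_manager_choice(task, "p2"))  # 2000
print(grade_manager_choice(task, "p1"))  # 0.0
```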


Model Performance

The benchmark has been tested on several state-of-the-art models, including OpenAI’s GPT-4o, o1 and Anthropic’s Claude 3.5 Sonnet. The results indicate that while these models show promise, they still struggle with many tasks, particularly those requiring deep technical understanding and context.

Performance Metrics

- Claude 3.5 Sonnet: Achieved a score of 26.2% on IC SWE tasks and 44.9% on SWE Management tasks, earning a total of $208,050 out of $500,800 possible on the SWE-Lancer Diamond set.

- GPT-4o: Showed the lowest performance of the three models, particularly on IC SWE tasks, highlighting the challenges LLMs face in real-world applications.

- o1: Performed between the other two models, better than GPT-4o but behind Claude 3.5 Sonnet, earning over $380K on the full dataset.

Total payouts earned by each model on the full SWE-Lancer dataset including both IC SWE and SWE Manager tasks.
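The Claude 3.5 Sonnet Diamond figures quoted above can be expressed as a share of the available payouts. This dollar-weighted share is a different quantity from the pass@1 percentages: each task contributes in proportion to its payout rather than equally.

```python
# Figures quoted above for Claude 3.5 Sonnet on the SWE-Lancer Diamond set.
earned_usd = 208_050
available_usd = 500_800

share = earned_usd / available_usd
print(f"{share:.1%} of available Diamond payouts")  # 41.5% of available Diamond payouts
```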

Result

This table shows the performance of different language models (GPT-4o, o1, Claude 3.5 Sonnet) on the SWE-Lancer dataset, broken down by task type (IC SWE, SWE Manager) and dataset size (Diamond, Full). It compares their "pass@1" accuracy (how often the model's first and only submitted solution is correct) and earnings (based on task value). The "User Tool" column indicates whether the model had access to the benchmark's user tool, which lets it interact with the running application to check its work. "Reasoning Effort" reflects the level of effort allowed for solution generation. Overall, Claude 3.5 Sonnet generally achieves the highest pass@1 accuracy and earnings across task types and dataset sizes, while access to the user tool and higher reasoning effort tend to improve performance.

The table displays performance metrics, specifically “pass@1” accuracy and earnings. Overall metrics for the Diamond and Full SWE-Lancer sets are highlighted in blue, while baseline performance for the IC SWE (Diamond) and SWE Manager (Diamond) subsets are highlighted in green.

Limitations of SWE-Lancer

SWE-Lancer, while valuable, has several limitations:

- Diversity of Repositories and Tasks: Tasks were sourced solely from Upwork and the Expensify repository. This limits the evaluation's scope; infrastructure engineering tasks, in particular, are underrepresented.

- Scope: Freelance tasks are often more self-contained than full-time software engineering tasks. Although the Expensify repository reflects real-world engineering, caution is needed when generalizing findings beyond freelance contexts.

- Modalities: The evaluation is text-only and does not consider how visual aids such as screenshots or videos might enhance model performance.

- Environments: Models cannot ask clarifying questions, which may hinder their understanding of task requirements.

- Contamination: Because the tasks are public, contamination is possible. To ensure accurate evaluations, browsing should be disabled and post-hoc filtering for cheating is essential. Analysis indicates that contamination has limited impact, even for tasks that predate the models' knowledge cutoffs.
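One simple way to probe contamination, sketched here under assumptions rather than as the paper's exact procedure, is to split tasks by their posting date relative to a model's knowledge cutoff and compare pass rates on each side. A much higher rate on older tasks would hint at memorization. `DatedResult`, `contamination_split`, the cutoff date, and the results are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DatedResult:
    task_id: str
    posted: date    # when the task was originally posted
    resolved: bool  # whether the model solved it


def pass_rate(rows: list[DatedResult]) -> Optional[float]:
    """Fraction resolved, or None if the group is empty."""
    if not rows:
        return None
    return sum(r.resolved for r in rows) / len(rows)


def contamination_split(rows: list[DatedResult], cutoff: date) -> dict[str, Optional[float]]:
    """Compare pass rates on tasks posted on/before vs. after a knowledge cutoff."""
    before = [r for r in rows if r.posted <= cutoff]
    after = [r for r in rows if r.posted > cutoff]
    return {"before_cutoff": pass_rate(before), "after_cutoff": pass_rate(after)}


# Hypothetical results and cutoff, for illustration only.
rows = [
    DatedResult("t1", date(2023, 1, 5), True),
    DatedResult("t2", date(2023, 3, 1), False),
    DatedResult("t3", date(2024, 6, 1), True),
    DatedResult("t4", date(2024, 8, 1), False),
]
print(contamination_split(rows, cutoff=date(2023, 10, 1)))
# {'before_cutoff': 0.5, 'after_cutoff': 0.5}
```

With real data, a comparable rate on both sides (as in this toy example) is the pattern consistent with limited contamination.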

Future Work

SWE-Lancer presents several opportunities for future research:

- Economic Analysis: Future studies could investigate the societal impacts of autonomous agents on labor markets and productivity, comparing freelancer payouts to API costs for task completion.

- Multimodality: Multimodal inputs, such as screenshots and videos, are not supported by the current framework. Future analyses that include these components may offer a more thorough appraisal of the model’s performance in practical situations.
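As a starting point for the economic comparison mentioned above, the sketch below tallies freelancer payouts earned against API spend. The per-task API costs are hypothetical placeholders (in practice they would come from token counts and provider pricing), and `RunCost` and `summarize` are illustrative names, not part of the benchmark.

```python
from dataclasses import dataclass


@dataclass
class RunCost:
    task_id: str
    payout_usd: float    # freelancer payout for the task
    resolved: bool       # whether the model's attempt passed grading
    api_cost_usd: float  # hypothetical API spend for the attempt


def summarize(runs: list[RunCost]) -> dict[str, float]:
    earned = sum(r.payout_usd for r in runs if r.resolved)
    spent = sum(r.api_cost_usd for r in runs)  # failed attempts still cost money
    return {
        "earned_usd": earned,
        "api_cost_usd": spent,
        "net_usd": earned - spent,
        # payout earned per dollar of API spend; undefined (NaN) if nothing was spent
        "payout_per_api_dollar": earned / spent if spent > 0 else float("nan"),
    }


runs = [
    RunCost("t1", 250, True, 1.20),
    RunCost("t2", 1_000, False, 3.50),
    RunCost("t3", 500, True, 2.30),
]
print(summarize(runs))
```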

You can find the full research paper here.

Conclusion

SWE-Lancer represents a significant advancement in the evaluation of LLMs for software engineering tasks. By incorporating real-world freelance tasks and rigorous testing standards, it provides a more accurate assessment of model capabilities. The benchmark not only facilitates research into the economic impact of AI in software engineering but also highlights the challenges that remain in deploying these models in practical applications.


One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

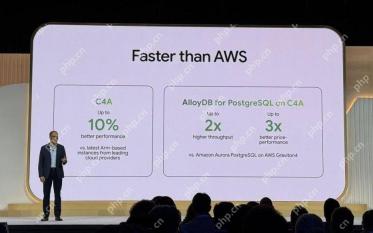

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Atom editor mac version download

The most popular open source editor

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Zend Studio 13.0.1

Powerful PHP integrated development environment