In this blog post, we’ll build a multilingual code explanation app to showcase Llama 3.3’s capabilities, particularly its strengths in reasoning, following instructions, coding, and multilingual support.

This app will allow users to:

- Input a code snippet in any programming language.

- Choose a language for the explanation (English, Spanish, French, etc.).

- Generate a beginner-friendly explanation of the code.

The app will be built using:

- Llama 3.3 from Hugging Face for processing.

- Streamlit for the user interface.

- Hugging Face Inference API for integration.

We’ll jump straight to building our Llama 3.3 app, but if you’d like an overview of the model first, check out this guide on Llama 3.3. Let’s get started!

Setting Up Llama 3.3

To get started, we’ll break this into a few steps. First, we’ll cover how to access Llama 3.3 using Hugging Face, set up your account, and get the necessary permissions. Then, we’ll create the project environment and install the required dependencies.

Accessing Llama 3.3 on Hugging Face

One way to access Llama 3.3 is via Hugging Face, one of the most popular platforms for hosting machine learning models. To use Llama 3.3 via Hugging Face’s Inference API, you’ll need:

- A Hugging Face account: go to Hugging Face and sign up if you don’t already have one.

- An API token: once signed in, go to your Access Tokens page, generate a new Read token, and copy it somewhere secure.

- Access to the model: navigate to the Llama-3.3-70B-Instruct page, agree to the license terms, and request access.

- Note: Access to this model requires a Pro subscription. Ensure your account is upgraded.

Prepare your project environment

With access to the model secured, let’s set up the app’s environment. First, we are going to create a folder for this project. Open your terminal, navigate to where you want to create your project folder, and run:

mkdir multilingual-code-explanation
cd multilingual-code-explanation

Then, we’ll create a file called app.py to hold the code:

touch app.py

Now, we create a virtual environment and activate it:

python3 -m venv venv
source venv/bin/activate

Install required dependencies

Now that the environment is ready, let’s install the necessary libraries. Make sure that you are running Python 3.8 or later. In the terminal, run the following command to install Streamlit, Requests, and the Hugging Face libraries:

pip install streamlit requests transformers huggingface-hub

By now, you should have:

- A Hugging Face account with an API token and access to the Llama 3.3 model.

- A clean project folder ready for coding.

- All the necessary libraries installed, including:

  - Streamlit for the interface.

  - Requests for making API calls.

  - Transformers and huggingface-hub for interacting with Hugging Face models.

Now that the setup is complete, we’re ready to build the app! In the next section, we’ll start coding the multilingual code explanation app step by step.

Write the Backend

The backend communicates with the Hugging Face API to send the code snippet and receive the explanation.

Import required libraries

First, we need to import the requests library. This library allows us to send HTTP requests to APIs. At the top of your app.py file, write:

import requests

Set up API access

To interact with the Llama 3.3 API hosted on Hugging Face, you need:

- The API endpoint (URL where the model is hosted).

- Your Hugging Face API key for authentication.

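HUGGINGFACE_API_KEY = "hf_your_api_key_here"  # Replace with your actual API key
API_URL = "https://api-inference.huggingface.co/models/meta-llama/Llama-3.3-70B-Instruct"
HEADERS = {"Authorization": f"Bearer {HUGGINGFACE_API_KEY}"}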

In the code above:

- Replace "hf_your_api_key_here" with the token you generated earlier.

- The HEADERS dictionary includes the API key so Hugging Face knows you’re authorized to use the endpoint.
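Hardcoding the token is fine for a quick local test, but it is easy to leak if the file is shared. Here is a minimal sketch of one alternative, assuming the token has been exported in an environment variable called HF_API_KEY. Both that variable name and the build_headers helper are chosen here for illustration; neither comes from the tutorial or from Hugging Face.

```python
import os


def build_headers(env_var: str = "HF_API_KEY") -> dict:
    """Build the Authorization header from an environment variable.

    The variable name is an assumption made for this sketch.
    """
    api_key = os.environ.get(env_var, "")
    if not api_key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Hugging Face token."
        )
    return {"Authorization": f"Bearer {api_key}"}
```

With this in place, HEADERS = build_headers() could stand in for the hardcoded dictionary.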

Write the function to query Llama 3.3

Now, we’ll write a function to send a request to the API. The function will:

- Construct a prompt that tells the model what to do.

- Send the request to Hugging Face.

- Handle the response and extract the generated explanation.

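def query_llama3(input_text, language):
    # Create the prompt
    prompt = (
        f"Provide a simple explanation of this code in {language}:\n\n{input_text}\n"
        f"Only output the explanation and nothing else. Make sure that the output is written in {language} and only in {language}"
    )

    # Payload for the API
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": 500, "temperature": 0.3},
    }

    # Make the API request
    response = requests.post(API_URL, headers=HEADERS, json=payload)

    if response.status_code == 200:
        result = response.json()
        # Extract the response text
        full_response = result[0]["generated_text"] if isinstance(result, list) else result.get("generated_text", "")
        # Clean up: Remove the prompt itself from the response
        clean_response = full_response.replace(prompt, "").strip()
        # Further clean any leading colons or formatting
        if ":" in clean_response:
            clean_response = clean_response.split(":", 1)[-1].strip()
        return clean_response or "No explanation available."
    else:
        return f"Error: {response.status_code} - {response.text}"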

The prompt tells Llama 3.3 to explain the code snippet in the desired language.

Disclaimer: I experimented with different prompts to find the one that produced the best output, so there was definitely an element of prompt engineering involved!

Next, the payload is defined. For the input, we specify that the prompt is sent to the model. In the parameters, max_new_tokens controls the response length, while temperature adjusts the output's creativity level.

The requests.post() function sends the data to Hugging Face. If the response is successful (status_code == 200), the generated text is extracted. If there’s an error, a descriptive message is returned.

Finally, there are steps to clean and format the output properly. This ensures it is presented neatly, which significantly improves the user experience.

Build the Streamlit Frontend

The frontend is where users will interact with the app. Streamlit is a library that creates interactive web apps with just Python code, which makes this process simple and intuitive, so it is what we will use to build the frontend of our app. I really like using Streamlit to build demos and proofs of concept!

Import Streamlit

At the top of your app.py file, add:

import streamlit as st

Set up the page configuration

We will use set_page_config() to define the app title and layout. In the code below:

- page_title: Sets the browser tab title.

- layout="wide": Allows the app to use the full screen width.

st.set_page_config(page_title="Multilingual Code Explanation Assistant", layout="wide")

Create sidebar instructions

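To help users understand how to use the app, we are going to add instructions to the sidebar. In the code below:

- st.sidebar.title(): Creates a title for the sidebar.

- st.sidebar.markdown(): Adds text with simple instructions.

- st.sidebar.divider(): Adds a clean visual separation.

- Custom HTML: Displays a small footer at the bottom with a personal touch. Feel free to personalise this bit!

st.sidebar.title("How to Use the App")
st.sidebar.markdown("""
1. Paste your code snippet into the input box.
2. Enter the language you want the explanation in (e.g., English, Spanish, French).
3. Click 'Generate Explanation' to see the results.
""")
st.sidebar.divider()
st.sidebar.markdown(
    """
<div>
Made with ♡ by Ana
</div>
""",
    unsafe_allow_html=True
)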

Add main app components

We are going to add the main title and subtitle to the page:


Now, to let users paste code and choose their preferred language, we need input fields. Because the code text is likely to be longer than the name of the language, we are choosing a text area for the code and a text input for the language:

- text_area(): Creates a large box for pasting code.

- text_input(): Allows users to type in the language.


We now add a button to generate the explanation. If the user enters the code and the language and then clicks the Generate Explanation button, then an answer is generated.


When the button is clicked, the app:

- Checks if the code snippet and language are provided.

- Displays a spinner while querying the API.

- Shows the generated explanation or a warning if input is missing.

Add a footer

To wrap up, let’s add a footer:


Run the Llama 3.3 App

It is time to run the app! To launch your app, run this code in the terminal:

streamlit run app.py

The app will open in your browser, and you can start playing with it!

Testing Llama 3.3 in Action

Now that we’ve built our multilingual code explanation app, it’s time to test how well the model works. In this section, we’ll use the app to process a couple of code snippets and evaluate the explanations generated in different languages.

Test 1: Factorial function in Python

For our first test, let’s start with a Python script that calculates the factorial of a number using recursion. Here’s the code we’ll use:
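The exact listing is not preserved in this copy, so the script below is a reconstruction based on the description in this section: a recursive factorial(n) that stops at 0, a value num = 5, and a print() call. The wording of the printed message is an assumption.

```python
def factorial(n):
    # Base case: 0! is defined as 1
    if n == 0:
        return 1
    # Recursive case: n! = n * (n - 1)!
    return n * factorial(n - 1)


num = 5
result = factorial(num)
print(f"The factorial of {num} is {result}")  # prints: The factorial of 5 is 120
```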


This script defines a recursive function factorial(n) that calculates the factorial of a given number. For num = 5, the function will compute 5×4×3×2×1, resulting in 120. The result is printed to the screen using the print() statement. Here is the output when we generate an explanation in Spanish:

As a Spanish speaker, I can confirm that the explanation correctly identifies that the code calculates the factorial of a number using recursion. It walks through how the recursion works step by step, breaking it down into simple terms.

The model explains the recursion process and shows how the function calls itself with decreasing values of n until it reaches 0.

The explanation is entirely in Spanish, as requested, demonstrating Llama 3.3’s multilingual capabilities.

The use of simple phrases makes the concept of recursion easy to follow, even for readers unfamiliar with programming.

It closes with a summary, mentions how the recursion works for other inputs such as 3, and notes the importance of recursion as an efficient problem-solving concept in programming.

This first test highlights the power of Llama 3.3:

- It accurately explains code in a step-by-step manner.

- The explanation adapts to the requested language (Spanish in this case).

- The results are detailed, clear, and beginner-friendly, as instructed.

Now that we’ve tested a Python script, we can move on to other programming languages like JavaScript or SQL. This will help us further explore Llama 3.3’s capabilities across reasoning, coding, and multilingual support.

Test 2: Factorial function in JavaScript

In this test, we’ll evaluate how well the multilingual code explanation app handles a JavaScript function and generates an explanation in French.

We use the following JavaScript code snippet in which I have intentionally chosen ambiguous variables to see how well the model handles this:
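The snippet itself is missing from this copy. The version below is reconstructed from the description in this section (function x(a), base case a === 1, y set to 6, result stored in z and logged), so details such as const versus let and the brace style are assumptions. It is held in a Python string, under the hypothetical name JS_SNIPPET, so it can be pasted into the app or passed straight to query_llama3.

```python
# JavaScript test snippet kept as a Python string
JS_SNIPPET = """
function x(a) {
  if (a === 1) {
    return 1;
  }
  return a * x(a - 1);
}

const y = 6;
const z = x(y);
console.log(z);
""".strip()

print(JS_SNIPPET)
```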


This code snippet defines a recursive function x(a) that calculates the factorial of a given number a. The base condition checks if a === 1. If so, it returns 1. Otherwise, the function calls itself with a - 1 and multiplies the result by a. The constant y is set to 6, so the function x calculates 6×5×4×3×2×1. Finally, the result is stored in the variable z and displayed using console.log. Here is the output and the translation in English:

Note: The response appears to be cut off mid-sentence. This is because we limited the output to 500 tokens with max_new_tokens.

After translating this, I concluded that the explanation correctly identifies that the function x(a) is recursive. It breaks down how recursion works, explaining the base case (a === 1) and the recursive case (a * x(a - 1)). The explanation explicitly shows how the function calculates the factorial of 6 and mentions the roles of y (the input value) and z (the result). It also notes how console.log is used to display the result.

The explanation is entirely in French, as requested. Technical terms like “récursive” (recursive), “factorielle” (factorial), and “produit” (product) are used correctly. Despite the ambiguous names, the model also identifies that the code calculates the factorial of a number recursively.

The explanation avoids overly technical jargon and simplifies recursion, making it accessible to readers new to programming.

This test demonstrates that Llama 3.3:

- Accurately explains JavaScript code involving recursion.

- Generates clear and detailed explanations in French.

- Adapts its explanation to include variable roles and code behavior.

Now that we’ve tested the app with Python and JavaScript, let’s move on to testing it with a SQL query to further evaluate its multilingual and reasoning capabilities.

Test 3: SQL query in German

In this last test, we’ll evaluate how the multilingual code explanation app handles a SQL query and generates an explanation in German. Here’s the SQL snippet used:
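The query text is missing from this copy. The version below is reconstructed from the clause-by-clause description in this section, so the exact formatting is an assumption. To show what it returns, the sketch runs it with Python's built-in sqlite3 module against a tiny in-memory dataset that is invented purely for illustration.

```python
import sqlite3

SQL_QUERY = """
SELECT a.id, SUM(b.value) AS total_amount
FROM table_x AS a
JOIN table_y AS b ON a.ref_id = b.ref
WHERE b.flag = 1
GROUP BY a.id
HAVING SUM(b.value) > 1000
ORDER BY total_amount DESC;
"""

# Invented sample data, used only to exercise the query
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE table_x (id INTEGER, ref_id INTEGER);
CREATE TABLE table_y (ref INTEGER, value INTEGER, flag INTEGER);
INSERT INTO table_x VALUES (1, 10), (2, 20), (3, 30);
INSERT INTO table_y VALUES
    (10, 800, 1), (10, 400, 1),   -- id 1: total 1200
    (20, 900, 1), (20, 500, 0),   -- id 2: only 900 is flagged, dropped by HAVING
    (30, 2000, 1);                -- id 3: total 2000
""")

rows = conn.execute(SQL_QUERY).fetchall()
print(rows)  # prints: [(3, 2000), (1, 1200)]
conn.close()
```

Only the SQL_QUERY string needs to be pasted into the app; the rest is scaffolding to check the query's behavior.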


This query selects the id column and calculates the total value (SUM(b.value)) for each id. It reads data from two tables: table_x (aliased as a) and table_y (aliased as b). It then uses a JOIN condition to connect rows where a.ref_id = b.ref. It filters rows where b.flag = 1 and groups the data by a.id. The HAVING clause filters the groups to include only those where the sum of b.value is greater than 1000. Finally, it orders the results by total_amount in descending order.

After hitting the generate explanation button, this is what we get:

The generated explanation is concise, accurate, and well-structured. Each key SQL clause (SELECT, FROM, JOIN, WHERE, GROUP BY, HAVING, and ORDER BY) is clearly explained. Also, the description matches the order of execution in SQL, which helps readers follow the query logic step by step.

The explanation is entirely in German, as requested.

Key SQL terms (e.g., "filtert", "gruppiert", "sortiert") are used accurately in context. The explanation identifies that HAVING is used to filter grouped results, which is a common source of confusion for beginners. It also explains the use of aliases (AS) to rename tables and columns for clarity.

The explanation avoids overly complex terminology and focuses on the function of each clause. This makes it easy for beginners to understand how the query works.

This test demonstrates that Llama 3.3:

- Handles SQL queries effectively.

- Generates clause-by-clause explanations that are clear and structured.

- Supports German as an output language.

- Provides enough detail to help beginners understand the logic behind SQL queries.

We’ve tested the app with code snippets in Python, JavaScript, and SQL, generating explanations in Spanish, French, and German. In every test:

- The explanations were accurate, clear, and detailed.

- The model demonstrated strong reasoning skills and multilingual support.

With these tests, we’ve confirmed that the app we have built is versatile, reliable, and effective for explaining code across different programming languages and natural languages.

Conclusion

Congratulations! You’ve built a fully functional multilingual code explanation assistant using Streamlit and Llama 3.3 from Hugging Face.

In this tutorial, you learned:

- How to integrate Hugging Face models into a Streamlit app.

- How to use the Llama 3.3 API to explain code snippets.

- How to clean up the API response and create a user-friendly app.

This project is a great starting point for exploring Llama 3.3’s capabilities in code reasoning, multilingual support, and instructional content. Feel free to create your own app to continue exploring this model's powerful features!

The above is the detailed content of Llama 3.3: Step-by-Step Tutorial With Demo Project. For more information, please follow other related articles on the PHP Chinese website!

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AM

7 Powerful AI Prompts Every Project Manager Needs To Master NowMay 08, 2025 am 11:39 AMGenerative AI, exemplified by chatbots like ChatGPT, offers project managers powerful tools to streamline workflows and ensure projects stay on schedule and within budget. However, effective use hinges on crafting the right prompts. Precise, detail

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AM

Defining The Ill-Defined Meaning Of Elusive AGI Via The Helpful Assistance Of AI ItselfMay 08, 2025 am 11:37 AMThe challenge of defining Artificial General Intelligence (AGI) is significant. Claims of AGI progress often lack a clear benchmark, with definitions tailored to fit pre-determined research directions. This article explores a novel approach to defin

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AM

IBM Think 2025 Showcases Watsonx.data's Role In Generative AIMay 08, 2025 am 11:32 AMIBM Watsonx.data: Streamlining the Enterprise AI Data Stack IBM positions watsonx.data as a pivotal platform for enterprises aiming to accelerate the delivery of precise and scalable generative AI solutions. This is achieved by simplifying the compl

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AM

The Rise of the Humanoid Robotic Machines Is Nearing.May 08, 2025 am 11:29 AMThe rapid advancements in robotics, fueled by breakthroughs in AI and materials science, are poised to usher in a new era of humanoid robots. For years, industrial automation has been the primary focus, but the capabilities of robots are rapidly exp

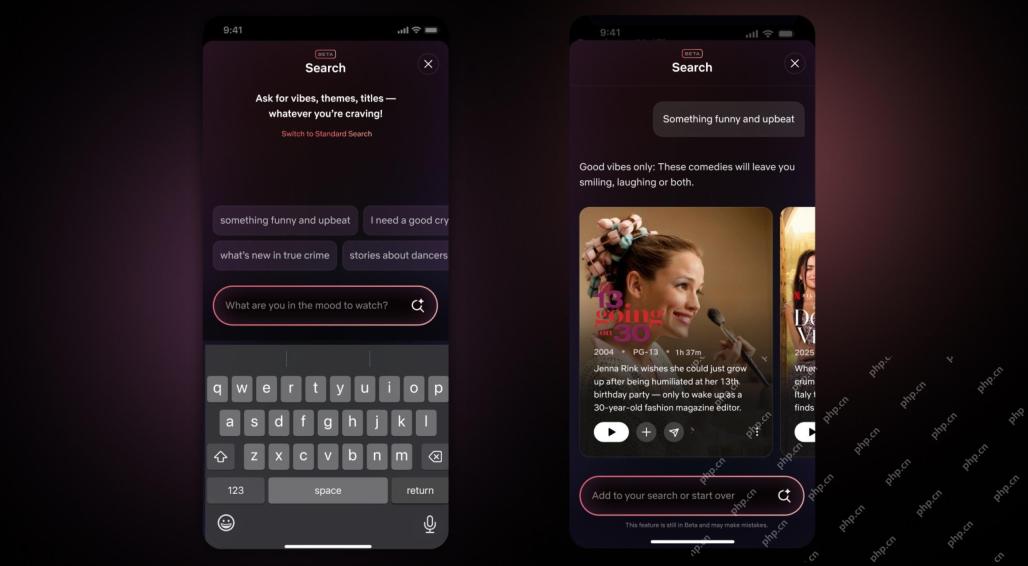

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AM

Netflix Revamps Interface — Debuting AI Search Tools And TikTok-Like DesignMay 08, 2025 am 11:25 AMThe biggest update of Netflix interface in a decade: smarter, more personalized, embracing diverse content Netflix announced its largest revamp of its user interface in a decade, not only a new look, but also adds more information about each show, and introduces smarter AI search tools that can understand vague concepts such as "ambient" and more flexible structures to better demonstrate the company's interest in emerging video games, live events, sports events and other new types of content. To keep up with the trend, the new vertical video component on mobile will make it easier for fans to scroll through trailers and clips, watch the full show or share content with others. This reminds you of the infinite scrolling and very successful short video website Ti

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AM

Long Before AGI: Three AI Milestones That Will Challenge YouMay 08, 2025 am 11:24 AMThe growing discussion of general intelligence (AGI) in artificial intelligence has prompted many to think about what happens when artificial intelligence surpasses human intelligence. Whether this moment is close or far away depends on who you ask, but I don’t think it’s the most important milestone we should focus on. Which earlier AI milestones will affect everyone? What milestones have been achieved? Here are three things I think have happened. Artificial intelligence surpasses human weaknesses In the 2022 movie "Social Dilemma", Tristan Harris of the Center for Humane Technology pointed out that artificial intelligence has surpassed human weaknesses. What does this mean? This means that artificial intelligence has been able to use humans

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AM

Venkat Achanta On TransUnion's Platform Transformation And AI AmbitionMay 08, 2025 am 11:23 AMTransUnion's CTO, Ranganath Achanta, spearheaded a significant technological transformation since joining the company following its Neustar acquisition in late 2021. His leadership of over 7,000 associates across various departments has focused on u

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AM

When Trust In AI Leaps Up, Productivity FollowsMay 08, 2025 am 11:11 AMBuilding trust is paramount for successful AI adoption in business. This is especially true given the human element within business processes. Employees, like anyone else, harbor concerns about AI and its implementation. Deloitte researchers are sc

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 English version

Recommended: Win version, supports code prompts!

Zend Studio 13.0.1

Powerful PHP integrated development environment