
1 Outstanding Paper, 5 Orals! How strong is ByteDance at ACL this year? Join the livestream to chat!

- WBOY, Original

- 2024-08-15 16:32:32

The focus of the academic community this week is undoubtedly ACL 2024, held in Bangkok, Thailand. The conference drew many outstanding researchers from around the world to discuss and share their latest results.

Official data shows that this year's ACL received nearly 5,000 paper submissions, of which 940 were accepted to the main conference and 168 were selected for oral presentation (Oral), an oral selection rate of less than 3.4%. Five of ByteDance's papers were selected as Orals.

In the Paper Awards session on the afternoon of August 14, the organizers officially announced ByteDance's paper "G-DIG: Towards Gradient-based DIverse and high-quality Instruction Data Selection for Machine Translation" as an Outstanding Paper (1 of 35).

ACL 2024 on-site photos

Looking back to ACL 2021, ByteDance won the sole Best Paper award. That was only the second time since ACL was founded that a team of Chinese scientists had taken the top prize.


To discuss this year's cutting-edge research in depth, we have invited the core authors of ByteDance's papers to present and explain their work. Next Tuesday, August 20, from 19:00 to 21:00, the "ByteDance ACL 2024 Cutting-edge Paper Sharing Session" will be broadcast live online!

Wang Mingxuan, leader of the Doubao language model research team, will join ByteDance researchers Huang Zhichao, Zheng Zaixiang, Li Chaowei, and Zhang Xinbo, along with a mystery guest from the Outstanding Paper, to share some of the exciting results and research directions from ACL. Topics span natural language processing, speech processing, multimodal learning, large model reasoning, and other fields. You are welcome to reserve a spot!

Event Agenda

Interpretation of Selected Papers

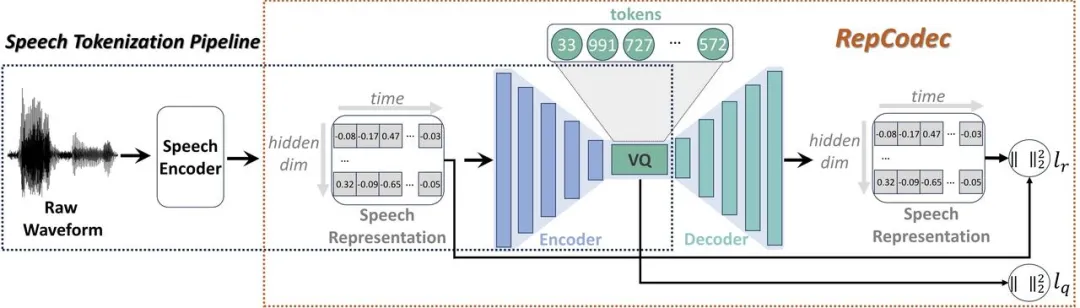

With the recent rapid development of large language models (LLMs), discrete speech tokenization plays an important role in injecting speech into LLMs. However, this discretization leads to a loss of information, which harms overall performance. To improve the performance of these discrete speech tokens, we propose RepCodec, a novel speech representation codec for semantic speech discretization.

Framework of RepCodec
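To make "discretization" and its information loss concrete, here is a minimal sketch of nearest-neighbor vector quantization. It is an illustration only, not RepCodec itself: the 2-D frames are hypothetical stand-ins for speech-encoder features, the codebook is fixed rather than learned, and the function names are made up for this example.

```python
def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def quantize(frames, codebook):
    """Map each representation frame to the index of its nearest codebook entry."""
    return [
        min(range(len(codebook)), key=lambda k: sq_dist(frame, codebook[k]))
        for frame in frames
    ]


def reconstruction_error(frames, codebook, tokens):
    """Mean squared distance between each frame and the code vector replacing it."""
    total = sum(sq_dist(f, codebook[t]) for f, t in zip(frames, tokens))
    return total / len(frames)


# Toy 2-D "speech representations" (hypothetical values, not real encoder output).
frames = [(0.0, 0.1), (0.9, 1.0), (0.1, 0.0), (1.0, 1.1)]
# Fixed codebook for illustration; a real codebook would be learned from data.
codebook = [(0.0, 0.0), (1.0, 1.0)]

tokens = quantize(frames, codebook)
error = reconstruction_error(frames, codebook, tokens)
print("tokens:", tokens)
print("mean reconstruction error:", error)
```

The token sequence is what a language model would consume. The reconstruction error is a simple proxy for the information discarded by discretization: the smaller it is, the more of the original representation the tokens retain.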

Unlike audio codecs that reconstruct the original audio, RepCodec learns the VQ codebook by reconstructing the speech representation from a speech encoder such as HuBERT or data2vec. The speech encoder, codec encoder, and VQ codebook together form a pipeline that converts speech waveforms into semantic tokens. Extensive experiments show that RepCodec significantly outperforms the widely used k-means clustering method in speech understanding and generation, thanks to its better information retention. Furthermore, this advantage holds across a variety of speech encoders and languages, affirming the robustness of RepCodec. This approach can facilitate large language model research in speech processing.

DINOISER: Diffusion-based conditional sequence generation enhanced by noise manipulation

Paper address: https://arxiv.org/pdf/2302.10025

While diffusion models have achieved great success in generating continuous signals such as images and audio, learning discrete sequence data such as natural language remains difficult. Although a recent series of text diffusion models circumvent this challenge of discreteness by embedding discrete states into a continuous latent space, their generation quality is still unsatisfactory.

To understand this, we first analyze in depth the training process of diffusion-based sequence generation models and identify three serious problems: (1) failure to learn; (2) lack of scalability; (3) neglect of the conditional signal. We argue that these problems can be attributed to discreteness that is not fully eliminated in the embedding space, where the scale of the noise plays a decisive role.

In this work, we propose DINOISER, which enhances diffusion models for sequence generation by manipulating noise. During training, we adaptively determine the range of sampled noise scales in a manner inspired by optimal transport. During inference, we encourage the model to better exploit the conditional signal by amplifying the noise scale. Experiments show that, with these training and inference strategies, DINOISER outperforms previous diffusion-based sequence generation baselines on multiple conditional sequence modeling benchmarks. Further analysis also verifies that DINOISER makes better use of the conditional signal to control its generation process.
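The role of the noise scale can be illustrated with a toy experiment. The sketch below is not DINOISER's procedure: it is a hypothetical heuristic that measures how often a noised token embedding can still be decoded by nearest-neighbor lookup, then picks a lower bound on the training noise scale where that shortcut stops working. The embedding table, the grid, and the 0.9 threshold are all assumptions made for illustration, and the paper's optimal-transport-inspired rule is not reproduced here.

```python
import random


def nearest(vec, table):
    """Index of the embedding in `table` closest to `vec` (squared Euclidean)."""
    return min(
        range(len(table)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, table[i])),
    )


def recovery_rate(table, sigma, rng, trials=300):
    """Fraction of noised embeddings whose nearest neighbor is the original token."""
    hits = 0
    for _ in range(trials):
        tok = rng.randrange(len(table))
        noisy = [e + sigma * rng.gauss(0.0, 1.0) for e in table[tok]]
        hits += nearest(noisy, table) == tok
    return hits / trials


def min_noise_scale(table, grid, rng, threshold=0.9):
    """Smallest sigma on the grid where nearest-neighbor decoding is no longer
    near-trivial (hypothetical criterion, chosen for this illustration)."""
    for sigma in grid:
        if recovery_rate(table, sigma, rng) < threshold:
            return sigma
    return grid[-1]


def sample_training_sigma(rng, sigma_min, sigma_max):
    """Draw a training noise scale from the clipped range [sigma_min, sigma_max]."""
    return rng.uniform(sigma_min, sigma_max)


rng = random.Random(0)
# Random toy embedding table: 20 tokens, 8 dimensions (assumed sizes).
table = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(20)]
grid = [0.01, 0.1, 0.3, 1.0, 3.0]

sigma_min = min_noise_scale(table, grid, rng)
print("lower bound on noise scale:", sigma_min)
print("example training sigma:", sample_training_sigma(rng, sigma_min, 10.0))
```

At tiny noise scales the original token is always recoverable by lookup, so a denoiser trained there has little to learn. Excluding those scales from training is one simple way to picture what restricting the range of sampled noise scales means.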

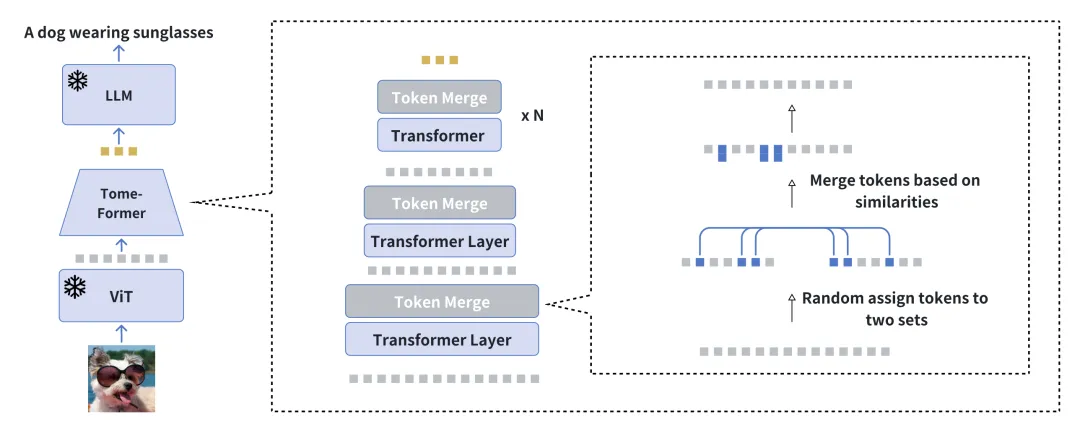

Accelerating the training of vision-conditioned language generation by reducing redundancy

Paper address: https://arxiv.org/pdf/2310.03291

We introduce EVLGen, a streamlined framework for pre-training computationally demanding vision-conditioned language generation models, leveraging frozen pre-trained large language models (LLMs).

Overview of the EVLGen

The conventional approach in vision-language pre-training (VLP) usually involves a two-stage optimization process: an initial resource-intensive stage dedicated to general-purpose vision-language representation learning, focused on extracting and integrating relevant visual features, followed by a second stage that emphasizes end-to-end alignment between the visual and language modalities. Our novel single-stage, single-loss framework bypasses the computationally demanding first stage by gradually merging similar visual tokens during training, while avoiding the model collapse caused by single-stage training of BLIP-2-type models. The gradual merging process effectively compresses visual information while retaining semantic richness, achieving fast convergence without affecting performance.

Experimental results show that our method speeds up the training of vision-language models by 5 times without significant impact on overall performance. Furthermore, our model significantly narrows the performance gap with current vision-language models while using only 1/10 of the data. Finally, we show how our image-text model can be seamlessly adapted to video-conditioned language generation tasks via a novel soft attentive temporal token contextualizing module.
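The idea of merging similar visual tokens can be sketched as follows. This is a simplified illustration under stated assumptions, not EVLGen's actual merging rule: it greedily averages the most cosine-similar pair until a target count is reached, and the function names and toy vectors are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def merge_once(tokens):
    """Replace the most similar pair of tokens with their element-wise average."""
    pairs = (
        (i, j) for i in range(len(tokens)) for j in range(i + 1, len(tokens))
    )
    i, j = max(pairs, key=lambda p: cosine(tokens[p[0]], tokens[p[1]]))
    merged = [(a + b) / 2 for a, b in zip(tokens[i], tokens[j])]
    kept = [t for k, t in enumerate(tokens) if k not in (i, j)]
    return kept + [merged]


def merge_to(tokens, target):
    """Merge repeatedly until only `target` tokens remain."""
    tokens = [list(t) for t in tokens]
    while len(tokens) > target:
        tokens = merge_once(tokens)
    return tokens


# Four toy "visual tokens" forming two near-duplicate pairs (hypothetical values).
visual_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]
compressed = merge_to(visual_tokens, target=2)
print(compressed)
```

Halving the number of visual tokens shortens the sequence the language model must attend to, which is where the compute saving in this kind of approach comes from. Averaging near-duplicates keeps most of their shared content.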

StreamVoice: Streamable context-aware language modeling for real-time zero-shot speech conversion

Paper address: https://arxiv.org/pdf/2401.11053

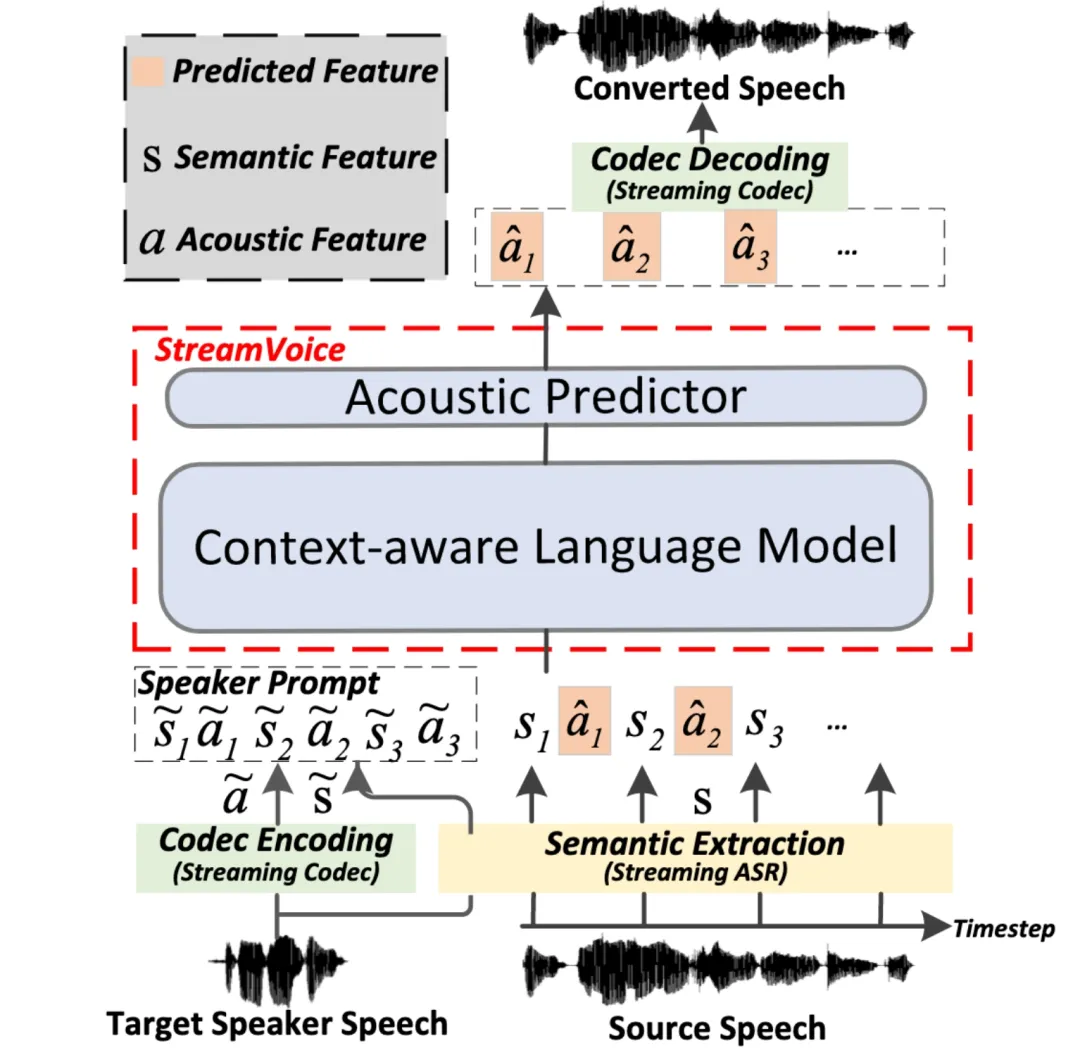

Streaming zero-shot voice conversion refers to the ability to convert input speech into the voice of any speaker in real time, using only a single utterance from the target speaker as a reference and without additional model updates. Existing zero-shot voice conversion methods are usually designed for offline systems and struggle to meet the streaming requirements of real-time voice conversion applications. Recent language model (LM) based methods have shown excellent performance in zero-shot speech generation (including conversion), but they require whole-utterance processing and are limited to offline scenarios.

The overall architecture for StreamVoice

In this work, we propose StreamVoice, a new streaming LM-based zero-shot voice conversion model that achieves real-time conversion for arbitrary speakers and input speech. Specifically, to enable streaming, StreamVoice uses a context-aware, fully causal LM together with a temporal-independent acoustic predictor, and alternately processes semantic and acoustic features at each step of the autoregressive process, which eliminates the dependence on the complete source speech.

To address the performance degradation caused by incomplete context in streaming scenarios, two strategies enhance the LM's awareness of future and historical context: 1) teacher-guided context foresight, in which a teacher model summarizes the current and future semantic context to guide the model in predicting the missing context; 2) a semantic masking strategy, which encourages the model to perform acoustic prediction from preceding corrupted semantic input and strengthens its ability to learn from historical context. Experiments show that StreamVoice achieves streaming conversion while maintaining zero-shot performance close to that of non-streaming VC systems.
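The alternating layout and the semantic masking strategy can be pictured with plain token lists. The sketch below is an illustration only: the token strings, the `<mask>` symbol, and the stand-in predictor are hypothetical, and no real language model or acoustic predictor is involved.

```python
import random

MASK = "<mask>"  # hypothetical placeholder for a corrupted semantic token


def interleave(semantic, acoustic):
    """Lay out tokens as s1, a1, s2, a2, ... so each step pairs both features."""
    sequence = []
    for s, a in zip(semantic, acoustic):
        sequence += [s, a]
    return sequence


def mask_semantic(sequence, ratio, rng):
    """Randomly corrupt semantic slots (even positions) of an interleaved sequence."""
    return [
        MASK if i % 2 == 0 and rng.random() < ratio else tok
        for i, tok in enumerate(sequence)
    ]


def stream_convert(semantic_stream, predict_acoustic):
    """Emit one acoustic token per incoming semantic token, using only the past."""
    history = []
    for s in semantic_stream:
        history.append(s)
        a = predict_acoustic(history)  # causal: sees history up to the current step
        history.append(a)
        yield a


def toy_predictor(history):
    """Stand-in for a model: copies the index of the latest semantic token."""
    return "a" + history[-1][1:]


layout = interleave(["s1", "s2", "s3"], ["a1", "a2", "a3"])
corrupted = mask_semantic(layout, ratio=0.5, rng=random.Random(0))
outputs = list(stream_convert(iter(["s1", "s2", "s3"]), toy_predictor))
print(layout)
print(corrupted)
print(outputs)
```

Because each acoustic token is produced as soon as its semantic token arrives, the loop never needs the complete source utterance. Masking only the semantic slots during training forces a model to rely on earlier context rather than on the current semantic token alone.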

G-DIG: Towards Gradient-based Diverse and High-quality Instruction Data Selection for Machine Translation

Paper address: https://arxiv.org/pdf/2405.12915

Large language models (LLMs) have demonstrated extraordinary capabilities in general scenarios. Instruction fine-tuning allows them to perform on par with humans in a variety of tasks. However, the diversity and quality of instruction data remain two major challenges for instruction fine-tuning. To this end, we propose a novel gradient-based approach that automatically selects high-quality and diverse instruction fine-tuning data for machine translation. Our key innovation lies in analyzing how individual training examples affect the model during training.

Overview of G-DIG

Specifically, we select training examples that have a beneficial impact on the model as high-quality examples with the help of the influence function and a small high-quality seed data set. Furthermore, to enhance the diversity of training data, we maximize the diversity of their influence on the model by clustering and resampling their gradients. Extensive experiments on WMT22 and FLORES translation tasks demonstrate the superiority of our method, and in-depth analysis further validates its effectiveness and generality.
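The two selection steps above can be sketched on a toy model. This is a simplified illustration, not the paper's implementation: the model is a one-feature linear regressor rather than an LLM, the influence function is replaced by a first-order stand-in (a gradient dot product with the Hessian taken as the identity), the clustering is a small deterministic k-means, and all names and data are hypothetical.

```python
def grad(params, example):
    """Gradient of 0.5 * (w*x + b - y)^2 with respect to (w, b)."""
    w, b = params
    x, y = example
    residual = w * x + b - y
    return (residual * x, residual)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mean_vec(vectors):
    n = len(vectors)
    return tuple(sum(v[i] for v in vectors) / n for i in range(len(vectors[0])))


def influence_scores(params, candidates, seed):
    """First-order stand-in for influence: alignment with the mean seed gradient.
    A positive score means a gradient step on the candidate lowers the seed loss."""
    seed_grad = mean_vec([grad(params, ex) for ex in seed])
    return [dot(grad(params, ex), seed_grad) for ex in candidates]


def kmeans(vectors, k, iters=10):
    """Tiny k-means with deterministic initialization (first k vectors)."""
    centers = list(vectors[:k])
    assign = [0] * len(vectors)
    for _ in range(iters):
        assign = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(v, centers[c])))
            for v in vectors
        ]
        for c in range(k):
            members = [v for v, a in zip(vectors, assign) if a == c]
            if members:
                centers[c] = mean_vec(members)
    return assign


def select(params, candidates, seed, k, budget):
    """Keep helpful candidates, cluster their gradients, sample round-robin."""
    scores = influence_scores(params, candidates, seed)
    good = [i for i, s in enumerate(scores) if s > 0]
    assign = kmeans([grad(params, candidates[i]) for i in good], k)
    clusters = {c: [i for i, a in zip(good, assign) if a == c] for c in range(k)}
    picked = []
    while len(picked) < budget and any(clusters.values()):
        for c in range(k):
            if clusters[c] and len(picked) < budget:
                picked.append(clusters[c].pop(0))
    return picked


params = (0.0, 0.0)
seed = [(1.0, 2.0), (2.0, 4.0)]  # small trusted set following y = 2x
candidates = [(1.0, 2.0), (1.1, 2.2), (3.0, 6.0), (3.1, 6.2), (1.0, -2.0), (2.0, -4.0)]

scores = influence_scores(params, candidates, seed)
chosen = select(params, candidates, seed, k=2, budget=2)
print(scores)
print(chosen)
```

In this toy run the two mislabeled candidates receive negative scores and are dropped, and the budget of two is spent on one example from each gradient cluster rather than on two near-duplicates.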

GroundingGPT: Language Enhanced Multi-modal Grounding Model

Paper address: https://arxiv.org/pdf/2401.06071

Multimodal large language models have demonstrated excellent performance on various tasks across different modalities. However, previous models mainly emphasize capturing global information from multimodal inputs. As a result, these models lack the ability to effectively understand the details in the input data and perform poorly on tasks that require fine-grained understanding. At the same time, most of these models suffer from serious hallucination problems, which limits their widespread use.

To solve this problem and extend the versatility of large multimodal models to a wider range of tasks, we propose GroundingGPT, a multimodal model that can understand images, videos, and audio at different levels of granularity. In addition to capturing global information, the proposed model also handles tasks that require finer understanding, such as pinpointing specific regions in an image or specific moments in a video. To achieve this, we designed a diversified dataset construction pipeline that produces a multimodal, multi-granularity training dataset. Experiments on multiple public benchmarks demonstrate the versatility and effectiveness of our model.

ReFT: Reasoning with Reinforced Fine-Tuning

Paper address: https://arxiv.org/pdf/2401.08967

A common way to strengthen the reasoning capability of large language models (LLMs) is supervised fine-tuning (SFT) on Chain-of-Thought (CoT) annotated data. However, this method does not generalize strongly enough, because training relies only on the given CoT data. Specifically, in datasets of math problems, the training data usually contains only one annotated reasoning path per problem. Intuitively, an algorithm that could learn from multiple reasoning paths for the same problem would generalize better.

Comparison between SFT and ReFT on the presence of CoT alternatives
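The contrast in the caption above can be illustrated with a small sketch: SFT imitates the single annotated path, while an answer-based reward of the kind ReFT uses also credits alternative paths that reach the ground-truth answer. The `answer:` output format, the 1/0 reward values, and the function names are assumptions made for this example, and the PPO update itself is omitted.

```python
import re


def extract_answer(cot):
    """Pull the final numeric answer from a reasoning path.
    Assumes a hypothetical 'answer: <number>' suffix convention."""
    match = re.search(r"answer:\s*(-?\d+(?:\.\d+)?)", cot.lower())
    return float(match.group(1)) if match else None


def terminal_reward(cot, gold):
    """Reward 1.0 if the extracted answer matches the ground truth, else 0.0."""
    pred = extract_answer(cot)
    return 1.0 if pred is not None and abs(pred - gold) < 1e-6 else 0.0


# Question: "(2 + 3) * 4 = ?" with ground-truth answer 20.
gold = 20.0
annotated = "2 + 3 = 5, then 5 * 4 = 20. answer: 20"
sampled_paths = [
    annotated,
    "4 * 2 = 8, 4 * 3 = 12, 8 + 12 = 20. answer: 20",  # different but correct path
    "3 * 4 = 12, 2 + 12 = 14. answer: 14",  # wrong order of operations
]

# SFT-style signal: only the annotated path counts as a target.
matches_annotation = [path == annotated for path in sampled_paths]
# Answer-based signal: any path reaching the correct answer is rewarded.
rewards = [terminal_reward(path, gold) for path in sampled_paths]

print(matches_annotation)
print(rewards)
```

The second path never appears in the annotations, yet it earns the same reward as the annotated one. This is how sampling can expose a model to multiple valid reasoning paths without any additional labeled data.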

To address this challenge, taking math problems as an example, we propose a simple and effective method called Reinforced Fine-Tuning (ReFT) to enhance the generalization ability of LLMs in reasoning. ReFT first warms up the model with SFT, then optimizes it with online reinforcement learning (specifically the PPO algorithm in this work), which automatically samples a large number of reasoning paths for a given problem and derives rewards from the ground-truth answers to further fine-tune the model. Extensive experiments on the GSM8K, MathQA, and SVAMP datasets show that ReFT significantly outperforms SFT, and that model performance can be further improved by combining strategies such as majority voting and reranking. Notably, ReFT relies only on the same training questions as SFT, with no additional or augmented training questions, which indicates superior generalization ability.

Looking forward to your interactive questions

Live broadcast time: August 20, 2024 (Tuesday), 19:00-21:00

Live broadcast platform: WeChat Channels account [Doubao Big Model Team], Xiaohongshu account [Doubao Researcher]

You are welcome to fill in the questionnaire and tell us which questions about the ACL 2024 papers interest you, then chat with the researchers online!

The Doubao model team is actively hiring. Click the link to learn more about team recruitment.

