Technology peripherals

Technology peripherals AI

AI Too complete! Apple launches new visual model 4M-21, capable of 21 modes

Too complete! Apple launches new visual model 4M-21, capable of 21 modesToo complete! Apple launches new visual model 4M-21, capable of 21 modes

Current multimodal and multitasking base models, such as **4M** or **UnifiedIO**, show promising results. However, their out-of-the-box ability to accept different inputs and perform different tasks is limited by the (usually small) number of modalities and tasks they are trained on.

, Based on this, researchers from the Ecole Polytechnique Fédérale de Lausanne (EPFL) and Apple jointly developed an **advanced** any-to-any modality single model that is **widely** diverse in dozens of Conduct training on various modalities, and perform collaborative training on large-scale multi-modal data sets and text corpora.

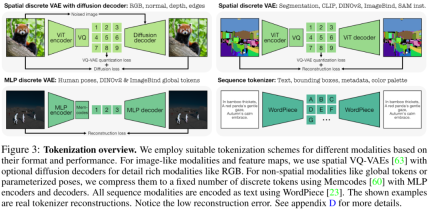

A key step in the training process is to perform discrete **tokenization** on various modalities, whether they are structured data such as image-like neural network **feature maps**, vectors, instance segmentation or human poses, or Data that can be represented as text.

Paper address: https://arxiv.org/pdf/2406.09406

Paper homepage https://4m.epfl.ch/

Paper title: 4M-21: An Any -to-Any Vision Model for Tens of Tasks and Modalities

This study shows that training a single model can also complete at least **three times** as many tasks/**modalities** as existing models, and does not Performance will be lost. In addition, this research also achieves finer-grained and more controllable multi-mode data generation capabilities.

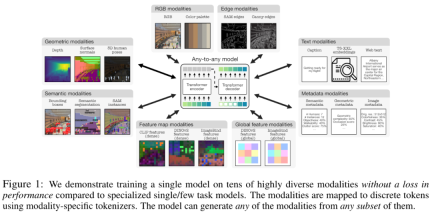

This research builds on the multi-modal mask pre-training scheme and improves model capabilities by training on dozens of highly diverse modalities. By encoding it using modality-specific discrete tokenizers, the study enables training a single unified model on different modalities.

Simply put, this research extends the capabilities of existing models in several key dimensions:

Modalities: from 7 modalities of the best existing any-to-any model to 21 different modalities , enabling cross-modal retrieval, controllable generation, and powerful out-of-the-box performance. This is the first time a single vision model can solve dozens of different tasks in an any-to-any manner without compromising performance and without any traditional multi-task learning.

Diversity: Add support for more structured data, such as human poses, SAM instances, metadata, and more.

tokenization: Study discrete tokenization of different modalities using modality-specific methods, such as global image embeddings, human poses, and semantic instances.

Extension: Expand model size to 3B parameters and dataset to 0.5B samples.

Collaborative training: collaborative training in vision and language at the same time.

Method Introduction

This study uses the 4M pre-training scheme (the study also came from EPFL and Apple and was released last year), which is proven to be a general method that can be effectively extended to multi-modality.

Specifically, this article keeps the architecture and multi-modal mask training goals unchanged, by expanding the size of the model and data sets, increasing the type and number of modalities involved in training the model, and jointly on multiple data sets Training can improve the performance and adaptability of the model.

Modalities are divided into the following categories: RGB, geometry, semantics, edge, feature map, metadata and text, as shown in the figure below.

Tokenization

Tokenization mainly includes converting different modalities and tasks into sequences or discrete tokens, thereby unifying their representation spaces. Researchers use different tokenization methods to discretize modes with different characteristics, as shown in Figure 3. In summary, this article uses three tokenizers, including ViT tokenizer, MLP tokenizer and text tokenizer.

In terms of architecture selection, this article adopts the 4M encoder-decoder architecture based on Transformer, and adds additional modal embeddings to adapt to new modalities.

Experimental results

Next, the paper demonstrates the multi-modal capabilities of 4M-21.

Multi-modal generation

Based on iterative decoding token, 4M-21 can be used to predict any training modality. As shown in Figure 2, this paper can generate all modalities in a consistent manner from a given input modality.

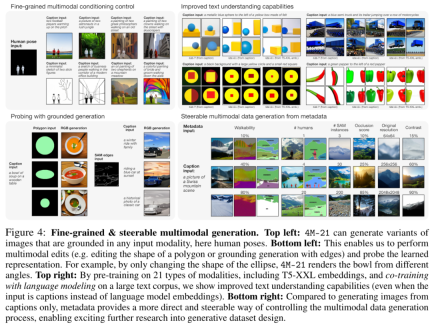

Furthermore, since this study can conditionally and unconditionally generate any training modality from any subset of other modalities, it supports several methods to perform fine-grained and multi-modal generation, as shown in Figure 4, For example, perform multimodal editing. Furthermore, 4M-21 demonstrates improved text understanding, both on T5-XXL embeddings and regular subtitles, enabling geometrically and semantically sound generation (Figure 4, top right).

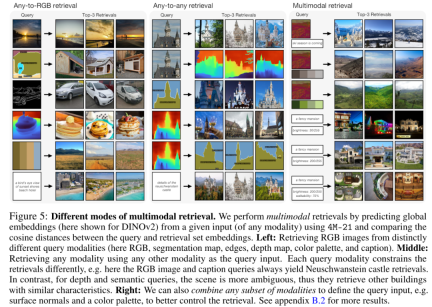

Multi-modal retrieval

As shown in Figure 5, 4M-21 unlocks retrieval capabilities that are not possible with the original DINOv2 and ImageBind models, such as retrieving RGB images or other modalities by using other modalities as queries . In addition, 4M-21 can combine multiple modalities to predict global embeddings for better control of retrieval, as shown in the image on the right.

Out of the box

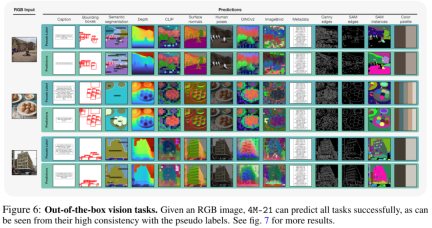

The 4M-21 is capable of performing a range of common vision tasks out of the box, as shown in Figure 6.

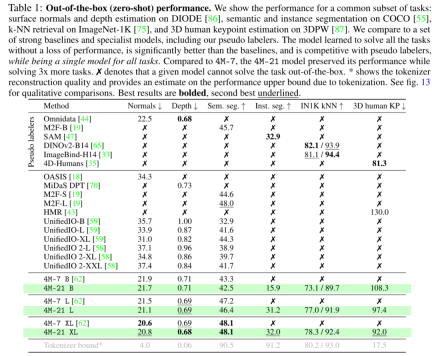

Table 1 evaluates DIODE surface normal and depth estimation, COCO semantic and instance segmentation, 3DPW 3D human pose estimation, etc.

Transfer experiment

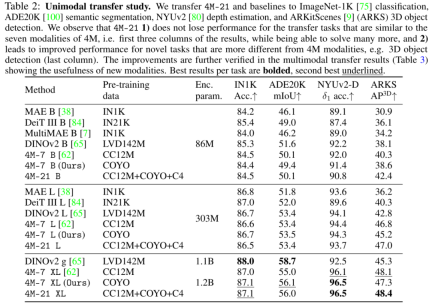

In addition, this article also trained models of three different sizes: B, L and XL. Their encoder is then transferred to downstream tasks and evaluated on single-modality (RGB) and multi-modality (RGB + depth) settings. All transfer experiments discard the decoder and instead train a task-specific head. The results are shown in Table 2:

Finally, this paper performs multi-modal transfer on NYUv2, Hypersim semantic segmentation and 3D object detection on ARKitScenes. As shown in Table 3, 4M-21 takes full advantage of the optional depth input and significantly improves the baseline.

The above is the detailed content of Too complete! Apple launches new visual model 4M-21, capable of 21 modes. For more information, please follow other related articles on the PHP Chinese website!

An easy-to-understand explanation of how to save conversation history (conversation log) in ChatGPT!May 16, 2025 am 05:41 AM

An easy-to-understand explanation of how to save conversation history (conversation log) in ChatGPT!May 16, 2025 am 05:41 AMVarious ways to efficiently save ChatGPT dialogue records Have you ever thought about saving a ChatGPT-generated conversation record? This article will introduce a variety of saving methods in detail, including official functions, Chrome extensions and screenshots, etc., to help you make full use of ChatGPT conversation records. Understand the characteristics and steps of various methods and choose the one that suits you best. [Introduction to the latest AI proxy "OpenAI Operator" released by OpenAI] (The link to OpenAI Operator should be inserted here) Table of contents Save conversation records using ChatGPT Export Steps to use the official export function Save ChatGPT logs using Chrome extension ChatGP

Create a schedule with ChatGPT! Explaining prompts that can be used to create and adjust tablesMay 16, 2025 am 05:40 AM

Create a schedule with ChatGPT! Explaining prompts that can be used to create and adjust tablesMay 16, 2025 am 05:40 AMModern society has a compact pace and efficient schedule management is crucial. Work, life, study and other tasks are intertwined, and prioritization and schedules are often a headache. Therefore, intelligent schedule management methods using AI technology have attracted much attention. In particular, ChatGPT's powerful natural language processing capabilities can automate tedious schedules and task management, significantly improving productivity. This article will explain in-depth how to use ChatGPT for schedule management. We will combine specific cases and steps to demonstrate how AI can improve daily life and work efficiency. In addition, we will discuss things to note when using ChatGPT to ensure safe and effective use of this technology. Experience ChatGPT now and get your schedule

How to connect ChatGPT with spreadsheets! A thorough explanation of what you can doMay 16, 2025 am 05:39 AM

How to connect ChatGPT with spreadsheets! A thorough explanation of what you can doMay 16, 2025 am 05:39 AMWe will explain how to link Google Sheets and ChatGPT to improve business efficiency. In this article, we will explain in detail how to use the add-on "GPT for Sheets and Docs" that is easy for beginners to use. No programming knowledge is required. Increased business efficiency through ChatGPT and spreadsheet integration This article will focus on how to connect ChatGPT with spreadsheets using add-ons. Add-ons allow you to easily integrate ChatGPT features into your spreadsheets. GPT for Shee

6 Investor Predictions For AI In 2025May 16, 2025 am 05:37 AM

6 Investor Predictions For AI In 2025May 16, 2025 am 05:37 AMThere are overarching trends and patterns that experts are highlighting as they forecast the next few years of the AI revolution. For instance, there's a significant demand for data, which we will discuss later. Additionally, the need for energy is d

Use ChatGPT for writing! A thorough explanation of tips and examples of prompts!May 16, 2025 am 05:36 AM

Use ChatGPT for writing! A thorough explanation of tips and examples of prompts!May 16, 2025 am 05:36 AMChatGPT is not just a text generation tool, it is a true partner that dramatically increases writers' creativity. By using ChatGPT for the entire writing process, such as initial manuscript creation, ideation ideas, and stylistic changes, you can simultaneously save time and improve quality. This article will explain in detail the specific ways to use ChatGPT at each stage, as well as tips for maximizing productivity and creativity. Additionally, we will examine the synergy that combines ChatGPT with grammar checking tools and SEO optimization tools. Through collaboration with AI, writers can create originality with free ideas

How to create graphs in ChatGPT! No plugins required, so it can be used for Excel too!May 16, 2025 am 05:35 AM

How to create graphs in ChatGPT! No plugins required, so it can be used for Excel too!May 16, 2025 am 05:35 AMData visualization using ChatGPT: From graph creation to data analysis Data visualization, which conveys complex information in an easy-to-understand manner, is essential in modern society. In recent years, due to the advancement of AI technology, graph creation using ChatGPT has attracted attention. In this article, we will explain how to create graphs using ChatGPT in an easy-to-understand manner even for beginners. We will introduce the differences between the free version and the paid version (ChatGPT Plus), specific creation steps, and how to display Japanese labels, along with practical examples. Creating graphs using ChatGPT: From basics to advanced use ChatG

Pushing The Limits Of Modern LLMs With A Dinner Plate?May 16, 2025 am 05:34 AM

Pushing The Limits Of Modern LLMs With A Dinner Plate?May 16, 2025 am 05:34 AMIn general, we know that AI is big, and getting bigger. It’s fast, and getting faster. Specifically, though, not everyone’s familiar with some of the newest hardware and software approaches in the industry, and how they promote better results. Peopl

Archive your ChatGPT conversation history! Explaining the steps to save and how to restore itMay 16, 2025 am 05:33 AM

Archive your ChatGPT conversation history! Explaining the steps to save and how to restore itMay 16, 2025 am 05:33 AMChatGPT Dialogue Record Management Guide: Efficiently organize and make full use of your treasure house of knowledge! ChatGPT dialogue records are a source of creativity and knowledge, but how can growing records be effectively managed? Is it time-consuming to find important information? don’t worry! This article will explain in detail how to effectively "archive" (save and manage) your ChatGPT conversation records. We will cover official archive functions, data export, shared links, and data utilization and considerations. Table of contents Detailed explanation of ChatGPT's "archive" function How to use ChatGPT archive function Save location and viewing method of ChatGPT archive records Cancel and delete methods for ChatGPT archive records Cancel archive Delete the archive Summarize Ch

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

SublimeText3 Chinese version

Chinese version, very easy to use

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool